Interference: a source of telecommunications problems

The growing number of connected objects is set to cause a concurrent increase in interference, a phenomenon which has remained an issue since the birth of telecommunications. In the past decade, more and more research has been undertaken in this area, leading us to revisit the way in which devices handle interference.

“Throughout the history of telecommunications, we have observed an increase in the quantities of information being exchanged,” states Laurent Clavier, telecommunications researcher at IMT Nord Europe. “This phenomenon can be explained by network densification in particular,” adds the researcher. The increase in the amount of data circulating is paired with a rise in interference, which represents a problem for network operations.

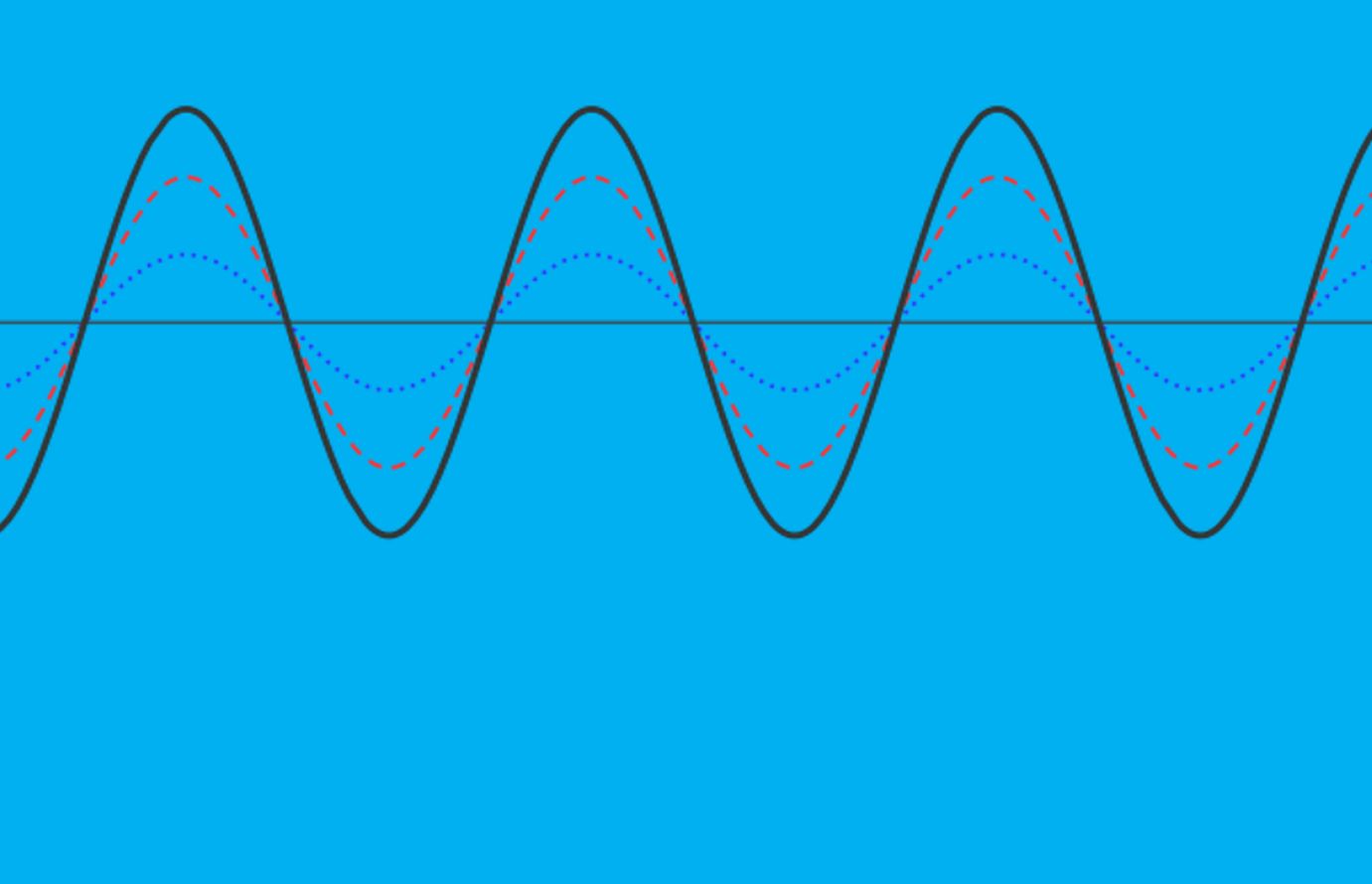

To understand what interference is, we first need to understand what a receiver is. In the field of telecommunications, a receiver is a device that converts a signal into usable information, for example an electromagnetic wave into a voice. Sometimes, undesired signals disrupt the functioning of a receiver and degrade communication between devices. This phenomenon is known as interference, and the undesired signal is called noise. It can cause voice distortion during a telephone call, for example.

Interference occurs when multiple machines use the same frequency band at the same time. To avoid interference, receivers choose which signals they pick up and which they drop. While telephone networks are organized to avoid two smartphones interfering with each other, this is not the case for the Internet of Things, where interference is becoming critical.


Different kinds of noise causing interference

With the boom in the number of connected devices, the amount of interference will increase and cause the network to deteriorate. By improving machine receivers, it appears possible to mitigate this damage. Most connected devices are equipped with receivers adapted for Gaussian noise. These receivers make the best decisions possible as long as the signal received is powerful enough.

By studying how interference occurs, scientists have understood that it does not follow a Gaussian model, but rather an impulsive one. “Generally, there are very few objects that function together at the same time as ours and near our receiver,” explains Clavier. “Distant devices generate weak interference, whereas closer devices generate strong interference: this is the phenomenon that characterizes impulsive interference,” he specifies.
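The distance effect described above can be illustrated with a small simulation. This is a minimal sketch under assumptions that are not from the article: interfering devices are placed uniformly at random in a disk around the receiver, each contributes an amplitude that decays with distance (the `path_loss_exp` value, the disk `radius`, the number of devices and the `min_dist` floor are all illustrative), and each contribution has a random sign. The excess kurtosis is used as a rough indicator of how "impulsive" the result is: it is close to 0 for Gaussian samples and very large when rare, strong values dominate.

```python
import math
import random


def aggregate_interference(rng, n_devices=20, radius=100.0,
                           path_loss_exp=4.0, min_dist=0.1):
    """Sum the contributions of devices placed uniformly in a disk.

    All parameter values are illustrative assumptions, not measured data.
    """
    total = 0.0
    for _ in range(n_devices):
        # Uniform position in a disk: the distance is radius * sqrt(U).
        distance = max(min_dist, radius * math.sqrt(rng.random()))
        # Amplitude decays with distance (power decays as distance**-path_loss_exp).
        amplitude = distance ** (-path_loss_exp / 2.0)
        total += amplitude * rng.choice((-1.0, 1.0))
    return total


def excess_kurtosis(samples):
    """Return the fourth standardized moment minus 3 (about 0 for Gaussian data)."""
    n = len(samples)
    mean = sum(samples) / n
    m2 = sum((x - mean) ** 2 for x in samples) / n
    m4 = sum((x - mean) ** 4 for x in samples) / n
    return m4 / (m2 * m2) - 3.0


rng = random.Random(0)  # fixed seed so the run is repeatable
interference = [aggregate_interference(rng) for _ in range(10000)]
gaussian = [rng.gauss(0.0, 1.0) for _ in range(10000)]

print("excess kurtosis, simulated interference:", excess_kurtosis(interference))
print("excess kurtosis, Gaussian reference:    ", excess_kurtosis(gaussian))
```

In this toy setting, most samples are tiny because most devices are far away, while the occasional nearby device produces a value that is orders of magnitude larger. That mix of many weak values and a few strong ones is what a Gaussian model fails to capture.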

Reception strategies implemented for Gaussian noise do not account for the presence of these strong noise values. They are therefore easily misled by impulsive noise, with receivers no longer able to recover the useful information. “By designing receivers capable of processing the different kinds of interference that occur in real life, the network will be more robust and able to host more devices,” adds the researcher.
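A toy example can show how a single strong noise value misleads a Gaussian-oriented decision rule. The sketch below assumes a very simple setup that is not taken from the article: one bit (+1 or -1) is sent five times, and the receiver decides from the five received samples. Summing the samples is the best rule when the noise is Gaussian; clipping each sample before summing is one common robust alternative, used here purely as an illustration. The noise values and the clipping `limit` are hypothetical.

```python
def clip(value, limit):
    """Limit a sample to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


def decide_linear(received):
    """Decision rule suited to Gaussian noise: sign of the plain sum."""
    return 1 if sum(received) >= 0 else -1


def decide_clipped(received, limit=2.0):
    """Robust variant: clip each sample so one impulse cannot dominate the sum."""
    return 1 if sum(clip(y, limit) for y in received) >= 0 else -1


sent_bit = 1
# Hypothetical noise: four weak values and one strong impulse.
noise = [0.1, -0.2, 0.05, -12.0, 0.1]
received = [sent_bit + n for n in noise]

print("linear decision: ", decide_linear(received))   # the impulse flips the sum
print("clipped decision:", decide_clipped(received))  # the impulse is bounded
```

With these values, the plain sum is dragged negative by the single impulse, so the linear rule recovers the wrong bit, while the clipped rule still recovers +1.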

Adaptable receivers

For a receiver to cope with both Gaussian and non-Gaussian noise, it needs to be able to identify its environment. If a device is trying to decode a signal while another nearby device is generating interference, it will use an impulsive model to deal with the interference and decode the useful signal properly. If it is in an environment in which the other devices are all relatively far away, it will analyze the interference with a Gaussian model.

To correctly decode a message, the receiver must adapt its decision-making rule to the context. To do so, Clavier indicates that a “receiver may be equipped with mechanisms that allow it to calculate the level of trust in the data it receives in a way that is adapted to the properties of the noise. It will therefore be capable of adapting to both Gaussian and impulsive noise.” With this method, which the researcher uses to design receivers, the machine does not need to know its environment in advance.
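One standard way to express a "level of trust" in a received sample is the log-likelihood ratio (LLR): a positive value favors a transmitted +1, a negative value favors -1, and the magnitude indicates confidence. The sketch below is not the researcher's design. It compares the LLR under Gaussian noise with the LLR under Cauchy noise, chosen here only as a convenient textbook example of an impulsive model. The scale parameters `sigma` and `gamma` and the sample values are assumptions.

```python
import math


def llr_gaussian(y, sigma=1.0):
    """LLR of +1 versus -1 under Gaussian noise: grows linearly with y."""
    return 2.0 * y / sigma ** 2


def llr_cauchy(y, gamma=1.0):
    """LLR of +1 versus -1 under Cauchy noise: shrinks again for very large |y|."""
    return math.log((gamma ** 2 + (y + 1.0) ** 2) /
                    (gamma ** 2 + (y - 1.0) ** 2))


# Hypothetical received samples for a +1 sent five times, with one strong impulse.
received = [1.1, 0.8, 1.05, -11.0, 1.1]

for y in received:
    print(f"y={y:6.2f}  gaussian={llr_gaussian(y):7.2f}  cauchy={llr_cauchy(y):6.2f}")

total_gaussian = sum(llr_gaussian(y) for y in received)
total_cauchy = sum(llr_cauchy(y) for y in received)
print("decision with Gaussian trust:", 1 if total_gaussian >= 0 else -1)
print("decision with Cauchy trust:  ", 1 if total_cauchy >= 0 else -1)
```

Under the Gaussian assumption, the outlier is treated as very strong evidence for -1 and outweighs the four good samples. Under the impulsive assumption, the same outlier receives little trust, so the combined decision stays with +1.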

Currently, industrial actors are not particularly concerned with the nature of interference. However, they are interested in the means available to avoid it. In other words, they do not see the usefulness of questioning the Gaussian model and undertaking research into the way in which interference is produced. For Clavier, this lack of interest will be temporary, and “in time, we will realize that we will need to use this kind of receiver in devices,” he notes. “From then on, engineers will probably start to include these devices more and more in the tools they develop,” the researcher hopes.

Rémy Fauvel