Covid-19 Epidemic: an early warning signal that we’ve reached the planet’s limits?

Natacha Gondran, Mines Saint-Étienne – Institut Mines-Télécom and Aurélien Boutaud, Mines Saint-Étienne – Institut Mines-Télécom

[divider style=”normal” top=”20″ bottom=”20″]

This article was published for the Fête de la Science (Science Festival, held from 2 to 12 October 2020 in mainland France and from 6 to 16 November in Corsica, overseas departments and internationally), in which The Conversation France is a partner. The theme for this year’s festival is “Planète Nature”. Read about all the events in your region at Fetedelascience.fr.

[divider style=”normal” top=”20″ bottom=”20″]

[dropcap]W[/dropcap]hen an athlete gets too close to the limits of his body, the body often reacts with an injury that forces him to rest. What athlete who has pushed past his limits has not been reined in by a strain, tendinitis, a broken bone or some other pain that forced him to take it easy?

In ecology, there is also evidence that ecosystems send signals when they reach such a degree of deterioration that they can no longer perform the regulatory functions that keep them in equilibrium. These are called early warning signals.

Several authors have linked the Covid-19 epidemic to the decline of biodiversity, urging us to see this epidemic as an early warning signal. Evidence of a link between today's emerging zoonoses and the decline of biodiversity has existed for a number of years, and evidence of a link between infectious diseases and climate change is now emerging.

These early warning signals serve as a reminder that the planet’s capacity to absorb the pollution and deterioration to which it is subjected by humanity is not unlimited. And, as is the case for an athlete, there are dangers in getting too close to these limits.

Planetary boundaries that must not be transgressed

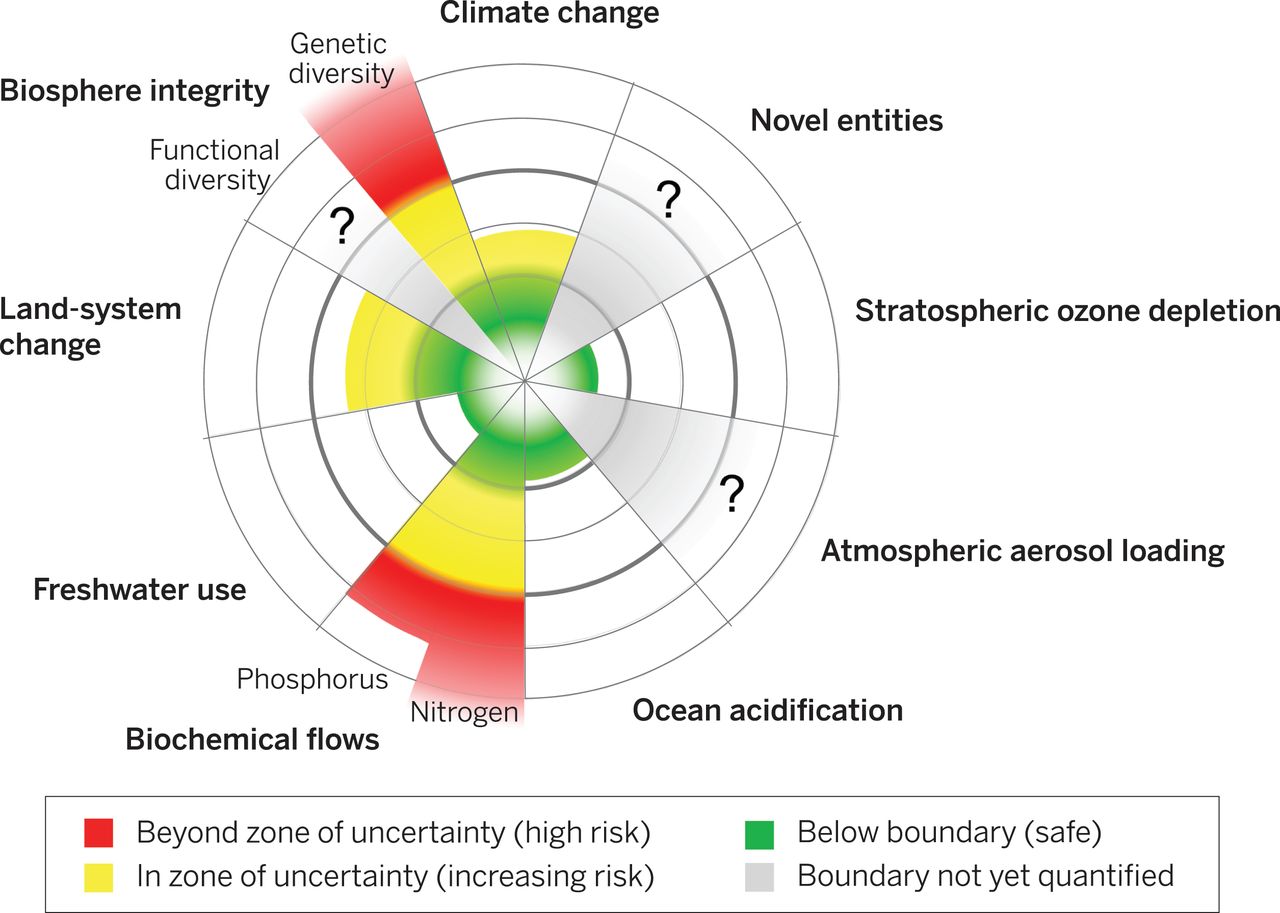

For over ten years, scientists from a wide range of disciplines and institutions have been working together to define a global framework for a Safe Operating Space (SOS), characterized by physical limits that humanity must respect or else risk seeing conditions on Earth become much less hospitable to human life. This framework has since been expanded and updated in several publications.

These authors highlight the holistic nature of the “Earth system”. For instance, changes in land use and water cycles make systems more sensitive to climate change. Changes in the three major global regulating systems (ozone layer degradation, climate change and ocean acidification) are well documented.

Other processes, which are slower and less visible, regulate the production of biomass and biodiversity, thereby contributing to the resilience of ecological systems: the biogeochemical cycles of nitrogen and phosphorus, the freshwater cycle, land use change, and the genetic and functional integrity of the biosphere. Lastly, the scientific community has not yet quantified the boundaries for two phenomena: air pollution from aerosols and the introduction of novel entities (chemical or biological, for example).

These biophysical sub-systems react in a nonlinear, sometimes abrupt way, and are particularly sensitive when certain thresholds are approached. The consequences of crossing these thresholds may be irreversible and, in some cases, could lead to massive environmental changes.

Several planetary boundaries have already been transgressed; others are on the brink

According to Steffen et al. (2015), planetary boundaries have already been overstepped in the areas of climate change, biodiversity loss, the biogeochemical cycles of nitrogen and phosphorus, and land use change. We are also getting dangerously close to the boundary for ocean acidification. As for the freshwater cycle, although Steffen et al. consider that the boundary has not yet been transgressed at the global level, the French Ministry for the Ecological and Inclusive Transition has reported that the threshold has already been crossed in France.

These transgressions cannot continue indefinitely without threatening the equilibrium of the Earth system, especially since these processes are closely interconnected. For example, overstepping the boundaries for ocean acidification and for the nitrogen and phosphorus cycles will ultimately limit the oceans’ ability to absorb atmospheric carbon dioxide. Likewise, the loss of natural land cover and deforestation reduce the capacity of forests to sequester carbon and thereby mitigate climate change. They also reduce the resilience of local systems to global changes.

Representation of the nine planetary boundaries (Steffen et al., 2015):

Taking quick action to avoid the risk of drastic changes to biophysical conditions

The biological resources we depend on are undergoing rapid and unpredictable transformations within just a few human generations. These transformations may lead to the collapse of ecosystems, food shortages and health crises that could be much worse than the one we are currently facing. The main factors underlying these planetary impacts have been clearly identified: the increase in resource consumption, the transformation and fragmentation of natural habitats, and energy consumption.

It is also well established that the richest countries bear primary responsibility for the ecological pressures that have brought us to the planetary boundaries, while the poorer countries of the Global South are the primary victims of the resulting degradation.

Treating the epidemic we are currently experiencing as an early warning signal should prompt us to take quick action to avoid transgressing planetary boundaries. The crisis we are facing has shown that strong policy decisions can be made in order to respect a limit: for example, the number of hospital beds available to treat the sick. Will we be able to do as much when it comes to planetary boundaries?

The 150 citizens of the Citizens’ Convention for Climate have proposed that we “change our law so that the judicial system can take account of planetary boundaries. […] The definition of planetary boundaries can be used to establish a framework for quantifying the climate impact of human activities.” This is an ambitious goal, and it is more necessary than ever.

[divider style=”dotted” top=”20″ bottom=”20″]

Aurélien Boutaud and Natacha Gondran are the authors of Les limites planétaires (Planetary Boundaries), published by La Découverte in May 2020.

Natacha Gondran is a research professor in environmental assessment at Mines Saint-Étienne – Institut Mines-Télécom, and Aurélien Boutaud holds a PhD in environmental science and engineering from Mines Saint-Étienne – Institut Mines-Télécom.

This article has been republished from The Conversation under a Creative Commons license. Read original article (in French).