How can industrial risk be assessed?

Safety is a key concern in the industrial sector, and studying risk is therefore a specialized field of research in its own right. Experiments in this area are particularly difficult to carry out, as they involve explosions and complex measurements. Frédéric Heymes, a researcher at IMT Mines Alès who specializes in industrial risk, discusses the unique aspects of this field of research and the new issues it must now consider.

What does research on industrial risk involve?

Frédéric Heymes: Risk is the likelihood that an event with serious, negative consequences will occur. Our research is organized into three levels of anticipation (understanding, preventing, protecting) and one operational level (helping manage accidents). We have to understand what can happen and do everything possible to prevent dangerous events from happening in real life. Since accidents remain inevitable, we also have to plan protective measures in advance to best protect people and resources in the aftermath of an accident. We must also be able to respond effectively: emergency services and the parties responsible for managing industrial disasters need simulation tools to help them make the right decisions. Risk research is cross-sectoral and can be applied to a wide range of industries (energy, chemicals, transport, pharmaceuticals, agri-food).

What’s a typical example of an industrial risk study?

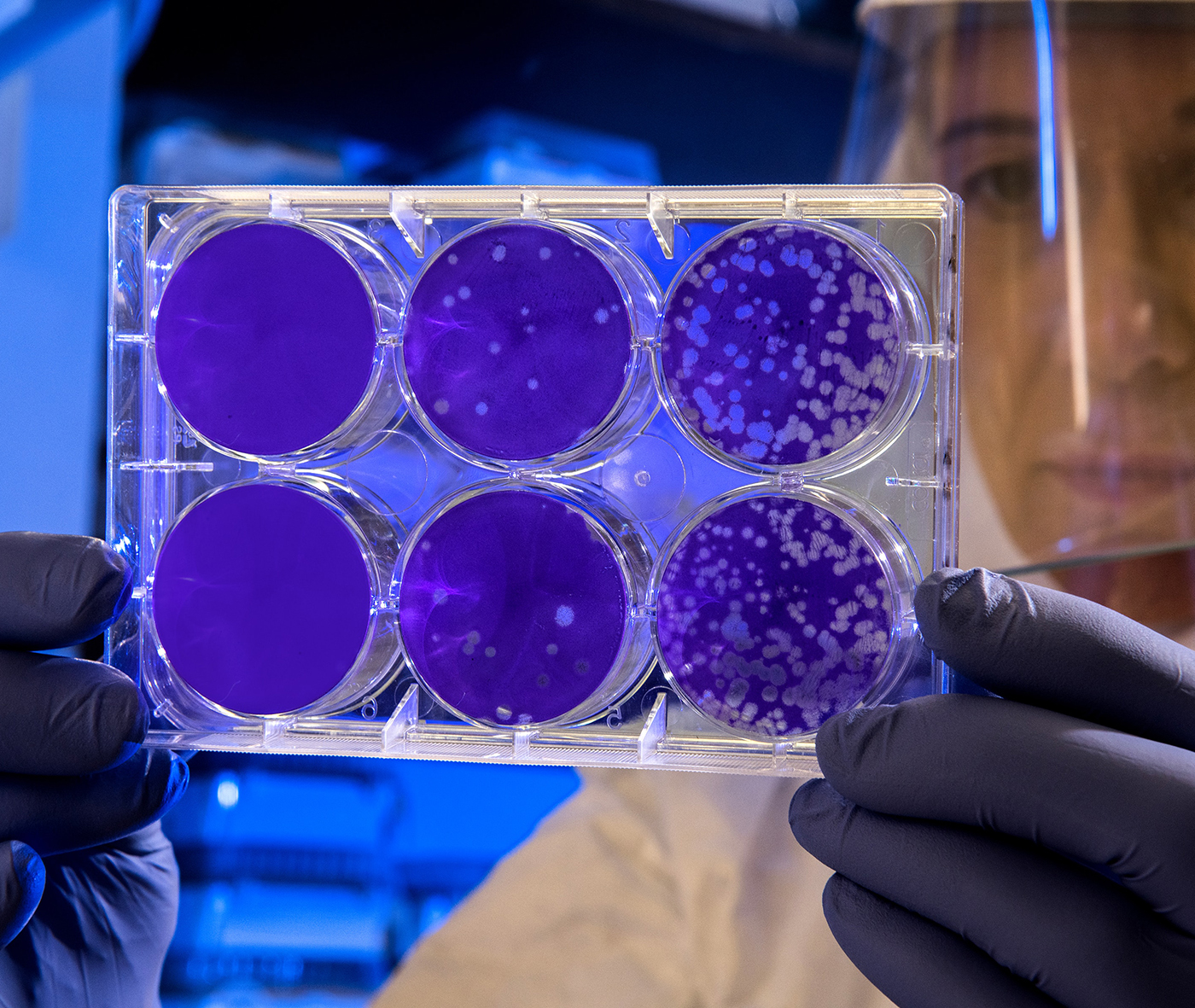

FH: Although my research addresses a wide variety of themes, it is primarily connected to explosive risk. That means understanding the phenomenon and why it occurs, in order to make sure it won’t happen again. A special feature of our laboratory is that we can carry out field tests of dangerous phenomena that can’t be reproduced in a laboratory setting.

What does an experiment on explosive risk look like?

FH: We partnered with Total to carry out an especially impressive experiment that had never been done anywhere in the world before. It was a study on the explosion of superheated water, under very high pressure and at a very high temperature. It was potentially dangerous, since such an explosion releases a very large amount of energy. It was important for Total to understand what happens in the event of such an explosion and what consequences to expect. Carrying out the experiment was a real team effort and called for a great deal of logistical planning. Right away, it was different from working in a lab. Between 5 and 8 people were involved in each test, and everyone had their own specific role and specialty: data acquisition, control, high-speed cameras, logistics, handling. We needed a prototype weighing about a ton, which we had a boilermaker build. That alone was no simple task. Boilermakers are responsible for producing compliant equipment that is known to be reliable, but for our research, we knew that the prototype would explode. So we had to reassure the manufacturer about liability.

How do you set up such an explosion?

FH: We need a special testing ground to carry out the experiment, and to get permission to use it, we have to prove that the test is perfectly controlled. For these tests, we collaborated with the Camp des Garrigues, a military range located north of Nîmes. The test area is secure but completely empty, so it took a lot of preparation and set-up. Firefighters were also on site with our team. And a great deal of work went into the sensors to obtain precise measurements, because the explosion lasts less than a second. It’s a very short test. Most of the time, we only have access to the field for a relatively short period, which means we carry out the tests one after another, non-stop. We’re also under a lot of stress: we know that the slightest error could have dramatic consequences.

What happens after this study?

FH: The aim of this research was to study the consequences of such an explosion on the immediate environment. This gives us an in-depth understanding of the event so that those involved can take appropriate action. We obtain information about the explosion, the damage it causes and the size of the affected area. We also observe whether it produces a shock wave or ejects projectiles, and if so, we study their impact.

Has there ever been a time when you were unable to carry out tests you needed for your research?

FH: Yes, that was the case for a study on the risk of propane tank explosions during wildfires. Ideally, we would have set a real wildfire under controlled conditions and exposed propane tanks to it. But we’re not allowed to do that, and it’s extremely dangerous. It’s a real headache. So we had to divide the project into two parts and study each part separately. That way, we obtain results that we can link using modeling. On one hand, there is the wildfire, with a huge number of variables that must be taken into account: wind strength and direction, slope, plant species, etc. On the other hand, we study fluid mechanics and thermodynamics to understand what happens inside propane tanks.

What results did you achieve through this study?

FH: We concluded that gas tanks are unlikely to explode if brush-clearing regulations are observed. In residential areas near forests, there are maintenance regulations, in particular for brush clearing. But if these rules are not followed, safety is undermined. We therefore proposed a protective component with good thermal properties and flame resistance to protect tanks in scenarios where the regulations are not complied with.

What are some current issues surrounding industrial risk?

FH: Research in the field of industrial risk really took off in the 1970s. A number of industrial accidents underscored the need to anticipate risks, which led to extensive research to prevent and protect against them more effectively. But today, all energy sectors are undergoing changes and there are new risks to consider. New sectors are emerging and raising new issues, as is the case with hydrogen, for example. Hydrogen is a very attractive energy source since its use produces only water and no carbon dioxide. But it is a dangerous compound, since it’s highly flammable and explosive. The question is how best to organize hydrogen supply chains (production, transport, storage, use). How can hydrogen best be deployed across a region while minimizing risks? It’s a question that warrants further investigation. A cross-disciplinary research project on this topic with other IMT partners is in the startup phase, as part of Carnot HyTrend.

Read more on I’MTech: What is Hydrogen Energy?

So does that mean the energy and environmental transitions come with their own set of new risks to be studied?

FH: Yes, that’s right, and global warming is another current field of research. To go back to wildfires, they’re becoming more common, which raises concerns. How can we deal with the growing number of fires? One solution is to consider passive self-protection scenarios, meaning reducing vulnerability to risks through technological improvements, for example. The energy transition is bringing new technologies, along with new uses. As I said earlier, hydrogen is a dangerous chemical compound, and we’ve known that for a long time. However, its operational use to support the energy transition raises a number of new questions.

How can we deal with these new risks?

FH: The notion of new industrial risk is clearly linked to social and technological change, and change brings new risks. Yet these risks are hard to anticipate, because the changes themselves are hard to anticipate in the first place. At the same time, these changes provide us with new tools, such as artificial intelligence. We can now process large amounts of data and quickly extract useful, relevant results to recognize an abnormal, potentially dangerous situation. Artificial intelligence also helps us overcome a number of technological hurdles. For example, we’re working with Mines ParisTech on using artificial intelligence methods to predict the hydrodynamic behavior of gas leaks with unprecedented computing speed and accuracy.

How is research with industrial players organized on this topic?

FH: Research can grow out of partnerships with research organizations, such as the IRSN (French Institute for Radiological Protection and Nuclear Safety). During the decommissioning of a nuclear power plant, even though there’s no longer any fissile material, residual metal dust could potentially ignite. So we have to understand what may happen in order to take the right safety measures. But for the most part, I collaborate directly with industrial companies. In France, they’re responsible for managing the risks inherent in their operations. So there’s a certain regulatory pressure to improve on these issues, and that sometimes raises research questions. But most of the time, investments are driven not by regulatory requirements, but by a profound commitment to reducing risks.

What’s quite unique about this field of research is that we have complete freedom to study the topic and complete freedom to publish. That’s really specific to the field of risk. In general, results are shared easily and often published so that “the competition” can also benefit from the findings. It’s also quite common for several companies in the same industry to team up to fund a study, since they all stand to benefit from it.