Effective communication for the environments of the future

Optimizing communication is essential to preparing for the uses of tomorrow, from new modes of transport to the industries of the future. Reliable communication is a prerequisite for delivering high-quality services. Researchers from EURECOM and the Technical University of Munich are working together to tackle this issue, developing new technology aimed at improving network security and performance.

In some wireless communication scenarios, particularly essential public safety services or the management of vehicular networks, one question is vital: what is the most effective way of conveying the same information to a large number of people? The tedious solution would be to repeat the same message to each recipient individually, using a dedicated channel each time. A much quicker way is known as multicast. This is what we use when sending an email to several people at once, and it is what happens when a news anchor reads us the news. The sender provides the information only once, and it is then duplicated and sent through communication channels capable of reaching all recipients.

In addition to TV news broadcasts, multicast is particularly useful for the networks of machines and connected objects expected to follow the arrival of 5G and its future applications. Vehicle networks are one example. “In a scenario where cars are all connected to one another, there is a whole bunch of useful information that could be shared with them using multicast technology”, explains David Gesbert, head of the Communication Systems department at EURECOM. “This could be traffic information, notifications about accidents, weather updates, etc.” The issue here is that, unlike TV sets, which do not move about while we are watching the news, cars are mobile.

Because recipients are mobile, reception conditions are not always optimal. When we drive through a tunnel, pass behind a large apartment block or take our car out of the garage, it is difficult for communication to reach our car. Despite these constraints, which affect several drivers at the same time, messages must still get through for the information service to operate effectively. “The transmission speed of the multicast has to be slowed down in order for it to be able to function with the car located in the worst reception scenario”, explains David Gesbert. In other words, the data rate must be lowered, or more transmit power used, for all users of the network. Just three cars going through a tunnel would be enough to slow down the rate at which potentially thousands of cars receive a message.
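To make the “worst car” effect concrete, here is a minimal Python sketch. It assumes an idealized link whose achievable rate per hertz of bandwidth follows the Shannon formula log2(1 + SNR), and it uses made-up signal-to-noise ratios (20 dB on open roads, -10 dB in a tunnel). The function names are illustrative, and this is not the model used by the EURECOM and TUM researchers. Because a single multicast stream must be decodable by every receiver, its rate is set by the lowest SNR among them.

```python
import math
from typing import Sequence

def db_to_linear(snr_db: float) -> float:
    """Convert an SNR from decibels to a linear power ratio."""
    return 10.0 ** (snr_db / 10.0)

def shannon_rate(snr_db: float) -> float:
    """Idealized achievable rate in bit/s per Hz for a given SNR (Shannon formula)."""
    return math.log2(1.0 + db_to_linear(snr_db))

def multicast_rate(snrs_db: Sequence[float]) -> float:
    """A single common-rate multicast must be decodable by every receiver,
    so its rate is capped by the receiver with the worst SNR."""
    return shannon_rate(min(snrs_db))

# Made-up scenario: 997 cars on open roads (20 dB) and 3 cars in a tunnel (-10 dB).
open_road = [20.0] * 997
tunnel = [-10.0] * 3

print(f"{multicast_rate(open_road):.2f}")           # prints 6.66 (bit/s/Hz)
print(f"{multicast_rate(open_road + tunnel):.3f}")  # prints 0.138 (bit/s/Hz)
```

In this toy setting, three cars in a tunnel cut the common rate for everyone by a factor of about 48. Raising transmit power, the other option mentioned above, amounts to lifting that minimum SNR instead, at a cost paid for all users.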

Communication through cooperation

For networks with thousands of users, it is simply not feasible to constrain transmission in this way. To tackle this problem, David Gesbert and his team entered into a partnership with the Technical University of Munich (TUM) within the framework of the German-French Academy for the Industry of the Future. The French and German researchers set themselves the task of devising a multicast solution that would not be constrained by this “worst car” problem. “Our idea was as follows: we restrict ourselves to a small percentage of reception terminals which receive the message, but in order to offset that, we ensure that these same users are able to retransmit the message to their neighbors”, he explains. In other words, your car in the garage might not receive the message from the nearest antenna, but the car out on the street 30 feet in front of your house will, and it can then pass the message on efficiently over a short distance.

Researchers from EURECOM and TUM were thus able to develop an algorithm capable of identifying the most suitable vehicles to target. The message is first transmitted to everyone. Depending on whether or not reception is successful, the best candidates are selected to pass on the rest of the information. Transmission to these vehicles is then optimized using MIMO (multiple-input multiple-output) techniques, which exploit multipath propagation. These vehicles are then tasked with retransmitting the message to their neighbors through vehicle-to-vehicle communication. Tests carried out on these algorithms indicate a drop in network congestion in certain situations. “The algorithm doesn’t add much out in the country, where conditions tend mostly to be good for everyone”, notes David Gesbert. “In towns and cities, on the other hand, the number of users in poor reception conditions is a handicap for conventional multicasts, and it is here that the algorithm really helps boost network performance”.
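The two-phase idea can be illustrated with a simplified Python sketch. It is a hypothetical greedy heuristic, not the published EURECOM/TUM algorithm: it assumes that a vehicle decodes the first-phase multicast whenever its SNR meets a fixed threshold, that vehicle-to-vehicle links work within a fixed range, and it leaves out the MIMO optimization step entirely. Among the vehicles that received the message, it repeatedly picks the one whose short-range neighborhood contains the most vehicles still waiting for it. All names, positions and values are made up for illustration.

```python
import math
from typing import Dict, List, Set, Tuple

Position = Tuple[float, float]  # (x, y) in meters

def phase_one(snr_db: Dict[str, float], threshold_db: float) -> Set[str]:
    """Phase 1 (assumed model): a vehicle decodes the multicast if its SNR
    meets the threshold."""
    return {v for v, s in snr_db.items() if s >= threshold_db}

def reachable(relay: str, targets: Set[str],
              positions: Dict[str, Position], d2d_range: float) -> Set[str]:
    """Vehicles in `targets` within vehicle-to-vehicle range of `relay`."""
    return {v for v in targets
            if math.dist(positions[relay], positions[v]) <= d2d_range}

def select_relays(positions: Dict[str, Position], received: Set[str],
                  d2d_range: float) -> Dict[str, List[str]]:
    """Hypothetical greedy relay selection for phase 2.
    Returns a mapping relay -> vehicles it forwards the message to."""
    uncovered = set(positions) - received
    candidates = set(received)
    plan: Dict[str, List[str]] = {}
    while uncovered and candidates:
        # Pick the candidate covering the most uncovered vehicles
        # (ties broken alphabetically so the result is deterministic).
        best = max(sorted(candidates),
                   key=lambda r: len(reachable(r, uncovered, positions, d2d_range)))
        gain = reachable(best, uncovered, positions, d2d_range)
        if not gain:
            break  # no remaining candidate can reach anyone still uncovered
        plan[best] = sorted(gain)
        uncovered -= gain
        candidates.discard(best)
    return plan

# Illustrative scenario with made-up positions and SNRs.
positions = {"A": (0, 0), "B": (10, 0), "C": (12, 0),
             "D": (100, 0), "E": (105, 0), "F": (200, 0)}
snr_db = {"A": 15, "B": -5, "C": -8, "D": 12, "E": -6, "F": -9}

received = phase_one(snr_db, threshold_db=0.0)
plan = select_relays(positions, received, d2d_range=20.0)
print(plan)  # {'A': ['B', 'C'], 'D': ['E']}
unreached = set(positions) - received - {v for vs in plan.values() for v in vs}
print(unreached)  # {'F'}
```

Greedy selection is a common heuristic for this kind of coverage problem. In this sketch, a vehicle that no relay can reach (F in the example) would still have to be served directly by the antenna.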

The scope of these results extends beyond car networks, however. Another scenario in which the algorithm could be used is the storage of popular content, such as videos or music. “Some content is used by a large number of users. Rather than fetching it from the core network each time a request is made, it could be stored directly on users’ mobile terminals”, explains David Gesbert. In this scenario, our smartphones would no longer need to communicate with the operator’s antenna to download a video; instead, they would obtain it from a nearby smartphone with better reception that has already downloaded the content.

More reliable communication for the uses of the future

The work carried out by EURECOM and TUM on multicast technology is part of a broader project, SeCIF (Secure Communications for the Industry of the Future). The industrial sectors set to benefit from the rise in communication between objects all need reliable communication. Adding machine-to-machine communication to multicast is just one of the avenues explored by the researchers. “At the same time, we have also been taking a closer look at what impact machine learning could have on the effectiveness of communication”, stresses David Gesbert.

Machine learning is breaking through into communication science, providing researchers with solutions to design problems for wireless networks. “Wireless networks have become highly heterogeneous”, explains the researcher. “It is no longer possible for us to optimize them manually because we have lost the intuition in all of this complexity”. Machine learning can analyze complex systems and extract value from them, helping to answer questions that are too complex to work out by hand.

For example, the French and German researchers are looking at how 5G networks can optimize themselves autonomously based on network usage data. To do this, data on the quality of the radio channel has to be fed back from the user terminal to the decision center. This feedback takes up bandwidth, with negative repercussions for call quality and for data transmission over the Internet, for example. As a result, the quantity of information fed back has to be limited. “Machine learning enables us to study a wide range of network usage scenarios and to identify the most relevant data to feed back using as little bandwidth as possible”, explains David Gesbert. Without machine learning, “there is no mathematical method capable of tackling such a complex optimization problem”.

The work carried out within the German-French Academy will be vital in preparing for the uses of the future. Our cars, towns, homes and even workplaces will host a growing number of connected objects, some of which will be mobile and autonomous. Effective communication is a prerequisite for the new services these objects provide to operate reliably.

The research work by EURECOM and TUM on multicasting mentioned in this article was published at the International Conference on Communications (ICC), where it received the Best Paper Award in the Wireless Communications category, a highly competitive award in this scientific field.

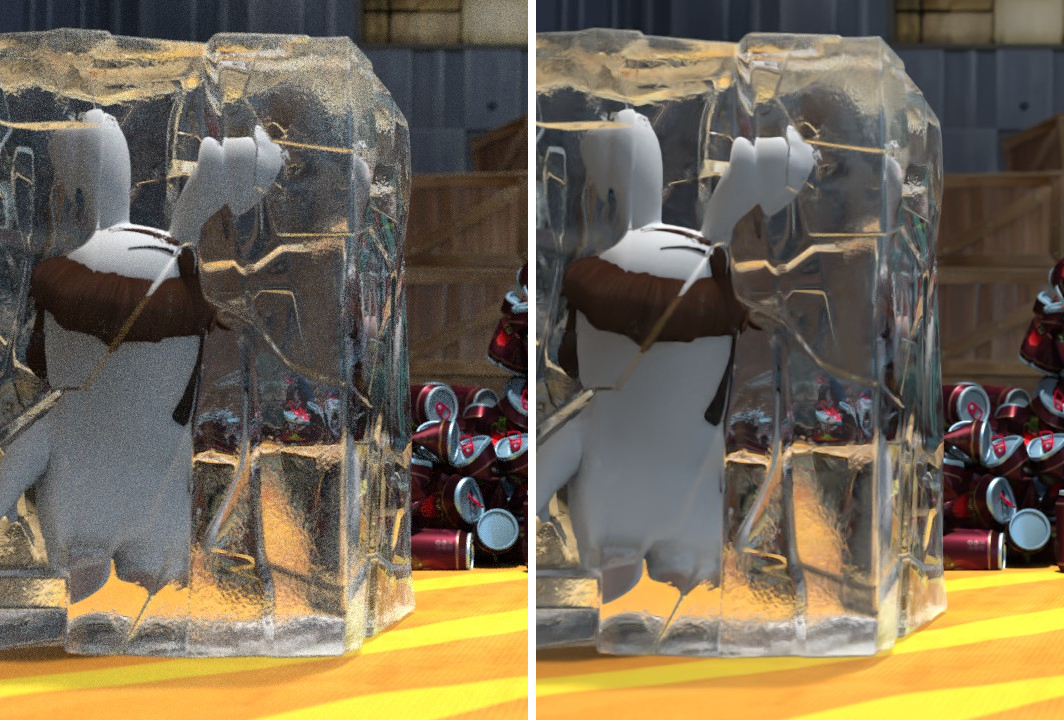

Illustration of BCD denoising a scene, before and after implementing the algorithm