Unéole on our roofs

We know how to use wind to produce electricity, but large three-bladed turbines have no place in urban environments. The start-up Unéole has therefore developed a wind turbine that is suitable for cities, as well as other environments. It also offers a customized assessment of the most efficient energy mix. Clovis Marchetti, a research engineer at Unéole, explains the innovation developed by the start-up, which was incubated at IMT Lille Douai.

The idea for the start-up Unéole came from a trip to French Polynesia, islands that are cut off from the continent, meaning that they must be self-sufficient in terms of energy. Driven by a desire to develop renewable energies, Quentin Dubrulle focused on the fact that such energy sources are scarce in urban areas. Wind, in particular, is an untapped energy source in cities. “Traditional, three-bladed wind turbines are not suitable,” says Clovis Marchetti, a research engineer at Unéole. “They’re too big, make too much noise and are unable to capture the swirling winds created by the corridors between buildings.”

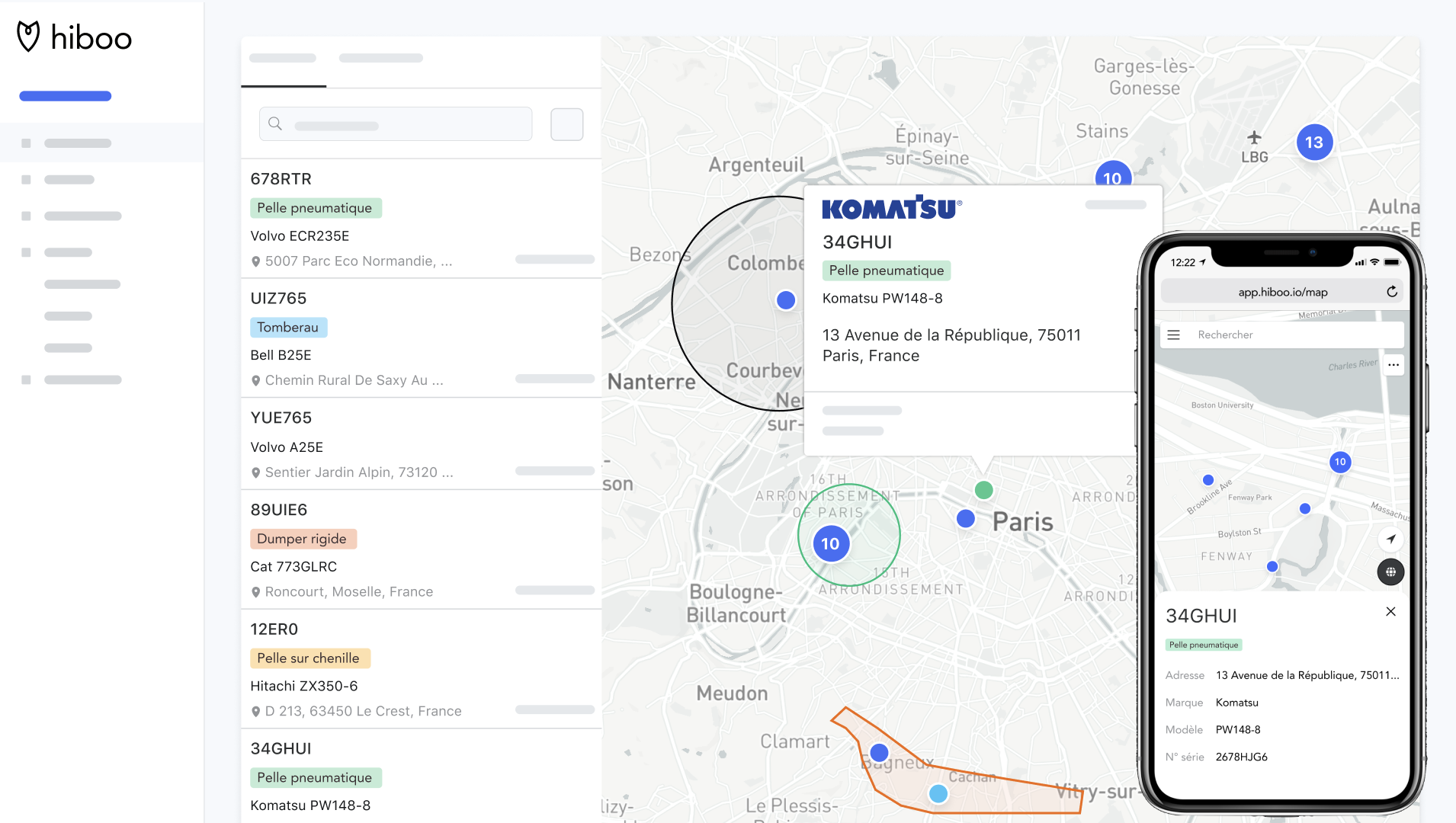

Supported by engineers and researchers, Quentin Dubrulle put together a team to study the subject. Then, in July 2014, he founded Unéole, which was incubated at IMT Lille Douai. Today the start-up offers an urban turbine measuring just under 4 meters high and 2 meters wide that can produce up to 1,500 kWh per year. It is easy to install on flat roofs and designed to be used in cities, since it captures the swirling winds found in urban environments.

Producing energy with a low carbon footprint is a core priority for the project. This can be seen in the choice of materials and method of production. The parts are cut by laser, a technology that is well understood and widely used by many industries around the world. So if these wind turbines have to be installed on another continent, the parts can be cut and assembled on location.

Another important aspect is the use of eco-friendly materials. “This is usually a second step,” says Clovis Marchetti, “but it was a priority for Unéole from the very beginning.” The entire skeleton of the turbine is built with recyclable materials. “We use aluminum and recycled and recyclable stainless steel,” he says. “For the electronics, it’s obviously a little harder.”

Portrait of an urban wind turbine

The wind turbine has a cylindrical shape and is built in three similar levels with slightly curved blades that are able to trap the wind. These blades are offset by 60° from one level to the next. “This improves performance since the production is more uniform throughout the turbine’s rotation,” says Clovis Marchetti. Another advantage of this architecture is that it makes the turbine easy to start: no matter what direction the wind comes from, a part of the wind turbine will be sensitive to it, making it possible to induce movement.
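To see why staggering the levels evens out production, here is a minimal toy sketch. It assumes, purely for illustration, that the torque of one level follows an |sin| curve that repeats every 180° of rotation; this curve, and the names `level_torque`, `total_torque` and `ripple`, are hypothetical and do not come from Unéole. Only the three levels and the 60° offset are taken from the description above.

```python
import math

# Three levels offset by 60 degrees from one level to the next (from the article).
LEVEL_OFFSETS_DEG = (0, 60, 120)


def level_torque(angle_deg):
    """Toy assumption: one level's torque follows |sin|, repeating every 180 degrees."""
    return abs(math.sin(math.radians(angle_deg)))


def total_torque(angle_deg, offsets_deg):
    """Sum the toy torque of every level at a given rotor angle."""
    return sum(level_torque(angle_deg + offset) for offset in offsets_deg)


def ripple(offsets_deg):
    """Difference between the highest and lowest total torque over one full turn."""
    values = [total_torque(angle, offsets_deg) for angle in range(360)]
    return max(values) - min(values)


if __name__ == "__main__":
    print(f"ripple with aligned levels:   {ripple((0, 0, 0)):.2f}")
    print(f"ripple with staggered levels: {ripple(LEVEL_OFFSETS_DEG):.2f}")
```

In this toy model the staggered arrangement never drops to zero torque, which is also consistent with the easy start-up described above: at any rotor angle, at least one level is well placed to catch the wind.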

To understand how a wind turbine works, two concepts of aerodynamics are important: lift and drag. In the former, a pressure difference diverts the flow of air and therefore exerts a force. “It’s what makes planes fly for example,” explains Clovis Marchetti. In the latter, the wind blows on a surface and pushes it. “Our wind turbine works primarily with drag, but lift effects also come into play,” he adds. “Since the wind turbine is directly pushed by the wind, its rotational speed will always be roughly equal to the wind speed.”

And that plays a significant role in terms of the noise produced by the wind turbine. Traditional three-bladed turbines turn faster than the wind due to lift. They therefore slice through the wind and produce a swishing noise. “Drag doesn’t create this problem since the wind turbine vibrates very little and doesn’t make any noise,” he says.
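The difference in speed can be put into numbers with the tip-speed ratio, the blade-tip speed divided by the wind speed. The sketch below is illustrative only: it assumes a rotor radius of 1 m (taking the 2-meter width as the rotor diameter), a tip-speed ratio of about 1 for the drag-driven turbine, as in the quote above, and a ratio of 7 as a typical order of magnitude for a three-bladed turbine. None of these figures are Unéole specifications, and the function names are hypothetical.

```python
import math


def tip_speed_ms(wind_speed_ms, tip_speed_ratio):
    """Blade-tip speed in m/s for a given wind speed and tip-speed ratio."""
    return tip_speed_ratio * wind_speed_ms


def rotor_rpm(wind_speed_ms, rotor_radius_m, tip_speed_ratio):
    """Rotational speed in revolutions per minute."""
    omega_rad_s = tip_speed_ms(wind_speed_ms, tip_speed_ratio) / rotor_radius_m
    return omega_rad_s * 60 / (2 * math.pi)


if __name__ == "__main__":
    wind = 5.0  # m/s, an arbitrary example wind speed
    # Assumed values: ratio ~1 for a drag-driven rotor, ~7 for a three-bladed one.
    print(f"drag-driven tip speed:  {tip_speed_ms(wind, 1.0):.1f} m/s")
    print(f"three-bladed tip speed: {tip_speed_ms(wind, 7.0):.1f} m/s")
    print(f"drag-driven rotor, 1 m radius: {rotor_rpm(wind, 1.0, 1.0):.0f} rpm")
```

Under these assumptions the blade tips of the drag-driven rotor move no faster than the wind itself, while the tips of a lift-driven rotor move several times faster, which is where the swishing comes from.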

An optimal energy mix

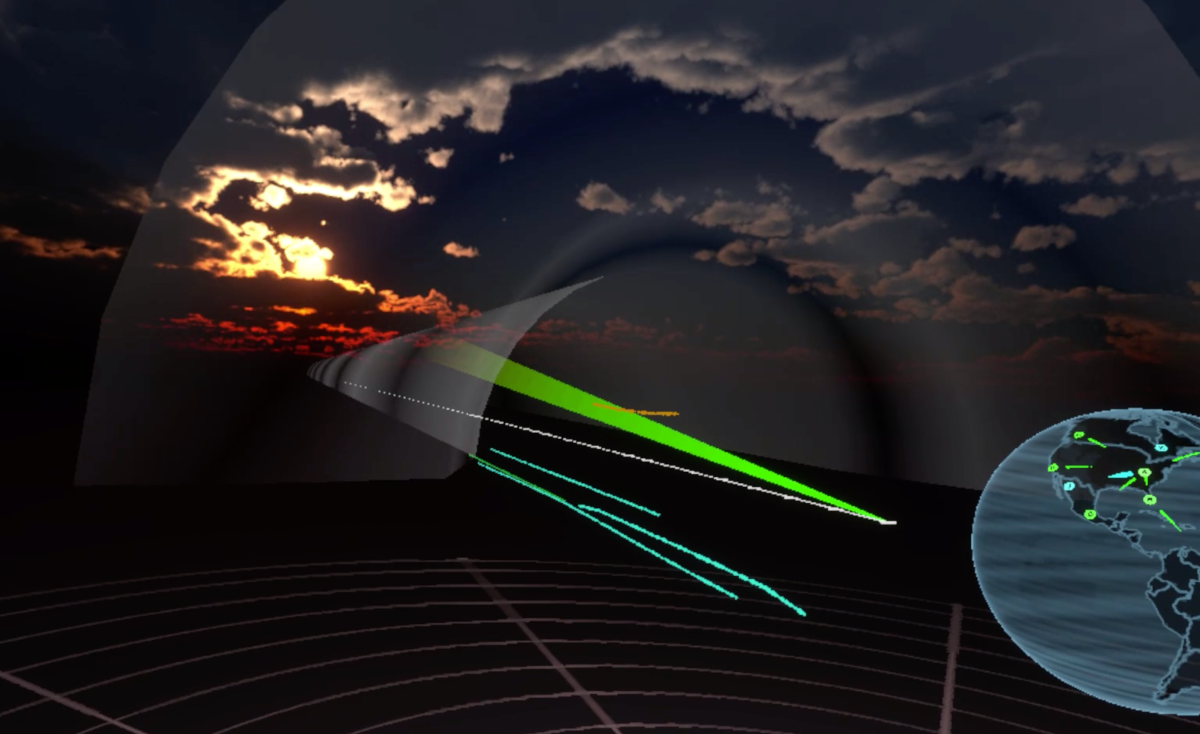

The urban wind turbine is not the only innovation proposed by Unéole. The central aim of this project is to combine potential renewable energies to find the optimal energy mix for a given location. As such, a considerable amount of modeling is required in order to analyze the winds on site. That means modeling a neighborhood by taking into consideration all the details that affect wind: topographical relief, buildings, vegetation, etc. Once the data about the wind has been obtained from Météo France, the team studies how the wind will behave in a given situation on a case-by-case basis.

“Depending on relief and location, the energy capacity of the wind turbine can change dramatically,” says Clovis Marchetti. These wind studies allow them to create a map in order to identify locations that are best suited for promoting the turbine, and places where it will not work as well. “The goal is to determine the best way to use roofs to produce energy and optimize the energy mix, so we sometimes suggest that clients opt for photovoltaic energy,” he says.

“An important point is the complementary nature of photovoltaic energy and wind turbines,” says Clovis Marchetti. Wind turbines maintain production at night, and are also preferable for winter, whereas photovoltaics are better for summer. Combining the two technologies offers significant benefits in energy terms, such as more uniform production. “If we only install solar panels, we’ll have a peak of productivity at noon in the summer, but nothing at night,” he explains. The energy from this peak must therefore be stored, which is costly and still involves some loss of production. A more uniform production would therefore make it possible to produce energy on a more regular basis without having to store the energy produced.

To this end, Unéole is working on a project for an energy mix platform: a system that includes their urban wind turbines, supplemented with a photovoltaic roof. Blending the two technologies would make it possible to produce up to 50% more energy than photovoltaic panels installed alone.

A connected wind turbine

“We’re also working on making this wind turbine connected,” says Clovis Marchetti. This would provide two major benefits. First, the wind turbine could provide information directly about its production and working condition. This is important so that the owner can monitor the energy supply and ensure that the turbine is working properly. “If the wind turbine communicates the fact that it is not turning even though it’s windy, we know right away that action is required,” he explains.
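The check described in that quote can be sketched in a few lines. This is a hypothetical illustration, not Unéole's monitoring logic: the function name `needs_attention` and the default thresholds (a 3 m/s wind above which the rotor is expected to turn, and 1 rpm below which it counts as stopped) are assumptions chosen for the example.

```python
def needs_attention(wind_speed_ms, rotor_rpm, windy_threshold_ms=3.0, min_rpm=1.0):
    """Return True when it is windy but the rotor is (almost) not turning.

    Both thresholds are illustrative assumptions, not manufacturer values.
    """
    is_windy = wind_speed_ms >= windy_threshold_ms
    is_stopped = rotor_rpm < min_rpm
    return is_windy and is_stopped


if __name__ == "__main__":
    # Hypothetical readings reported by a connected turbine: (wind in m/s, rotor rpm).
    readings = [(6.0, 0.0), (1.0, 0.0), (6.0, 40.0)]
    for wind, rpm in readings:
        status = "check turbine" if needs_attention(wind, rpm) else "ok"
        print(f"wind {wind} m/s, rotor {rpm} rpm: {status}")
```

A stopped rotor in calm weather is normal, so the check only raises a flag when both conditions hold at once.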

In addition, a connected wind turbine could predict its production capacity based on weather forecasts. “A key part of the smart city of tomorrow is the ability to manage consumption based on production,” he says. Today, weather forecasts are fairly reliable up to 36 hours in advance, so it would be possible to adjust our behavior. Imagine, for example, that strong winds were forecast for 3 pm. In this case, it would be better to wait until then to launch a simulation that requires a lot of energy.
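As a rough sketch of this idea, the snippet below picks the start time for an energy-hungry task from an hourly production forecast. The forecast values and the function name `best_start_hour` are made up for illustration; a real system would take its forecast from the turbine and a weather service, limited to the 36 hours or so for which forecasts are reliable.

```python
def best_start_hour(forecast_kw, duration_h):
    """Return the index of the start hour whose window has the highest forecast output.

    forecast_kw: forecast production for each upcoming hour (hypothetical values).
    duration_h: how many consecutive hours the task runs.
    """
    if duration_h <= 0 or duration_h > len(forecast_kw):
        raise ValueError("duration_h must be between 1 and the forecast length")
    best_start, best_energy = 0, float("-inf")
    for start in range(len(forecast_kw) - duration_h + 1):
        energy = sum(forecast_kw[start:start + duration_h])
        if energy > best_energy:
            best_start, best_energy = start, energy
    return best_start


if __name__ == "__main__":
    # Made-up forecast for the next six hours, in kW.
    forecast = [0.1, 0.1, 0.2, 0.9, 1.0, 0.3]
    start = best_start_hour(forecast, duration_h=2)
    print(f"start the task in {start} hour(s)")
```

If several windows tie, the sketch keeps the earliest one, so the task is not delayed without reason.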