Improving the quality of concrete to optimize construction

Since the late 20th century, concrete has become the most widely used manufactured material in the world. Its popularity comes with recurring problems that affect its quality and durability. One of them arises when cement paste, one of the components of concrete, sweats. Mimoune Abadassi, a civil engineering PhD student at IMT Mines Alès, aims to resolve this problem.

“When concrete is still fresh, the water inside rises to the surface and forms condensation,” explains Abadassi. This phenomenon is called concrete sweating, and is commonly known in the construction industry as bleeding. “When this process takes place, some of the water will not reach the surface and remains trapped inside the concrete, which can weaken the structure,” adds the researcher, before specifying that “sweating does not only have negative effects on the concrete’s quality, as water allows the material to be damp cured, which prevents it from drying out and cracks appearing that would reduce durability”.

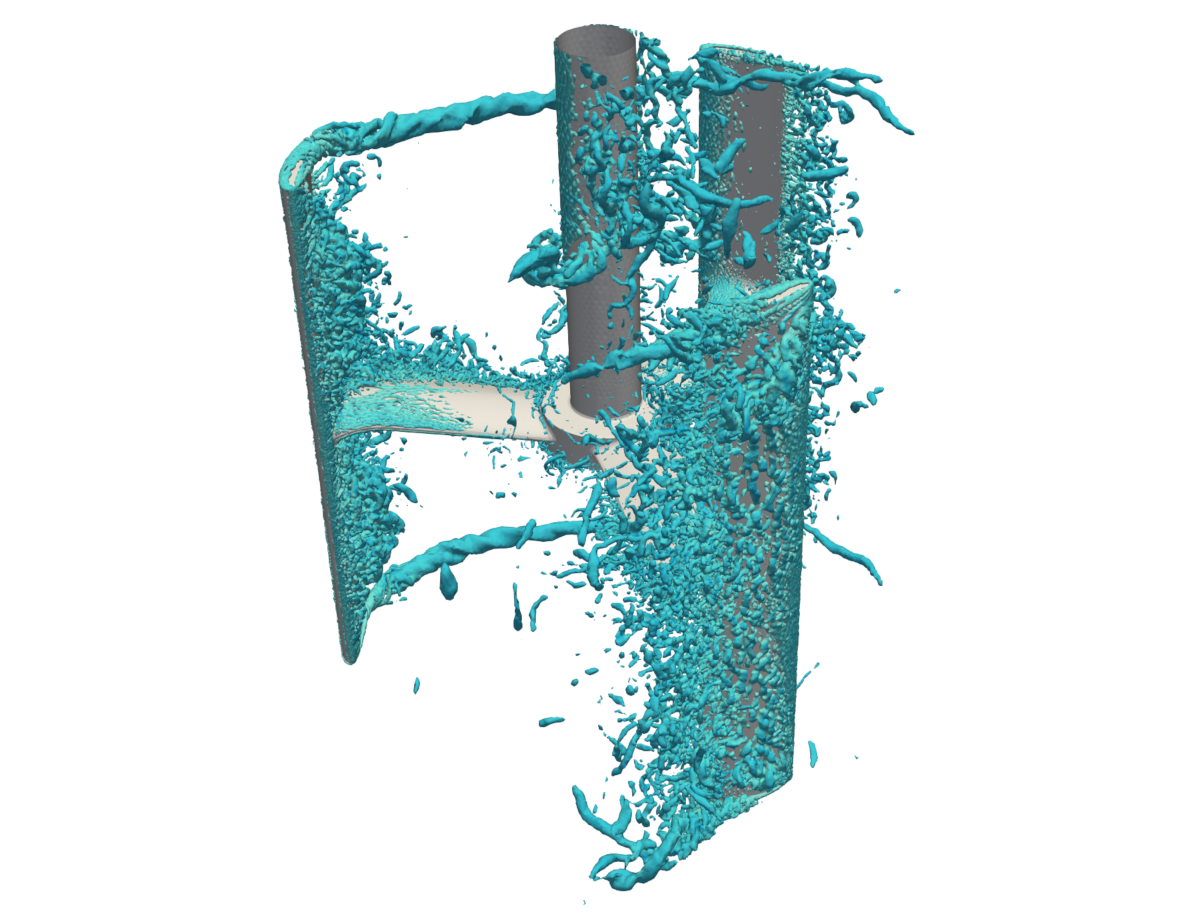

In his thesis, Abadassi studies the sweating of cement paste, one of the components of concrete alongside sand and gravel. By analyzing cement paste prepared with varying amounts of water, the young researcher has observed that the more water is incorporated into the cement paste, the more it sweats. He has also looked into the effect of superplasticizers, chemical products that, when included in the cement paste, make it more fluid and more malleable when fresh and more resilient when hardened. “When we increase the amount of superplasticizer, we have observed that the cement paste sweats more as well,” indicates Abadassi. “This is explained by the fact that superplasticizers disperse suspended cement particles and encourage the water contained in clusters formed by these particles to be released,” he points out, before adding that “this phenomenon causes the volume of water in the mixture to increase, which increases the sweating of the cement paste”.

Research at the nanometric, microscopic and macroscopic levels

By influencing sweating, superplasticizers also affect the permeability of cement paste. To study the paste’s permeability when fresh, Abadassi used an oedometer, a device mainly used in the field of soil mechanics. An oedometer compresses a sample, extracts the water it contains and measures the volume of that water to determine how permeable the sample is. The larger the volume of water recovered, the more permeable the sample. If cement paste is too permeable, more water will enter, which reduces cohesion between aggregate particles and weakens the material’s structure.

By varying certain parameters when preparing the cement paste, such as the amount of superplasticizer, Abadassi aims to observe changes within the paste that are invisible to the naked eye. To do so, he uses a Turbiscan. This machine, generally used in the cosmetics industry, makes it possible to analyze particle dispersion and cluster structure in the near-infrared. By observing the sample at scales ranging from the nanometer to the millimeter, it is possible to identify the formation of flocs: groups of suspended particles that adhere to one another and that, in the presence of superplasticizers, separate and release water into the cement paste mixture.

To understand the consequences of phenomena in cement paste at the microscopic and mesoscopic scale, Abadassi uses a scanning electron microscope. This instrument makes it possible to observe the paste’s microstructure and the interfaces between aggregate particles at a nanometric and microscopic scale. “With this technique, I can visualize internal sweating, shown by the presence of water stuck between aggregate particles and not rising to the surface,” he explains. Once the concrete has hardened, the scanning electron microscope can be used to identify cracking and cavity formation caused by the sweating paste.

Abadassi has also studied the effects of an essential stage in cement paste production: vibration. This process allows cement particles to be rearranged, leaving the smallest possible gaps between them and therefore making the paste more compact and durable. After vibrating the cement paste at various frequencies, Abadassi concluded that sweating is more likely at higher frequencies. “Vibrating cement particles in suspension will cause them to be rearranged, which will lead to the water contained in flocs being released,” he describes, adding that “the greater the vibration, the more the particles will rearrange and the more water will be released”.

Once these trials are finished, the concrete’s mechanical performance will be analyzed. One test involves applying mechanical pressure to a concrete sample to measure its resistance to that pressure. The results of this experiment will be linked with the microscope observations, the Turbiscan tests and the trials varying the parameters of the cement paste formula. All of Abadassi’s results will be used to create a range of formulas that concrete production companies can use. These formulas will give them the optimal quantities of components, such as water and superplasticizers, to include when preparing cement paste for use in concrete. In this way, the quality and durability of the most widely used manufactured material in the world could be improved.

Rémy Fauvel