AI-4-Child “Chaire” research consortium: innovative tools to fight against childhood cerebral palsy

In conjunction with the GIS BeAChild, the AI-4-Child team is using artificial intelligence to analyze images related to cerebral palsy in children. This could lead to better diagnoses, innovative therapies and progress in patient rehabilitation. But also a real breakthrough in medical imaging.

The original version of this article was published on the IMT Atlantique website, in the News section.

Cerebral palsy is the leading cause of motor disability in children, affecting nearly two out of every 1,000 newborns. And it is irreversible. The AI-4-Child chaire (French research consortium), managed by IMT Atlantique and the Brest University Hospital, is dedicated to fighting this dreaded disease, using artificial intelligence and deep learning, which could eventually revolutionize the field of medical imaging.

“Cerebral palsy is the result of a brain lesion that occurs around birth,” explains François Rousseau, head of the consortium, professor at IMT Atlantique and a researcher at the Medical Information Processing Laboratory (LaTIM, INSERM unit). “There are many possible causes – prematurity or a stroke in utero, for example. This lesion, of variable importance, is not progressive. The resulting disability can be more or less severe: some children have to use a wheelchair, while others can retain a certain degree of independence.”

Created in 2020, AI-4-Child brings together engineers and physicians. The result of a call for ‘artificial intelligence’ projects from the French National Research Agency (ANR), it operates in partnership with the company Philips and the Ildys Foundation for the Disabled, and benefits from various forms of support (Brittany Region, Brest Metropolis, etc.). In total, the research program has a budget of around €1 million for a period of five years.

François Rousseau, professor at IMT Atlantique and head of the AI-4-Child chaire (research consortium)

Hundreds of children being studied in Brest

AI-4-Child works closely with BeAChild*, the first French Scientific Interest Group (GIS) dedicated to pediatric rehabilitation, headed by Sylvain Brochard, professor of physical medicine and rehabilitation (MPR). Both structures are linked to the LaTIM lab (INSERM UMR 1101), housed within the Brest CHRU teaching hospital. The BeAChild team is also highly interdisciplinary, bringing together engineers, doctors, pediatricians and physiotherapists, as well as psychologists.

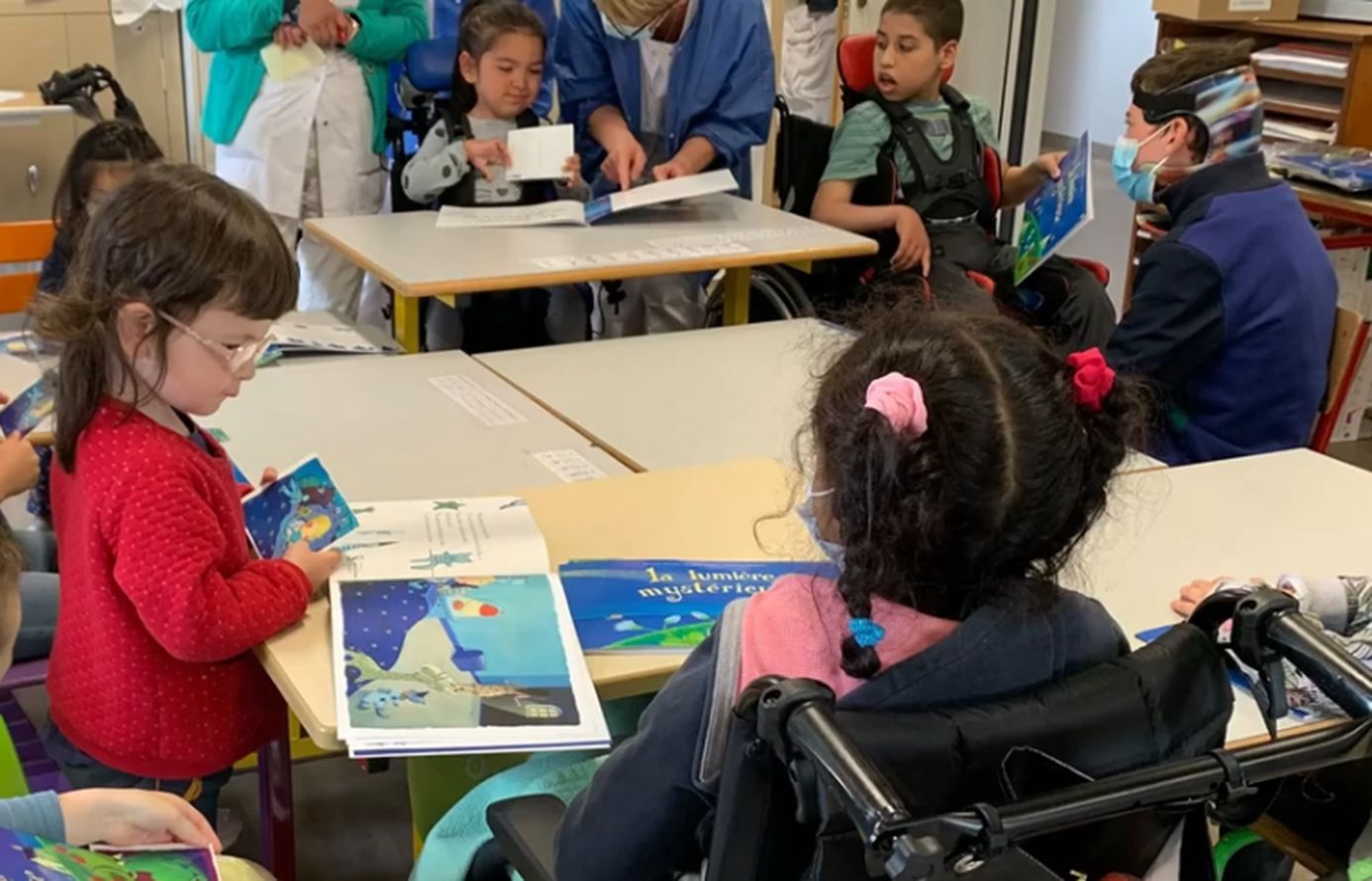

Hundreds of children from all over France and even from several European countries are being followed at the CHRU and at Ty Yann (Ildys Foundation). By bringing together all the ‘stakeholders’ – patients and families, health professionals and imaging specialists – on the same site, Brest offers a highly innovative approach, which has made it a reference center for the evaluation and treatment of cerebral palsy. This has enabled the development of new therapies to improve children’s autonomy and made it possible to design specific applications dedicated to their rehabilitation.

“In this context, the mission of the chair consists of analyzing, via artificial intelligence, the imagery and signals obtained by MRI, movement analysis or electroencephalograms,” says Rousseau. These observations can be made from the fetal stage or during the first years of a child’s life. The research team is working on images of the brain (location of the lesion, possible compensation by the other hemisphere, link with the disability observed, etc.), but also on images of the neuro-musculo-skeletal system, obtained using dynamic MRI, which help to understand what is happening inside the joints.

‘Reconstructing’ faulty images with AI

But this imaging work is complex. The main pitfall is the poor quality of the images collected, due to camera shake or artifacts during the shooting. So AI-4-Child is trying to ‘reconstruct’ them, using artificial intelligence and deep learning. “We are relying in particular on good quality views from other databases to achieve satisfactory resolution,” explains the researcher. Eventually, these methods should be able to be applied to routine images.

Significant progress has already been made. A doctoral student is studying images of the ankle obtained in dynamic MRI and ‘enriched’ by other images using AI – static images, but in very high resolution. “Despite a rather poor initial quality, we can obtain decent pictures,” notes Rousseau. Significant differences between the shapes of the ankle bone structure were observed between patients and are being interpreted with the clinicians. The aim will then be to better understand the origin of these deformations and to propose adjustments to the treatments under consideration (surgery, toxin, etc.).

The second area of work for AI-4-Child is rehabilitation. Here again, imaging plays an important role: during rehabilitation courses, patients’ gait is filmed using infrared cameras and a system of sensors and force plates in the movement laboratory at the Brest University Hospital. The ‘walking signals’ collected in this way are then analyzed using AI. For the moment, the team is in the data acquisition phase.

Several areas of progress

The problem, however, is that a patient often does not walk in the same way during the course and when they leave the hospital. “This creates a very strong bias in the analysis,” notes Rousseau. “We must therefore check the relevance of the data collected in the hospital environment… and focus on improving the quality of life of patients, rather than the shape of their bones.”

Another difficulty is that the data sets available to the researchers are limited to a few dozen images – whereas some AI applications require several million, not to mention the fact that this data is not homogeneous, and that there are also losses. “We have therefore become accustomed to working with little data,” says Rousseau. “We have to make sure that the quality of the data is as good as possible.” Nevertheless, significant progress has already been made in rehabilitation. Some children are able to ride a bike, tie their shoes, or eat independently.

In the future, the AI-4-Child team plans to make progress in three directions: improving images of the brain, observing bones and joints, and analyzing movement itself. The team also hopes to have access to more data, thanks to a European data collection project. Rousseau is optimistic: “Thanks to data processing, we may be able to better characterize the pathology, improve diagnosis and even identify predictive factors for the disease.”

* BeAChild brings together the Brest University Hospital Centre, IMT Atlantique, the Ildys Foundation and the University of Western Brittany (UBO). Created in 2020 and formalized in 2022 (see the French press release), the GIS is the culmination of a collaboration that began some fifteen years ago on the theme of childhood disability.