What is a Quantum Computer?

The use of quantum logic for computing promises a radical change in the way we process information. The calculating power of a quantum computer could surpass that of today’s biggest supercomputers within ten years. Romain Alléaume, a researcher in quantum information at Télécom ParisTech, helps us to understand how these machines work.

Is the nature of the information in a quantum computer different?

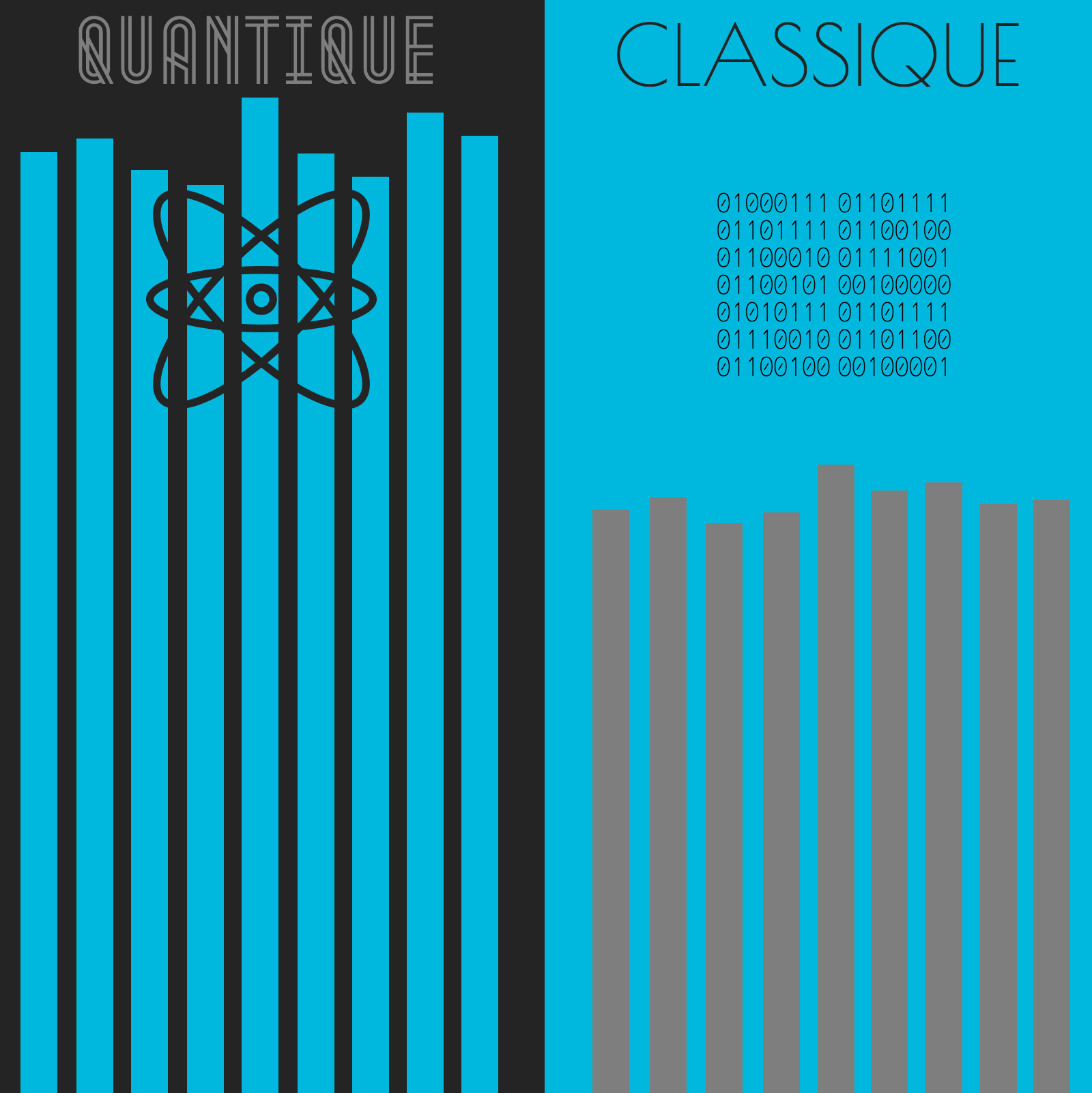

Romain Alléaume: In classical computer science, information is encoded in bits: 1 or 0. It is not quite the same in quantum computing, where information is encoded on what we refer to as “quantum bits”, or qubits, and there is a big difference between the two. A standard bit exists in one of two states, either 0 or 1. A qubit can exist in any superposition of these two states, and can therefore take many more than two values.
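To make the idea of superposition concrete, here is a minimal Python sketch. It assumes the standard textbook description of a qubit as a pair of complex amplitudes, one for state 0 and one for state 1, whose squared magnitudes give the measurement probabilities. The function names `normalize` and `measurement_probabilities` are hypothetical, chosen only for this illustration.

```python
import math

def normalize(alpha, beta):
    """Rescale two amplitudes so that |alpha|^2 + |beta|^2 == 1."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    return alpha / norm, beta / norm

def measurement_probabilities(alpha, beta):
    """Probability of reading 0 and of reading 1 when the qubit is measured."""
    return abs(alpha) ** 2, abs(beta) ** 2

# A classical bit corresponds to (1, 0) or (0, 1).
# A qubit may also sit in between, e.g. an equal superposition of 0 and 1.
alpha, beta = normalize(1, 1)
p0, p1 = measurement_probabilities(alpha, beta)
print(f"P(0) = {p0:.3f}, P(1) = {p1:.3f}")

# Amplitudes can be complex: this state has the same probabilities
# but a different relative phase between the 0 and 1 components.
alpha_c, beta_c = normalize(1, 1j)
p0_c, p1_c = measurement_probabilities(alpha_c, beta_c)
```

Any normalized pair of amplitudes is a valid qubit state, which is why a qubit is not limited to the two values of a classical bit.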

There is a stark difference between using several bits and using several qubits. While n standard bits can only take one value among 2^n possibilities, n qubits can take on any combination of these 2^n states. For example, 5 bits take a value among 32 possibilities: 00000, 00001… right up to 11111. Five qubits can take on any linear superposition of these 32 states, each described by 32 complex amplitudes, which gives far more than one billion distinct states. This phenomenal expansion in the size of the space of accessible states is what explains the quantum computer’s greater computing capacity.
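The counting argument above can be sketched in a few lines of Python. This is only an illustration under the usual state-vector picture: a register of n classical bits holds one string out of 2^n, while a register of n qubits is described by one amplitude per string. The helpers `classical_states` and `uniform_superposition` are hypothetical names introduced for this example.

```python
import math
from itertools import product

def classical_states(n):
    """All bit strings that n classical bits can hold, one at a time."""
    return ["".join(bits) for bits in product("01", repeat=n)]

def uniform_superposition(n):
    """One real amplitude per bit string, all equal, with total probability 1."""
    dim = 2 ** n
    amplitude = 1 / math.sqrt(dim)
    return [amplitude] * dim

n = 5
strings = classical_states(n)      # 00000, 00001, ..., 11111
state = uniform_superposition(n)   # one amplitude for each of those strings

print(len(strings), "classical possibilities")
print(len(state), "amplitudes needed to describe the qubit register")
```

The number of amplitudes doubles with every added qubit, which is the growth in the space of accessible states the interview refers to.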

Concretely, what does a qubit look like?

RA: Concretely, we can encode a qubit on any quantum system with two states. The most favourable experimental systems are the ones we know how to manipulate precisely. This is the case, for instance, with the energy levels of electrons in an atom. In quantum mechanics, the energy of an electron “trapped” around an atomic nucleus may take different values, and these energy levels take on specific “quantized” values, hence the name quantum mechanics. We can call the first two energy levels of the atom 0 and 1: 0 corresponding to the lowest level of energy and 1 to a higher level of energy, known as the “excited state”. We can then encode a quantum bit by putting the atom in the 0 or in the 1 state, but also in any superposition (linear combination) of the 0 state and the 1 state.

To create good qubits, we have to find systems such that the quantum information remains stable over time. In practice, creating very good qubits is an experimental feat: atoms tend to interact with their surroundings and lose their information. We call this phenomenon decoherence. To avoid decoherence, we have to carefully protect the qubits, for example by putting them in very low temperature conditions.

What type of problems does the quantum computer solve efficiently?

RA: It exponentially increases the speed with which we can solve “promise problems”, that is, problems with a defined structure, where we know the shape of the solutions we are looking for. However, for a reverse directory lookup, for example (finding a name from a phone number), the quantum computer has only been proven to speed up the process by a square-root factor compared with a regular computer. There is a speedup, but not a spectacular one.
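The square-root speedup can be put into numbers with a short Python sketch. It assumes the speedup in question is the one obtained with Grover's search algorithm, which the interview does not name, and it uses the standard estimate of about (π/4)·√N iterations for a search among N unsorted entries. The function names are hypothetical.

```python
import math

def classical_expected_lookups(n_entries):
    """Average number of entries checked by a linear scan
    when the target is equally likely to be at any position."""
    return (n_entries + 1) / 2

def grover_iterations(n_entries):
    """Approximate number of Grover iterations for one marked entry
    (assumption: standard (pi / 4) * sqrt(N) estimate)."""
    return math.floor(math.pi / 4 * math.sqrt(n_entries))

for n_entries in (1_000, 1_000_000, 1_000_000_000):
    classical = classical_expected_lookups(n_entries)
    quantum = grover_iterations(n_entries)
    print(f"N = {n_entries:>13,}: classical ~ {classical:,.0f}, quantum ~ {quantum:,}")
```

The gap widens as the directory grows, but it stays polynomial: multiplying the number of entries by 100 multiplies the quantum cost by about 10, rather than leaving it almost unchanged as an exponential speedup would.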

It is important to understand that the quantum computer is not magic and cannot accelerate every computational problem. In particular, one should not expect quantum computers to replace classical computers. Their main use will probably be simulating quantum systems that cannot be simulated with standard computers, such as chemical reactions or superconductivity. While quantum simulators are likely to be the first concrete application of quantum computing, we also know of quantum algorithms that can be applied to solve complex optimization problems, or to accelerate computations in machine learning. We can expect to see quantum processors used as co-processors, to accelerate specific computational tasks.

What could be the impact of the advent of large quantum computers?

RA: The construction of large quantum computers would enable us to break most of the cryptography that is used today on the Internet. The advent of large quantum computers is unlikely to occur in the next 10 years. Yet, as encrypted data are stored for years, even tens of years, we need to start thinking now about new cryptographic techniques that will be resistant to the quantum computer.


When will the quantum computer compete with classical supercomputers?

RA: Even more than classical computing, quantum computing requires error-correcting codes, both to improve the quality of the information encoded on qubits and to scale up. We can currently build a quantum computer with just over a dozen qubits, and we are beginning to develop small quantum computers which work with error-correcting codes. We estimate that a quantum computer must have 50 qubits in order to outperform a supercomputer and solve problems which are currently beyond reach. In terms of time, we are not far away: probably five years from this important step, often referred to as “quantum supremacy”.
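One common back-of-the-envelope way to see why a figure around 50 qubits matters is to estimate the memory a classical machine would need to store the full quantum state. The sketch below is an assumption-laden illustration, not the interviewee's own reasoning: it assumes a brute-force state-vector simulation with one double-precision complex number (16 bytes) per amplitude, and the names `BYTES_PER_AMPLITUDE` and `state_vector_bytes` are hypothetical.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: two 64-bit floats per complex amplitude

def state_vector_bytes(n_qubits):
    """Memory needed to store all 2^n amplitudes of an n-qubit register."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE

def human_readable(n_bytes):
    """Format a byte count using binary units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(n_bytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:.0f} {unit}"
        value /= 1024

for n_qubits in (12, 30, 40, 50):
    print(f"{n_qubits} qubits: {human_readable(state_vector_bytes(n_qubits))}")
```

Under these assumptions, a dozen qubits fit in a few kilobytes, while 50 qubits require petabytes of memory, which is the scale of the largest supercomputers. Smarter simulation techniques can shift this threshold, so the number should be read as an order of magnitude.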