Precision measurement and characterization

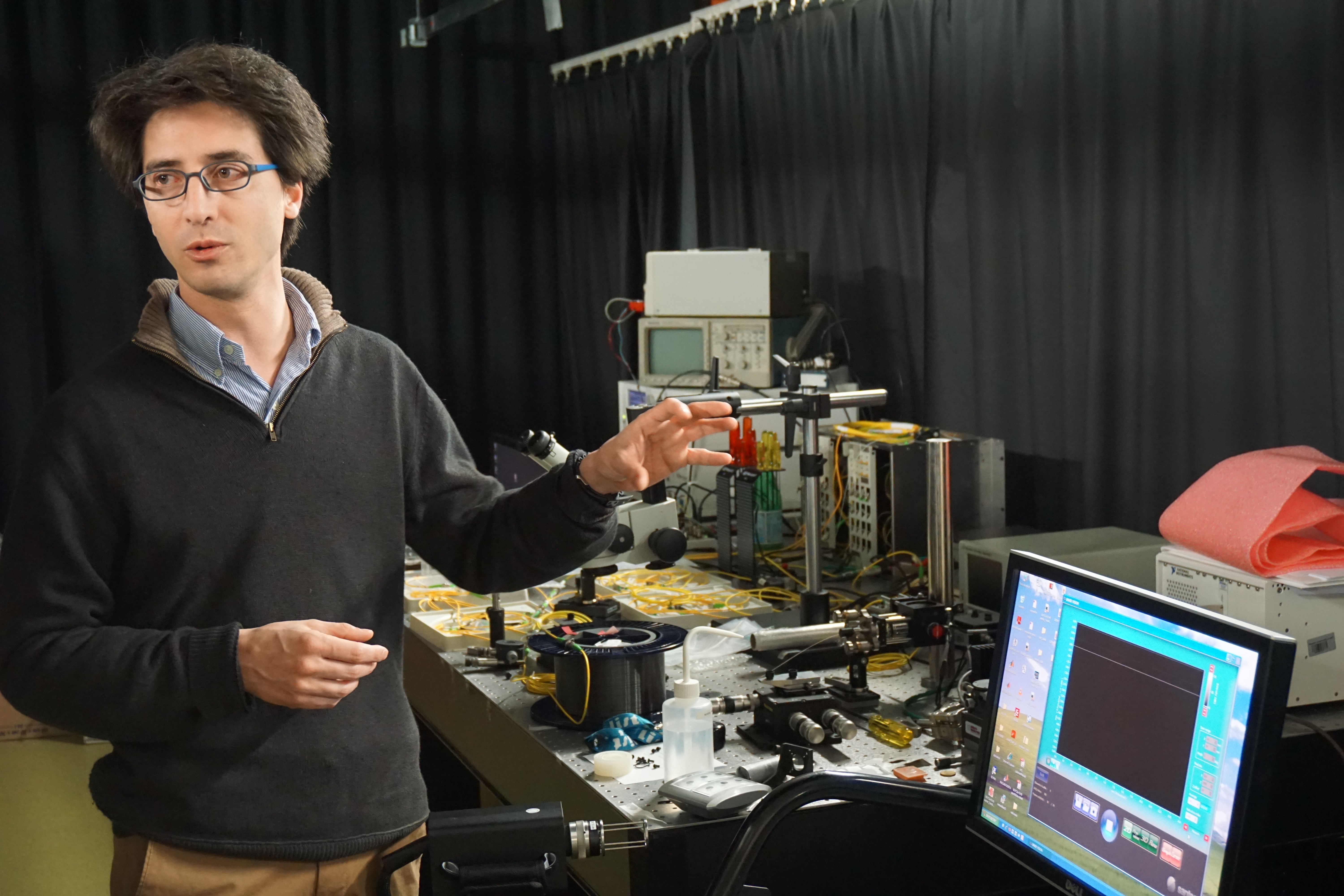

Yaneck Gottesman, a metrologist and specialist in the analysis and characterization of components, contributes to the development of the Optics and Photonics laboratory at Télécom SudParis. The lab is equipped with innovative, high-performance electronic measurement instruments whose applications span healthcare, telecoms and security.

Why does a component stop working? What happens in its transition from ‘working’ to ‘broken’? What unforeseen physics is at work? These are the questions Yaneck Gottesman tries to answer; he is interested in “seeking out the flaws in our understanding of everyday objects”. Metrology, the science of measurement, demands both precision and adaptability, and it lies at the heart of the professor’s research at Télécom SudParis.

One example is an experiment in which the optical properties of a mirror gave results that were difficult to explain. It took a year of questioning the interpretive framework to show that the object was subject to almost undetectable vibrations, with an amplitude well below one micron. The experiment nonetheless paid off: it enabled the team to develop its expertise and establish a protocol for characterizing the dynamic properties of the objects studied. “When I carry out an analysis, I always have to ask myself what I am really measuring and whether I need to reconsider the model. It is a question of being able to dissociate the object being measured from the instrument used to measure it.” In this case, the team had to break free of a supposedly static context in order to carry out a full dynamic analysis.

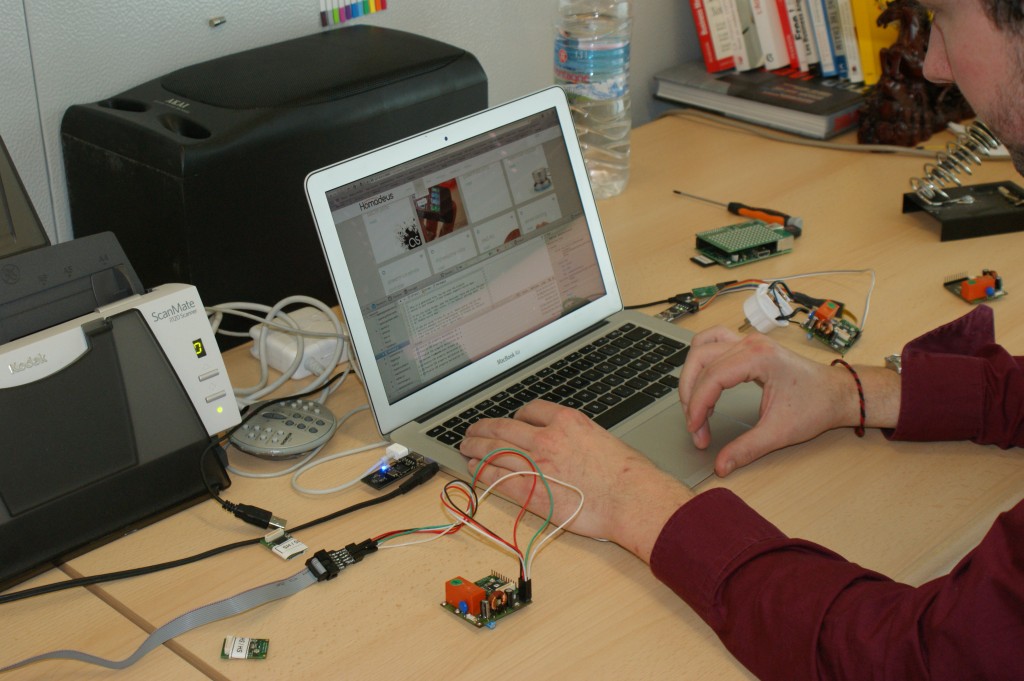

‘Homemade’ instruments to meet specific requirements

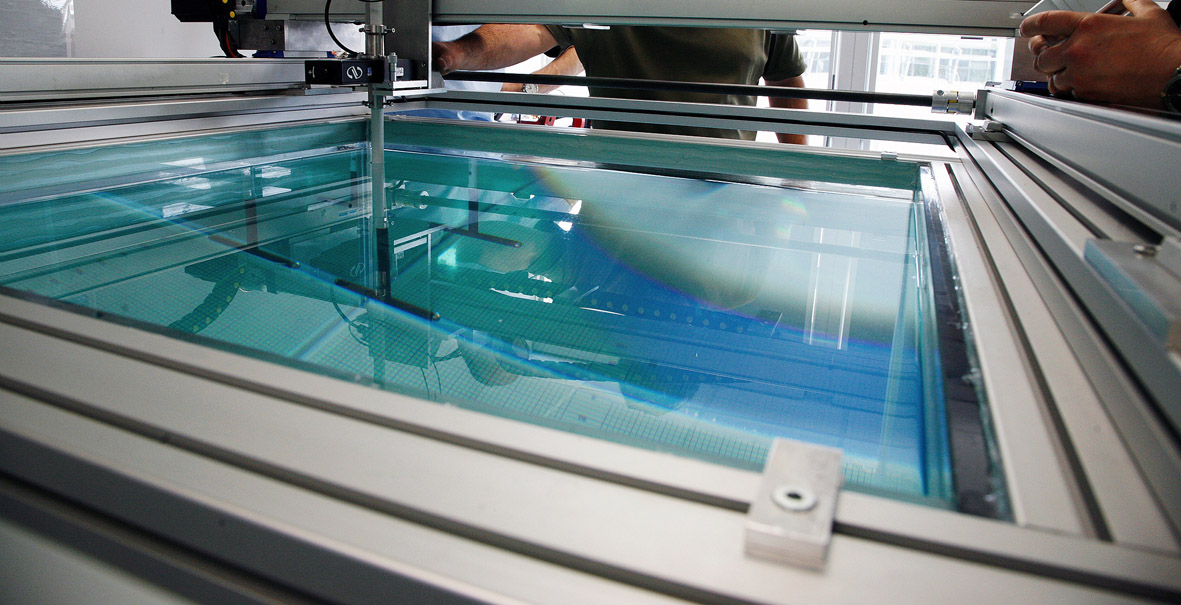

This ability to step outside the conventional framework is a fundamental quality: it is what makes it possible to develop these specific tools, and it is a strength of the Optics and Photonics laboratory. Such tools include the OLCI (Optical Low Coherence Interferometry) and the OFDI (Optical Frequency Domain Interferometry). These two instruments were specially developed for measurement and analysis with micrometric resolution over distances of up to 200 m, depending on the instrument used. Interferometry is a method that uses two signals produced by the same optical source: one serves as a reference, while the other probes the object to be analyzed. Data is obtained by superimposing these two signals, which have propagated under different conditions.
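To illustrate how superimposing a reference signal and a probe signal yields distance information, here is a minimal Python sketch of the general frequency-domain principle behind OFDI. It is not the laboratory’s implementation; it assumes a single ideal reflector, an interferogram sampled at equally spaced optical frequencies, and a known group index. The function name `ofdi_reflectogram` and all parameter values are hypothetical. Because the interference term oscillates as cos(2πντ) with the optical frequency ν, a Fourier transform over ν turns each round-trip delay τ into a peak, and that delay converts to a one-way distance z = cτ/(2n).

```python
import numpy as np

C = 299_792_458.0  # speed of light in vacuum, m/s


def ofdi_reflectogram(interferogram, freq_step_hz, group_index=1.0):
    """Hypothetical helper: convert an interferogram sampled at equally
    spaced optical frequencies into reflection amplitude vs. distance.

    Assumes an ideal linear frequency sweep and a single, known group index.
    """
    samples = np.asarray(interferogram, dtype=float)
    n = samples.size
    # Remove the non-interfering (DC) background before transforming.
    ac = samples - samples.mean()
    # A Hann window reduces side lobes around each reflection peak.
    amplitude = np.abs(np.fft.rfft(ac * np.hanning(n)))
    # FFT bin m corresponds to a round-trip delay tau = m / (n * freq_step_hz).
    delays_s = np.fft.rfftfreq(n, d=freq_step_hz)
    # Round-trip delay -> one-way distance in a medium of the given group index.
    distances_m = C * delays_s / (2.0 * group_index)
    return distances_m, amplitude


# Usage with simulated data: one reflector 1 m away in free space.
N = 4096          # number of frequency samples (assumed)
DNU = 10e6        # frequency step in Hz (assumed)
NU0 = 193.4e12    # start of the sweep, about 1550 nm (assumed)
z_true = 1.0      # reflector distance in metres (assumed)

nu = NU0 + DNU * np.arange(N)
tau = 2.0 * z_true / C  # round-trip delay of the probe signal
# Reference and probe superimposed: background plus an interference term.
signal = 1.0 + 0.5 * np.cos(2.0 * np.pi * nu * tau)

distances, amp = ofdi_reflectogram(signal, DNU)
peak_distance = distances[np.argmax(amp)]
print(f"reflector found at {peak_distance:.4f} m")
```

In this simplified model, the distance step between FFT bins is c/(2nB), where B = NΔν is the total frequency sweep, and the maximum unambiguous range is c/(4nΔν). A wider sweep sharpens resolution while a finer frequency step extends reach, which is one way to see why resolution and range must be traded off from one instrument to another.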

In research carried out by several French laboratories on next-generation optical fibers, for example, the tools on the market were not suitable because the results they recorded could be interpreted ambiguously. This difficulty led Yaneck and his colleagues to work on controlling the emission properties of the source, designing a flexible interferometric architecture and mastering the entire signal-processing chain. This approach was decisive in demonstrating an unusual property of the fibers studied.

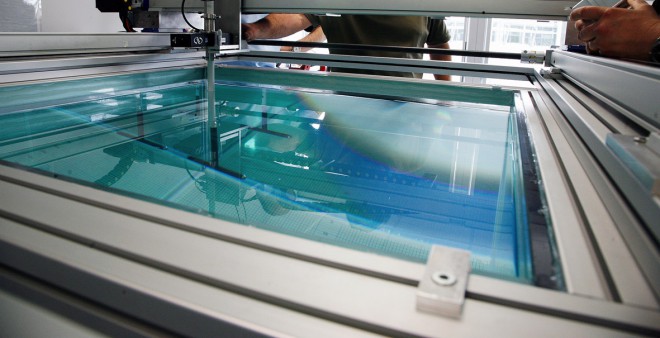

Prototype of the frequency-domain reflectometry bench. Among other things, this system is used for the spectral, spatial and modal characterization of optoelectronic circuits and components.

Quality and variety of the data recorded

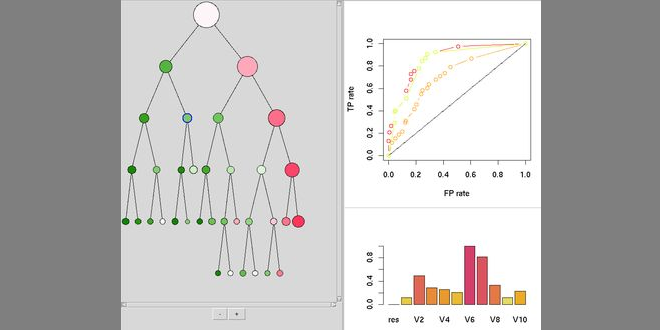

What really adds value to the work carried out with the laboratory’s instruments, however, “is the quality and variety of information recorded simultaneously when an object is measured”. The first challenge, a very important and difficult one, is the limit on an instrument’s absolute precision. Here, precision is ensured by a systematic approach that combines benchmarks, optical referencing methods and electronic circuits that digitally compensate for optical fluctuations specific to the environment and the instrument. The second challenge concerns the diversity of the information recorded. The proposed solution is to develop instruments capable of simultaneously recording all the vector quantities of the collected light, such as intensity, temporal and spatial phase, and polarization. Thanks to this ‘all in one’ approach, each instrument becomes a spectrum analyzer as well as an ellipsometer, a telemeter or a Doppler scanner. Moreover, this diversity provides detailed information on the electromagnetic field that would otherwise be unobtainable.
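Once amplitude and phase are recorded for two orthogonal polarization components, standard polarization quantities follow directly, which is one way to see how a single instrument can double as an ellipsometer. The minimal Python sketch below is an illustration, not the laboratory’s processing chain: it assumes the two complex field components `ex` and `ey` have already been measured, the function names are hypothetical, and the sign of S3 (the handedness of circular polarization) depends on the phase convention chosen.

```python
import math


def stokes_from_fields(ex: complex, ey: complex) -> tuple[float, float, float, float]:
    """Hypothetical helper: Stokes parameters from two orthogonal complex field components."""
    ix = abs(ex) ** 2
    iy = abs(ey) ** 2
    cross = complex(ex).conjugate() * complex(ey)
    s0 = ix + iy           # total intensity
    s1 = ix - iy           # horizontal vs. vertical linear polarization
    s2 = 2.0 * cross.real  # +45 deg vs. -45 deg linear polarization
    s3 = 2.0 * cross.imag  # circular polarization; sign depends on the phase convention
    return s0, s1, s2, s3


def ellipse_angles(s0: float, s1: float, s2: float, s3: float) -> tuple[float, float]:
    """Orientation (psi) and ellipticity (chi) angles of the polarization ellipse, in radians."""
    psi = 0.5 * math.atan2(s2, s1)
    # Clamp to guard against rounding pushing the ratio slightly outside [-1, 1].
    ratio = max(-1.0, min(1.0, s3 / s0))
    chi = 0.5 * math.asin(ratio)
    return psi, chi


# Usage: light linearly polarized at 45 degrees.
h = 1 / math.sqrt(2)
s = stokes_from_fields(h, h)
print(s, ellipse_angles(*s))
```

Working from the measured complex fields rather than from separate intensity readings means that polarization, like intensity and phase, comes from the same acquisition.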

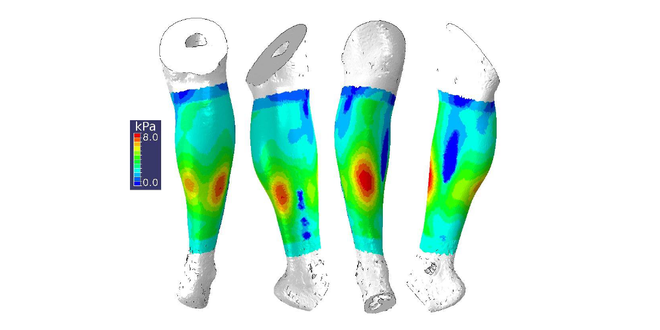

The approaches that have been developed, some of which have been patented by Télécom SudParis, provide extremely powerful observation tools whose uses can be specialized according to the objects examined: fiber-optic instruments for optoelectronic components, for example, or free-space instruments for OCT (optical coherence tomography) imaging. The potential applications are wide-ranging. In the healthcare sector, biosensors are very promising (see insert), as is cell imaging; once biologists start using these instruments, a large number of medical applications will emerge. Other fields stand to benefit as well, such as telecommunications, where components would gain from extremely precise diagnostics. Security will also benefit from unforgeable biometric sensors, a property that stems directly from the variety of measurements carried out simultaneously by these unique devices.

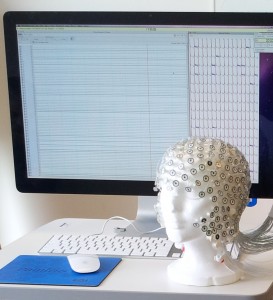

The OLCI, patented and serving healthcare

Radical transformations are expected in healthcare thanks to innovative measurement instruments. The OLCI and its use with OCT, both patented by Institut Mines-Télécom, may allow the early diagnosis of certain illnesses. Imagine a drop of blood placed on a miniature optical surface divided into specialized zones, in which millions of biophotonic sensors react with the molecules present in the blood. These chemical reactions modify the optical properties of the surface, which the OLCI then analyzes in detail. The results are correlated and interpreted by a physician in order to provide a very precise diagnosis.

An unrivaled instrumental platform and level of expertise

The laboratory has an instrument base named the VCIS (Versatile Coherence Interferometry Setup), which can be combined and configured according to needs and applications. It relies on high-performance tools that Yaneck Gottesman hopes will be used by laboratories, universities, industrial manufacturers and researchers alike: “whether they are private or public, the platform is open for them to come and analyze or evaluate objects of interest in detail, test the performance of their components or develop new, more specialized instruments using the existing modular base.”

Besides the economic benefits of such partnerships, there is a desire to create a place of open exchange that will allow progress to be made in several fields at once. Attracting users and working alongside them, in order to understand their needs and benefit from their expertise, is the best way to stay in touch with each field and anticipate future developments.

Optics as a central theme

Yaneck Gottesman is an alumnus of the École Centrale de Marseille (formerly ESIM), which he left in 1997; in his final year there, he also obtained a Postgraduate Advanced Diploma in optics with the École Nationale Supérieure de Physique de Marseille. He then joined CNET in Bagneux (French National Centre for Studies in Telecommunications, now Orange Labs), where he prepared a thesis on optical reflectometry for component analysis, which he defended in 2001. He then spent a post-doctoral year at the Laboratory for Photonics and Nanostructures (LPN) of the CNRS in Marcoussis, working on non-linear optics. In 2002 he joined the Electronics and Physics department (EPH) at Télécom SudParis (then INT), where he specialized in precision measurement and the physics of optoelectronic components. He has held an accreditation to supervise research since February 2014.

Editor: Umaps

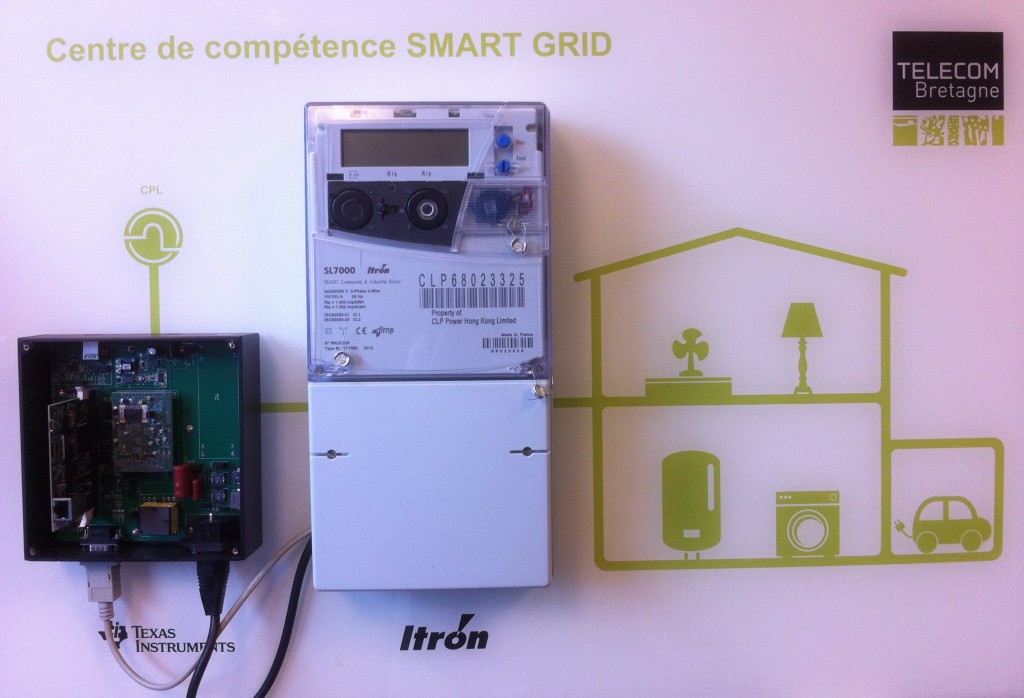

A smart grid skills center on the Rennes campus of Télécom Bretagne

A smart grid skills center on the Rennes campus of Télécom Bretagne