When information science assists artificial intelligence

The brain, information science, and artificial intelligence: Vincent Gripon is focusing his research at Télécom Bretagne on these three areas. By developing models that explain how our cortex stores information, he aims to inspire new methods of unsupervised learning. On October 4, he will present his research at a conference on the revival of artificial intelligence organized by the French Academy of Sciences. Here, he gives us a brief overview of his theory and its potential applications.

Since many of the developments in artificial intelligence are based on progress made in statistical learning, what can be gained from studying memory and how information is stored in the brain?

Vincent Gripon: There are two approaches to artificial intelligence. The first is based on mathematics. It involves formalizing a problem in terms of probabilities and statistics, and describing the objects or behaviors to be understood as random variables. The second approach is bio-inspired. It involves imitating the brain, on the principle that it is the only example of intelligence we can draw inspiration from. The brain is not only capable of performing calculations; above all, it is able to index, search, and compare information. There is no doubt that these abilities play a fundamental role in every cognitive task, including the most basic ones.

In light of your research, do you believe that the second approach has a better chance of producing results?

VG: After the Dartmouth conference of 1956, the moment at which artificial intelligence emerged as a research field, the emphasis was placed on the mathematical approach. Sixty years later, the results are mixed: many problems that a 10-year-old child could solve still cannot be solved by machines. This partly explains the period of stagnation the discipline experienced in the 1990s. The revival we have seen over the past few years stems from renewed interest in artificial neural networks, driven in particular by the rapid increase in available computing power. Given the outstanding performance of these neuro-inspired methods, which comes close to and sometimes even surpasses that of the human cortex, the pragmatic response is to favor them.

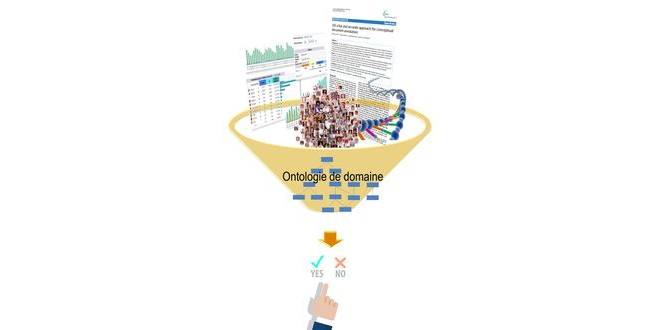

How can information theory, which is usually more concerned with error-correcting codes in telecommunications than with neuroscience, help you build an intelligent machine?

VG: When you think about it, the brain is capable of storing information, sometimes for several years, despite the constant difficulties it faces: the loss of neurons and connections, extremely noisy communication, and so on. Digital systems face very similar problems because of the miniaturization and multiplication of their components. Information theory offers a paradigm for addressing the problems of information storage and transfer that applies to any system, biological or otherwise. The concept of information is also as indispensable to any form of intelligence as calculation, which very often receives far more attention.

What model do you base your work on?

VG: As an information theorist, I start from the premise that robust information is redundant information. By applying this principle to the brain, we see that information is stored by several neurons, or by several micro-columns (clusters of neurons), following the model of a distributed error-correcting code. One model that offers outstanding robustness is the clique model. A clique is made up of several micro-columns, at least four, that are all interconnected. The advantage of this model is that even if one connection is lost, the micro-columns can all still communicate. This is a characteristic redundancy property. In addition, the same two micro-columns can belong to several cliques. As a result, every connection supports several items of information, and every item of information is supported by several connections. This dual property ensures both the robustness of the mechanism and its ability to store a great diversity of information.
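To make the clique model more concrete, here is a minimal Python sketch of a clique-based associative memory. It is an illustrative toy under stated assumptions, not the implementation used in this research: the network is assumed to be divided into clusters of units (each unit standing in for a micro-column), each stored item picks exactly one unit per cluster, connections are binary, and a missing part of an item is recovered by letting each candidate unit count its connections to the known units and keeping the best-connected one. The names `CliqueMemory`, `store`, `recall`, and `remove_edge` are hypothetical.

```python
from itertools import combinations


class CliqueMemory:
    """Toy clique-based associative memory (hypothetical sketch).

    Units are (cluster, unit) pairs. Storing an item with one unit per
    cluster connects all of its units pairwise, so the item becomes a clique.
    """

    def __init__(self, n_clusters: int, cluster_size: int) -> None:
        self.n_clusters = n_clusters
        self.cluster_size = cluster_size
        self.edges = set()  # binary connections, each a frozenset of 2 units

    def store(self, item):
        nodes = list(enumerate(item))  # item[i] is the unit chosen in cluster i
        for a, b in combinations(nodes, 2):
            self.edges.add(frozenset((a, b)))

    def remove_edge(self, a, b):
        # Simulates the loss of a single connection.
        self.edges.discard(frozenset((a, b)))

    def recall(self, partial):
        """Fill in erased clusters (None) from the known units."""
        known = [(c, u) for c, u in enumerate(partial) if u is not None]
        result = list(partial)
        for c, u in enumerate(partial):
            if u is not None:
                continue
            # Each candidate unit scores one point per connection to a
            # known unit; the best-connected candidate wins.
            scores = [
                sum(frozenset(((c, cand), k)) in self.edges for k in known)
                for cand in range(self.cluster_size)
            ]
            result[c] = max(range(self.cluster_size), key=scores.__getitem__)
        return tuple(result)


mem = CliqueMemory(n_clusters=4, cluster_size=8)
for item in [(0, 1, 2, 3), (4, 5, 6, 7), (0, 1, 6, 7)]:
    mem.store(item)

# Lose a connection shared by the first and third items.
mem.remove_edge((0, 0), (1, 1))

print(mem.recall((None, 1, 2, 3)))     # -> (0, 1, 2, 3)
print(mem.recall((0, 1, None, 7)))     # -> (0, 1, 6, 7)
print(mem.recall((4, None, None, 7)))  # -> (4, 5, 6, 7)
```

In this toy example, the connection between unit 0 of cluster 0 and unit 1 of cluster 1 supports two stored items at once. Removing it lowers the score of the correct unit when the first item is recalled, but does not change the outcome, because the remaining connections of the clique still vote for it.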

“Robust information is redundant”

How has this theory been received by the community of brain specialists?

VG: It is very difficult to build bridges between the medical field and the fields of mathematics and computer science. We do not share the same vocabulary or the same goals. For example, it is not easy to get our neuroscientist colleagues to accept that information can be stored in the very structure of the connections of a neural network, rather than in its mass. The best means of communication remains biomedical imaging, in which models are confronted with reality, which can make them easier to interpret.

Do observations made through imaging give you reason to believe your theories are valid?

VG: In particular, we work with the Signal and Image Processing Laboratory (LTSI) at Université de Rennes 1 to validate our models. To do so, we use electroencephalography to observe brain activation in subjects performing cognitive tasks. The goal is not to validate our theories directly, since the required spatial resolution is not currently attainable. Rather, the goal is to verify the macroscopic properties they predict. For example, one consequence of the neural clique model is that there is a positive correlation between the topological distance separating the representations of objects in the neocortex and the semantic distance between those same objects. In other words, the representations of two similar objects will share many of the same micro-columns. This has been confirmed through imaging. Of course, this does not validate the theory, but it can lead us to modify it or to carry out new experiments to test it.

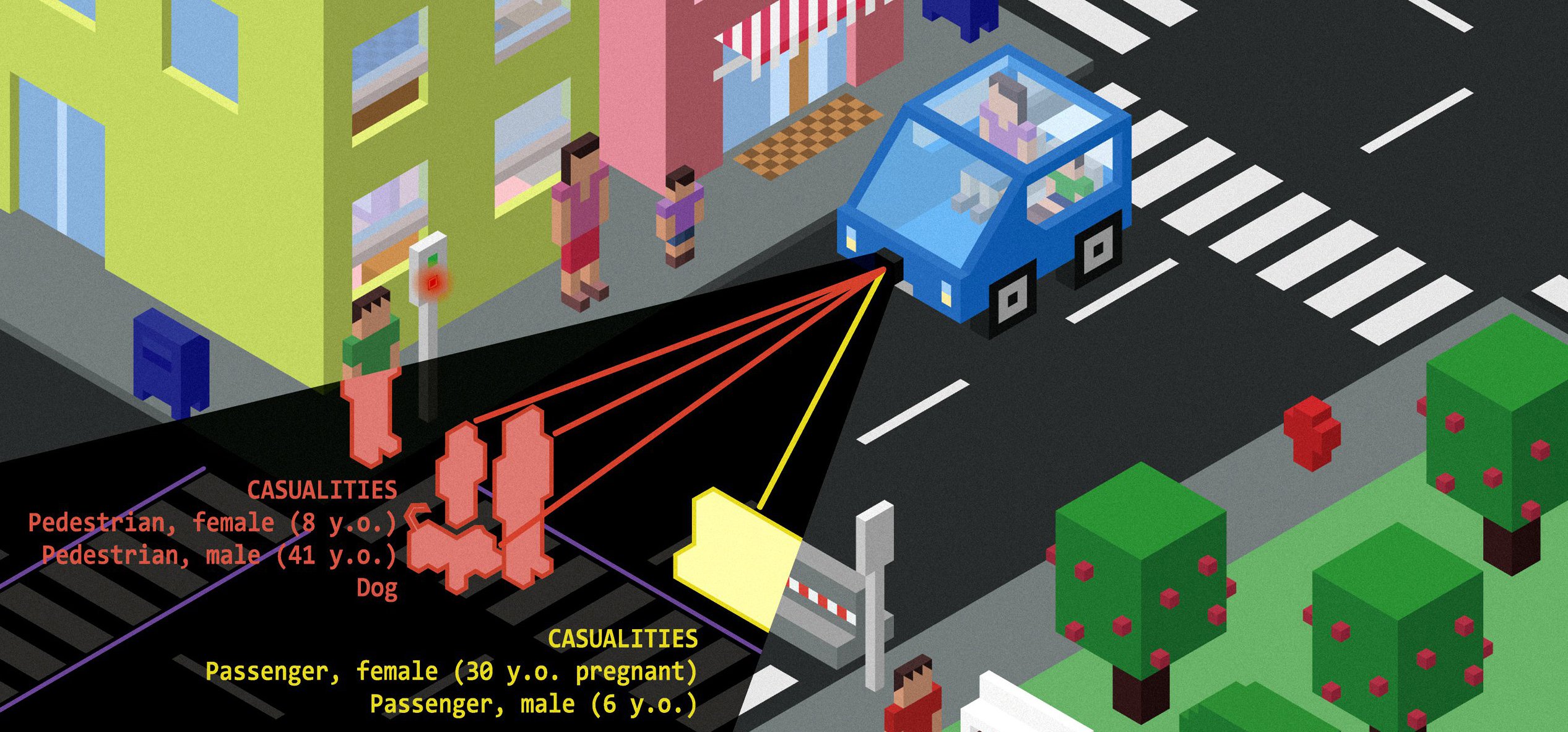

To what extent is your model used in artificial intelligence?

VG: We focus on problems related to machine learning, typically object recognition. Our model gives good results, especially in unsupervised learning, that is, learning without a human expert guiding the algorithm by indicating what it must find. Because we focus on memory, we target different applications from those usually addressed in this field. For example, we look closely at an artificial system’s ability to learn from few examples, to learn many different objects, or to learn in real time, discovering new objects at different moments. Our approach therefore complements other learning methods rather than competing with them.

Recent developments in artificial intelligence now center on artificial neural networks, in what is referred to as “deep learning”. How does your approach complement this learning method?

VG: Today, deep neural networks achieve outstanding levels of performance, as a growing number of scientific publications show. However, their performance remains limited in certain contexts. For example, these networks need an enormous amount of data to be effective. Also, once a neural network has been trained, it is very difficult to get it to take new information into account. Our model makes incremental learning possible: if a new type of object appears, we can teach it to a network that has already been trained. In short, our model is less effective for calculation and classification but better for memorization, which makes a clique network perfectly compatible with a deep neural network.
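The incremental learning described here can be illustrated with a toy clique network. Assuming binary connections that are only ever added (an assumption of this sketch, which uses hypothetical function names `clique_edges` and `train` and a one-unit-per-cluster encoding), teaching a new object to an already trained network amounts to adding one more clique, without revisiting the earlier training data:

```python
from itertools import combinations


def clique_edges(item):
    """Connections created by storing one item as a clique.

    Hypothetical encoding: item[i] is the unit chosen in cluster i.
    """
    nodes = list(enumerate(item))
    return {frozenset(pair) for pair in combinations(nodes, 2)}


def train(items, edges=None):
    """Store items by adding their cliques to an (optionally) existing network."""
    edges = set() if edges is None else set(edges)
    for item in items:
        edges |= clique_edges(item)
    return edges


old_items = [(0, 1, 2, 3), (4, 5, 6, 7)]
new_item = (0, 1, 6, 7)  # a new type of object arriving after training

trained = train(old_items)
updated = train([new_item], trained)  # only the new item is needed here

# Nothing learned earlier is lost, and the result matches training from scratch.
print(trained <= updated)                        # True
print(updated == train(old_items + [new_item]))  # True
```

Because storage in this sketch is a union of connections, the order in which objects arrive does not matter. The trade-off is capacity: as more cliques are added, the network becomes denser and spurious cliques make retrieval less reliable, so such a network cannot absorb new objects indefinitely.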

[box type="shadow" align="" class="" width=""]

Artificial intelligence at the French Academy of Sciences

Because artificial intelligence now combines approaches from several scientific fields, the French Academy of Sciences is organizing a conference on October 4 entitled “The Revival of Artificial Intelligence”. Through presentations by four researchers, the event will cover the different facets of the discipline. In addition to Vincent Gripon’s presentation, which combines information theory and neuroscience, the conference will feature presentations by Andrew Blake (Alan Turing Institute) on learning machines, Yann LeCun (Facebook, New York University) on deep learning, and Karlheinz Meier (Heidelberg University) on brain-derived computer architecture.

Practical information:

Conference on “The Revival of Artificial Intelligence”

October 4, 2016 at 2:00pm

Large meeting room at Institut de France

23, quai de Conti, 75006 Paris

[/box]
