Within the framework of the European research programme H2020, the Institut Mines-Télécom is taking part in the City4Age project, which aims to offer a smart city model adapted to the elderly. Using non-intrusive technologies, the aim is to improve their quality of life and to facilitate the work of health services. Mounir Mokhtari, researcher and director of IPAL[1], contributes to the project in the test city of Singapore. Below is an interview he gave to LePetitJournal.com, a French media outlet for French people living abroad.


Mounir Mokhtari, Director of the IPAL

LePetitJournal.com : What are the research areas and the stakes of “City4Age”?

Mounir Mokhtari : Today, in Europe as in Singapore, the population is ageing and the number of dependent elderly persons is rising sharply, even as the skilled labour force that can look after these people has decreased significantly. The usual response is institutionalisation. Our objective is to maintain the autonomy of this group of people at home and in the city, and to improve their quality of life and that of their caregivers (family, friends, etc.) by integrating non-intrusive, easy-to-use technologies into daily life.

It involves developing technological systems that motivate elderly persons in frail health to stay more active, to reinforce social ties and to prevent risks. The objective is to install non-intrusive sensors, information systems and communication devices in today’s homes, and to create simple user interfaces with everyday objects such as smartphones, TV screens and tablets, to assist dependent people in their daily living.

LPJ : What are the principal challenges in the research?

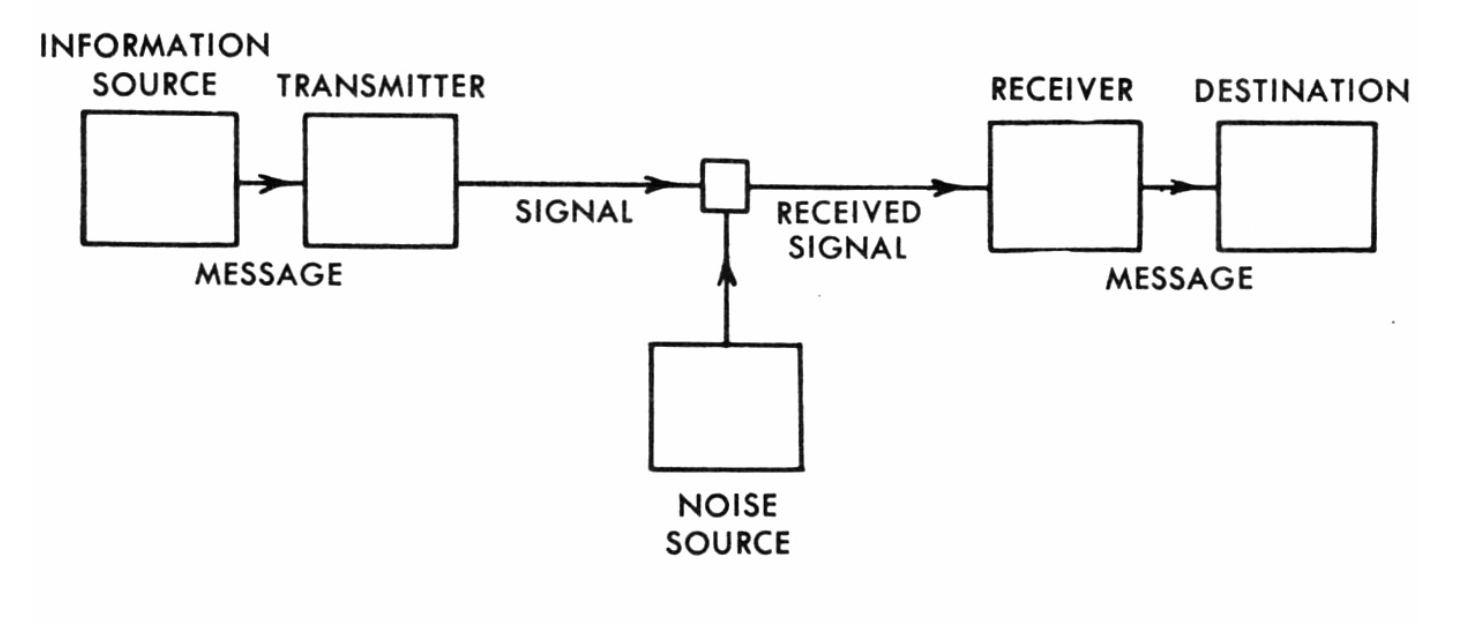

MM : The first challenge is to identify the normal behaviour of the person, to know his / her habits, so as to be able to detect changes that may be related to a decline in cognitive or motor skills. This involves collecting extensive information available through connected objects and lifestyle habits, which we use to define a “user profile”.

Then the data obtained is interpreted and a service is provided to the person. Our objective is not to monitor people but to identify specific areas of interest (leisure, shopping, exercise) and to encourage the person to take part in such activities, to avoid isolation, which could result in the deterioration of his / her quality of life or even health.
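As a rough illustration of the "normal behaviour, then detect changes" idea described above, the sketch below compares a recent period against a person's own baseline. It is not the project's actual method: the function name `deviates_from_baseline`, the use of a simple z-score, the threshold of 2.0 and the sample data are all assumptions made for this example.

```python
from statistics import mean, stdev


def deviates_from_baseline(history, recent, threshold=2.0):
    """Return True if the recent average falls more than `threshold`
    standard deviations below the person's own baseline.

    `history` and `recent` are lists of daily values (for instance,
    the number of outings per day). All names here are hypothetical.
    """
    if len(history) < 2 or not recent:
        raise ValueError("need at least two baseline values and one recent value")
    baseline_mean = mean(history)
    baseline_sd = stdev(history)
    if baseline_sd == 0:
        # Perfectly regular habits: any drop counts as a change.
        return mean(recent) < baseline_mean
    z_score = (mean(recent) - baseline_mean) / baseline_sd
    return z_score < -threshold


# Invented sample data: daily outing counts.
baseline = [2, 3, 2, 2, 3, 2, 3, 2, 2, 3, 2, 3, 2, 2]
last_week = [1, 0, 0, 1, 0, 0, 0]
print(deviates_from_baseline(baseline, last_week))
```

The point of comparing against the person's own history, rather than a population average, is that a "user profile" of this kind treats each person's habits as the reference.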

For this, we use decision-making and machine learning tools, as well as Semantic Web technologies. It’s the same principle, if you like, that Google uses to suggest appropriate search results (graph theory), with an additional difficulty in our case, related to the human factor. It is all about making a subjective interpretation of behavioural data using machines that have a logical interpretation. But that is also where the interest of this project lies, besides the strong societal issue. We work with doctors, psychologists, ergonomists, physiotherapists, occupational therapists, social science specialists, etc.

LPJ : Can you give us a few simple examples of such an implementation?

MM : To help maintain social ties and activity levels, let’s take the example of an elderly person who has the habit of going to his / her Community Centre and of taking his / her meals at the hawker centre. If the system detects that this person is going out less often, it will send the person a prompt encouraging him / her to get out of the home again, for example, “your friends are now at the hawker centre and they are going to eat, you should join them”. The system can also simultaneously notify the friends on their mobiles that the person has not been out for a long time and suggest that they visit him / her, for example.
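To make the example above concrete, here is a minimal sketch of how such prompts might be generated once a drop in outings has been noticed. It is purely illustrative and not taken from City4Age: the `Person` class, the `build_prompts` function, the `drop_ratio` parameter and the message wording are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Person:
    # Hypothetical profile: name, habitual outings per week, friends' names.
    name: str
    usual_outings_per_week: float
    friends: list


def build_prompts(person, outings_this_week, place="the hawker centre", drop_ratio=0.5):
    """Return a list of (recipient, message) pairs.

    Prompts are produced only when this week's outings fall below
    `drop_ratio` times the person's usual level (an assumed rule).
    """
    if outings_this_week >= drop_ratio * person.usual_outings_per_week:
        return []  # Habits look normal: nobody is notified.
    prompts = [
        (person.name, f"Your friends are now at {place}. You should join them.")
    ]
    for friend in person.friends:
        prompts.append(
            (friend, f"{person.name} has not been out much lately. Consider paying a visit.")
        )
    return prompts


# Invented example data.
resident = Person(name="Mdm Tan", usual_outings_per_week=6, friends=["Ali", "Mei"])
for recipient, message in build_prompts(resident, outings_this_week=1):
    print(f"To {recipient}: {message}")
```

Returning an empty list when habits look normal reflects the stated goal of encouraging rather than monitoring: nothing is sent unless there is a change worth acting on.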

Concerning the elderly who suffer from cognitive impairment, we work on the key affected functions, which are simple daily activities such as sleeping, hygiene and eating, as well as the risk of falls. For example, we install motion sensors in rooms to detect possible falls. We equip beds with fibre-optic sensors to monitor the person’s breathing and heart rate and spot potential sleep problems, apnea or cardiac risks, without disturbing the person’s rest.

LPJ : An application in Singapore?

MM : Our research is highly applied, with a strong industry focus and a view to quick deployment to the end-user. The solutions developed in the laboratory are validated in a show flat, then in clinical tests. At the moment, we are carrying out tests at the Khoo Teck Puat hospital to validate our non-intrusive sleep monitoring solutions.

Six pilot sites were chosen to validate the deployment of City4Age in situ, including Singapore for testing the maintenance of social ties and activity levels of the elderly, via the Community Centres in HDB neighbourhoods. The target is a group of around 20 people aged 70 and above, frail and suffering from mild cognitive impairment, who are integrated in a community, most often in a Senior Activity Centre. The test also involves the volunteers who help these elderly persons in their community.

LPJ : What is your background in Singapore?

MM : My research has concentrated mainly on technologies that can be used to assist dependent people. I came to Singapore for the first time in 2004 for the International Conference On Smart Homes and Health Telematics (ICOST), which I organised.

I then discovered a scientific ecosystem that I was not aware of (at that time, the focus was on the USA and some European cities). I was pleasantly surprised by the dynamism, the infrastructure in place and the building of new structures at a frantic pace, and above all, by a country that is very active in research on new technologies.

I have kept up exchanges with Singapore since then and finally decided to join the IPAL laboratory, to which I have been seconded by the Institut Mines-Télécom since 2009. I took over the direction of IPAL in 2015 to develop this research.

LPJ : What is your view of the MERLION programme?

MM : The PHC MERLION is very relevant and attractive for the creation of new teams. French diplomacy and MERLION provided undeniable leverage in launching projects and in consolidating collaborations with our partners.

The programme brings a framework that creates opportunities and encourages exchanges between researchers and international conference participants and also contributes to the emergence of new collaborations.

Without the MERLION programme, for example, we would not have been able to create the SINFRA symposium (Singapore-French Symposium) in 2009, which has become a biennial event for IPAL. In addition, the theme of « Inclusive Smart Cities and Digital Health » was introduced at IPAL thanks to a MERLION project headed by Dr. Dong Jin Song, who is today the co-director of IPAL for NUS.

Beyond its diplomatic and financial support, the Embassy also participates in IPAL’s activities by making one of its staff members available on a part-time basis; this person is integrated into the project team at IPAL.

LPJ : Do you have any upcoming collaborations?

MM : We are planning a new collaboration between IPAL and the University of Bordeaux – which specialises in social sciences – for a behavioural study to help us in our current research. We are thinking of applying for a new MERLION project in order to kickstart this new collaboration. It is true that the Social Sciences aspect, despite its importance in the well-being of the elderly and their entourage, is not very well-developed in the laboratory. This PHC MERLION proposal may well have the same leverage as the previous one.

Beyond the European project City4Age, IPAL has just signed a research collaboration agreement with PSA Peugeot Citroën on mobility aspects in the city and well-being, with a focus on the management of chronic diseases such as diabetes and respiratory illnesses. There is also an ongoing NRF (National Research Foundation) project with NUS (National University of Singapore), led by Dr. Nizar Quarti, a member of IPAL, on mobile and visual robotics.

Interview by Cécile Brosolo (www.lepetitjournal.com/singapour) and translation by Institut Français de Singapour, Ambassade de France à Singapour.

[1] IPAL : Image & Pervasive Access Lab – CNRS’s UMI based in Singapore.