OpenAirInterface: An open platform for establishing the 5G system of the future

In this article, we continue our exploration of the technological platforms of the Télécom & Société numérique Carnot institute. OpenAirInterface is the platform created by EURECOM to support mobile telecommunication systems such as 4G and 5G. Its goal is to develop access solutions for networks, covering both the radio network and the core network. It is built on an open source software suite.

The OpenAirInterface platform offers a 4G system built on a set of software programs. User companies can test and modify each of these programs individually, independently of the others. The goal is to develop the new features of what will become the 5G network. To find out more, we talked with Christian Bonnet, a communications systems researcher at EURECOM.

What is OpenAirInterface?

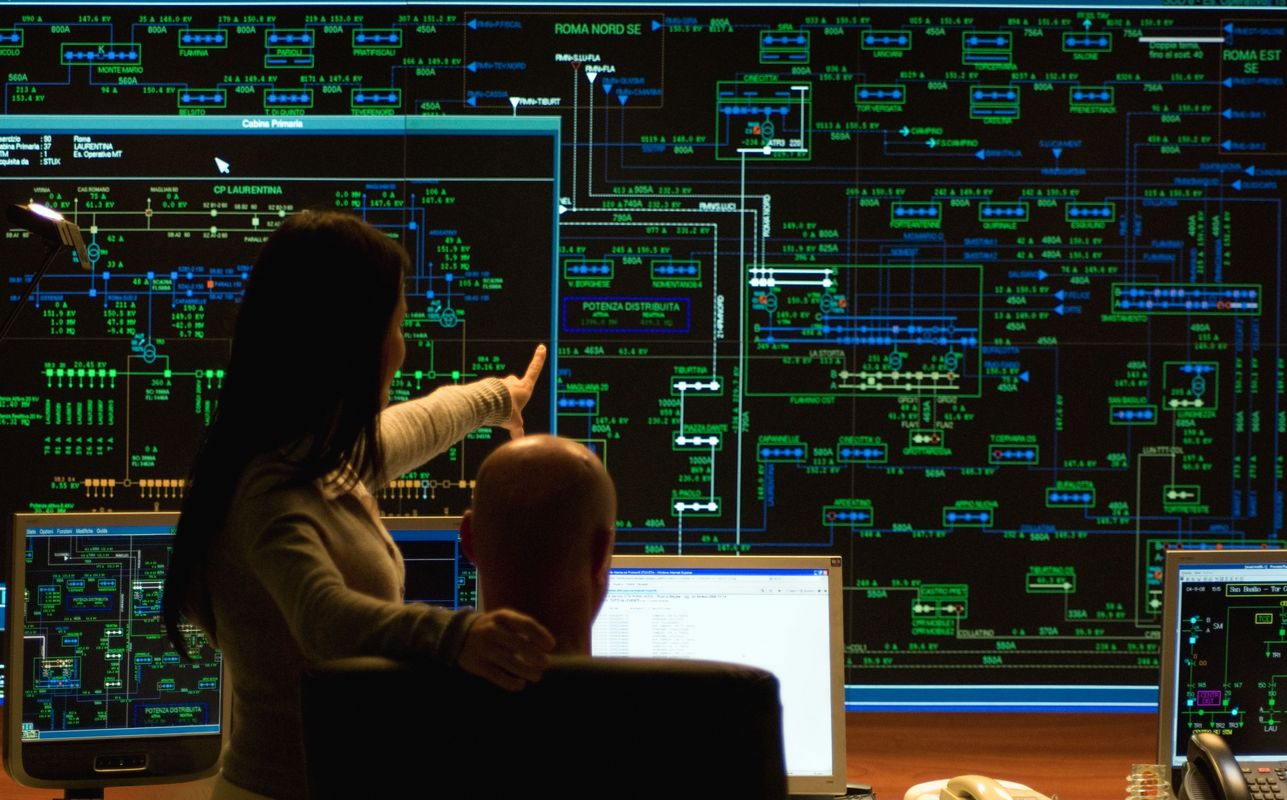

Christian Bonnet: This name covers two aspects. The first is the software implementation of a 4G-5G system. This involves software components that run in a mobile terminal, those that implement radio transmission, and those that run in the core network.

The second part of OpenAirInterface is an “endowment fund” created by EURECOM at the end of 2014, which is aimed at leading an open and global software Alliance (OSA – OpenAirInterface Software Alliance).

How does this software suite work?

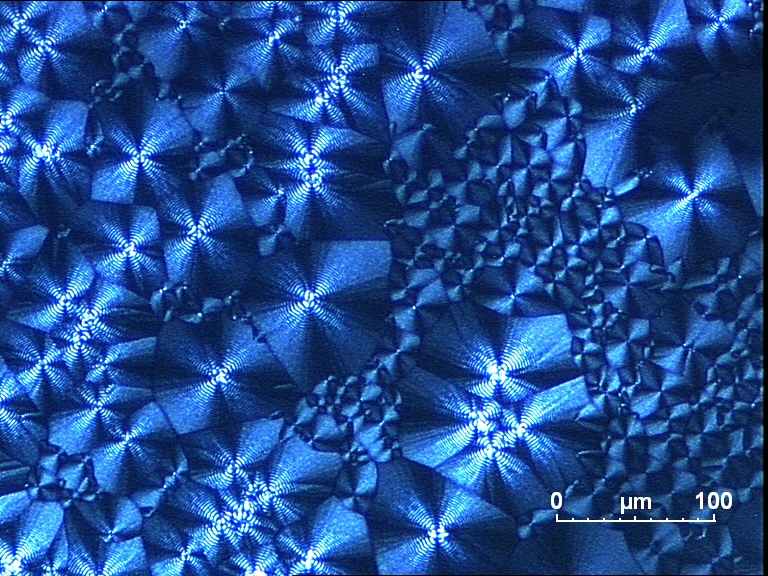

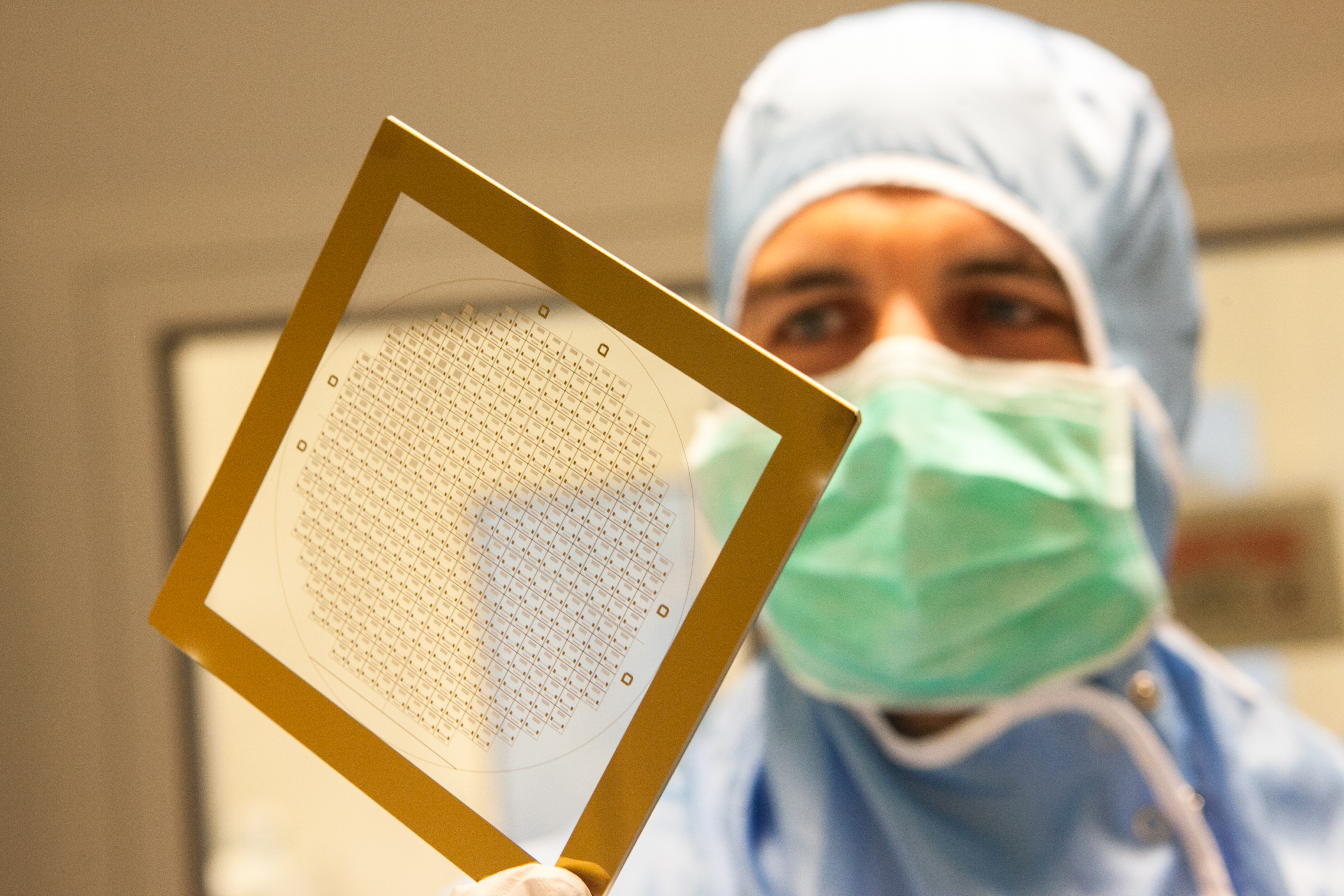

CB: The aim is to implement all the software components required for a complete 4G system: the modem of a mobile terminal, the software for base stations (relay stations), and the software for the specific routers used in the core network. We therefore handle all of the processing in the radio layer of the communication protocols (modulation, coding, etc.). The software runs on the Intel x86 processors found in PCs and computer clusters, which makes it compatible with cloud developments. To install it, you need one PC connected to a radio card to serve as the terminal, and a second PC to serve as the base station.
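To give a concrete sense of what "modulation" means in the radio layer, here is a minimal Python sketch of QPSK mapping, one of the modulation schemes used in LTE. It assumes the Gray-coded QPSK table of the LTE physical-layer specification (3GPP TS 36.211), in which the first bit of each pair sets the sign of the in-phase part and the second bit the sign of the quadrature part. It is not taken from the OpenAirInterface code base, and the function name `qpsk_map` is hypothetical.

```python
import math


def qpsk_map(bits: list[int]) -> list[complex]:
    """Map pairs of bits to QPSK symbols with unit average energy.

    Assumed LTE-style convention: bit b0 sets the in-phase (real) sign and
    bit b1 the quadrature (imaginary) sign, with 0 -> +1/sqrt(2) and
    1 -> -1/sqrt(2).
    """
    if len(bits) % 2 != 0:
        raise ValueError("QPSK needs an even number of bits")
    scale = 1 / math.sqrt(2)  # normalizes each symbol to magnitude 1
    symbols = []
    for i in range(0, len(bits), 2):
        b0, b1 = bits[i], bits[i + 1]
        symbols.append(complex((1 - 2 * b0) * scale, (1 - 2 * b1) * scale))
    return symbols
```

For example, the bit pair 0, 1 maps to the symbol (1 - j)/√2. Every symbol has magnitude 1, so the average energy per transmitted symbol is 1 whatever the input bits.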

Next, depending on what we need to do, we can use only part of the software implementation. For example, we can use commercial mobile terminals and attach them to a network made up of an OpenAirInterface base station and a commercial core network. Any combination is possible. We have thus built a complete 4G network chain, which these software programs allow us to evolve towards 5G.
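The idea that "any combination is possible" can be pictured as a chain of three interchangeable parts (terminal, base station, core network), each sourced either from OpenAirInterface or from a commercial vendor. The minimal Python sketch below simply enumerates those combinations; the `Deployment` class and the two source labels are hypothetical illustrations, not part of OpenAirInterface.

```python
from dataclasses import dataclass
from itertools import product

# The three parts of the network chain described in the interview.
COMPONENTS = ("terminal", "base station", "core network")
# Hypothetical labels for where each part comes from.
SOURCES = ("OpenAirInterface", "commercial")


@dataclass(frozen=True)
class Deployment:
    terminal: str
    base_station: str
    core_network: str

    def uses_oai(self) -> bool:
        """True if at least one part of the chain comes from OpenAirInterface."""
        return "OpenAirInterface" in (self.terminal, self.base_station, self.core_network)


def all_deployments() -> list[Deployment]:
    """Every way of choosing a source for each of the three parts."""
    return [Deployment(*combo) for combo in product(SOURCES, repeat=len(COMPONENTS))]
```

With two sources for each of three parts there are 2³ = 8 combinations, seven of which include at least one OpenAirInterface component. The setup described in the interview, a commercial terminal attached to an OpenAirInterface base station and a commercial core, corresponds to `Deployment("commercial", "OpenAirInterface", "commercial")`.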

Who contributes to OpenAirInterface?

CB: Since the Alliance was established, we have had several types of contributors. To date, the primary contributor has been EURECOM, because its teams developed the initial versions of all the software programs. These teams include research professors, postdoctoral researchers and PhD students, who can contribute to the platform while using it as an experimental environment for their research. In addition, through the software Alliance, we have gained new kinds of contributors: industrial stakeholders and research laboratories around the world. This broader base lets us receive contributions from both the academic and industrial worlds. (Editor’s note: Orange, TCL and Ercom are strategic OpenAirInterface partners, but the Alliance also includes many associate members, such as Université Pierre et Marie Curie (UPMC), IRT Bcom, INRIA and, of course, IMT.)

What does the Carnot Label represent for your activities?

CB: The Carnot Label played a significant role in our relationship with the Beijing University of Posts and Telecommunications (BUPT) in China, a university specializing in telecommunications. BUPT asked us for a quality label that would demonstrate recognition of our expertise. We presented the Carnot Label, and the university recognized it. The label demonstrates the commitment of OpenAirInterface developments to the industrial world, and it is also a seal of quality recognized far beyond the borders of France and Europe.

Why do companies and industrial stakeholders contact OpenAirInterface?

CB: To develop innovation projects, industrial stakeholders need advances in scientific research. They come to us because they know about our academic excellence and they also know that we speak the same language. It’s in our DNA! Since its very beginning, EURECOM has embodied the convergence of industry and research; we speak both languages. We have developed our own platforms and have faced the same issues that industrial stakeholders deal with every day. We are therefore a natural intermediary between these two worlds, and we listen attentively to the innovation projects they present.

You chose to develop your software suite as open source, why?

CB: It is a well-known model that is becoming more widespread. It facilitates access to knowledge and makes contributing easier. The software is covered by open source licenses that protect contributors and enable wider dissemination. This acts as a driving force and accelerates development and testing, which matters because every software component must be tested. The more widely the software is deployed around the world, the more easily everyone can use it. This leads to more testing and therefore more user feedback for improving existing versions, so the entire community benefits. This is a very important point, because even in industry, many components are starting to be developed using this model.

In addition to this approach, what makes OpenAirInterface unique?

CB: OpenAirInterface has brought innovation to open source software licensing. Many types of open source licenses exist; it is a vast field, and the industrial world relies on large patent portfolios. The context is as follows: on the one hand, our partners have industrial structures that rely on patent revenue; on the other, there is a community that wants free access to the software for development purposes. How can this apparent contradiction be resolved?

We have introduced a specific license that covers non-commercial use (everything related to research, innovation and testing) in the same way as classic open source software. For commercial use, we have established a patent declaration system: if industrial stakeholders include their own patented components, they need only declare them, and anyone wishing to use the software commercially then contacts the rights holders to negotiate. These conditions are known as FRAND (fair, reasonable and non-discriminatory) terms, and they reflect the practices that industrial players in the field follow with standardization organizations such as 3GPP. This procedure has been well accepted, which explains why Orange and Nokia (formerly Alcatel-Lucent Bell Labs), convinced of the benefits of this type of software license, are among the Alliance’s strategic partners.

What is the next development phase for OpenAirInterface?

CB: There are several areas of development. The projects we have proposed under the European H2020 program, whose results we are still awaiting, would allow us to make scientific advances that benefit the software suite. The Alliance has also defined major areas for development through joint projects, each led by an industrial partner and an academic partner. This structure allows us to bring together people from around the world who volunteer to take part in one of the steps towards 5G.

The TSN Carnot institute, a guarantee of excellence in partnership-based research since 2006.

Having first received the Carnot label in 2006, the Télécom & Société Numérique Carnot institute is the first national “Information and Communication Science and Technology” Carnot institute. Home to over 2,000 researchers, it is focused on the technical, economic and social implications of the digital transition. In 2016, the Carnot label was renewed for the second consecutive time, demonstrating the quality of the innovations produced through the collaborations between researchers and companies.

The institute encompasses Télécom ParisTech, IMT Atlantique, Télécom SudParis, Télécom École de Management, EURECOM, Télécom Physique Strasbourg, Télécom Saint-Étienne, École Polytechnique (LIX and CMAP laboratories), Strate École de Design and Femto Engineering.