Our exposure to electromagnetic waves: beware of popular belief

Joe Wiart, Télécom ParisTech – Institut Mines-Télécom, Université Paris-Saclay

This article is published in partnership with “La Tête au carré”, the daily radio show on France Inter dedicated to the popularization of science, presented and produced by Mathieu Vidard. The author of this text, Joe Wiart, discussed his research on the show broadcast on April 28, 2017, accompanied by Aline Richard, Science and Technology Editor for The Conversation France.

For over ten years, controlling exposure to electromagnetic waves and to radio frequencies in particular has fueled many debates, which have often been quite heated. An analysis of reports and scientific publications devoted to this topic shows that researchers are mainly studying the possible impact of mobile phones on our health. At the same time, according to what has been published in the media, the public is mainly concerned about base stations. Nevertheless, mobile phones and wireless communication systems in general are widely used and have dramatically changed how people around the world communicate and work.

Globally, the number of mobile phone users now exceeds 5 billion. And according to the findings of an Insee study, the percentage of individuals aged 18-25 in France who own a mobile phone is 100%! It must be noted that the use of this method of communication is far from being limited to simple phone calls — by 2020 global mobile data traffic is expected to represent four times the overall internet traffic of 2005. In France, according to the French regulatory authority for electronic and postal communications (ARCEP), over 7% of the population connected to the internet exclusively via smartphones in 2016. And the skyrocketing use of connected devices will undoubtedly accentuate this trend.

Smartphone Zombies. Ccmsharma2/Wikimedia

The differences in perceptions of the risks associated with mobile phones and base stations can be explained in part by the fact that the two are not seen as being related. Moreover, while exposure to electromagnetic waves is considered to be “voluntary” for mobile phones, individuals are often said to be “subjected” to waves emitted by base stations. This helps explain why, despite the widespread use of mobiles and connected devices, the deployment of base stations remains a hotly debated issue, often focusing on health impacts.

In practice, national standards for limiting exposure to electromagnetic waves are based on the recommendations of the International Commission on Non-Ionizing Radiation Protection (ICNIRP) and on scientific expertise. A number of studies have been carried out on the potential effects of electromagnetic waves on our health. Of course, research is still being conducted in order to keep pace with the constant advancements in wireless technology and its many uses. This research is even more important since radio frequencies from mobile telephones have now been classified as “possibly carcinogenic to humans” (group 2B) following a review conducted by the International Agency for Research on Cancer.

Given the great and ever-growing number of young people who use smartphones and other mobile devices, this heightened vigilance is essential. In France, the National Environmental and Occupational Health Research Programme (PNREST) of the National Agency for Food, Environmental and Occupational Health Safety (Anses) is responsible for monitoring the situation. And to address public concerns about base stations (of which there are 50,000 located throughout France), many municipalities have discussed charters to regulate where they may be located. Cities such as Paris, which signed such a charter in 2003 in an effort to set an example for France and for other major European cities, officially limit exposure from base stations through a signed agreement with France’s three major operators.

Hillside in Miramont, Hautes Pyrenees France. Florent Pécassou/Wikimedia

This charter was updated in 2012 and was further discussed at the Paris Council in March, in keeping with the Abeille law on limiting exposure to electromagnetic fields, which was proposed to the National Assembly in 2013 and passed in February 2015. Yet it is important to note that this initiative, like so many others, concerns only base stations, despite the fact that exposure to electromagnetic waves and radio frequencies comes from many other sources. Focusing exclusively on base stations resolves the problem only partially. Exposure from mobile phones, for users and for those near them, must also be taken into consideration, along with other sources.

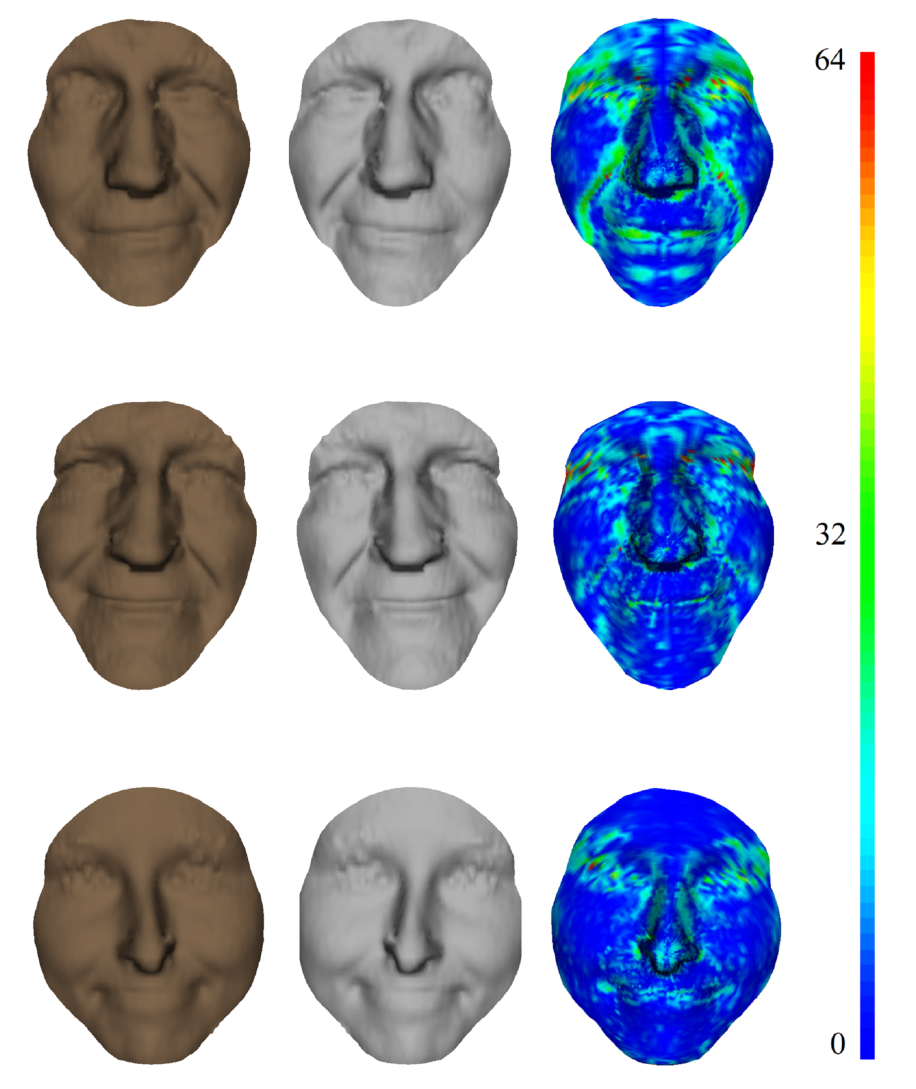

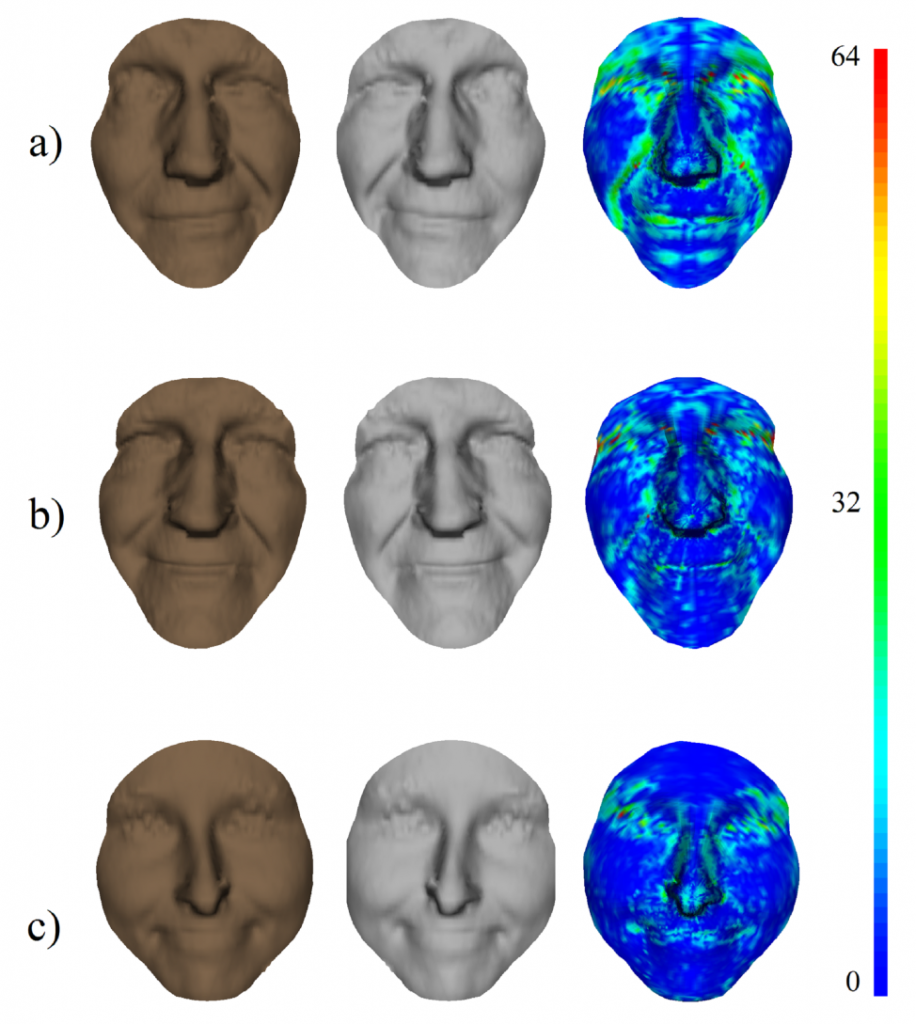

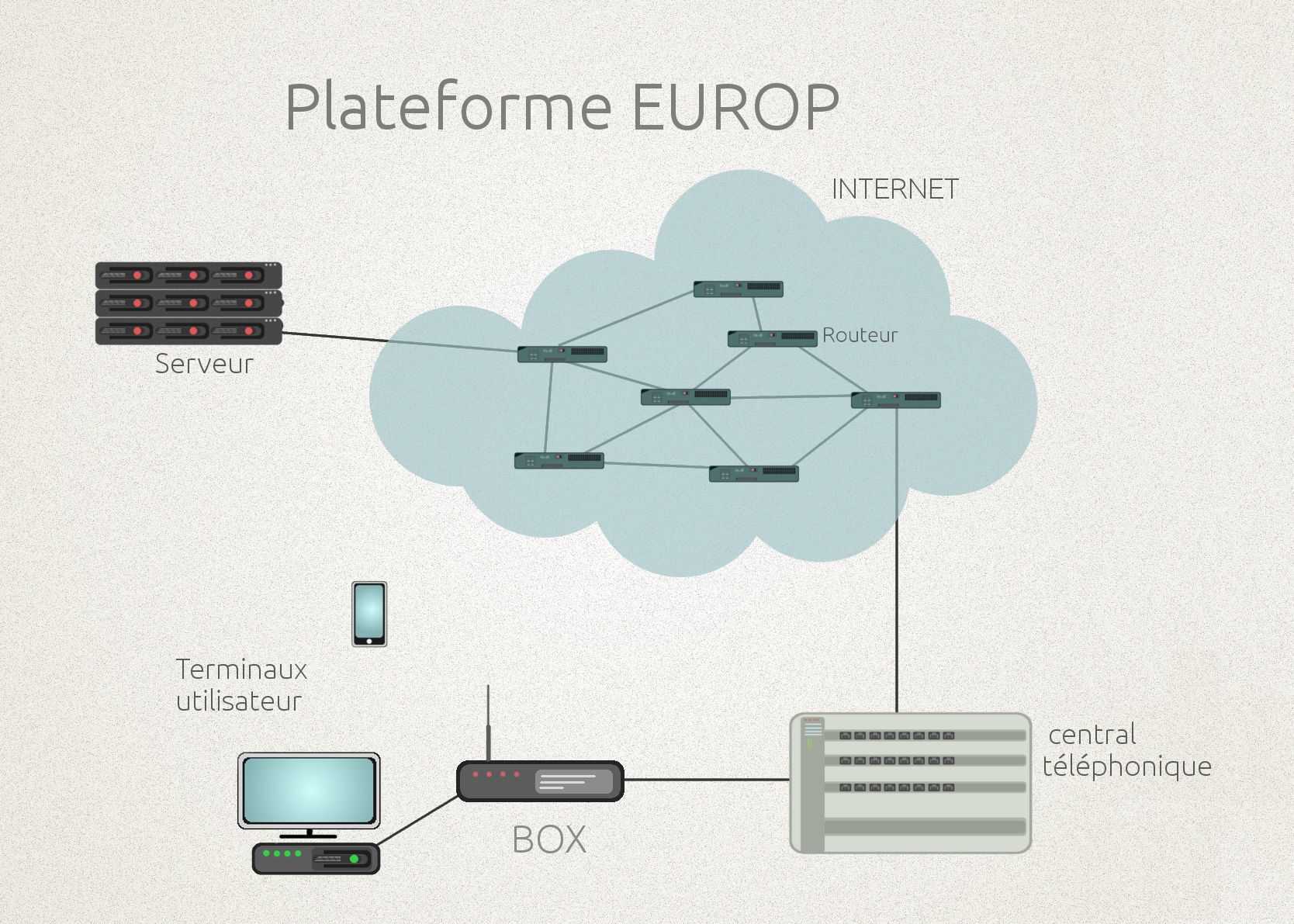

In practice, the portion of exposure to electromagnetic waves which is linked to base stations is far from representing the majority of overall exposure. As many studies have demonstrated, exposure from mobile phones is much more significant. Fortunately, the deployment of 4G, followed by 5G, will not only improve speed but will also contribute to significantly reducing the power radiated by mobile phones. Small cell network architecture with small antennas supplementing larger ones will also help limit radiated power. It is important to study solutions resulting in lower exposure to radio frequencies at different levels, from radio devices to network architecture or management and provision of services. This is precisely what the partners in the LEXNET European project set about doing in 2012, with the goal of cutting public exposure to electromagnetic fields and radio frequency in half.

In the near future, fifth-generation networks will use several frequency bands and various architectures in a dynamic fashion, enabling them to handle both increased speed and the proliferation of connected devices. There will be no choice but to effectively consider the network-terminal relationship as a duo, rather than treating the two as separate elements. This new paradigm has become a key priority for researchers, industry players and public authorities alike. And from this perspective, the latest discussions about the location of base stations and renewing the Paris charter prove to be emblematic.

Joe Wiart, Chairholder in research on Modeling, Characterization and Control of Exposure to Electromagnetic Waves at Institut Mines-Télécom, Télécom ParisTech – Institut Mines-Télécom, Université Paris-Saclay

This article was originally published in French in The Conversation France.

The TSN Carnot institute, a guarantee of excellence in partnership-based research since 2006
