Nathalie Redon, IMT Lille Douai – Institut Mines-Télécom

Last May, Paris City Hall launched “Pollutrack”: a fleet of microsensors placed on the roofs of vehicles traveling throughout the capital to measure, in real time, the amount of fine particles in the air. A year earlier, Rennes invited residents to help assess air quality using individual sensors.

For several years, high concentrations of fine particles have regularly been observed in France, and air pollution has become a major health concern: each year, 48,000 premature deaths in France are linked to it.

The winter of 2017 was a prime example of this phenomenon, with daily levels reaching up to 100 µg/m³ in certain areas and pollution persisting for several days because of cold, anticyclonic weather.

A police sketch of the fine particle

A fine particle (particulate matter, abbreviated PM) is characterized by three main factors: its size, nature and concentration.

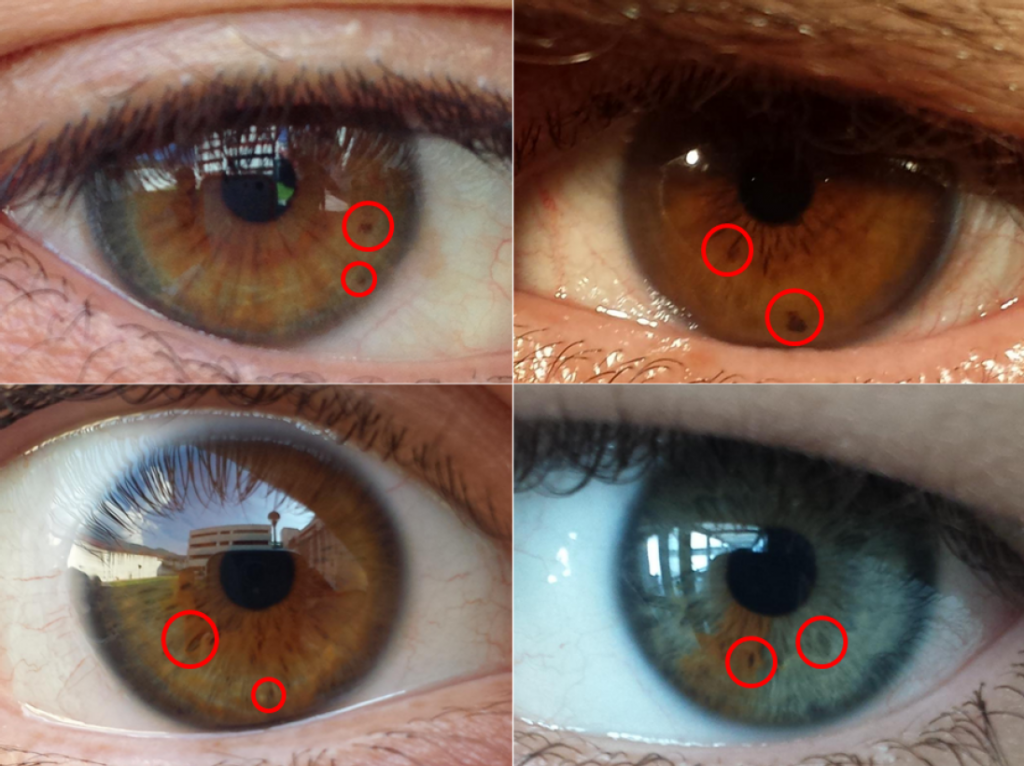

Its size, or rather its diameter, is one of the factors that affect our health: PM10 have a diameter of less than 10 µm (the coarse fraction lies between 2.5 and 10 µm), and PM2.5 a diameter of less than 2.5 µm. By way of comparison, such a particle is approximately 10 to 100 times finer than a human hair. And this is the problem: the smaller the particles we inhale, the more deeply they penetrate the lungs, causing inflammation of the pulmonary alveoli and affecting the cardiovascular system.
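The size classes can be illustrated with a small classifier. This is a minimal sketch that assumes sharp cut-offs at 2.5 and 10 µm; in practice the classes are defined by aerodynamic diameter, and samplers separate them with gradual rather than perfect cut-off efficiencies. The function name `size_fraction` and its labels are hypothetical.

```python
def size_fraction(diameter_um: float) -> str:
    """Return the size class of a particle with the given diameter in µm.

    Assumes idealized, sharp cut-offs (an illustrative simplification).
    """
    if diameter_um <= 0:
        raise ValueError("diameter must be positive")
    if diameter_um <= 2.5:
        # Fine fraction: counted in PM2.5 and also included in PM10
        return "PM2.5"
    if diameter_um <= 10:
        # Coarse fraction: between 2.5 and 10 µm, part of PM10 only
        return "PM10 coarse"
    return "larger than PM10"


print(size_fraction(1.0), size_fraction(5.0), size_fraction(40.0))
```

Note that PM2.5 particles are a subset of PM10, which is why a PM10 mass concentration is always at least as large as the PM2.5 one measured at the same place and time.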

The nature of these fine particles is also a problem. They are made up of a mixture of organic and mineral substances that are more or less hazardous: water and carbon form a core around which sulfates, nitrates, allergens, heavy metals and hydrocarbons with proven carcinogenic properties condense.

As for their concentration, the greater it is in terms of mass, the greater the health risk. The World Health Organization recommends that personal exposure not exceed 25 µg/m³ for PM2.5 as a 24-hour average, and 50 µg/m³ for PM10. In recent years, these thresholds have regularly been exceeded, especially in large cities.
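Checking a day of measurements against these guideline values comes down to averaging the readings over 24 hours and comparing the result. The sketch below assumes one reading per hour; the function name `daily_mean_exceeds` is hypothetical, and the values are the ones quoted above. The WHO revised its guidelines downward in 2021, so they are kept in a single dictionary that is easy to update.

```python
from statistics import mean

# 24-hour guideline values quoted in the text, in µg/m³
WHO_24H_GUIDELINE = {"PM2.5": 25.0, "PM10": 50.0}


def daily_mean_exceeds(hourly_values, pollutant):
    """Return (24-hour mean, whether it exceeds the guideline value).

    Assumes exactly one reading per hour (a hypothetical input format).
    """
    if len(hourly_values) != 24:
        raise ValueError("expected 24 hourly values")
    avg = mean(hourly_values)
    return avg, avg > WHO_24H_GUIDELINE[pollutant]


avg, exceeded = daily_mean_exceeds([30.0] * 24, "PM2.5")
print(avg, exceeded)
```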

The website of the BreatheLife campaign, created by the WHO, where you can enter the name of a city to find out its air quality. Shown here: the example of Grenoble.

Humans are not the only ones affected by these fine particles: when they are deposited, they enrich natural environments, which can lead to eutrophication, a phenomenon in which excess nutrients, such as the nitrogen carried by the particles, accumulate in soil or water. This can, for example, cause algal blooms that suffocate local ecosystems. In addition, as the nitrogen reacts chemically with its surroundings, this enrichment generally leads to soil acidification. More acidic soil becomes drastically less fertile: vegetation is depleted, and slowly but inexorably, species die off.

Where do they come from?

Fine particle emissions originate primarily from human activities: 60% of PM10 and 40% of PM2.5 are generated by wood combustion, especially fireplace and stove heating, and 20% to 30% come from automotive fuels, with diesel the leading contributor. Finally, nearly 19% of national PM10 emissions and 10% of PM2.5 emissions result from agricultural activities.

To help public authorities limit and control these emissions, the scientific community must improve the identification and quantification of these sources of emissions, and must gain a better understanding of their spatial and temporal variability.

Complex and costly readings

Today, fine particle readings are primarily based on two techniques.

The first involves collecting samples on filters over an entire day, which are then analyzed in a laboratory. Aside from the delay in obtaining the data, the analytical equipment is costly and complicated to operate, and a certain level of expertise is required to interpret the results.

The second technique involves real-time measurements, using instruments such as the multi-wavelength Aethalometer AE33. This device is relatively expensive, at over €30,000, but has the advantage of providing a measurement every minute or even more often. It can also monitor black carbon (BC), which makes it possible to identify particles originating specifically from combustion. The aerosol chemical speciation monitor (ACSM) is also worth mentioning: it identifies the chemical nature of the particles, with a measurement every 30 minutes. However, its cost of €150,000 limits access to this type of instrument to expert laboratories.

Given their cost and sophistication, only a limited number of sites in France are equipped with these instruments. Combined with simulations, the analysis of their daily averages makes it possible to produce maps on a 50 km by 50 km grid.

Since these measurement methods cannot produce real-time maps at finer spatio-temporal scales (on the order of a square kilometer and a minute), scientists have recently begun turning to new tools: particle microsensors.

How do microsensors work?

Small, light, portable, inexpensive, easy to use, connected… microsensors appear to offer many advantages that complement the range of heavy analytical techniques mentioned above.

But how credible are these new devices? To answer this question, we need to look at their physical and metrological characteristics.

At present, several manufacturers are competing for the microsensor market: the British company Alphasense, the Japanese company Shinyei and the American manufacturer Honeywell. They all use the same measurement method: optical detection using a laser diode.

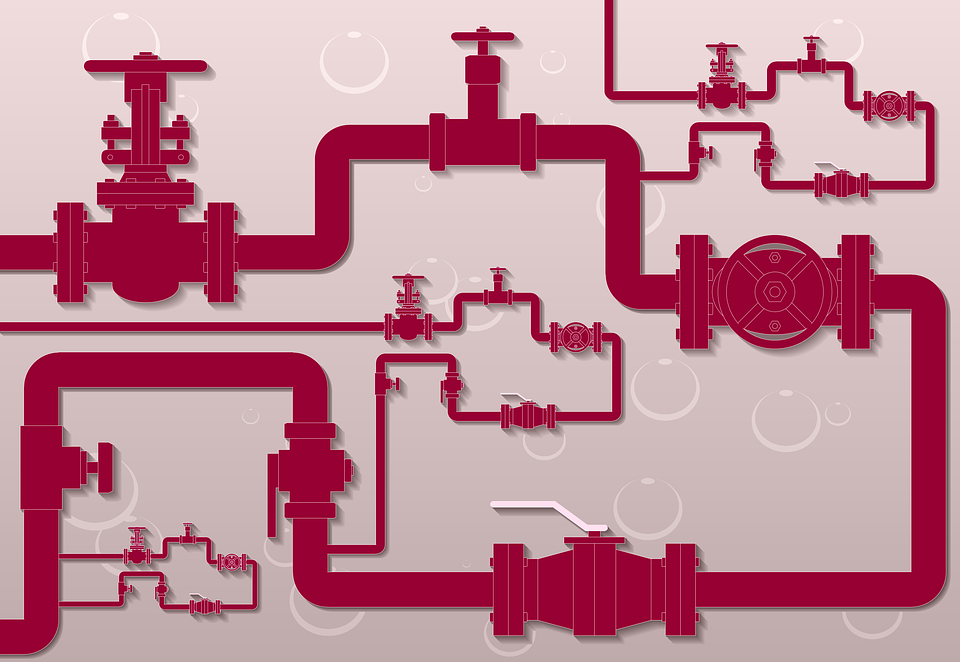

The principle is simple: air drawn in by a fan flows through the detection chamber, which is configured to remove the larger particles and retain only the fine ones. The particle-laden air then crosses the beam emitted by the laser diode, which is focused by a lens.

A photodetector placed opposite the emitted beam records the drops in light intensity caused by passing particles and counts them by size range. The photodiode's electrical signal is then sent to a microcontroller that processes the data in real time: if the air flow rate is known, the number concentration can be determined, and from it the mass concentration, based on the size ranges, as seen in the figure below.
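The step from particle counts to a mass concentration can be sketched as follows. This is a minimal illustration, not the firmware of any sensor mentioned here: it assumes spherical particles of uniform density (1.65 g/cm³ is a placeholder value), represents each size bin by the geometric mean of its edges, and takes the flow rate as known. The `SizeBin` class, the `mass_concentration` function and all parameter values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class SizeBin:
    lower_um: float  # lower edge of the size range, µm
    upper_um: float  # upper edge of the size range, µm
    count: int       # particles counted in this range during the interval

    @property
    def mid_um(self) -> float:
        # Representative diameter: geometric mean of the bin edges
        return math.sqrt(self.lower_um * self.upper_um)


def mass_concentration(bins, flow_l_per_min, interval_s,
                       density_g_cm3=1.65, max_diameter_um=2.5):
    """Estimate mass concentration (µg/m³) of particles below a cut-off size."""
    # Volume of air that passed through the chamber, in m³ (1 L = 1e-3 m³)
    sampled_m3 = flow_l_per_min / 1000.0 / 60.0 * interval_s
    total_ug_m3 = 0.0
    for b in bins:
        if b.upper_um > max_diameter_um:
            continue  # bin extends beyond the requested size class
        number_per_m3 = b.count / sampled_m3          # number concentration
        diameter_cm = b.mid_um * 1e-4                  # 1 µm = 1e-4 cm
        volume_cm3 = math.pi / 6.0 * diameter_cm ** 3  # volume of a sphere
        mass_ug = density_g_cm3 * volume_cm3 * 1e6     # g -> µg
        total_ug_m3 += number_per_m3 * mass_ug
    return total_ug_m3


bins = [SizeBin(0.3, 1.0, 12000), SizeBin(1.0, 2.5, 800), SizeBin(2.5, 10.0, 60)]
pm25 = mass_concentration(bins, flow_l_per_min=1.0, interval_s=60)
pm10 = mass_concentration(bins, flow_l_per_min=1.0, interval_s=60, max_diameter_um=10.0)
print(round(pm25, 2), round(pm10, 2))
```

The density and the choice of a representative diameter per bin dominate the result, which is one reason why mass values from these sensors need the calibration discussed further on.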

An example of a particle sensor (brand: Honeywell, HPM series)

Prices range from €20 for the most basic version to €1,000 for the most elaborate, fully integrated systems (including acquisition and data-processing software and transmission of measurements via the cloud). This is very affordable compared with the techniques mentioned above.

Can we trust microsensors?

First, it should be noted that these microsensors provide no information on the chemical composition of the fine particles; only the techniques described above can do that. This is a real limitation, because knowing the nature of the particles is what provides information about their source.

Furthermore, the system microsensors use to separate particles by size is often rudimentary: field tests have shown that while the finest particles (PM2.5) are monitored fairly well, it is often difficult to isolate the PM10 fraction. However, the finest particles are precisely the ones that affect our health the most, so this shortcoming is not a major problem.

As for detection and quantification limits, new sensors can reach reasonable thresholds of approximately 10 µg/m³. Their sensitivity is between 2 and 3 µg/m³ (with an uncertainty of approximately 25%), which is more than sufficient for tracking how particle concentrations change over a range of up to 200 µg/m³.
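These figures suggest how a single reading might be reported. The sketch below is an illustration under stated assumptions: it treats the 10 µg/m³ threshold as a quantification limit, applies the 25% uncertainty as a relative band around each reading, and flags values above 200 µg/m³. The function `describe_reading` and its output format are hypothetical.

```python
def describe_reading(value_ug_m3, loq=10.0, rel_uncertainty=0.25, max_range=200.0):
    """Format a microsensor reading with an assumed relative uncertainty band."""
    if value_ug_m3 < loq:
        # Below the assumed quantification limit: report only an upper bound
        return f"< {loq:g} µg/m³ (below quantification limit)"
    low = value_ug_m3 * (1 - rel_uncertainty)
    high = value_ug_m3 * (1 + rel_uncertainty)
    note = " (outside stated range)" if value_ug_m3 > max_range else ""
    return f"{value_ug_m3:g} µg/m³ (roughly {low:g} to {high:g}){note}"


print(describe_reading(40.0))  # prints "40 µg/m³ (roughly 30 to 50)"
print(describe_reading(5.0))
```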

However, over time the fluidics and optical detectors of these systems tend to become clogged, which introduces errors into the results. Microsensors must therefore be calibrated regularly against reference data, such as the data published by air pollution monitoring agencies.
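A common way to perform such a calibration, assumed here rather than described in the article, is to place the microsensor next to a reference station, fit a straight line mapping its raw readings onto the reference values by least squares, and apply that line to later readings. The functions `fit_calibration` and `correct` are hypothetical names.

```python
def fit_calibration(sensor, reference):
    """Least-squares fit of reference = slope * sensor + intercept."""
    if len(sensor) != len(reference) or len(sensor) < 2:
        raise ValueError("need at least two paired readings")
    n = len(sensor)
    mean_x = sum(sensor) / n
    mean_y = sum(reference) / n
    sxx = sum((x - mean_x) ** 2 for x in sensor)
    if sxx == 0:
        raise ValueError("sensor readings must vary")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(sensor, reference))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def correct(raw, slope, intercept):
    """Apply the fitted calibration line to a raw sensor reading."""
    return slope * raw + intercept
```

Because clogging makes the sensor drift, the fit is only valid for a limited period and would be repeated at each new comparison with the reference data.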

This type of tool is therefore ideally suited to instantaneous, semi-quantitative diagnosis. The idea is not to provide an extremely precise measurement, but rather to report dynamic changes in particulate air pollution on a low/medium/high scale. Because these tools are inexpensive, they can be deployed in large numbers in the field, and can therefore help provide a better understanding of particulate matter emissions.
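Reporting on a low/medium/high scale amounts to mapping each calibrated reading onto a few bands. In the sketch below, the band edges (25 and 50 µg/m³) are hypothetical values chosen for illustration, not an official air quality index; the names `BANDS` and `level` are likewise assumptions.

```python
# Hypothetical band edges in µg/m³ (upper bound, label); NOT an official index
BANDS = [(25.0, "low"), (50.0, "medium")]


def level(value_ug_m3):
    """Map a PM2.5 reading onto a coarse low/medium/high scale."""
    for upper, label in BANDS:
        if value_ug_m3 < upper:
            return label
    return "high"


readings = [12.0, 31.0, 78.0]
print([level(v) for v in readings])  # prints ['low', 'medium', 'high']
```

Coarse bands are forgiving of the roughly 25% uncertainty: a reading well inside a band stays there even if its exact value is somewhat off.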

Nathalie Redon, Assistant Professor, Co-Director of the “Sensors” Laboratory, IMT Lille Douai – Institut Mines-Télécom

This article was originally published in French on The Conversation.