Climate change as seen from space

René Garello, IMT Atlantique – Institut Mines-Télécom

The French National Centre for Space Research (CNES) recently presented two projects for monitoring greenhouse gas emissions (CO2 and methane) with satellite sensors. The satellites, due for launch after 2020, will supplement measurements carried out in situ.

Nor is this the first program worldwide to measure climate change from space: the European Sentinel satellites have been measuring a number of parameters since Sentinel-1A was launched on April 3, 2014, under the aegis of the European Space Agency (ESA). These satellites belong to the Copernicus Program, Europe's contribution to the Global Earth Observation System of Systems (GEOSS).

Since Sentinel-1A, its successors Sentinel-1B, 2A, 2B and 3A have all been launched successfully, each equipped with sensors serving different functions. The first two, Sentinel-1A and 1B, carry a radar imaging system for so-called “all-weather” data acquisition: radar wavelengths are unaffected by cloud cover and work by night as well as by day. Infrared optical observation systems allow the next two satellites to monitor ocean surface temperature. Sentinel-3A carries four instruments, measuring radiometry, temperature, altimetry and surface topography (both ocean and land).

The launch of these satellites builds on the numerous space missions already in place at European and global scale. The data they record and transmit give researchers access to many parameters that reveal the planet’s “pulse”. Part of these data concern the ocean (waves, wind, currents, temperatures, etc.) and show how large water masses evolve. The ocean acts as an engine of the climate, and even small variations in it are directly linked to changes in the atmosphere, with consequences that can sometimes be dramatic (hurricanes). Data collected by sensors over land surfaces concern variations in humidity and soil cover, whose consequences can also be significant (drought, deforestation, biodiversity, etc.).

Incredible image of the eye of #hurricane #Jose captured on Saturday by the satellite #Sentinel2 🛰️ Pic @anttilip pic.twitter.com/kphDZG8RCu

— Météo-France (@meteofrance) September 11, 2017

Masses of data to process

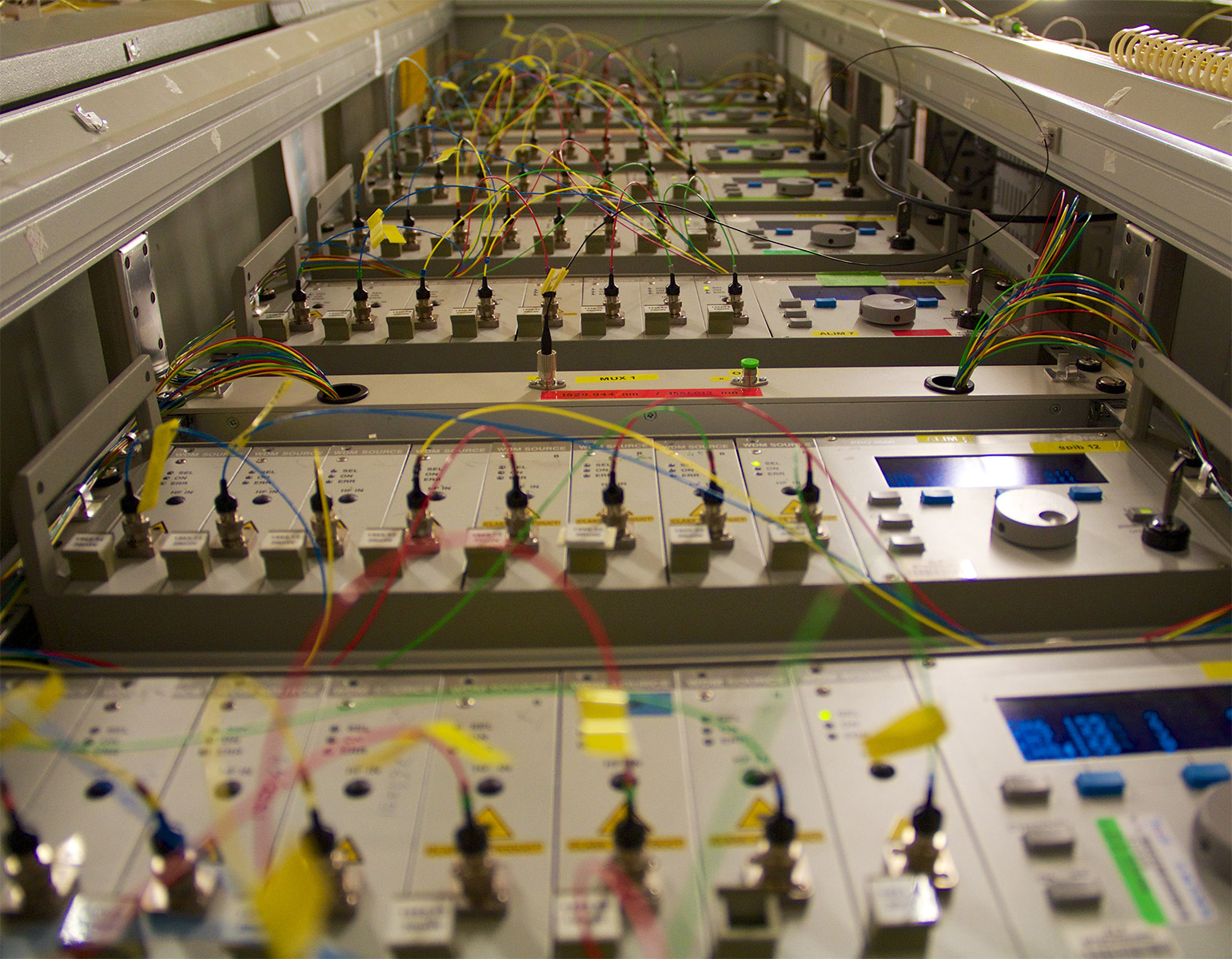

Satellite data are processed at several levels, ranging from research labs to more operational uses, as well as the data formatting performed by the European Space Agency.

The scientific community is focusing increasingly on “essential variables” (physical, biological, chemical, etc.) as defined by groups working on climate change, in particular the Global Climate Observing System (GCOS) in the 1990s. Each such variable is a measurement, or group of measurements, that contributes critically to characterizing the climate.

A considerable number of these variables are, of course, precise enough to serve as indicators for assessing whether the UN’s Sustainable Development Goals are being achieved.

The Boreal AJS 3 drone is used to take measurements at a very low altitude above the sea

These “essential variables” can be identified after processing, by combining satellite data with data from a multitude of other sensors, whether on land, under the sea or in the air. Technical progress (such as images with higher spatial or temporal resolution) provides increasingly precise measurements.

The Sentinel program serves multiple fields of application, including environmental protection, urban management, regional and local spatial planning, agriculture, forestry, fishing, healthcare, transport, sustainable development, civil protection and even tourism. Among all these concerns, climate change is at the center of the program’s attention.

Europe’s effort has been considerable, with an investment of over €4 billion between 2014 and 2020. However, the project also has very significant economic potential, particularly in terms of innovation and job creation: economic gains in the region of €30 billion are expected between now and 2030.

How can we navigate these oceans of data?

Researchers, as well as key players in the socio-economic world, are constantly seeking more precise and comprehensive observations. However, as space-based observation coverage grows over the years, the mass of data obtained is becoming overwhelming.

Whereas a smartphone holds a few gigabytes of memory, space-based observation generates petabytes (millions of gigabytes) of data to be stored, and soon we may even be talking in exabytes, that is, billions of billions of bytes. We therefore need to develop methods for navigating these oceans of data, bearing in mind that the information in question represents only a fraction of what is out there. Even with masses of data available, the number of essential variables is actually relatively small.
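To put these orders of magnitude side by side, here is a small Python sketch using decimal (SI) storage units, where each prefix step is a factor of 1,000. The 64 GB phone capacity and the function name `smartphones_needed` are hypothetical illustrations, not figures from the article.

```python
# Decimal (SI) storage units: each prefix step is a factor of 1,000.
GIGABYTE = 10**9
PETABYTE = 10**15
EXABYTE = 10**18


def smartphones_needed(volume_bytes: int, phone_bytes: int = 64 * GIGABYTE) -> int:
    """Number of phones (hypothetical 64 GB each) needed to hold volume_bytes, rounded up."""
    return -(-volume_bytes // phone_bytes)  # integer ceiling division


print(PETABYTE // GIGABYTE)          # → 1000000 gigabytes in one petabyte
print(EXABYTE // GIGABYTE)           # → 1000000000 gigabytes in one exabyte
print(smartphones_needed(PETABYTE))  # → 15625 phones of 64 GB for one petabyte
```

A single exabyte would fill a thousand times as many such phones, which is why storage alone is not the main challenge: finding the few relevant variables in that volume is.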

Identifying phenomena on the Earth’s surface

The most recent work seeks the best possible methods for identifying phenomena from signals and images of a given area of the Earth. On ocean surfaces, these phenomena include waves and currents; on land, they include forest cover, wetlands, coastal and flooded areas, and urban expansion. All this information can help us predict extreme events (hurricanes), manage post-disaster situations (earthquakes, tsunamis) and monitor biodiversity.
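One of the simplest ways to flag flooded areas in radar images is thresholding: calm open water tends to reflect the radar pulse away from the sensor, so it appears dark, with low backscatter. The sketch below assumes a grid of backscatter values already calibrated to decibels; the -18 dB threshold, the function names and the pixel values are hypothetical illustrations, not the author’s method or an ESA processing chain.

```python
from typing import List

# Hypothetical threshold in decibels; real values depend on sensor, polarization and scene.
WATER_THRESHOLD_DB = -18.0


def water_mask(backscatter_db: List[List[float]],
               threshold_db: float = WATER_THRESHOLD_DB) -> List[List[bool]]:
    """Flag pixels whose radar backscatter falls below threshold_db as likely open water."""
    return [[value < threshold_db for value in row] for row in backscatter_db]


def water_fraction(mask: List[List[bool]]) -> float:
    """Share of pixels flagged as water (0.0 for an empty mask)."""
    total = sum(len(row) for row in mask)
    flagged = sum(sum(row) for row in mask)  # True counts as 1
    return flagged / total if total else 0.0


# Toy 3x3 backscatter grid in dB (invented values, not real Sentinel-1 data).
scene = [
    [-8.5, -9.1, -21.0],
    [-7.9, -19.5, -22.3],
    [-10.2, -20.8, -23.1],
]
mask = water_mask(scene)
print(round(water_fraction(mask), 3))  # 5 of the 9 pixels fall below the threshold
```

In practice, wind-roughened water, radar shadow and smooth surfaces such as tarmac complicate this picture, which is one reason researchers combine radar with optical data and other sensors.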

The huge and devastating #napafire seen by #OLCI on october 9th #Sentinel3 @CopernicusEU @CopernicusEMS @ESA_EO @USGS pic.twitter.com/e2P8QG7nfj

— antonio vecoli (@tonyveco) October 10, 2017

The next stage is to automate processing by developing algorithms that allow computers to find the relevant variables across as many databases as possible. Intrinsic parameters and higher-level information, such as physical models, human behavior and social networks, should then be incorporated.

This multidisciplinary approach is a new trend that should allow us to define the notion of “climate change” more concretely, going beyond measurement alone to respond to those primarily concerned: all of us!

René Garello, Professor in Signal and Image Processing, “Image and Information Processing” department, IMT Atlantique – Institut Mines-Télécom

The original version of this article was published on The Conversation.