Mathematical tools for analyzing the development of brain pathologies in children

Magnetic resonance imaging (MRI) enables doctors to obtain precise images of the structure and anatomy of a patient’s brain, and of the pathologies that may affect it. However, radiologists need specific mathematical tools to analyze and interpret these complex images. While such tools exist for images of the adult brain, they cannot be applied directly to brain images of young children, newborns or premature infants. The Franco-Brazilian project STAP, which includes Télécom ParisTech among its partners, seeks to address this need by developing mathematical models and MRI analysis algorithms for the youngest patients.

An adult’s brain and that of a developing newborn are quite different. An infant’s white matter is not yet fully myelinated, and some anatomical structures are much smaller. Because of these features, MRI images of a newborn differ from those of an adult. “There are also difficulties related to how the images are acquired, since the acquisition process must be fast. We cannot make a young child remain still for a long period of time,” adds Isabelle Bloch, a researcher in mathematical modeling and spatial reasoning at Télécom ParisTech. “The resolution is therefore lower, because the slices are thicker.”

Tools are needed to analyze and interpret these complex images and to assist doctors in their diagnoses and decisions. “There are many applications for processing MRI images of adult brains, but in pediatrics there is a real gap that must be filled,” the researcher observes. “This is why, in the STAP project, we are working to design tools for processing and interpreting images of young children, newborns and premature infants.”

The STAP project, funded by the ANR and FAPESP, was launched in January and will run for four years. The partners include the University of São Paulo in Brazil, the pediatric radiology departments at São Paulo Hospital and Bicêtre Hospital, the University of Paris Dauphine and Télécom ParisTech. “Three applied mathematics and IT teams are working on this project, along with two teams of radiologists. Three teams in France, two in Brazil… The project is both international and multidisciplinary,” says Isabelle Bloch.

Rare and heterogeneous data

To develop their image analysis tools, the researchers collected MRI scans of young children and newborns from the partner hospitals. “We did not acquire data specifically for this project,” Isabelle Bloch explains. “We use images that are already available to the doctors, for which the parents have given their informed consent for the images to be used for research purposes.” The images are all anonymized, whether they show normal or pathological anatomy. “We are very cautious: if a patient has a pathology so rare that his or her identity could be recognized, we do not use the image.”

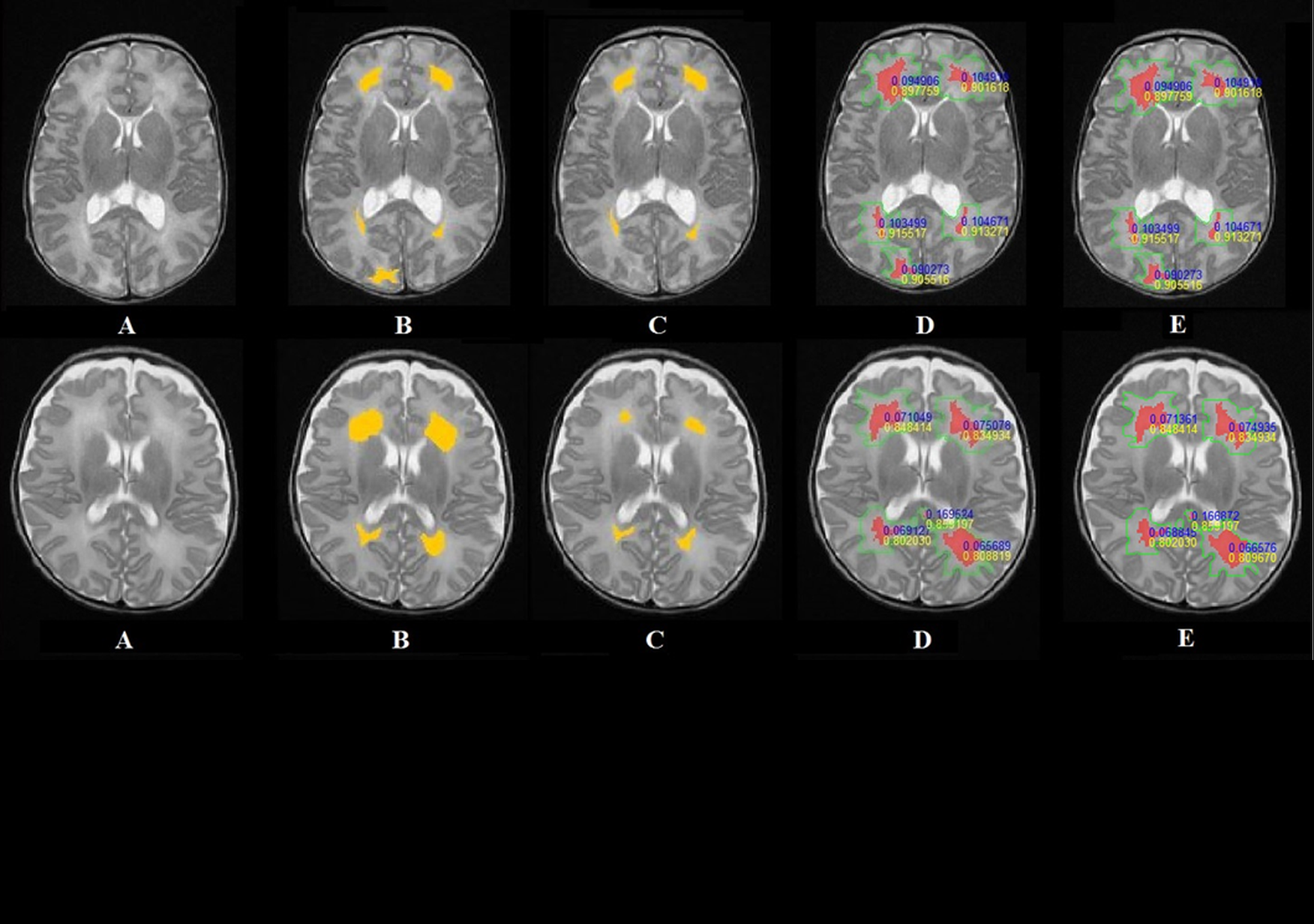

Researchers are particularly interested in certain pathologies and developmental abnormalities: hyperintense areas (regions of white matter that appear brighter than normal on MRI images); developmental abnormalities of the corpus callosum, the anatomical structure that joins the two cerebral hemispheres; and cancerous tumors.

“We face some difficulties because few MRI images of premature and newborn babies exist,” Isabelle Bloch explains. “In addition, the images vary greatly depending on the patient’s age and pathology. We therefore have a limited dataset with a great deal of variability, which itself changes over time.”

Modeling medical knowledge

Although the available images are limited and heterogeneous, the researchers can make up for this lack of data through the expertise of the radiologists who annotate the MRI scans used. The researchers will therefore have access to valuable information on brain anatomy and pathologies, as well as on each patient’s history. “We will work to create models in the form of medical knowledge graphs, including graphs of the structures’ spatial layout. We will have assistance from the pediatric radiologists participating in the project,” says Isabelle Bloch. “These graphs will guide the interpretation of the images and help describe the pathology and the surrounding structures: Where is the pathology? Which healthy structures could it affect? How is it developing?”

In this model, each anatomical structure will be represented by a node. Nodes are connected by edges carrying attributes such as the spatial relationships between structures or the intensity contrasts observed in the MRI. The graph will take the patient’s pathologies into account by adapting and modifying the links between the anatomical structures. “For example, if the knowledge shows that a given structure is located to the right of another, we would try to obtain a mathematical model that tells us what ‘to the right of’ means,” the researcher explains. “This model will then be integrated into an algorithm for interpreting images, recognizing structures and characterizing a disease’s development.”
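
To make the idea of a graph whose edges carry a mathematical meaning more concrete, here is a minimal sketch; it is not the STAP project's implementation. It borrows one well-known fuzzy formulation of a directional relation: for a pair of points, let θ be the angle between the vector joining them and a reference direction, and score the pair with max(0, 1 − 2θ/π), so that exactly "to the right" scores 1 and "straight above" scores 0. Averaging this score over all point pairs of two regions is a simplifying assumption made here for brevity. The names (`directional_degree`, `KnowledgeGraph`, `structure_A`, `structure_B`) and the "expected" degree on each edge are hypothetical. Which image direction corresponds to the patient's right depends on the display convention (radiological images usually show the patient's right on the image's left), so the direction is left as a parameter.

```python
import math
from dataclasses import dataclass, field

Point = tuple[float, float]  # (x, y) in image coordinates


def directional_degree(reference: list[Point], subject: list[Point],
                       direction: Point = (1.0, 0.0)) -> float:
    """Fuzzy degree in [0, 1] to which `subject` lies in `direction` from `reference`.

    Each point pair scores max(0, 1 - 2*theta/pi), theta being the angle between
    (q - p) and `direction`; the result is the mean over all pairs (a simplification).
    """
    dx, dy = direction
    norm_d = math.hypot(dx, dy)
    total, count = 0.0, 0
    for px, py in reference:
        for qx, qy in subject:
            vx, vy = qx - px, qy - py
            norm_v = math.hypot(vx, vy)
            if norm_v == 0.0:
                continue  # coincident points carry no directional information
            cos_t = (vx * dx + vy * dy) / (norm_v * norm_d)
            theta = math.acos(max(-1.0, min(1.0, cos_t)))  # clamp rounding errors
            total += max(0.0, 1.0 - 2.0 * theta / math.pi)
            count += 1
    return total / count if count else 0.0


@dataclass
class Relation:
    subject: str      # structure that is "right_of" ...
    reference: str    # ... this structure
    name: str         # only "right_of" is modeled in this sketch
    expected: float   # hypothetical prior degree supplied by expert knowledge


@dataclass
class KnowledgeGraph:
    nodes: set[str] = field(default_factory=set)        # anatomical structures
    edges: list[Relation] = field(default_factory=list)  # attributed relations

    def add_relation(self, subject: str, reference: str,
                     name: str, expected: float) -> None:
        self.nodes.update((subject, reference))
        self.edges.append(Relation(subject, reference, name, expected))

    def evaluate(self, regions: dict[str, list[Point]]
                 ) -> dict[tuple[str, str], tuple[float, float]]:
        """Return (expected, observed) degrees for each modeled edge."""
        results = {}
        for rel in self.edges:
            if rel.name != "right_of":
                continue  # other relation types would need their own models
            observed = directional_degree(regions[rel.reference], regions[rel.subject])
            results[(rel.subject, rel.reference)] = (rel.expected, observed)
        return results


kg = KnowledgeGraph()
kg.add_relation("structure_B", "structure_A", "right_of", expected=0.8)
regions = {"structure_A": [(0.0, 0.0), (0.0, 1.0)],
           "structure_B": [(3.0, 0.0), (3.0, 1.0)]}
print(kg.evaluate(regions))
```

Comparing the observed degree with the expected one is one simple way such a graph could flag a structure that a pathology has displaced; a real system would work on segmented 3D volumes and richer relations.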

After analyzing a patient’s images, the graph will become an individual model that corresponds to a specific patient. “We do not yet have enough data to establish a standard model, which would take variability into account,” the researcher adds. “It would be a good idea to apply this method to groups of patients, but that would be a much longer-term project.”

An algorithm to describe images in the medical doctor’s language

In addition to describing the brain structures spatially and visually, the graph will account for how the pathology develops over time. “Some patients are monitored regularly. The goal would be to compare MRI images spanning several months of monitoring and to describe precisely, in quantitative terms, how brain pathologies develop and how they may affect the normal structures,” Isabelle Bloch explains.
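
As an illustration of what a quantitative description of a pathology's development might contain, here is a minimal sketch under stated assumptions. It supposes that each follow-up exam has already been segmented and reduced to a lesion volume and a distance from the lesion to a nearby healthy structure; these fields, the name `describe_evolution` and the example values are hypothetical, not data from the project. The function reports the change between the first and last exams and a least-squares growth rate over all exams.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Exam:
    month: float             # time since the first exam, in months
    lesion_volume_ml: float  # hypothetical: volume from a prior segmentation step
    distance_mm: float       # hypothetical: lesion-to-healthy-structure distance


def describe_evolution(exams: list[Exam]) -> dict[str, Optional[float]]:
    """Summarize how a lesion changes across a series of follow-up exams."""
    if not exams:
        raise ValueError("at least one exam is required")
    exams = sorted(exams, key=lambda e: e.month)
    first, last = exams[0], exams[-1]
    n = len(exams)
    mean_t = sum(e.month for e in exams) / n
    mean_v = sum(e.lesion_volume_ml for e in exams) / n
    # Ordinary least-squares slope of volume against time (ml per month).
    var_t = sum((e.month - mean_t) ** 2 for e in exams)
    cov_tv = sum((e.month - mean_t) * (e.lesion_volume_ml - mean_v) for e in exams)
    volume_change = last.lesion_volume_ml - first.lesion_volume_ml
    return {
        "volume_change_ml": volume_change,
        "relative_change": (volume_change / first.lesion_volume_ml
                            if first.lesion_volume_ml else None),
        "growth_ml_per_month": cov_tv / var_t if var_t else None,  # None if one time point
        "distance_change_mm": last.distance_mm - first.distance_mm,
    }


# Hypothetical series: exams at 0, 3 and 6 months.
print(describe_evolution([Exam(0, 2.0, 10.0), Exam(3, 2.6, 8.0), Exam(6, 3.2, 6.0)]))
```

A negative distance change, as in this made-up series, would indicate that the lesion is getting closer to the healthy structure, which is the kind of impact on normal structures the text mentions.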

Finally, the researchers would like to develop an algorithm that provides a linguistic description of the images’ content using the pediatric radiologist’s specific vocabulary. This tool would connect the quantitative information extracted from the images with words and sentences. “This is the reverse of the approach used for the mathematical modeling of medical knowledge,” Isabelle Bloch explains. “The algorithm would describe the situation both quantitatively and qualitatively, making it easier for the medical expert to interpret.”
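
One classical way to move from a measurement to a word, taken from fuzzy set theory rather than from the project itself, is to give each term of a vocabulary a membership function and pick the term that best matches the measured value. The sketch below does this for a lesion's growth rate. The vocabulary, the trapezoidal shapes and every breakpoint in `GROWTH_TERMS` are hypothetical and are not clinical thresholds; a real system would use terms and ranges defined with radiologists.

```python
import math


def trapezoid(x: float, a: float, b: float, c: float, d: float) -> float:
    """Trapezoidal fuzzy membership: 1 on [b, c], linear ramps on (a, b) and (c, d), 0 elsewhere."""
    if b <= x <= c:
        return 1.0
    if x <= a or x >= d:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


# Hypothetical vocabulary and breakpoints (ml per month); NOT clinical thresholds.
GROWTH_TERMS = {
    "shrinking": (-math.inf, -math.inf, -0.15, -0.05),
    "stable": (-0.15, -0.05, 0.05, 0.15),
    "slowly growing": (0.05, 0.15, 0.3, 0.5),
    "rapidly growing": (0.3, 0.5, math.inf, math.inf),
}


def linguistic_term(rate: float) -> tuple[str, float]:
    """Return the best-matching term and its membership degree."""
    memberships = {term: trapezoid(rate, *params) for term, params in GROWTH_TERMS.items()}
    term = max(memberships, key=memberships.get)
    return term, memberships[term]


def describe_growth(rate: float) -> str:
    """Combine the qualitative term with the quantitative value in one sentence."""
    term, degree = linguistic_term(rate)
    return f"The lesion is {term} ({rate:+.2f} ml/month, membership {degree:.2f})."


print(describe_growth(0.2))
```

Keeping both the word and the number in the output sentence reflects the aim stated above of describing the situation quantitatively and qualitatively at the same time.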

“In terms of structural modeling, we know where we are headed, although we still have work to do on extracting characteristics from the MRI images,” says Isabelle Bloch of the project’s technical aspects. “But combining spatial analysis with temporal analysis poses a new problem… as does translating the algorithm’s results into the doctor’s language, which requires moving from quantitative measurements to a linguistic description.” Far from trivial, these technical advances could eventually give radiologists image analysis tools better suited to their needs.
