Between attackers and defenders, who is in the lead? In cybersecurity, attackers have long been seen as the default winners. Yet infrastructures are becoming better and better at protecting themselves. Although much remains to be done, the balance is not as uneven as it might seem, and research is providing effective cyber-defense solutions to help ensure the security and resilience of computer systems.

[divider style="normal" top="20" bottom="20"]

This article is part of our series on Cybersecurity: new times, new challenges.

[divider style="normal" top="20" bottom="20"]

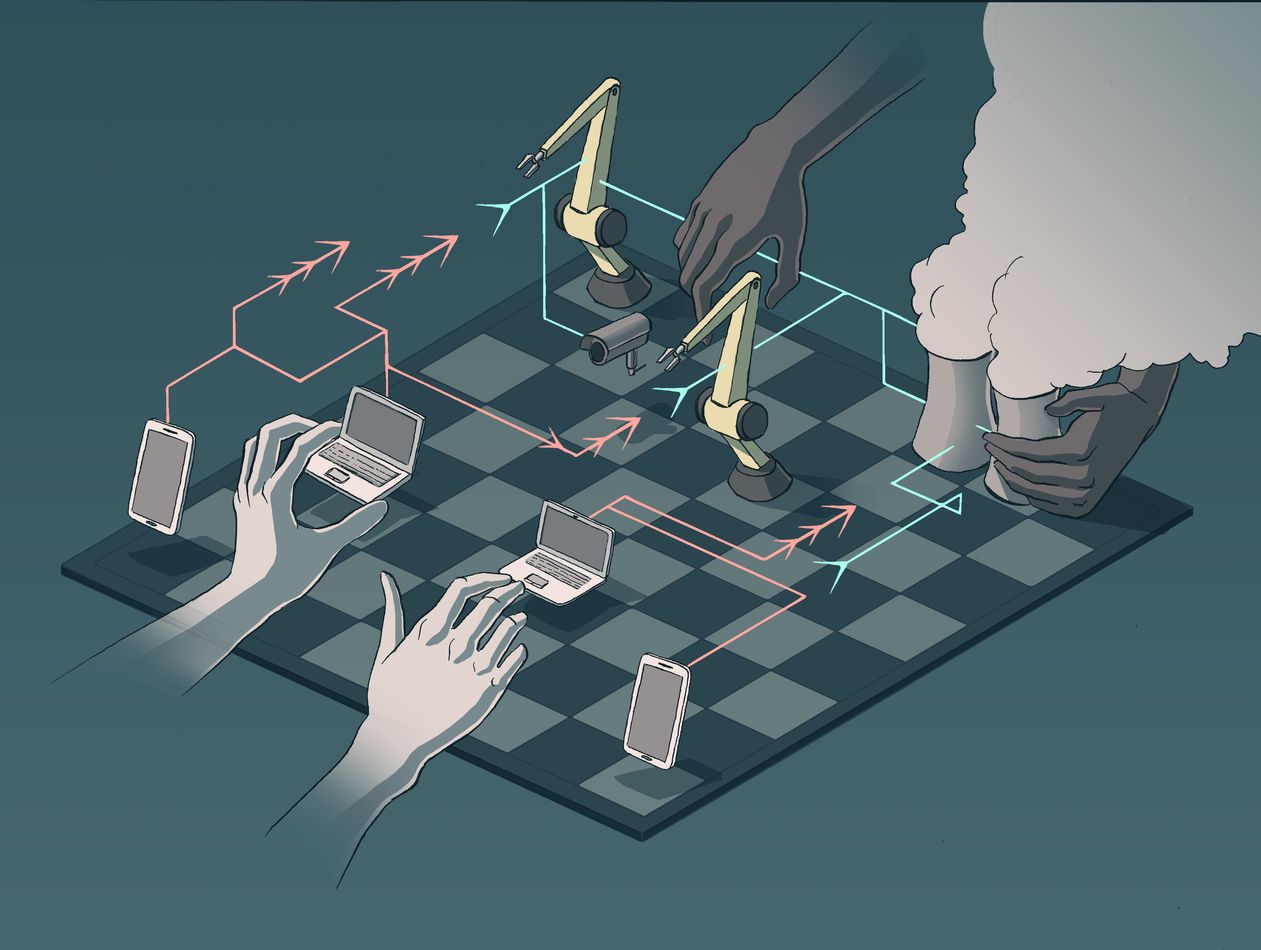

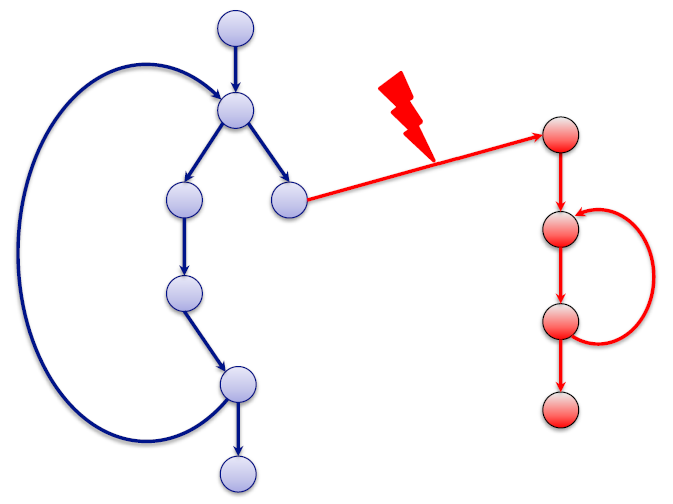

At the beginning of a chess game, the white pieces always have the initiative. This is a cruel reality for the black pieces, which are often forced onto the defensive during the first few minutes of the game. The same is true in the world of cybersecurity: the attacker, like the player with the white pieces, strikes the first blow. “He makes his choices, and the defender must follow his strategy, which by default puts him in a position of inferiority,” observes Frédéric Cuppens, a cybersecurity researcher at IMT Atlantique. This reality shapes the strategies adopted by companies and software publishers, with one difference: unlike the black pieces in chess, cyber-defenders cannot counter-attack. It is illegal for an individual or an organization to retaliate by launching a cyber-attack against the attacker. In this context, the defense plan can be broken down into three parts: protecting oneself to limit the attacker’s initiative, deploying defenses to prevent him from reaching his goal and, above all, ensuring the resilience of the system if the attacker succeeds.

This last possibility must not be overlooked. “In the past, researchers quickly realized that they would not succeed in preventing every attack,” recalls Hervé Debar, a cybersecurity researcher at Télécom SudParis. From a technical point of view, “it is impossible to predict all the potential paths of attack,” he explains. And from an economic and organizational perspective, closing every door, whether through preventive measures or in response to an attack, would make the systems inoperable. It is therefore often more advantageous to accept that an attack will happen, cope with it and bring it to an end, than to attempt to prevent it at all costs. Hervé Debar and Frédéric Cuppens, members of the Cybersecurity of Critical Infrastructures Chair at IMT (see box at the end of the article), are very familiar with this trade-off. For a nuclear power plant, for example, shutting everything down to prevent a threat is unthinkable.

Reducing the initiative

Despite these obstacles, cyber-defense is not lagging behind. The technical and financial means of institutional and private organizations are generally greater than those of cybercriminals, with the exception of computer attacks on governments. Once a new flaw has been discovered, the information spreads quickly. “Software publishers react quickly to block these entryways; things travel very fast,” says Frédéric Cuppens. The National Vulnerability Database (NVD) is an American database that lists publicly known vulnerabilities and serves as a reference for cyber-defense experts. Beginning in 1995 with a few hundred flaws, it now records thousands of new entries each year, with 15,000 in 2017. This shows how important information sharing is for the fastest possible response, and how involved communities of experts are in this collective strategy.

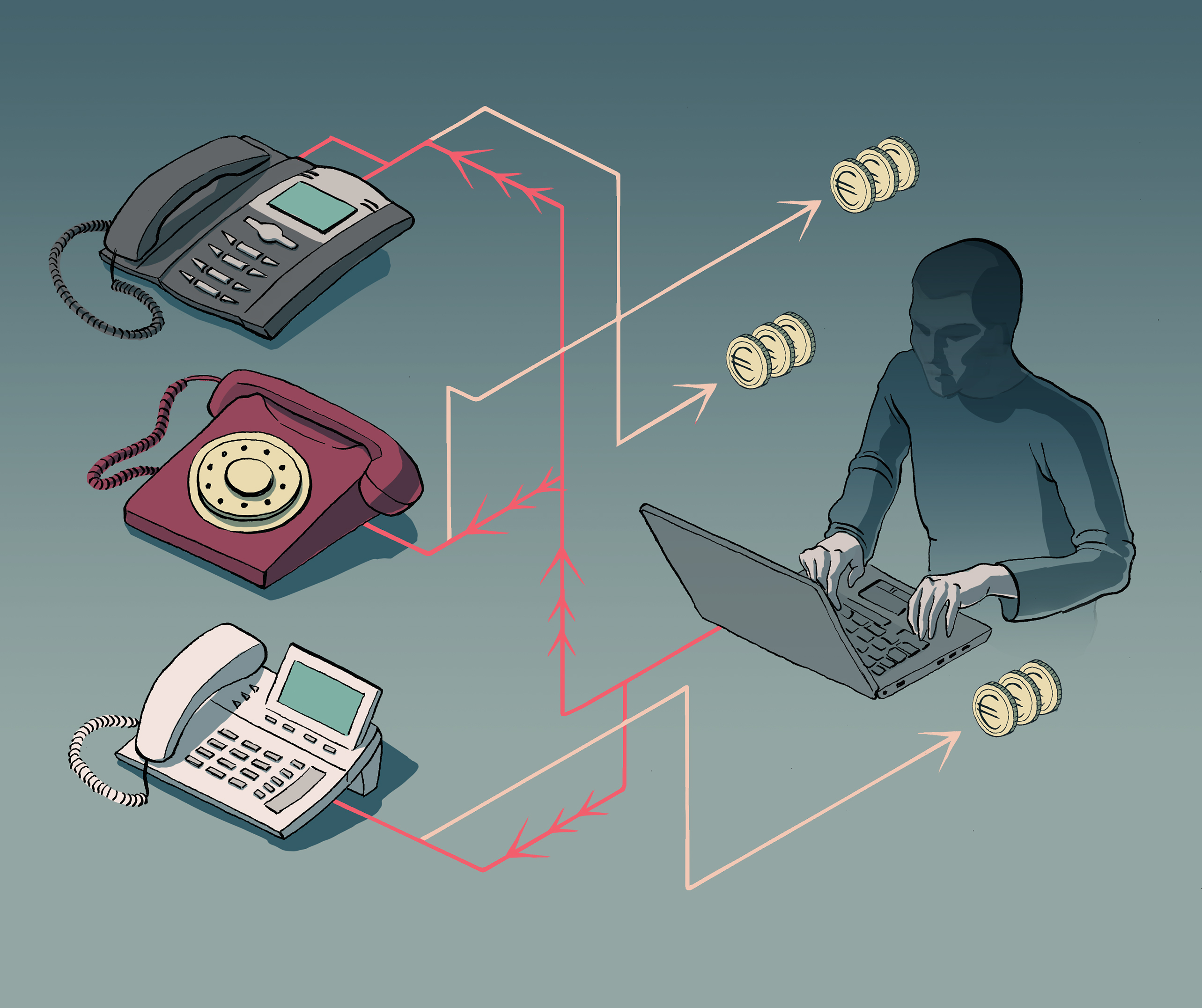

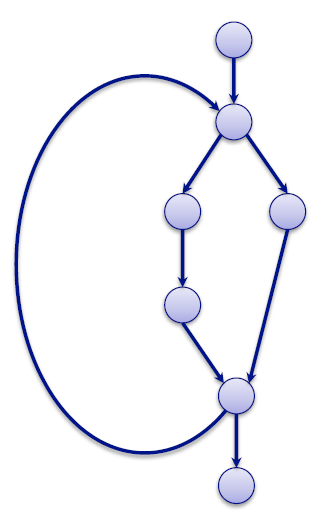

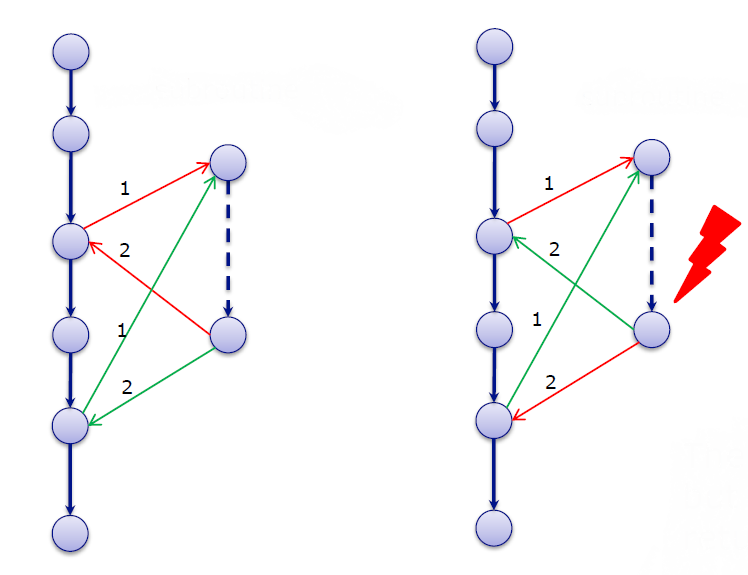

“But it’s not enough,” warns Frédéric Cuppens. “These databases are crucial, but they always come after the event,” he explains. To limit the attacker’s initiative once an attack is under way, it must be detected as early as possible. The best way to achieve this is to adopt a behavioral approach. Data is first collected to analyze how the systems function normally. Once this learning step is completed, the system’s “natural” behavior is known, and any deviation from this baseline is considered a potential danger sign. “In the case of attacks on websites, for example, we can detect the injection of unusual commands that betrays the attacker’s actions,” explains Hervé Debar.
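To make the learning step more concrete, here is a minimal Python sketch. It is not the researchers’ actual method. It assumes the monitored system is a website, treats the words and symbols seen in normal requests as the baseline, and flags a request when too large a share of its tokens were never observed during learning. The class name `BehaviorBaseline`, the sample requests and the 20% threshold are hypothetical choices made for illustration.

```python
import re

# Words (letters/underscores) or single punctuation symbols; digits are ignored
# so that changing IDs or quantities does not count as unusual behavior.
TOKEN = re.compile(r"[A-Za-z_]+|[^\sA-Za-z_0-9]")


def tokenize(request: str) -> list:
    """Split a request string into lowercase words and punctuation symbols."""
    return TOKEN.findall(request.lower())


class BehaviorBaseline:
    """Hypothetical behavioral model: learn normal tokens, then score deviations."""

    def __init__(self, threshold: float = 0.2):
        self.known = set()          # tokens observed during the learning step
        self.threshold = threshold  # assumed share of unseen tokens that triggers an alert

    def learn(self, normal_requests):
        """Learning step: record every token that appears in normal traffic."""
        for request in normal_requests:
            self.known.update(tokenize(request))

    def deviation(self, request: str) -> float:
        """Share of the request's tokens that were never seen during learning."""
        tokens = tokenize(request)
        if not tokens:
            return 0.0
        unseen = sum(1 for token in tokens if token not in self.known)
        return unseen / len(tokens)

    def is_suspicious(self, request: str) -> bool:
        """Flag the request when its deviation from the baseline exceeds the threshold."""
        return self.deviation(request) > self.threshold


baseline = BehaviorBaseline()
baseline.learn([
    "/search?q=shoes",
    "/search?q=red shoes",
    "/product?id=42",
    "/search?q=blue hat",
])
print(baseline.is_suspicious("/search?q=green shoes"))                     # False
print(baseline.is_suspicious("/search?q=' or 1=1; drop table users --"))  # True
```

A new but ordinary search term barely moves the score, whereas an injected command brings in many symbols and keywords that never appeared in normal traffic. Real intrusion detection systems use far richer features and models; the point here is only the two phases of learning a baseline and measuring deviation from it.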

Connected objects, like surveillance cameras, are a new target for attackers. Cyber-defense must therefore be adapted accordingly.

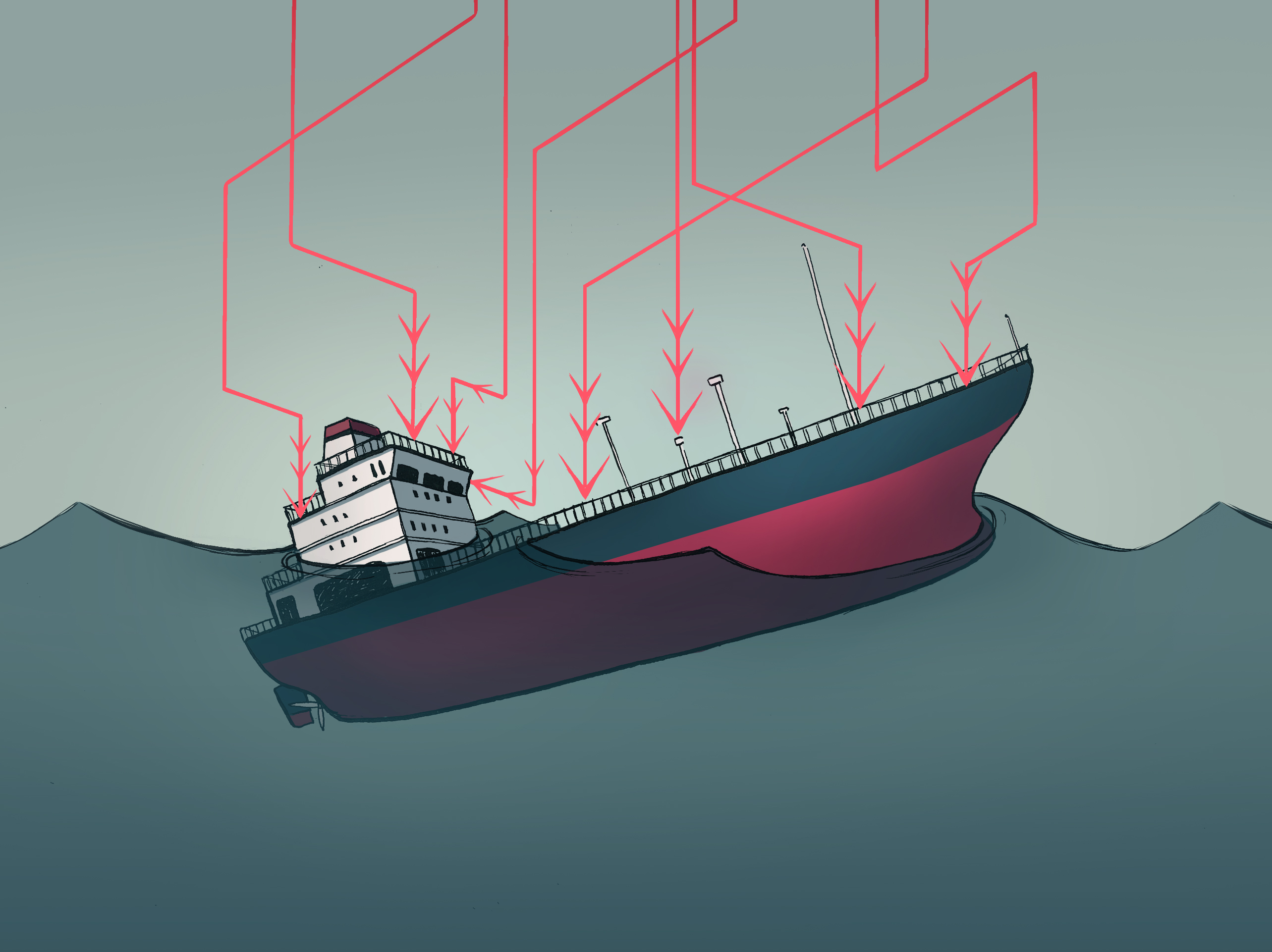

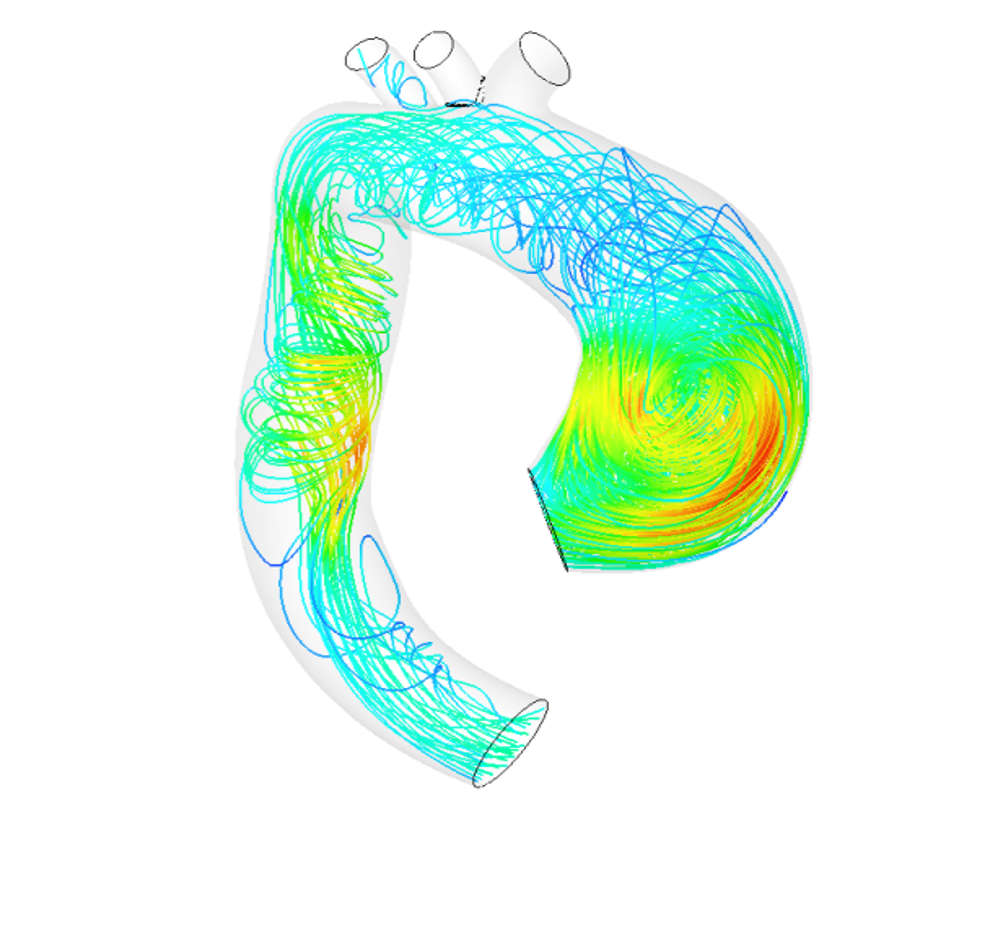

The boom in the Internet of Things has brought about a change of scale that reduces the effectiveness of this behavioral approach, since it is impossible to monitor each object individually. In 2016, the Mirai botnet took control of connected surveillance cameras and used them to flood servers belonging to the DNS provider Dyn with requests. The outcome: websites belonging to Netflix, Twitter, PayPal and other major Internet players whose domain names were managed by Dyn became inaccessible. “Mirai has changed things a little,” admits the researcher from Télécom SudParis. Protection must be adapted to the vulnerabilities created by connected objects. Monitoring whole fleets of cameras the way we would monitor a website is inconceivable: it is too expensive and too difficult. “We therefore protect the communication between objects rather than the objects themselves, and we use certain objects as sentinels,” explains Hervé Debar. Since an attack on a fleet of cameras affects them all, it is only necessary to protect and observe a few of them to detect a widespread attack. Working with Airbus, the researcher and his team have shown that monitoring 2 industrial sensors out of a group of 20 was sufficient to detect an attack.
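Why can a handful of sentinels be enough? A back-of-the-envelope calculation helps, under simplifying assumptions that are ours rather than the Airbus study’s. Suppose sentinels are picked at random among n devices, an attack compromises m of them, and a compromised sentinel always raises an alert. The chance that at least one of k sentinels is hit is then 1 - C(n - m, k) / C(n, k). The function name below is hypothetical.

```python
from math import comb


def sentinel_detection_probability(n_devices: int, n_sentinels: int, n_compromised: int) -> float:
    """Probability that at least one randomly chosen sentinel is among the compromised devices.

    Assumes sentinels are drawn uniformly at random without replacement and that a
    compromised sentinel always raises an alert (hypothetical simplifications).
    """
    if not 0 <= n_sentinels <= n_devices or not 0 <= n_compromised <= n_devices:
        raise ValueError("counts must lie between 0 and n_devices")
    # Ways to pick every sentinel from untouched devices, divided by all ways to pick them.
    p_all_missed = comb(n_devices - n_compromised, n_sentinels) / comb(n_devices, n_sentinels)
    return 1.0 - p_all_missed


for compromised in (20, 10, 5):
    p = sentinel_detection_probability(20, 2, compromised)
    print(f"{compromised:2d} of 20 compromised -> detected with probability {p:.2f}")
```

With 2 sentinels among 20 devices, an attack that reaches every device is always seen, and one that reaches half of them is still seen about 76% of the time. The less an attack spreads, the more sentinels this toy model calls for.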

Ensuring resilience

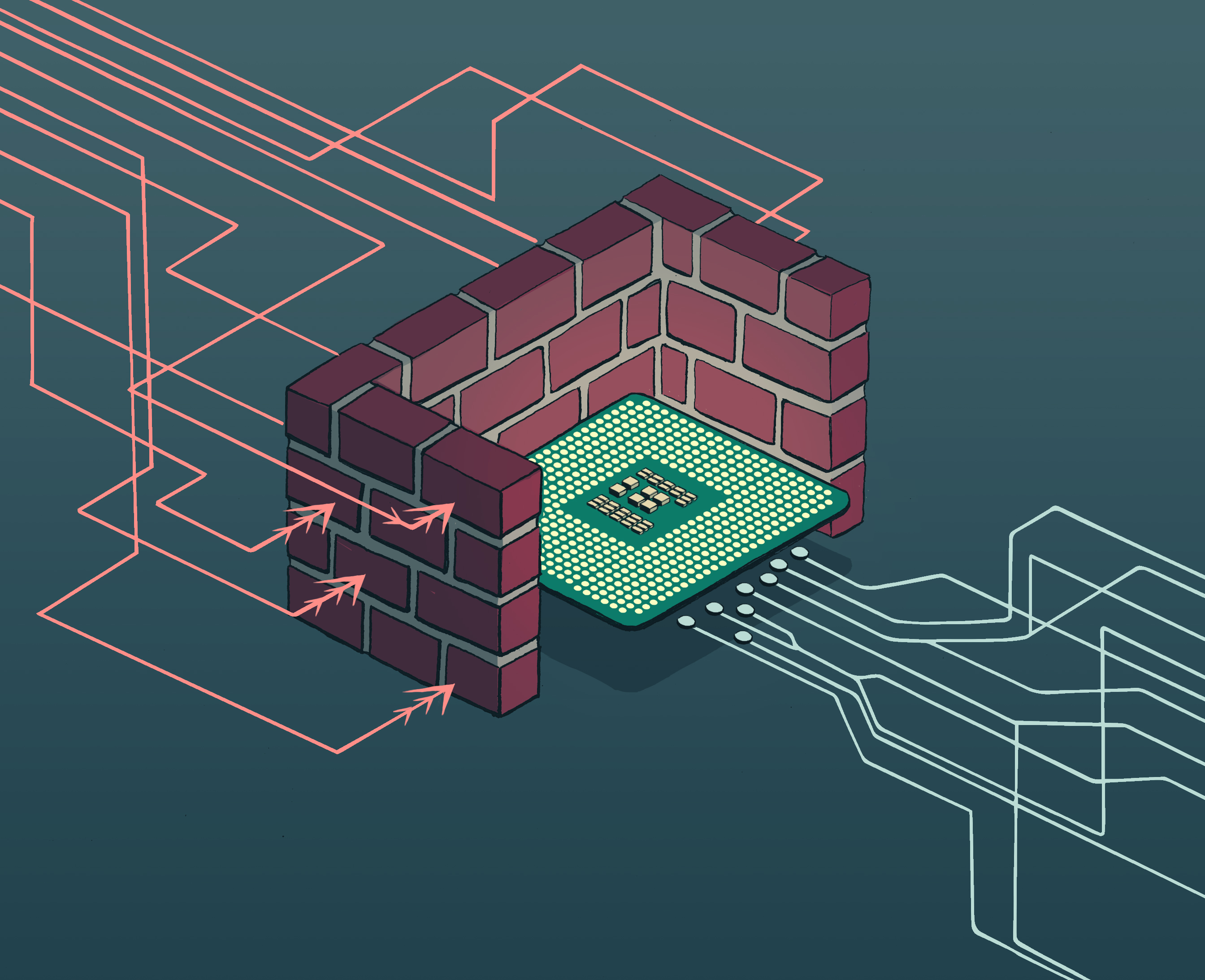

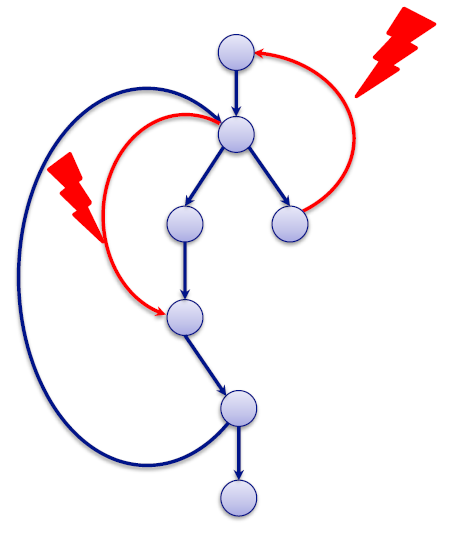

Once the attack has been detected, everything must be done to minimize its impact. “To thwart the attacker and seize the initiative, a good solution is to use moving target defense,” Frédéric Cuppens explains. This technique is relatively new: the first academic research on the subject was conducted in the early 2010s, and the practice has only begun to spread among companies in the last two years or so. It involves moving the targets of the attack to safer locations. This cyber-defense strategy is easiest to understand in cloud computing: the functions of a virtual machine under attack can be moved to another machine that has not been affected, so the service continues to be provided. “With this means of defense, all that is needed is for the IP addresses and routing functions to be dynamically reconfigured,” the IMT Atlantique researcher explains. In essence, this means redirecting all the traffic for a service to a clone of the attacked system. This technique is a major asset, especially against particularly vicious attacks. “The systems’ resilience is paramount in protecting against polymorphic malware, which changes as it spreads,” says Frédéric Cuppens.
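The dynamic reconfiguration described by Frédéric Cuppens can be pictured as an update to a routing table. The Python sketch below is a toy model built on our own assumptions, not a real cloud or SDN API. Healthy clones are assumed to be pre-provisioned, and “moving the target” simply means pointing a service’s entry at a fresh clone’s address while the attacked machine is quarantined. The class and method names and the IP addresses are hypothetical.

```python
from collections import deque


class MovingTargetRouter:
    """Toy routing table: each service name points to the address of the VM serving it."""

    def __init__(self, spare_addresses):
        self.spares = deque(spare_addresses)  # pre-provisioned, unaffected clones (assumed)
        self.routes = {}                      # service name -> current VM address
        self.quarantined = []                 # addresses of VMs taken out after an attack

    def deploy(self, service):
        """Assign the service to the first available clone."""
        self.routes[service] = self.spares.popleft()

    def move_target(self, service):
        """Redirect the service's traffic to a fresh clone and isolate the attacked VM."""
        if not self.spares:
            raise RuntimeError("no healthy clone left to move to")
        attacked = self.routes[service]
        self.routes[service] = self.spares.popleft()
        self.quarantined.append(attacked)
        return self.routes[service]

    def route(self, service):
        """Address to which traffic for this service is currently sent."""
        return self.routes[service]


router = MovingTargetRouter(["10.0.0.11", "10.0.0.12", "10.0.0.13"])
router.deploy("billing")
print(router.route("billing"))  # 10.0.0.11
router.move_target("billing")   # attack detected on 10.0.0.11
print(router.route("billing"))  # 10.0.0.12
```

Because clients reach the service by name rather than by machine, the service keeps running while the attacked virtual machine is isolated. In a real deployment, this step would go through the operator’s own cloud or network tooling.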

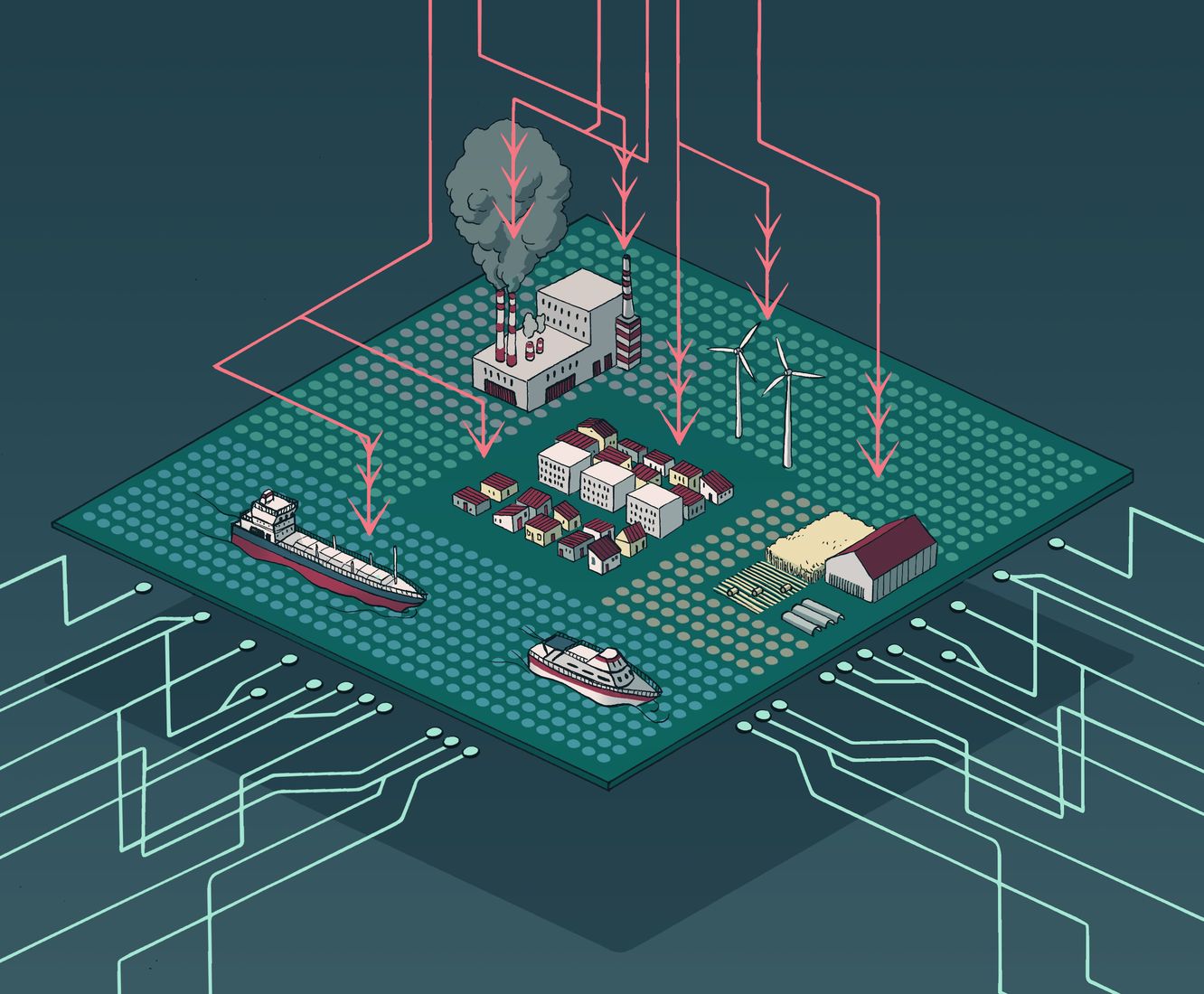

While the moving target strategy is effective for virtualized systems, it is more difficult to deploy in physical infrastructures. Connected robots on a production line and fleets of connected objects cannot be cloned and moved as needed. For these systems, functional diversification is used instead. “For equipment that runs on specific hardware with specific software, the company can reproduce the system, but with different hardware and software,” explains Frédéric Cuppens. Since security vulnerabilities are specific to a given piece of hardware or software, if one version is attacked, the other should remain functional. This protection comes at an added cost, but the researcher points out that the safety of infrastructures and individuals is at stake. “For an airplane, we are all willing to accept that 80% of the cost be dedicated to safety, because so many lives are at stake. The same is true for infrastructures: losing control of certain types of equipment can cost lives, whether those of nearby employees or, in extreme cases involving critical infrastructure, those of citizens.”

How can cyber-defense be further developed?

People’s attitudes about cybersecurity must change. This is one of the major challenges we must address to further limit what attackers can achieve. One of the keys is to convince companies of the importance of investing in this area. Another is to spread good practices. “Today we know which practices ensure better security right from the development stage for software and systems,” says Hervé Debar. “Using languages that are more robust than others, tools that can check how programs are written, proven libraries for certain functions, secure programming patterns, making test plans…” Yet these practices are far from routine for developers. All too often, their objective is to deliver a functional application or system as quickly as possible, to the detriment of security and robustness.
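As one illustration of a secure programming pattern (our example, not one cited by Hervé Debar), the Python sketch below uses the standard-library `sqlite3` module. It contrasts a query built by pasting user input into the SQL text, which is open to the kind of command injection on websites described earlier, with a parameterized query. The table, the data and the function names are hypothetical.

```python
import sqlite3


def find_user_unsafe(conn, name):
    # Anti-pattern: the input is pasted into the SQL text, so it can rewrite the query.
    return conn.execute(f"SELECT id FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn, name):
    # Secure pattern: `?` is a placeholder and the driver binds `name` as plain data.
    return conn.execute("SELECT id FROM users WHERE name = ?", (name,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

payload = "x' OR '1'='1"
print(find_user_unsafe(conn, payload))  # every row comes back
print(find_user_safe(conn, payload))    # []
```

The two functions differ by a single line, which is why such patterns cost little to adopt from the development stage onward but are easy to skip under time pressure.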

It is now critical that this paradigm be revised. The use of artificial intelligence raises many questions. While it offers the potential for designing dynamic solutions for detecting intrusions, it also opens the door to new threats. “The principle behind using AI is that it adapts to situations,” explains Frédéric Cuppens. “But if systems are constantly adapting, how will it be possible to determine ahead of time if the changes are caused by AI or an attack?” To prevent cybercriminals from taking advantage of this gray area, the systems’ security must be guaranteed and the way they operate must be transparent. Yet today, these two dimensions are far from being at the forefront of most developers’ minds. “The security of connected objects is being left behind,” says Frédéric Cuppens. And it goes further than the question of processes: “being careful about what we do with information technology is a state of mind,” Hervé Debar adds.

[box type="info" align="" class="" width=""]

A chair for protecting critical infrastructure

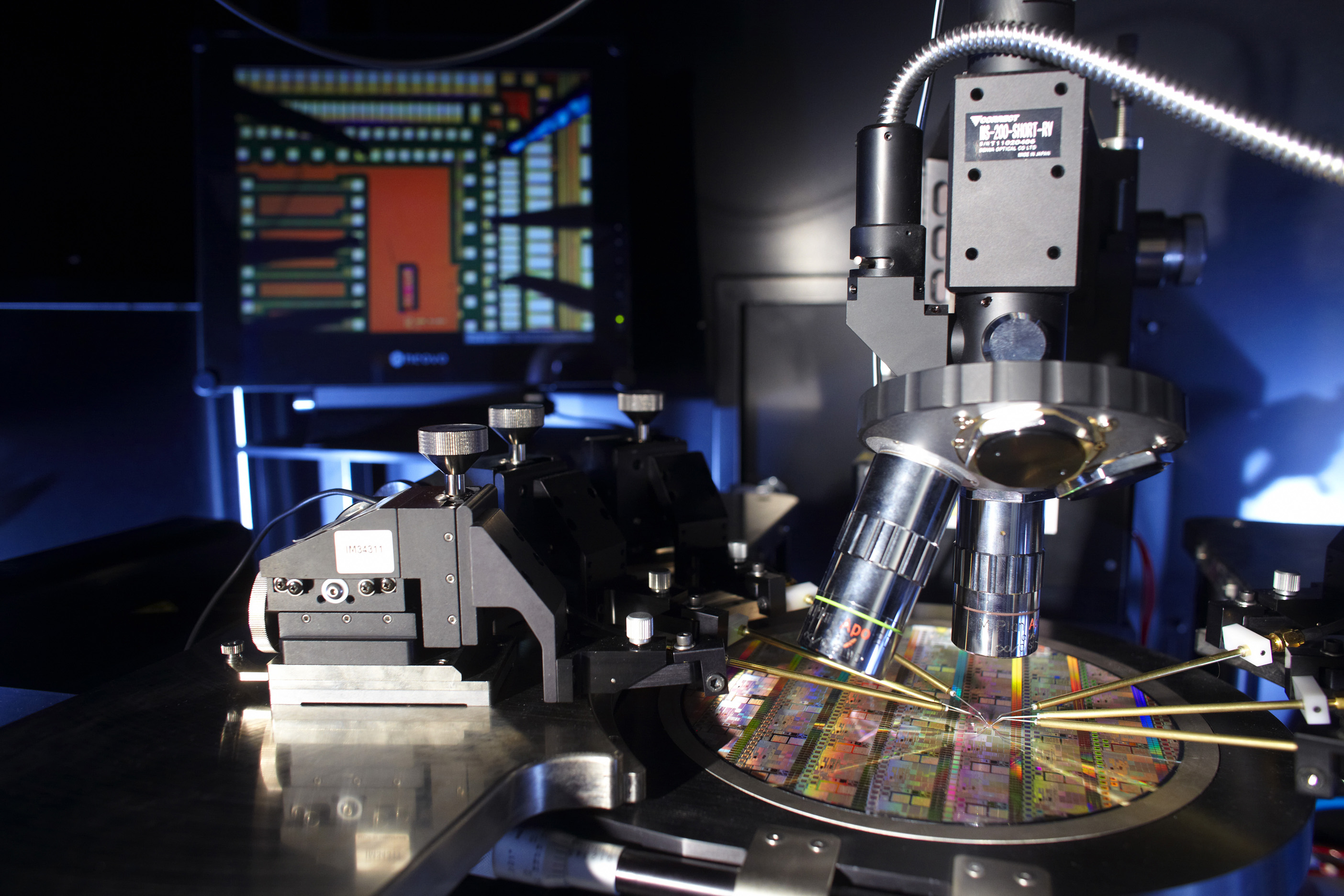

Telecommunications, defense, energy… These sectors are vital for the proper functioning of the country. In the event of a breakdown, essential services are no longer provided to citizens. With the rise in connected objects within these critical infrastructures, the risk of cyberattacks is increasing. New cyber-defense programs must therefore be developed to protect them.

This is the whole purpose of the IMT Cybersecurity of Critical Infrastructures Chair. It brings together researchers from IMT Atlantique, Télécom SudParis and Télécom ParisTech to focus on the issue. The scientists work in close collaboration with industry stakeholders affected by these issues. Airbus, Orange, EDF, La Poste, BNP Paribas, Amossys and Société Générale are all partners of this Chair. They provide the researchers with real-life cases of risks and systems that must be protected to improve the current state of cyber-defense.


[/box]