Pierre Comon’s research focuses on a question that is as simple as it is complex: how to find a single solution to a problem. From the environment to health and telecommunications, this information science researcher at GIPSA-Lab tackles a wide range of issues. Winner of the 2018 IMT-Académie des Sciences Grand Prix, he balances mathematical abstraction with the practical, scientific realities of the field.

When asked to explain what a tensor is, Pierre Comon gives two answers. The first is academic, factual and rather dry, despite an effort at popularization: “it is a mathematical object that is equivalent to a polynomial with several variables.” The second reveals a researcher who is aware of the abstract nature of his work, passionate about explaining it and experienced at doing so. “If I want to determine the concentration of a molecule in a sample, or the exact position of a satellite in space, I need a single solution to my mathematical problem. I do not want several possible positions of my satellite or several concentration values, I only want one. Tensors allow me to achieve this.”
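For symmetric tensors, the first answer can be made exact. A symmetric tensor of order d in n variables stores the coefficients of a homogeneous polynomial of degree d, and each monomial’s coefficient is spread evenly over all orderings of its indices. The minimal Python sketch below illustrates this correspondence. The function names and the example polynomial are hypothetical and are not taken from Pierre Comon’s work.

```python
from collections import Counter
from fractions import Fraction
from itertools import product
from math import factorial, prod


def multiplicity(key):
    """Number of distinct orderings of an index tuple, e.g. (0, 0, 1) -> 3."""
    return factorial(len(key)) // prod(factorial(c) for c in Counter(key).values())


def symmetric_tensor(coeffs, n, d):
    """Symmetric order-d tensor (dict: index tuple -> entry) of a homogeneous
    polynomial in n variables. coeffs maps a sorted index tuple, e.g. (0, 0, 1)
    for x0*x0*x1, to that monomial's coefficient."""
    T = {}
    for idx in product(range(n), repeat=d):
        key = tuple(sorted(idx))
        # Spread each coefficient evenly over all orderings of its indices.
        T[idx] = Fraction(coeffs.get(key, 0), multiplicity(key))
    return T


def evaluate(T, x):
    """p(x) = sum over all index tuples of T[idx] * x[i1] * ... * x[id]."""
    return sum(entry * prod(x[i] for i in idx) for idx, entry in T.items())


# Hypothetical example: p(x0, x1) = x0^3 + x0^2 x1 + 2 x1^3 as an order-3 tensor.
coeffs = {(0, 0, 0): 1, (0, 0, 1): 1, (1, 1, 1): 2}
T = symmetric_tensor(coeffs, n=2, d=3)
print(T[(0, 1, 0)])         # 1/3: the x0^2 x1 coefficient split over three orderings
print(evaluate(T, (2, 1)))  # 14 = 8 + 4 + 2
```

Exact fractions are used so that the split coefficients add back up without rounding error.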

Tensors are especially powerful when the number of unknown parameters is not too high. For example, they cannot be used to find the unknown positions of 100 satellites with only 2 antennas. However, when the number of parameters to be determined is balanced against the number of data samples, they become a very useful tool. Tensors have many applications, including telecommunications, the environment and healthcare.

Pierre Comon recently worked on tensor methods for medical imaging at the GIPSA-Lab* in Grenoble. For patients with epilepsy, one of the major problems is locating the source of the seizures in the brain. Doing so not only helps treat the disease but can also help prepare for surgery. “When a patient’s disease is too resistant to treatment, it is sometimes necessary to perform an ablation,” the researcher explains.

Today, these sources are located using invasive methods: probes are introduced into the patient’s skull to record brain activity, a stage that is particularly difficult for patients. The goal is therefore to find a way to determine the same parameters using non-invasive techniques, such as electroencephalography and magnetoencephalography. Tensor tools are integrated into the algorithms used to process the brain signals recorded with these methods. “We have obtained promising results,” says Pierre Comon. While he admits that invasive methods currently remain more effective, he points out that they have been around much longer. Research on this topic is still young, but it has already given reason to hope that treating certain brain diseases could become less burdensome for patients.

An entire world in one pixel

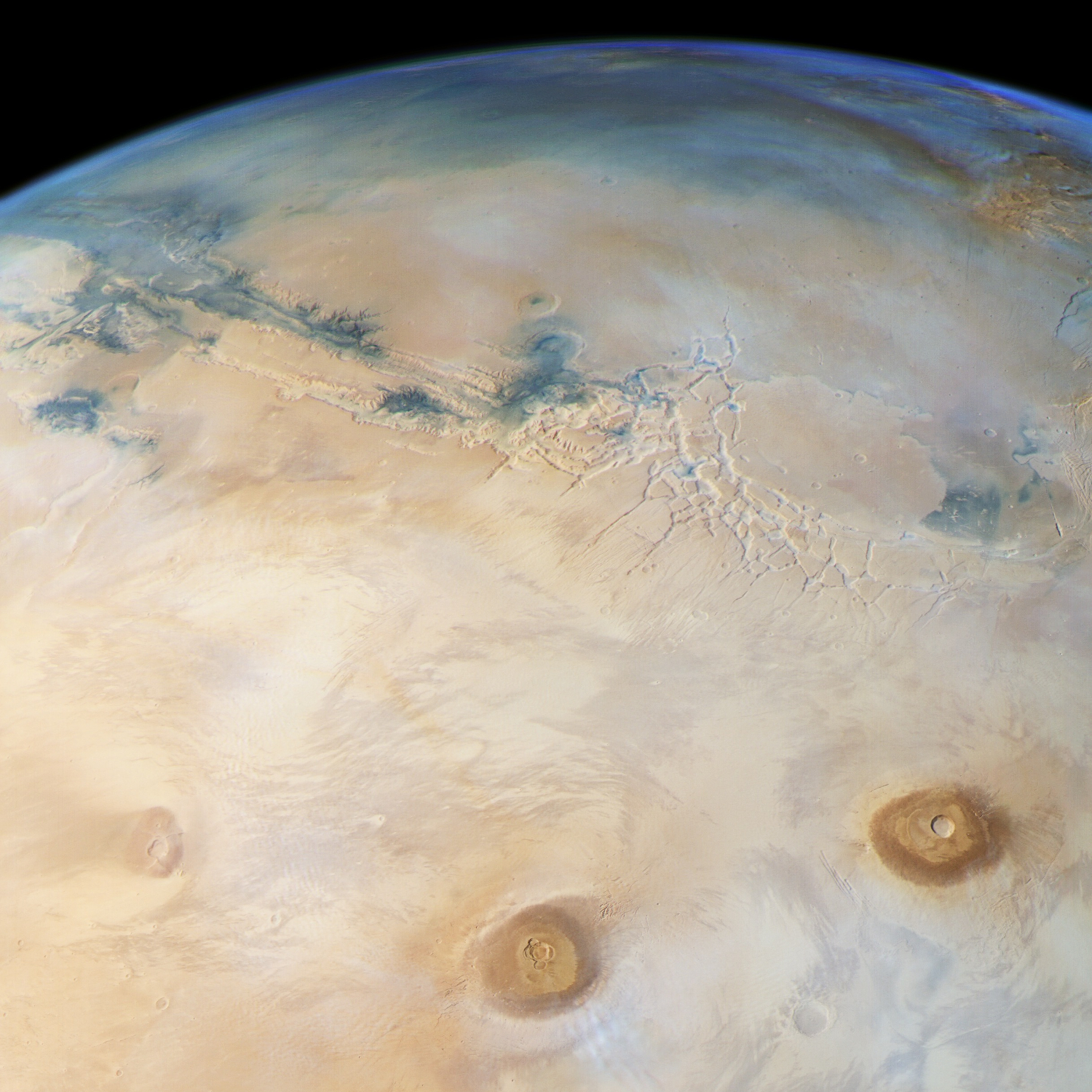

For environmental applications, on the other hand, the results are much further along. Over the past decade, Pierre Comon has demonstrated the relevance of tensors for planetary imaging. In satellite remote sensing, each pixel can cover anywhere from a few square meters to several square kilometers, so the elements it contains can be very diverse: forests, ice, bodies of water, limestone or granite formations, roads, farm fields, etc. Depending on the resolution, detecting these different elements can be difficult. Yet there is a clear benefit in being able to automatically determine how many elements a single pixel contains. Is it just a forest? Does a lake or road run through this forest? What type of rock is present?

The tensor approach answers these questions. It makes it possible to decompose each pixel into its different components and to indicate how many there are. Better still, it can do so “without using a dictionary, in other words, without knowing ahead of time what elements might be in the pixel,” the researcher explains. This is possible thanks to an intrinsic property of tensors that Pierre Comon has brought to light: under certain mathematical conditions, they can be decomposed in only one way. In satellite imaging, this requires measurements along enough dimensions: the intensity received must be known for each pixel, each wavelength and each angle of incidence. Because the resulting tensor decomposition is unique, it becomes possible to retrace the exact proportion of the different elements in each image pixel.
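One common way to formalize this property is the canonical polyadic (CP) decomposition. It is offered here as an illustration, not as the exact model used in Pierre Comon’s work. Arrange the measurements as a third-order tensor T indexed by pixel i, wavelength j and angle of incidence k. Then model T as a sum of R rank-one terms, one per material. The physical reading of the factors in the comments below is our own assumption.

```latex
% T_{ijk}: intensity received for pixel i, wavelength j, angle of incidence k
% a_{ir}: proportion of material r in pixel i   (hypothetical interpretation)
% b_{jr}: spectral signature of material r; c_{kr}: its angular response
\mathcal{T}_{ijk} = \sum_{r=1}^{R} a_{ir}\, b_{jr}\, c_{kr},
\qquad A = [a_{ir}] \in \mathbb{R}^{I \times R},\;
B = [b_{jr}] \in \mathbb{R}^{J \times R},\;
C = [c_{kr}] \in \mathbb{R}^{K \times R}.

% Kruskal's classical sufficient condition for uniqueness, where k_A
% (the Kruskal rank of A) is the largest k such that every set of
% k columns of A is linearly independent:
k_A + k_B + k_C \ge 2R + 2
\;\Longrightarrow\;
(A, B, C) \text{ is unique up to permutation and scaling of the } R \text{ terms.}
```

Each Kruskal rank is at most the corresponding dimension (k_A ≤ I, and so on). The condition therefore cannot hold when R is too large compared with I + J + K. This echoes the earlier point that tensors work best when the number of unknowns is balanced against the available data.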

For planet Earth, this approach has limited benefits, since the various elements are already well known. However, it could be particularly helpful for monitoring changes in forests or water resources. The tensor approach is especially useful, on the other hand, for other planets in the solar system. “We have tested our algorithms on images of Mars,” says Pierre Comon. “They helped us to detect different types of ice.” For distant planets that are still poorly known, the advantage of this “dictionary-free” approach is that it can bring unknown geological features to light. Whereas the human mind tends to compare what it sees with what it already knows, the tensor approach offers a neutral description and can help reveal structures with unknown geochemical properties.

The common theme: a single solution

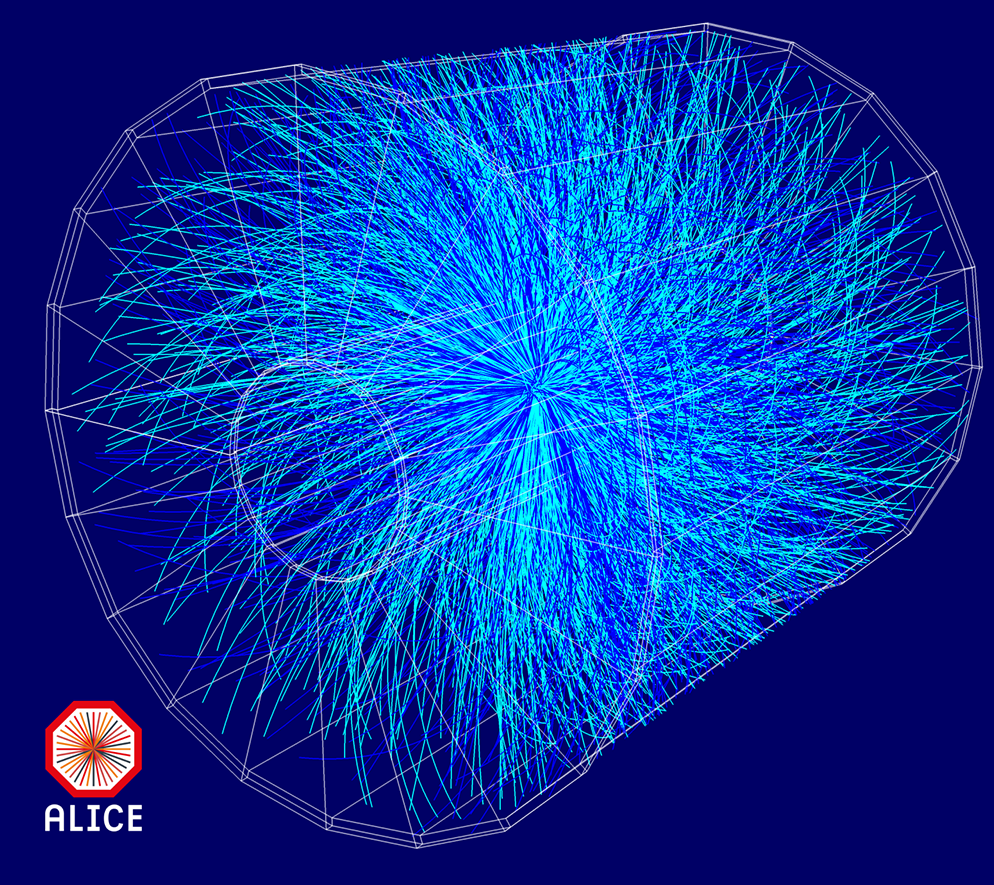

Throughout his career, Pierre Comon has sought to understand how a single solution can be found for mathematical problems. His first major research in this area began in 1989 and focused on blind source separation in telecommunications: how can the mixed signals from two transmitting antennas be separated without knowing where the antennas are located? “Even then, it was a matter of finding a single solution,” the researcher recalls. This research led him to develop techniques for analyzing signals and decomposing them into independent components in order to identify the source of each one.
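The minimal Python sketch below illustrates blind separation of two mixed signals. Several assumptions are ours, not the article’s: two hypothetical sources (a sine and a sawtooth), a hypothetical 2×2 mixing matrix standing in for the unknown antenna gains, and a brute-force angle search rather than any published algorithm. The mixtures are first whitened (decorrelated to unit variance), which leaves only an unknown rotation. That rotation is chosen to maximize the sum of squared fourth-order cumulants, a contrast of the kind used in independent component analysis. The separator never sees the sources or the mixing matrix.

```python
import math


def mean(v):
    return sum(v) / len(v)


def corr(u, v):
    """Pearson correlation between two equal-length sequences."""
    mu, mv = mean(u), mean(v)
    cov = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    su = math.sqrt(sum((a - mu) ** 2 for a in u))
    sv = math.sqrt(sum((b - mv) ** 2 for b in v))
    return cov / (su * sv)


def whiten(x1, x2):
    """Center two mixtures and decorrelate them to unit variance (2x2 PCA)."""
    m1, m2 = mean(x1), mean(x2)
    x1 = [p - m1 for p in x1]
    x2 = [q - m2 for q in x2]
    a = mean([p * p for p in x1])
    d = mean([q * q for q in x2])
    b = mean([p * q for p, q in zip(x1, x2)])
    phi = 0.5 * math.atan2(2 * b, a - d)  # angle that diagonalizes the covariance
    c, s = math.cos(phi), math.sin(phi)
    lam1 = a * c * c + 2 * b * s * c + d * s * s  # eigenvalues of the covariance
    lam2 = a * s * s - 2 * b * s * c + d * c * c
    z1 = [(c * p + s * q) / math.sqrt(lam1) for p, q in zip(x1, x2)]
    z2 = [(-s * p + c * q) / math.sqrt(lam2) for p, q in zip(x1, x2)]
    return z1, z2


def rotate(z1, z2, theta):
    c, s = math.cos(theta), math.sin(theta)
    y1 = [c * p + s * q for p, q in zip(z1, z2)]
    y2 = [-s * p + c * q for p, q in zip(z1, z2)]
    return y1, y2


def excess_kurtosis(y):
    """Fourth-order cumulant of a zero-mean, unit-variance sequence."""
    return mean([v ** 4 for v in y]) - 3.0


def separate(x1, x2, steps=180):
    """Whiten, then keep the rotation maximizing the sum of squared
    fourth-order cumulants (brute-force search over the angle)."""
    z1, z2 = whiten(x1, x2)
    best_theta, best_score = 0.0, -1.0
    for k in range(steps):
        theta = (math.pi / 2) * k / steps  # the contrast repeats every pi/2
        y1, y2 = rotate(z1, z2, theta)
        score = excess_kurtosis(y1) ** 2 + excess_kurtosis(y2) ** 2
        if score > best_score:
            best_theta, best_score = theta, score
    return rotate(z1, z2, best_theta)


# Hypothetical sources; 1850 = 50 * 37 samples covers whole periods of both.
N = 1850
s1 = [math.sin(2 * math.pi * t / 50) for t in range(N)]  # sine wave
s2 = [(t % 37) / 37 - 0.5 for t in range(N)]             # sawtooth
# Hypothetical mixing matrix, unknown to the separator.
x1 = [1.0 * p + 0.6 * q for p, q in zip(s1, s2)]
x2 = [0.4 * p + 1.0 * q for p, q in zip(s1, s2)]

y1, y2 = separate(x1, x2)
print([[round(abs(corr(y, s)), 3) for s in (s1, s2)] for y in (y1, y2)])
```

The sources come back only up to order, sign and scale. This is the sense in which the solution is “single” in this setting. Gaussian sources would defeat this contrast, because their fourth-order cumulants are zero.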

The results he proposed in this area during the 1990s had a major impact in both academia and industry. In 1988, he joined Thales, where he developed several patents used to analyze satellite signals. His pioneering article on independent component analysis has been cited by fellow researchers thousands of times and is still used by scientists today. According to Pierre Comon, this work laid the foundation for his research. “My results at the time allowed us to understand the conditions for the uniqueness of a solution, but did not always provide the solution itself. That required something else.” That “something else” is partly tensors, which he has shown to be valuable for finding single solutions.

His projects now focus on expanding the practical applications of his research. Beyond the environment, telecommunications and brain imaging, his work also extends to chemistry and public health. “One of the ideas I am currently very committed to is developing an affordable device for quickly determining the levels of toxic molecules in urine,” he explains. Such a device would quickly reveal contamination by polycyclic aromatic hydrocarbons, a category of harmful compounds found in paints. Here again, Pierre Comon must determine certain parameters in order to identify pollutant concentrations.

*The GIPSA-Lab is a joint research unit of CNRS, Université Grenoble Alpes and Grenoble INP.

[author title=”Pierre Comon: the mathematics of practical problems” image=”https://imtech-test.imt.fr/wp-content/uploads/2018/11/pierre-comon.jpg”]Pierre Comon is known in the international scientific community for his major contributions to signal processing. He was among the first to explore higher-order statistics for separating sources, and he established the foundational theory of independent component analysis, which has since become one of the standard tools for the statistical processing of data. More recently, his significant contributions include highly original results on tensor factorization.

The applications of Pierre Comon’s contributions are very diverse and include telecommunications, sensor networks, health and the environment. All these areas demonstrate the scope and impact of his work. His long industrial career, his strong desire to ground his scientific approach in practical problems and his great care in developing algorithms to implement the results he obtained all show how closely Pierre Comon’s qualities match the criteria for the 2018 IMT-Académie des Sciences Grand Prix.[/author]