Without noise, virtual images become more realistic

As computing capacity increases, computer-generated images are becoming ever more realistic. Yet generating these images is very time-consuming. Tamy Boubekeur, a specialist in 3D computer graphics at Télécom ParisTech, is on a quest to solve this problem. He and his team have developed a new technology that relies on noise-reduction algorithms to save computing resources while still delivering high-quality images.

Have you ever been impressed by the quality of an animated film? If you are familiar with cinematic video games or short films created with computer-generated images, you probably have. If not, keep in mind that the latest Star Wars and Fantastic Beasts and Where to Find Them movies were not shot on a satellite superstructure the size of a moon or by filming real magical beasts. The sets and characters in these big-budget films were primarily created using 3D models of astonishing quality. One of many examples of these impressive graphics is the demonstration given by the team from Unreal Engine, a video game engine, at the Game Developers Conference last March. Working in collaboration with Nvidia and ILMxLAB, they created a fictitious Star Wars scene in which all the characters and sets were entirely computer-generated.

To trick viewers, high-quality images are crucial. This is an area Tamy Boubekeur and his team from Télécom ParisTech specialize in. Today, most high-quality animation is produced using a specific type of image synthesis: photorealistic rendering based on path tracing. This method begins with a 3D model of the desired scene, with its structures, objects and people. Light sources are then placed in the artificial scene: the sun outside, or lamps inside. Paths are then traced starting from the camera (which defines what will be projected on the screen from the viewer’s vantage point) and moving towards the light sources. These are the paths light takes as it is reflected off the various objects and characters in the scene. Through these reflections, the changes in the light are associated with each pixel in the image.

“This principle is based on the laws of physics and Helmholtz’s principle of reciprocity, which makes it possible to ‘trace the light’ using the virtual sensor,” Tamy Boubekeur explains. Each time the light bounces off objects in the scene, the equations governing the light’s behavior and the properties of the modeled materials and surfaces define the path’s next direction. The spread of the modeled light therefore makes it possible to capture all the changes and optical effects that the eye perceives in real life. “Each pixel in the image is the result of hundreds or even thousands of paths of light in the simulated scene,” the researcher explains. The final color of the pixel is then generated by computing the average of the color responses from each path.
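The averaging step described above can be illustrated with a minimal sketch. It does not trace any real paths: `sample_path_color` is a hypothetical stand-in that returns a fixed color plus random noise, and `TRUE_COLOR` and the noise level are assumptions chosen purely for illustration.

```python
import random

# Hypothetical "converged" color of one pixel (an assumption for this sketch).
TRUE_COLOR = (0.8, 0.5, 0.2)


def sample_path_color(rng):
    """Stand-in for tracing one light path: the true color plus random noise."""
    return tuple(c + rng.gauss(0.0, 0.3) for c in TRUE_COLOR)


def pixel_color(num_paths, rng):
    """Average the color responses of `num_paths` simulated paths."""
    totals = [0.0, 0.0, 0.0]
    for _ in range(num_paths):
        for channel, value in enumerate(sample_path_color(rng)):
            totals[channel] += value
    return tuple(t / num_paths for t in totals)


rng = random.Random(0)
few = pixel_color(16, rng)     # early stop: the average is still noisy
many = pixel_color(4000, rng)  # many paths: the average settles near TRUE_COLOR
print("16 paths:  ", few)
print("4000 paths:", many)
```

In this kind of averaging, the error shrinks roughly with the square root of the number of paths, which is why clean pixels need so many of them.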

Saving time without noise

The problem is that achieving a realistic result requires a tremendous number of paths. “Some scenes require thousands of paths per pixel and per image: it takes a week of computing to generate the image on a standard computer!” Tamy Boubekeur explains. This is simply too long and too expensive. A film contains 24 images per second. At one week per image, a single machine would produce only about two seconds of film in a year of computing. This is where noise-reduction algorithms come in, specifically those developed by the team from Télécom ParisTech. “The point is to stop the calculations before reaching thousands of paths,” the researcher explains. “Since we have not gone far enough in the simulation process, the image still contains noise. Other algorithms are used to remove this noise.” The noise degrades the sharpness of the image, and its intensity depends on the type of scene, the materials, the lighting and the virtual camera.
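The order of magnitude can be checked with a few lines of arithmetic. This sketch uses only the figures quoted above (one week per image, 24 images per second); the 365-day year and the variable names are assumptions for illustration.

```python
DAYS_PER_IMAGE = 7        # "a week of computing" per image, as quoted above
IMAGES_PER_SECOND = 24    # frame rate of a film, as quoted above
DAYS_PER_YEAR = 365       # assumption: a non-leap year of continuous computing

images_per_year = DAYS_PER_YEAR / DAYS_PER_IMAGE
seconds_of_film_per_year = images_per_year / IMAGES_PER_SECOND

print(f"{images_per_year:.1f} images per year")
print(f"{seconds_of_film_per_year:.2f} seconds of film per year")
```

On a single machine this comes to roughly 52 images, or on the order of two seconds of film, per year.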

Research on noise reduction for computer-generated images has flourished since 2011. Today, many algorithms exist, based on different approaches, and competition to achieve satisfactory results is fierce. What is at stake is each program’s capacity to reduce calculation times while still producing a noise-free final result. The Bayesian collaborative denoiser (BCD) technology, developed by Tamy Boubekeur’s team, is particularly effective in achieving this goal. Developed from 2014 to 2017 as part of Malik Boughida’s thesis, the algorithms used in this technology are based on a unique approach.

Normally, noise removal methods attempt to guess the amount of noise present in a pixel based on properties in the observed scene—especially its visible geometry—in order to remove it. “We recognized that the properties of the scene being observed could not account for everything,” Tamy Boubekeur explains. “The noise also originates from areas not visible in the scene, from materials reflecting the light, the semi-transparent matter the light passes through or properties of the modeled optics inside the virtual camera.” A defocused background or a window in the foreground can create varying degrees of noise in the image. The BCD algorithm therefore only takes into account the color values associated with the hundreds of paths calculated before the simulation is stopped, just before the values are averaged into a color pixel. “Our model estimates the noise associated with a pixel based on the distribution of these values, by analyzing similarities with the properties of other pixels and removes the noise from them all at once,” the researcher explains.
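The general idea of comparing pixels through the distribution of their path values, rather than through scene geometry, can be sketched as follows. This is a deliberately simplified illustration and not the published BCD algorithm: it works on grayscale values, compares single pixels instead of image regions, and uses a plain average where BCD uses a Bayesian estimate. The function names, the bin count and the threshold are all assumptions.

```python
import random


def histogram(samples, bins=8):
    """Normalized histogram of one pixel's per-path values, clamped to [0, 1]."""
    counts = [0] * bins
    for value in samples:
        clamped = min(max(value, 0.0), 1.0)
        index = min(int(clamped * bins), bins - 1)
        counts[index] += 1
    return [c / len(samples) for c in counts]


def histogram_distance(h1, h2):
    """Chi-square-style distance: 0 for identical histograms, 2 for disjoint ones."""
    return sum((a - b) ** 2 / (a + b) for a, b in zip(h1, h2) if a + b > 0)


def denoise(pixel_samples, threshold=0.5):
    """Replace each pixel's mean by the mean over pixels with a similar distribution."""
    means = [sum(s) / len(s) for s in pixel_samples]
    hists = [histogram(s) for s in pixel_samples]
    result = []
    for h_i in hists:
        similar = [means[j] for j, h_j in enumerate(hists)
                   if histogram_distance(h_i, h_j) <= threshold]
        result.append(sum(similar) / len(similar))  # never empty: a pixel matches itself
    return result


# Toy "image": 16 dark pixels (true value 0.2), then 16 bright ones (true value 0.8),
# each with 64 noisy path values, as if the simulation had been stopped early.
rng = random.Random(1)
truth = [0.2] * 16 + [0.8] * 16
pixel_samples = [[t + rng.gauss(0.0, 0.1) for _ in range(64)] for t in truth]
noisy = [sum(s) / len(s) for s in pixel_samples]
denoised = denoise(pixel_samples)
```

Because pixels are only averaged with others whose value distributions look alike, the dark and bright groups in this toy example are smoothed separately instead of being blurred into each other.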

A sharp image of Raving Rabbids

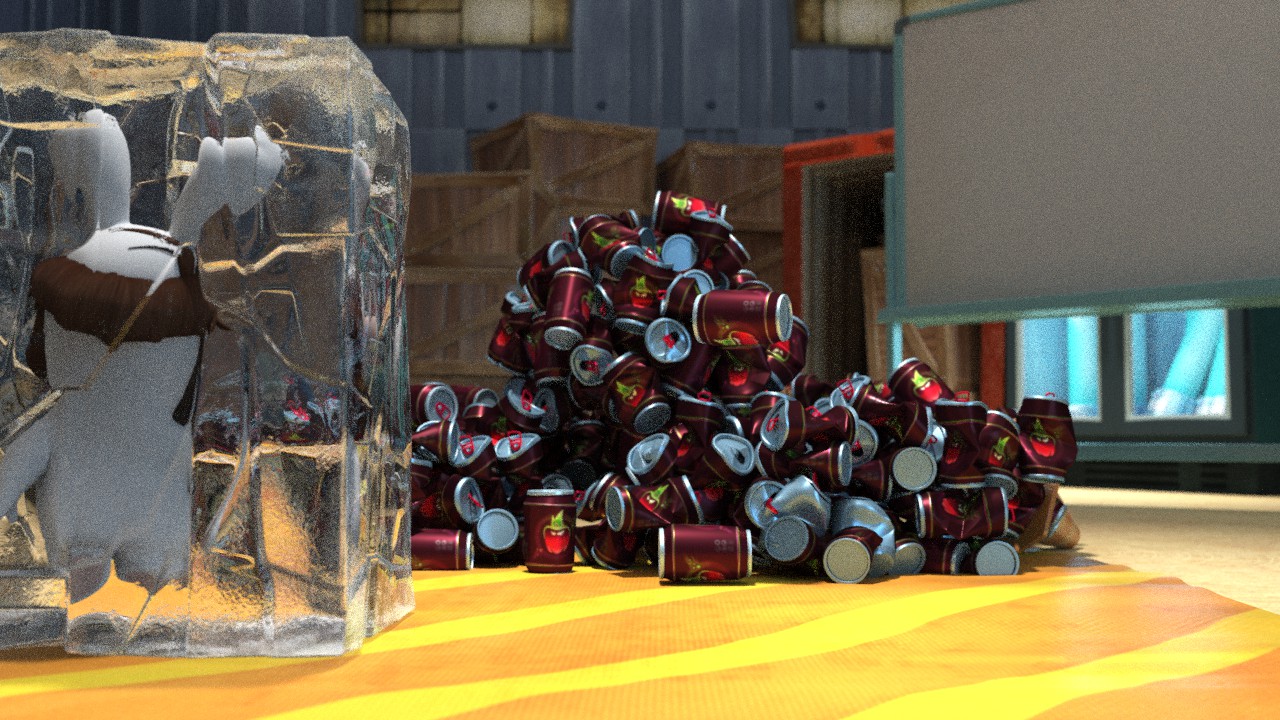

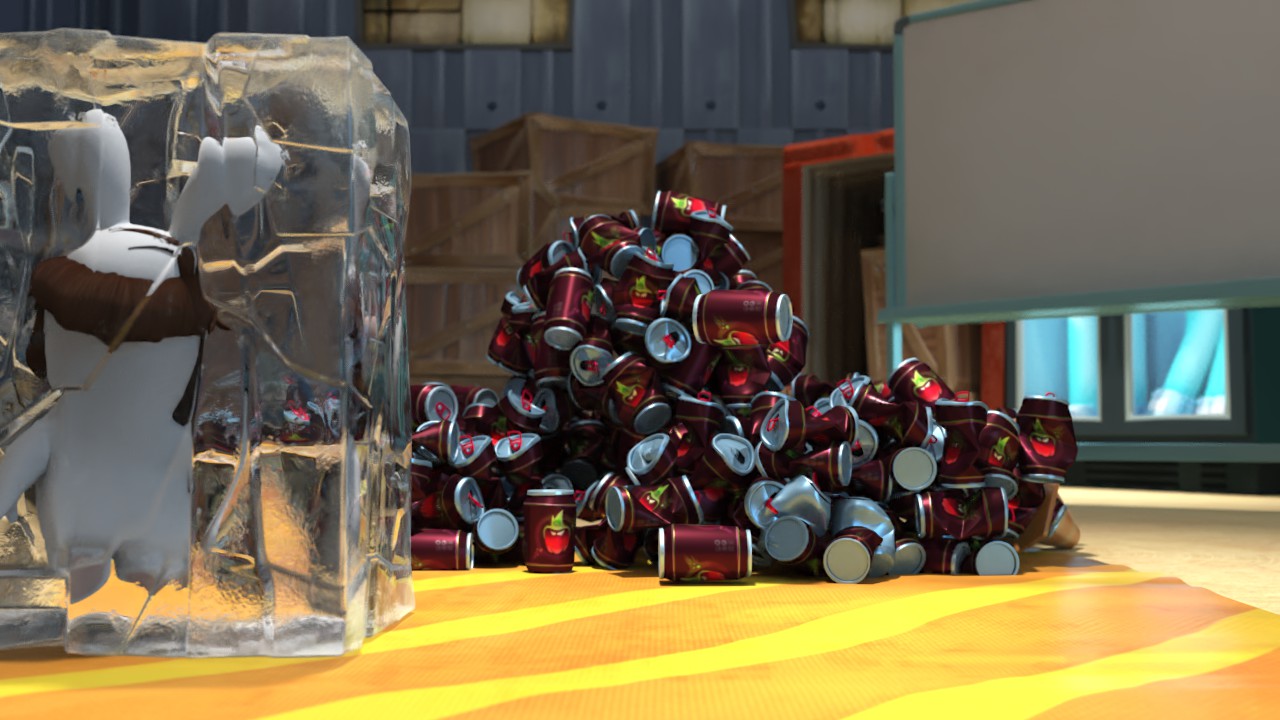

The BCD technology was developed within the PAPAYA project, which was launched under the French National Fund for Digital Society. The project was carried out in partnership with Ubisoft Motion Pictures to define the key noise-reduction challenges for professional animation. The company was impressed by the algorithms in the BCD technology and integrated them into its graphics production engine, Shining. It then used them to produce its animated series, Raving Rabbids. “They liked that our algorithms work with any type of scene, and that the technology is integrated without causing any interference,” Tamy Boubekeur explains. The BCD noise-remover does not require any changes in image calculation methods and can easily be integrated into systems and teams that already have well-established tools.

The source code for the technology has been released as open source on GitHub. It is freely available, particularly for animation film professionals who prefer open technology over more rigid proprietary technology. An update to the code adds an interactive preview module that allows users to adjust the algorithm’s parameters, making it easier to optimize the use of computing resources.

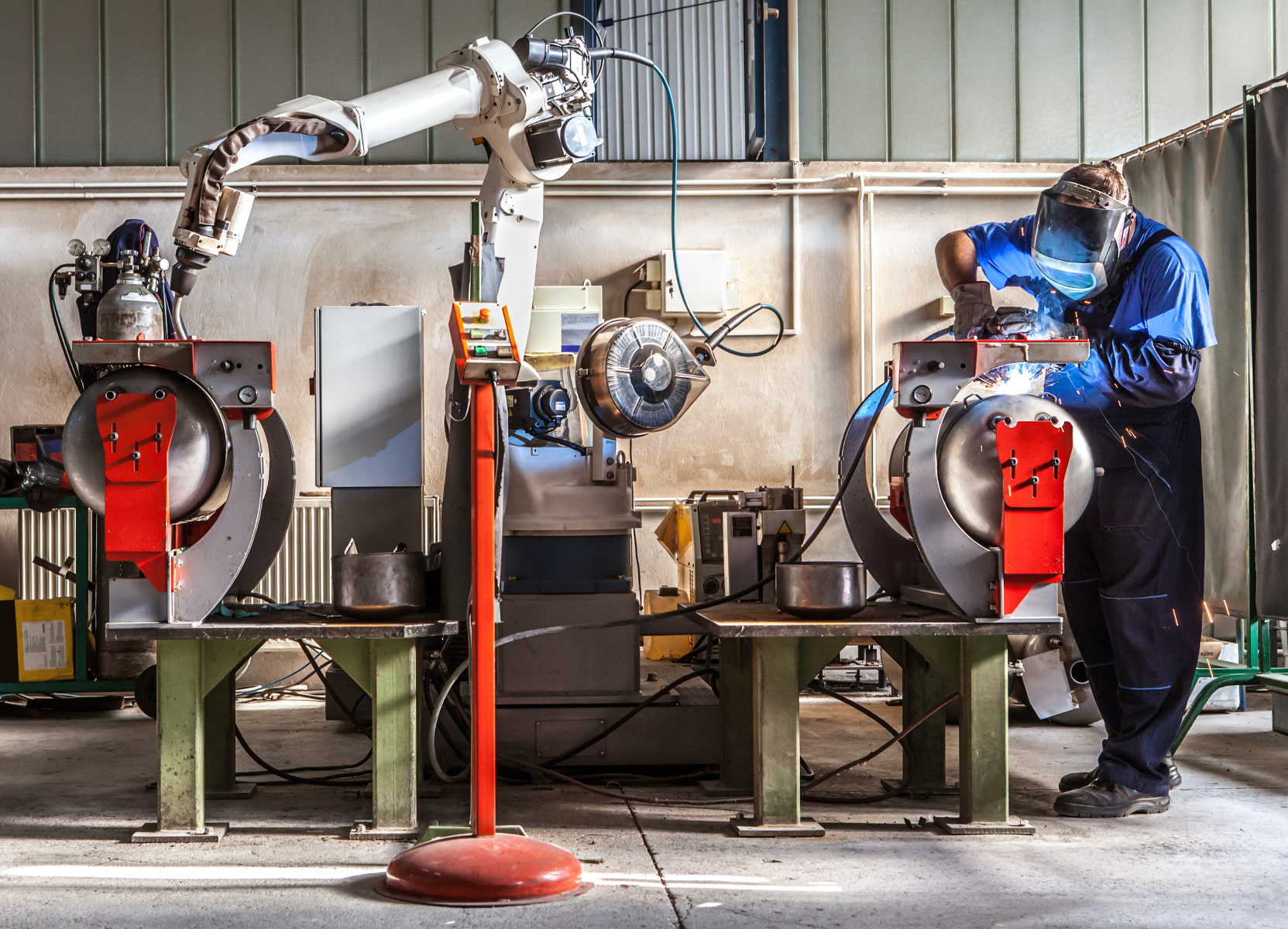

The BCD technology has thus proven its worth and has now been integrated into several rendering engines. It offers access to high-quality image synthesis, even for those with limited resources. Tamy Boubekeur points out that a film like Disney’s Big Hero 6 contains approximately 120,000 images and required 200 million hours of computing time, along with thousands of processors, to be produced in a reasonable timeframe. For students and amateur artists, these technical resources are inaccessible. Algorithms like those used in the BCD technology offer them the hope of more easily producing very high-quality films. The team from Télécom ParisTech is continuing its research to reduce the required computing time even further. Their objective: to develop new methods for distributing light simulation calculations across several low-capacity machines.


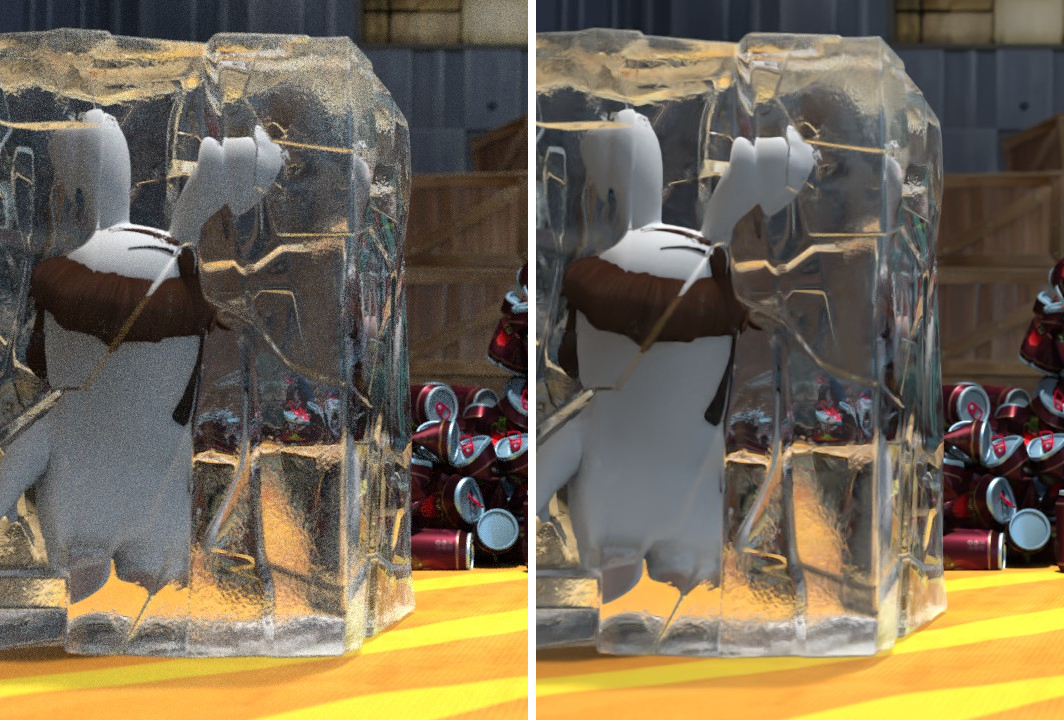

Illustration of BCD denoising a scene, before and after implementing the algorithm
