Smart homes: A world of conflict and collaboration

The progress made in decentralized artificial intelligence means that we can now imagine what our future homes will be like. The services a smart home offers its occupants are likely to be built on appliances that communicate and cooperate with each other autonomously. Today, this approach is considered the best way to manage the dynamic, data-rich household environment. Olivier Boissier and Gauthier Picard, AI researchers at Mines Saint-Étienne, are currently working on this technology. In this interview for I’MTech, they explain why the decentralized approach to AI is useful and how it works, using concrete examples from the home.

This article is part of our dossier “Far from fantasy: the AI technologies which really affect us.”

Can we think of smart homes as a simple network of connected objects?

Gauthier Picard: A smart home is made up of an assortment of very different objects. This is unlike industrial sensor networks, in which devices are designed with similar memory capacities and identical structures. In a house, we cannot put the same computing capacity in a light bulb as in an oven, for example. If the occupant expects a wide variety of operating scenarios in which the objects coordinate with each other, we must be able to take the objects’ differences into account. A smart home is also a very dynamic environment. You must be able to add devices whenever you want, such as an intelligent light bulb or a Raspberry Pi-type nanocomputer to control the blinds, without degrading performance for the user.

So, how do you make a house ‘smart’ despite all this complexity?

Olivier Boissier: We use what we call a multi-agent approach, which is central to our discipline of decentralized artificial intelligence. We use the term ‘decentralized’ rather than ‘distributed’ to highlight that making a house ‘smart’ takes more than distributing knowledge between the different devices: the decisions also need to be decentralized. We use the term agent to describe an object, a service that manages several objects, or a service that itself manages several services. Our aim is for these agents to organize themselves through rules that allow them to exchange information as effectively as possible. But not every household object will become an agent because, as Gauthier said, some objects lack the computing capacity needed to organize themselves. One of the biggest questions we ask ourselves, therefore, is which objects should remain simple objects that perceive or execute things, such as a sensor or a small LED, and which should become agents.
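To make the three kinds of agent more concrete, here is a minimal sketch, assuming a simple composite structure: plain devices only perceive or act, while agents manage devices and, at the next level up, other agents. The class names, the capacity score and the threshold that decides whether a device can host an agent are all hypothetical illustrations, not the researchers’ actual model.

```python
from dataclasses import dataclass, field

@dataclass
class Device:
    """A simple object that only perceives or executes (e.g. a sensor or an LED)."""
    name: str
    compute_capacity: int  # hypothetical abstract capacity score

@dataclass
class Agent:
    """An agent that manages devices and/or other agents (a service of services)."""
    name: str
    devices: list = field(default_factory=list)
    sub_agents: list = field(default_factory=list)

    def managed_devices(self):
        """Collect every device reachable through this agent and its sub-agents."""
        found = list(self.devices)
        for sub in self.sub_agents:
            found.extend(sub.managed_devices())
        return found

AGENT_THRESHOLD = 10  # hypothetical cut-off for hosting an agent on a device

def can_host_agent(device: Device) -> bool:
    """Assumed rule: only devices with enough computing capacity become agents."""
    return device.compute_capacity >= AGENT_THRESHOLD

led = Device("living_room_led", compute_capacity=1)
bulb = Device("smart_bulb", compute_capacity=4)
pi = Device("raspberry_pi_blinds", compute_capacity=50)

lighting = Agent("lighting_service", devices=[led, bulb])  # service managing objects
blinds = Agent("blinds_service", devices=[pi])
home = Agent("home_service", sub_agents=[lighting, blinds])  # service managing services

print([d.name for d in home.managed_devices()])
# ['living_room_led', 'smart_bulb', 'raspberry_pi_blinds']
print([d.name for d in home.managed_devices() if can_host_agent(d)])
# ['raspberry_pi_blinds']
```

A single numeric threshold is of course a simplification; in practice, deciding which objects become agents is exactly the open question Olivier Boissier describes.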

Can you show how this approach works with a concrete example from a smart home?

GP: If we again take light bulbs and lighting as an example, imagine a user asking for the light level in their living room to fall by 40% whenever they are there after 9pm. The user doesn’t care which object decides or acts to carry out the request; what matters to them is having less light. It’s up to the system as a whole to optimize its decisions: which light bulb to turn off, whether the TV also needs to be turned off because it emits light even when nobody is watching it, or whether the blinds can stay open because it’s still daylight outside. All of these decisions need to be made collectively, potentially under constraints set by the occupant, who might want to lower the electricity bill, for example. No centralized entity manages all of these decisions; instead, each element reacts to what the other elements do. If it is summer, and therefore still light outside, does the house need more lights on? If it does, the agents will first turn on the bulbs that consume the least energy and emit the most light. If this is not enough, other agents will turn on other light bulbs.
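The last part of this example, where the most efficient bulbs switch on first and others join only if needed, can be sketched as follows. This is a simplified, sequential simulation under stated assumptions: the lumen and wattage figures are made up, light levels are treated as simple additive units, and the agents are visited in order of efficiency rather than coordinating through a real protocol. Each agent only looks at the light level left by the agents before it.

```python
from dataclasses import dataclass

@dataclass
class BulbAgent:
    """A lighting agent; lumens and watts are assumed example values."""
    name: str
    lumens: float
    watts: float
    on: bool = False

    @property
    def efficiency(self) -> float:
        # Light emitted per unit of energy consumed
        return self.lumens / self.watts

    def react(self, current_light: float, target_light: float) -> float:
        """Switch on only if the light produced so far is still below the target."""
        if not self.on and current_light < target_light:
            self.on = True
            return self.lumens
        return 0.0

def raise_light(agents, ambient: float, target: float) -> float:
    """Let agents react one after another, most efficient first.

    Each agent only sees the light level resulting from earlier agents.
    """
    level = ambient
    for agent in sorted(agents, key=lambda a: a.efficiency, reverse=True):
        level += agent.react(level, target)
    return level

bulbs = [
    BulbAgent("halogen", lumens=700.0, watts=50.0),
    BulbAgent("led", lumens=800.0, watts=8.0),
    BulbAgent("cfl", lumens=600.0, watts=12.0),
]
print(raise_light(bulbs, ambient=200.0, target=1500.0))  # 1600.0
print([b.name for b in bulbs if b.on])  # ['led', 'cfl']
```

The least efficient bulb (the halogen) stays off because the two more efficient agents have already met the target. The 40% dimming request would follow the same pattern in reverse, with the least efficient light sources switching off first.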

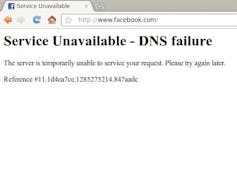

You said that the decision was not centralized. Why don’t you just have one decision-making device which manages all the objects?

GP: The problem with a centralized solution is that everything depends on a single device. With this approach, it is very likely that all the information will be stored in the cloud. If the network is faulty or overloaded, the system’s performance will suffer. The advantage of the multi-agent approach is that it keeps data close to where the decision is made. Since everything is done locally, decisions are made faster and the system is more secure. The system is therefore more resilient and better able to meet users’ privacy requirements.

OB: But we are not saying that centralized solutions are necessarily worse. The multi-agent approach requires efforts to coordinate the objects and services. It should be preferred in complex environments, where it is necessary to have data close to where the decision is being made. If a centralized management algorithm works for a precise and simple action, then that’s fine. The multi-agent approach becomes interesting when there are large quantities of data which need to be processed quickly. This is the case when a smart home includes several users with multiple, sometimes conflicting, functions.

How can functions become conflicting?

OB: In the case of lighting, a conflict would arise if two users in the same room have different preferences. The same agents are asked to carry out two incompatible decision-making processes. This situation can be reduced to a conflict over resources. Conflicts like this are very likely to occur because we are in a dynamic environment. The agents draw up action plans to respond to a user’s demands, but if another user enters the room, the plan will be disrupted. Conflicts therefore can’t always be predicted in advance; they often only appear while the plan is being executed. In some cases, simple rules allow the problem to be resolved quickly, for example when priority functions such as emergency assistance or building security take precedence over entertainment functions. In other cases, mechanisms for resolving conflicts between agents must be designed.
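The “simple rules” case can be illustrated with a small priority table. The sketch below assumes a hypothetical ordering of functions (emergency above security, security above comfort, comfort above entertainment) and requests that each ask for a light level. When one function clearly outranks the others, the rule settles the conflict; when requests of equal priority disagree, the function hands the conflict on to another mechanism such as negotiation.

```python
from dataclasses import dataclass

# Hypothetical priority ordering, following the examples given in the interview
PRIORITY = {"emergency": 3, "security": 2, "comfort": 1, "entertainment": 0}

@dataclass(frozen=True)
class Request:
    user: str
    function: str     # key into PRIORITY
    light_level: int  # requested level, in percent

def resolve(requests):
    """Settle a conflict with priority rules when possible.

    Returns (winner, []) if a simple rule decides, or (None, tied_requests)
    if the top-priority requests disagree and another mechanism is needed.
    Assumes `requests` is non-empty.
    """
    top = max(PRIORITY[r.function] for r in requests)
    best = [r for r in requests if PRIORITY[r.function] == top]
    if len({r.light_level for r in best}) == 1:
        return best[0], []
    return None, best

winner, tied = resolve([Request("alarm", "emergency", 100),
                        Request("bob", "entertainment", 10)])
print(winner.user)  # alarm

winner, tied = resolve([Request("alice", "comfort", 30),
                        Request("bob", "comfort", 70)])
print(winner, len(tied))  # None 2
```

The second call corresponds to the two-users-in-one-room situation: both requests have the same priority, so no rule applies and the agents need a conflict-resolution technique instead.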

GP: Negotiation is a good example of a technique that solves this problem. Because the conflict is a fight over a resource, each agent can bid for the resources it wishes to use. If it wins a bid, it accumulates a debt that prevents it from winning the next one. Over time, the agents regulate themselves. By adopting an economic approach between agents, we can also try to reach a Nash equilibrium, in which each agent maximizes its outcome given what the other agents choose to do, so that no agent gains by changing its choice on its own.
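The debt mechanism described here can be sketched as a repeated auction. This is one simple scheme, assumed for illustration rather than taken from the researchers’ work: each agent has a fixed valuation for the resource, its effective bid is that valuation minus its accumulated debt, and the winner of each round adds a fixed penalty to its debt. The agent names, valuations and penalty are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class BiddingAgent:
    name: str
    valuation: float  # how much the agent wants the resource (assumed value)
    debt: float = 0.0

    def bid(self) -> float:
        # The more debt an agent has accumulated, the lower its effective bid
        return self.valuation - self.debt

def run_auctions(agents, rounds: int, penalty: float = 5.0):
    """Run repeated auctions for one shared resource; the winner takes on debt."""
    winners = []
    for _ in range(rounds):
        winner = max(agents, key=lambda a: a.bid())
        winner.debt += penalty
        winners.append(winner.name)
    return winners

agents = [BiddingAgent("alice_light", 10.0), BiddingAgent("bob_light", 8.0)]
print(run_auctions(agents, rounds=6))
# ['alice_light', 'bob_light', 'alice_light', 'bob_light', 'alice_light', 'bob_light']
```

Although alice_light values the resource more, the debt it takes on after each win lowers its next bid below bob_light’s, so the two agents end up taking turns. This sketch only covers the bidding side; it does not compute a Nash equilibrium.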

How do you make all of these interactions possible between agents?

OB: There are several ways for agents to self-organize. One is stigmergy, in which the agents don’t communicate with each other directly; they simply react to what is happening around them, including information that other agents have placed in their environment, and this allows them to respond to the user’s request. Another method is to introduce a global behavior policy for all the agents, such as a privacy policy, and leave the agents to interpret it collectively. In this case, the user simply states what they want to remain confidential and the agents share information accordingly. We try to combine these approaches by adding further coordination protocols, such as the conflict management rules mentioned above.
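Stigmergy can be sketched with a shared environment in which agents leave marks instead of sending messages. In this minimal example, the “evaporation” of marks over time is borrowed from classic ant-colony models and is an assumption, as are the class names and numeric values: a motion-sensor agent deposits a mark when it detects someone, and a light agent, which never talks to the sensor, simply switches on while the mark in its room is strong enough.

```python
class Environment:
    """Shared medium: agents leave marks here instead of messaging each other."""

    def __init__(self, evaporation: float = 0.5):
        self.marks = {}
        self.evaporation = evaporation  # assumed decay factor per time step

    def deposit(self, room: str, amount: float) -> None:
        self.marks[room] = self.marks.get(room, 0.0) + amount

    def read(self, room: str) -> float:
        return self.marks.get(room, 0.0)

    def step(self) -> None:
        # Marks fade over time, so stale information stops influencing agents
        for room in self.marks:
            self.marks[room] *= self.evaporation

class MotionSensorAgent:
    def __init__(self, room: str):
        self.room = room

    def perceive(self, env: Environment, motion: bool) -> None:
        if motion:
            env.deposit(self.room, 1.0)

class LightAgent:
    def __init__(self, room: str, threshold: float = 0.3):
        self.room = room
        self.threshold = threshold  # assumed sensitivity to marks
        self.on = False

    def act(self, env: Environment) -> None:
        # React only to what is in the environment, never to the sensor directly
        self.on = env.read(self.room) >= self.threshold

env = Environment(evaporation=0.5)
sensor = MotionSensorAgent("living_room")
light = LightAgent("living_room")
history = []
for motion in [True, False, False]:
    sensor.perceive(env, motion)
    light.act(env)
    history.append(light.on)
    env.step()
print(history)  # [True, True, False]
```

The light stays on for one step after the motion stops and then switches off once the mark has faded below its threshold, without any direct exchange between the two agents.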

GP: All the agents have access to a definition of their environment. They know the rules and the roles they can play, and they adapt to this environment. It’s a bit like learning the Highway Code so that you know how to act when you approach a crossroads: you know what can happen and what other motorists are supposed to do. If you find yourself in a situation that doesn’t follow the usual rules, for example because of a traffic jam, or because an accident has happened right in the middle of the crossroads, you adapt the rules. Agents should be able to do the same thing: react and reorganize the system so that they can keep responding to the user’s demands.
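A very reduced version of this idea can be sketched with a shared organizational specification that lists, for each role, the actions allowed in order of preference. The roles, action names and fallback order below are hypothetical. An agent follows the usual rule when the environment allows it and falls back to the next allowed action when it does not, which is a much simpler form of adaptation than the reorganization described above.

```python
from typing import Optional

# Hypothetical organizational specification: each role lists its allowed
# actions in order of preference (the "usual rules").
ORG_SPEC = {
    "reduce_light": ["dim_bulb", "close_blinds", "turn_off_tv"],
    "increase_light": ["open_blinds", "brighten_bulb"],
}

class RoleAgent:
    def __init__(self, name: str, role: str):
        self.name = name
        self.role = role

    def choose_action(self, available: set) -> Optional[str]:
        """Use the preferred action if possible; otherwise adapt to the situation."""
        for action in ORG_SPEC[self.role]:
            if action in available:
                return action
        return None  # no allowed action applies: the organization itself must change

agent = RoleAgent("living_room_dimmer", "reduce_light")
print(agent.choose_action({"dim_bulb", "close_blinds"}))     # dim_bulb
print(agent.choose_action({"close_blinds", "turn_off_tv"}))  # close_blinds
print(agent.choose_action(set()))                            # None
```

The second call plays the role of the accident at the crossroads: the bulb cannot be dimmed, so the agent switches to the next action its role permits.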

With regard to this general multi-agent approach for smart homes, what can we already do and what remains a research question?

OB: Currently, many studies provide solutions to the problems we have raised that are effective in theory. We know how to build protocols that fulfil the organizational functions, and we know how to configure behavioral policies among agents. However, a lot of work remains to move from theory to practice. In a real smart home, with large amounts of information arriving at any time, the system must be able to process that data. From a practical point of view, we also need to answer fundamental questions about what a smart home should be for its users. Should they control absolutely everything, or can we leave decisions to agents without user control?

Is it realistic to consider the control being taken away from the occupant?

GP: We have to understand that we aren’t dealing here with neural networks that make decisions like black boxes. In the multi-agent approach, each agent keeps a history of its decisions, along with the plan it put in place to reach each decision and the reasons for creating that plan. So even if the decision is left to the agent, that doesn’t mean the user won’t know how it came about. There is still a control mechanism, and the user can change their preferences if they need to. The agent does not decide without the user having any way of knowing what it is doing.
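The decision history described here can be sketched as a log that the agent writes every time it commits to a plan, recording the goal, the plan and the reasons behind it, and that it can read back to explain itself. The record format, class names and example strings are assumptions for illustration, not the format used in the researchers’ systems.

```python
from dataclasses import dataclass, field
from datetime import datetime

@dataclass
class DecisionRecord:
    goal: str
    plan: list
    reasons: list
    timestamp: datetime = field(default_factory=datetime.now)

class ExplainableAgent:
    def __init__(self, name: str):
        self.name = name
        self.history = []  # every decision is kept, so it can be inspected later

    def decide(self, goal: str, plan: list, reasons: list) -> list:
        """Record the goal, the chosen plan and the reasons before acting."""
        self.history.append(DecisionRecord(goal, list(plan), list(reasons)))
        return plan

    def explain(self, goal: str) -> str:
        """Return a readable account of the most recent decision for this goal."""
        for record in reversed(self.history):
            if record.goal == goal:
                return (f"{self.name} pursued '{goal}' with plan {record.plan} "
                        f"because: {'; '.join(record.reasons)}")
        return f"{self.name} has no record for '{goal}'"

agent = ExplainableAgent("lighting_agent")
agent.decide("reduce light by 40%",
             ["dim_bulb", "turn_off_tv"],
             ["it is after 9pm in the living room",
              "the occupant asked to lower the electricity bill"])
print(agent.explain("reduce light by 40%"))
```

Because the reasons are stored alongside the plan, the user can see why the agent acted as it did and adjust their preferences if the reasoning does not suit them.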

OB: It’s an approach to AI that is different from what people imagine artificial intelligence to be. It is not yet as well known as the machine learning approach. Decentralized AI is still difficult for the general public to understand, but it has more and more uses, which means it is becoming increasingly necessary. Twenty years ago, systems often relied on a centralized solution. Today, notably with the development of the IoT (Internet of Things), decentralization is a necessity, and decentralized AI is recognized as the most logical solution for uses such as smart homes or Smart Cities.