Recycling carbon fibre composites: a difficult task

Carbon fibre composite materials are increasingly widespread, and their use grows every year. Recycling them remains difficult, but it is nevertheless necessary at the European level for environmental, economic and legislative reasons. At IMT Mines Albi, researchers are working on a new method: vapo-thermolysis. While this process offers promising results, many steps remain before a complete recycling chain can be set up.

The new shining stars of aviation giants Airbus and Boeing, the A350 and the 787 Dreamliner, are also symbols of the growing prevalence of composite materials in our environment. Aircraft, along with wind turbines, cars and sports equipment, increasingly contain these materials. Carbon fibre composites still represent a minority of the composites on the market (far behind fibreglass), but their use is growing by 10 to 15% per year. Manufacturers must now address the question of what will become of these materials when they reach the end of their lives. In today's society, where considering the environmental impact of products is no longer optional, the recycling question cannot be ignored.

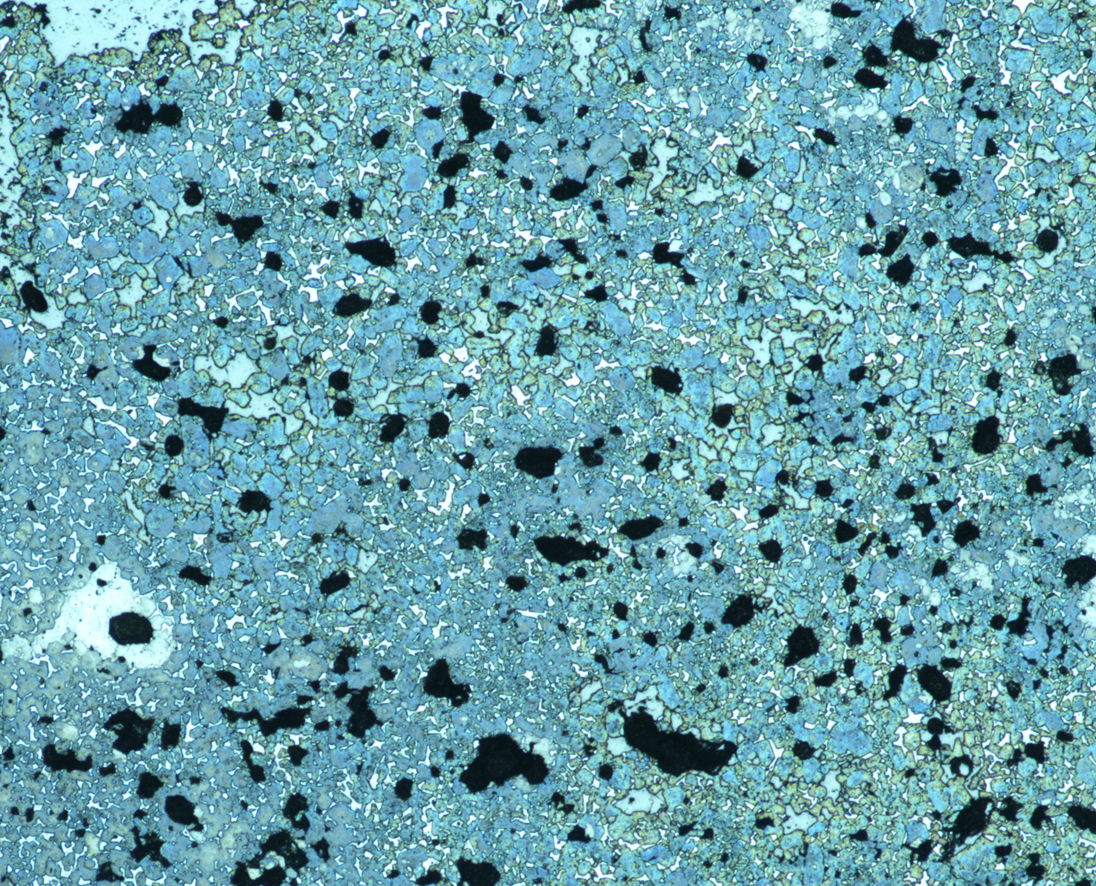

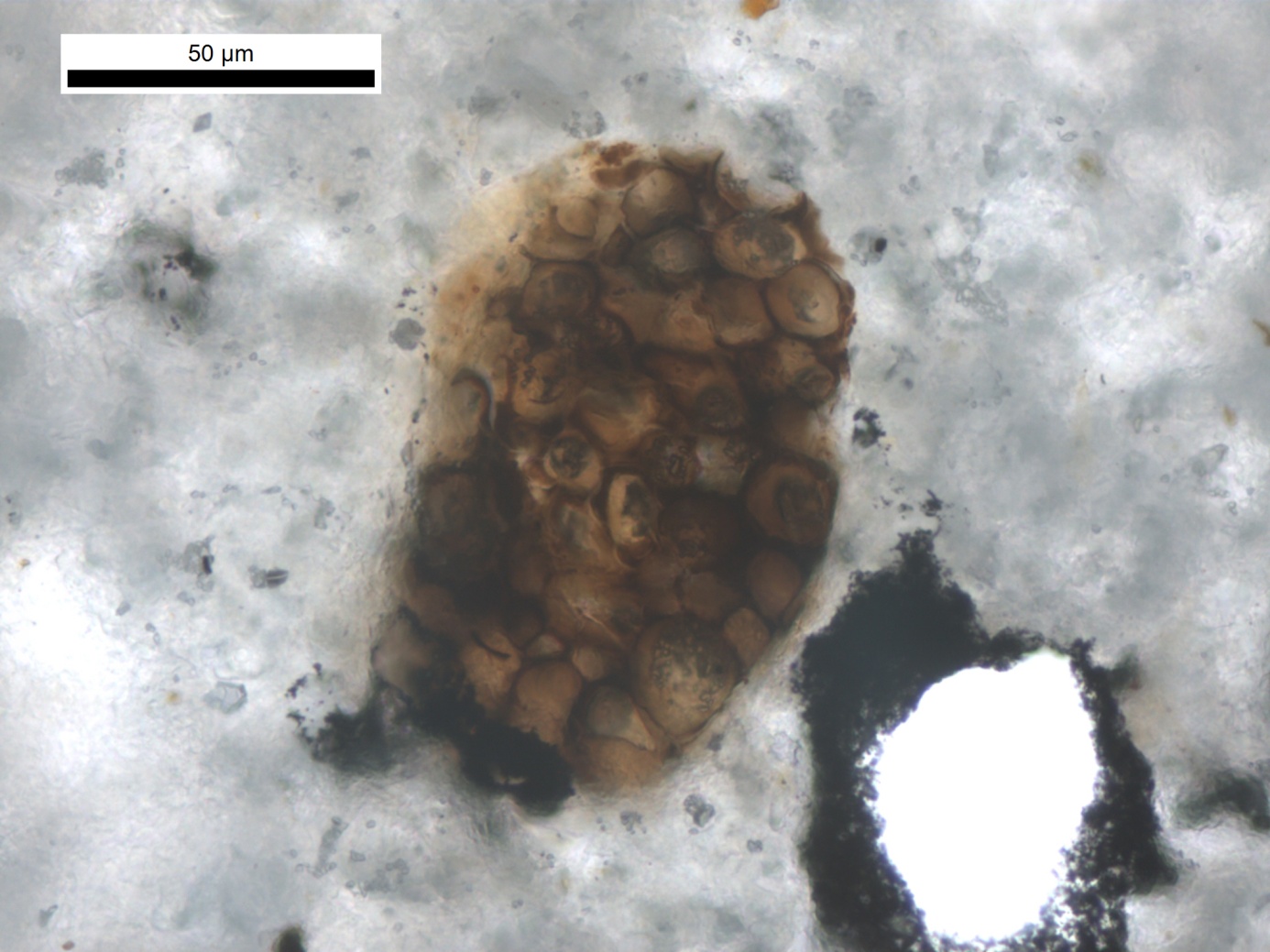

At IMT Mines Albi, research carried out by Yannick Soudais[1] and Gérard Bernhart[2] addresses this issue. These polymer and materials chemistry researchers are developing a new process for recycling carbon fibre composites. This is no small task, since it requires separating the fibre, present as a textile or as unidirectional filaments, from the solid polymer resin that forms the matrix in which it is embedded. Two main processes currently exist to separate the fibre from the resin: pyrolysis and solvolysis. The first thermally decomposes the matrix under an inert nitrogen atmosphere so that part of the fibre is not burned along with it. The second is a chemical method based on solvents, which is very laborious because it requires high temperatures and pressures.

The process developed by the Albi-based researchers, called "vapo-thermolysis", combines aspects of these two processes. It is currently one of the most promising solutions in the world for moving toward large-scale reuse of carbon fibres. Besides Albi, only a handful of research centres in the world are working on this topic, mainly in Japan, China and South Korea. "We use superheated water vapour which acts as a solvent and induces chemical degradation reactions," explains Yannick Soudais. Unlike pyrolysis, the process requires no nitrogen, and unlike the traditional chemical method, it takes place at atmospheric pressure. In short, vapo-thermolysis is easier to implement and control on an industrial scale.

After recovery, reuse

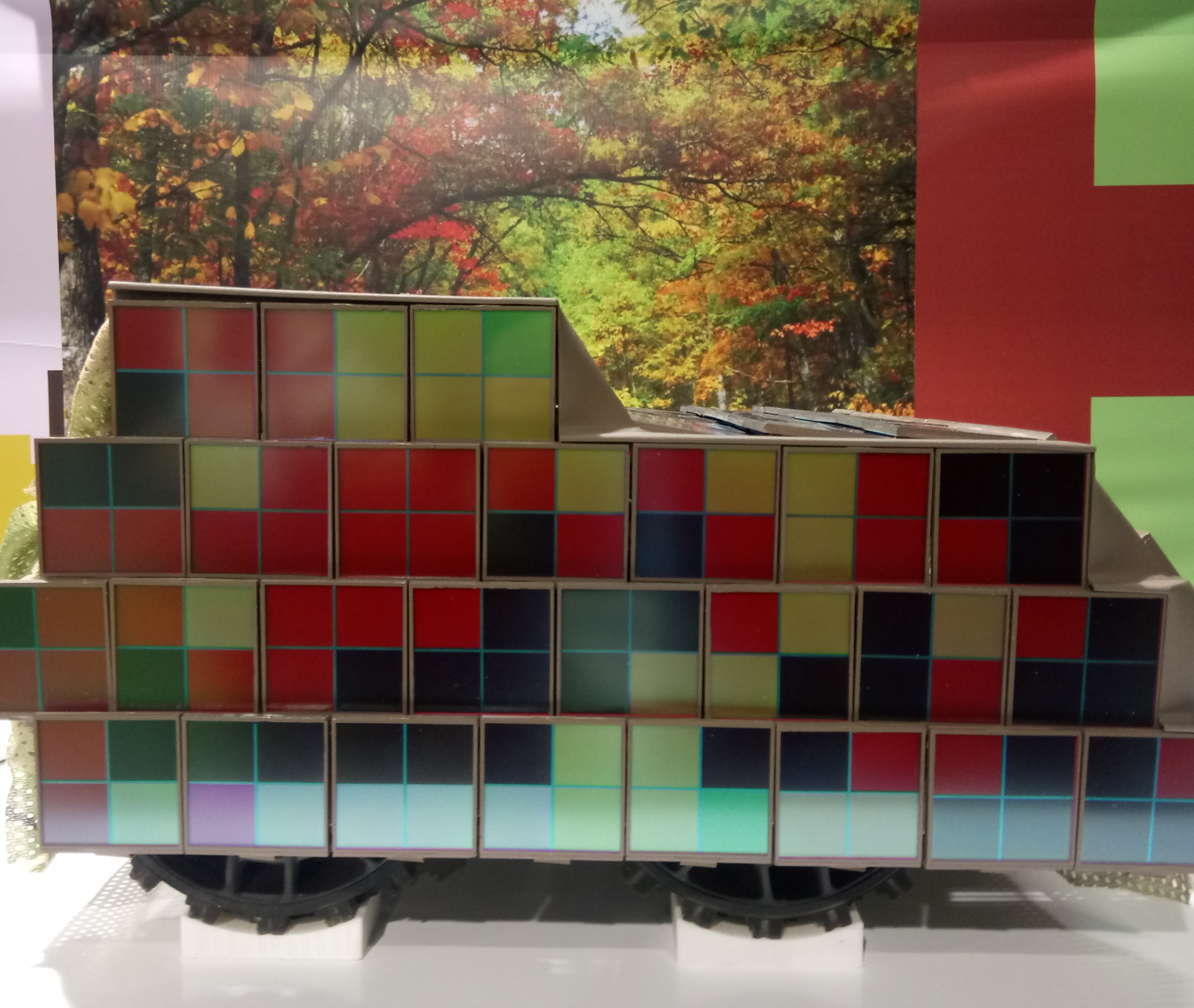

The simplest way to reuse carbon fibres is to spread the bundle of interlinked fibres out on a flat surface and reuse it in this form, as a mat. Because the fibres in such a mat are tangled rather than aligned, it is mainly suited to composites for decorative parts rather than structural parts. The recovered fibres can also be cut shorter and used as reinforcement in polymer pellets. This approach makes it possible to produce automobile parts by injection moulding, for example. The researchers have carried out demonstrations of this type of reuse in collaboration with the Toulouse-based company Alpha Recyclage Composites (ARC).

The real challenge, however, is to reuse these fibres in higher-performance applications. To do so, "we have to be able to make spun fibres from short fibres," says Gérard Bernhart. "We're carrying out extensive research on this topic in partnership with ARC because so far, no one in the world has been able to do that." These prospects involve techniques specific to the textile industry, which is why the researchers have formed a partnership with the French Institute of Textiles and Clothing (IFTH). For now, the work is still exploratory and focuses on identifying technologies that could be used to develop reshaping processes. One idea, for example, is to use ribbed rollers to form homogeneous yarns, then a card to create a uniform web, followed by drawing and spinning stages.

For manufacturers of composite parts, these prospects open the door to more economically competitive materials. Recycling is of course an environmental issue, and some regulations impose requirements on manufacturers. Carmakers, for example, must ensure that 85% of a vehicle's mass can be recycled at the end of its life, whatever parts the vehicle contains. But mature, efficient recycling processes also help lower the cost of manufacturing carbon fibre composite parts.

New carbon fibre costs €25 per kilo, and as much as €80 per kilo for fibres produced for high-performance materials. "The price is mainly explained by the material and energy costs involved in fibre manufacturing," says Gérard Bernhart. Because recycled fibres do not have to be manufactured from scratch, they could open up new industrial opportunities. Far from being separate from environmental concerns, this economic aspect could in fact become a driving force for developing an effective carbon fibre recycling chain.

[1] Yannick Soudais is a researcher at the Rapsodee laboratory, a joint research unit of IMT Mines Albi and CNRS.

[2] Gérard Bernhart is a researcher at the Clément Ader Institute, a joint research unit of IMT Mines Albi, ISAE, INSA Toulouse, University Toulouse III-Paul Sabatier and CNRS.