Is dark matter the key to the medical scanner of the future?

A team of researchers at IMT Atlantique is developing a new type of medical scanner called XEMIS. To create the device, the team drew on their previous research in fundamental physics and the detection of dark matter, using liquid xenon technology. The first tests on living subjects are planned with small animals, and the scientists expect the device to significantly reduce both the injected dose and the examination time while improving the resolution of the images produced.

This article is part of our dossier “When engineering helps improve healthcare”.

For the past 10 years, researchers at IMT Atlantique have been tracking dark matter as part of the XENON international collaboration. Their approach, which uses liquid xenon, currently makes them world leaders in the effort to solve one of the biggest mysteries of the universe: what is dark matter made of? Although that question remains unanswered, the team’s fundamental physics research has already given rise to new ideas… in medicine! The innovations developed by the XENON collaboration to detect dark matter have also proven extremely useful for medical imaging, as they could make scanners much more efficient than those in use today.

Improving medical imaging techniques is one of the great challenges for the future of medicine. Personalized healthcare and follow-up care, as well as the prediction of illnesses, mean that doctors need to acquire more patient data, more often. However, having to remain still for 30 minutes straight is not pleasant for patients, especially now that they are being asked to have scans more often! For hospitals, more examinations means more machines and more staff. Faster imaging techniques are therefore not only more practical for patients, but also more economical for health services.

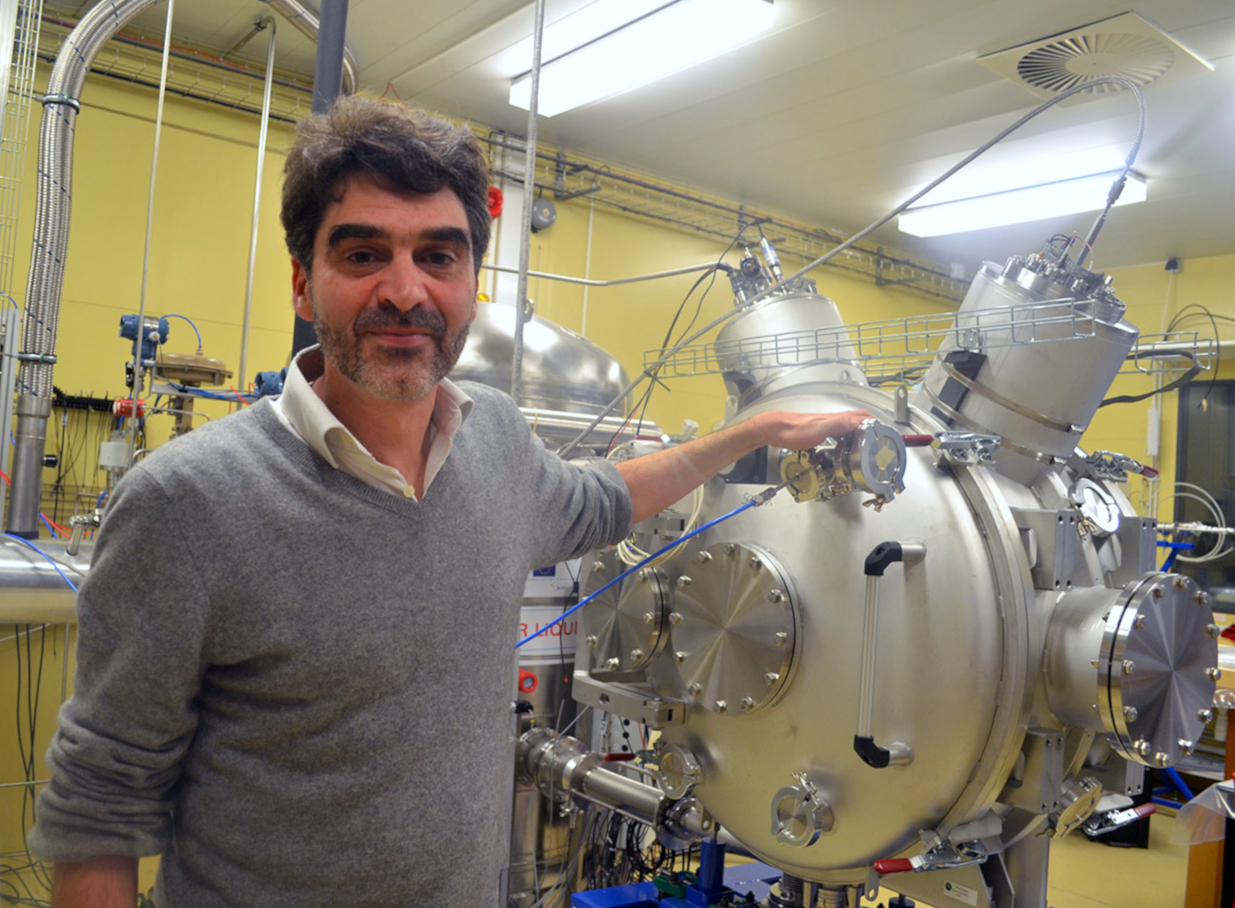

All over the world, several initiatives are competing to develop the scanners of the future, and all of them share the same basic structure as the scanners in use today. “This is an area of study where investment is important”, states Dominique Thers, a researcher in fundamental physics at IMT Atlantique and French coordinator of the XENON collaboration. Manufacturers are improving scanners incrementally, for example by increasing the size or resolution of their cameras. “Since we are researching dark matter, our technology comes from another field. This means that our solution is completely different from the routes that are currently being explored”, highlights Thers, while reminding us that his team’s work is still at the research stage and not yet ready for industrial use.

A xenon bath

The physicists’ solution has been named XEMIS (Xenon Medical Imaging System). Although he uses the word ‘camera’, Dominique Thers’ description of the device is completely different from anything we might imagine. “XEMIS is a cylindrical bathtub filled with liquid xenon”. Imagine a donut laid on its side and stretched out lengthways, so that the hole in the middle forms a tube. The patient lies inside the hole, surrounded by the tube’s 12 cm thick wall, which is filled with liquid xenon. Although XEMIS is shaped like any other scanner, its imaging principle is completely different: the entire tube acts as the ‘camera’.

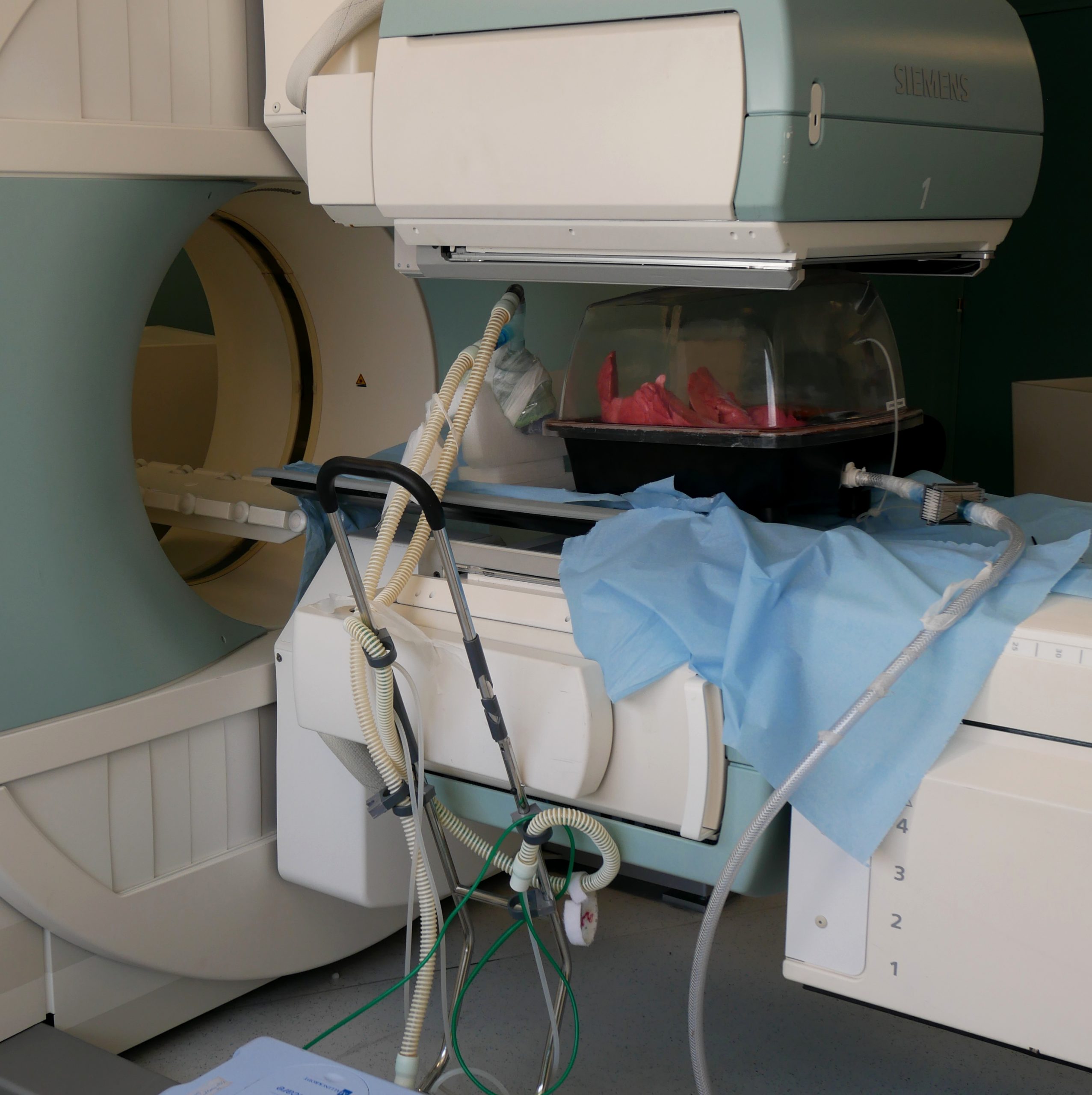

To understand this, let’s go into more detail. There are currently two main types of medical scanner: the CT scanner and the PET scanner. The CT scanner uses an X-ray source whose beam passes through the patient’s body and is picked up by a detector on the other side of the tube. For the PET scanner, by contrast, the patient is injected with a weakly radioactive substance. This substance emits ionizing radiation, which is detected by a ring of sensors that moves along the patient in the tube.

However, both devices share a limitation known as the parallax effect. Since the sensors face the center of the tube, their detection capacity is not the same in every direction around the patient. As a result, image resolution is better at the center of the field of view than at its edge. This is why a PET scanner can only produce medical images section by section, as the sensors need to be repositioned to scan each area of the patient accurately.

Although XEMIS uses an injection like the PET scanner, there is no need to move sensors during the scan, because the whole volume of liquid xenon surrounding the patient acts as a sensor. The device therefore has a large field of view that offers the same image quality in every direction, and it no longer needs to scan the patient bit by bit. In the same amount of time as a traditional scan, XEMIS provides a more precise image, or it can produce an image of the same quality in less time.

Three photons are better than two

The highly consistent level of detection is not the only advantage of this new scanner. “The substances in traditional injections, such as those with a fluorine-18 base, emit two diametrically opposed gamma rays”, explains Dominique Thers. “When they come into contact with the xenon surrounding the patient, two things happen: light is emitted, and an electrical current is produced”. This detection method, based on the interaction of gamma rays with xenon, comes directly from the approaches used to detect dark matter.

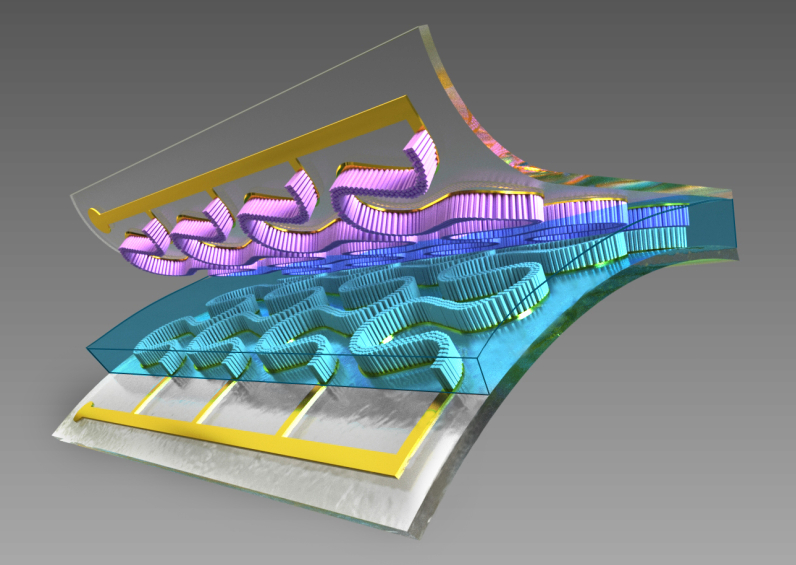

Each of the two gamma rays therefore produces an interaction point in the xenon, on opposite sides of the tube. The line joining these two points passes through the patient and, more precisely, through the point where the gamma rays were emitted. Algorithms record these signals and trace each line back to the corresponding points in the patient, which are then used to build an image. “Because XEMIS technology eliminates the parallax effect, the signal-to-noise ratio of the image created by this detection method is ten times better than that of images produced by classical PET scanners”, explains Dominique Thers.
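
The following toy sketch in Python illustrates why lines joining pairs of interaction points converge on the emission point. It is a simplified 2D illustration, not the XEMIS reconstruction software: it assumes a ring of detecting material with a hypothetical radius of 10 cm, perfectly measured interaction points and a single point source, and the functions `ring_hits`, `distance_to_line` and `backproject` are hypothetical names. Each pixel of an image grid simply counts how many lines pass close to it, a basic form of backprojection.

```python
import math
import random

# Hypothetical toy geometry: a 2D ring of detecting material of radius R (cm).
# Real XEMIS geometry is a 3D cylinder; this only shows how lines converge.
R = 10.0

def ring_hits(src, theta, radius=R):
    """Return the two points where a back-to-back photon pair emitted from
    src along direction theta reaches a circle of the given radius."""
    dx, dy = math.cos(theta), math.sin(theta)
    pd = src[0] * dx + src[1] * dy
    disc = pd * pd - (src[0] ** 2 + src[1] ** 2) + radius * radius
    root = math.sqrt(disc)  # disc > 0 whenever src lies inside the circle
    t1, t2 = -pd + root, -pd - root
    return ((src[0] + t1 * dx, src[1] + t1 * dy),
            (src[0] + t2 * dx, src[1] + t2 * dy))

def distance_to_line(p, a, b):
    """Perpendicular distance from point p to the infinite line through a and b."""
    ax, ay = b[0] - a[0], b[1] - a[1]
    cross = ax * (p[1] - a[1]) - ay * (p[0] - a[0])
    return abs(cross) / math.hypot(ax, ay)

def backproject(lines, half_width=5.0, step=0.5):
    """Each pixel counts the lines passing within half a pixel of its centre.
    Returns the centre of the pixel crossed by the most lines."""
    n = int(round(2 * half_width / step)) + 1
    best, best_count = None, -1
    for i in range(n):
        for j in range(n):
            c = (-half_width + i * step, -half_width + j * step)
            count = sum(1 for a, b in lines if distance_to_line(c, a, b) <= step / 2)
            if count > best_count:
                best, best_count = c, count
    return best

rng = random.Random(0)
source = (1.5, -2.0)  # hypothetical emission point, unknown to the reconstruction
lors = [ring_hits(source, rng.uniform(0.0, math.pi)) for _ in range(200)]
estimate = backproject(lors)
print(estimate)
```

Because every simulated line passes through the source, the pixel centred on it collects all 200 lines and stands out from its neighbours. Clinical PET systems use more elaborate reconstruction methods, such as filtered backprojection or iterative algorithms.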

XEMIS cameras also give doctors a new imaging modality: three-photon imaging! Instead of fluorine-18, the researchers would inject the patient with scandium-44, which is produced by the ARRONAX cyclotron in Nantes. This isotope emits two diametrically opposed photons, as well as a third one. “Not only do we have a line that passes through the patient, but XEMIS also measures a hollow cone which contains the third photon. This passes through the emission point”. This additional geometric information allows the machine to calculate the emission point efficiently by triangulation, locating it where the line crosses the cone, which ultimately leads to an even better signal-to-noise ratio.
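
In geometric terms, locating the emission point with three photons amounts to intersecting the line joining the two opposed photons with the cone. The Python sketch below solves that intersection under simplifying assumptions: the cone’s apex, axis and half-angle are taken as already known (in practice they would have to be derived from the third photon’s interactions in the xenon, which this sketch does not model), and the function `line_cone_intersections` and all coordinates are hypothetical. Points on the line satisfy a quadratic equation in the line parameter t.

```python
import math

# Minimal 3D vector helpers on plain tuples (no external libraries).
def sub(p, q):
    return tuple(x - y for x, y in zip(p, q))

def add(p, q):
    return tuple(x + y for x, y in zip(p, q))

def scale(p, s):
    return tuple(x * s for x in p)

def dot(p, q):
    return sum(x * y for x, y in zip(p, q))

def norm(p):
    return math.sqrt(dot(p, p))

def line_cone_intersections(a, b, apex, axis, half_angle, eps=1e-12, tol=1e-6):
    """Hypothetical helper: points P(t) = a + t*(b - a) on the line through a and b
    that lie on the cone with the given apex, axis and half-angle (radians)."""
    u = scale(axis, 1.0 / norm(axis))  # unit cone axis
    d = sub(b, a)
    w = sub(a, apex)
    c2 = math.cos(half_angle) ** 2
    # Squaring (P - apex).u = cos(alpha) * |P - apex| gives a quadratic in t.
    du, wu = dot(d, u), dot(w, u)
    qa = du * du - c2 * dot(d, d)
    qb = 2.0 * (wu * du - c2 * dot(w, d))
    qc = wu * wu - c2 * dot(w, w)
    if abs(qa) < eps:  # degenerate case: the equation is linear in t
        ts = [] if abs(qb) < eps else [-qc / qb]
    else:
        disc = qb * qb - 4.0 * qa * qc
        if disc < 0:
            return []  # the line misses the cone
        r = math.sqrt(disc)
        ts = [(-qb - r) / (2.0 * qa), (-qb + r) / (2.0 * qa)]
    points = []
    for t in ts:
        p = add(a, scale(d, t))
        v = sub(p, apex)
        if norm(v) == 0:
            continue  # skip the apex itself
        # Squaring also admits the mirror-image cone; keep only points whose
        # angle to the axis really equals half_angle.
        cos_p = max(-1.0, min(1.0, dot(v, u) / norm(v)))
        if abs(math.acos(cos_p) - half_angle) < tol:
            points.append(p)
    return points

# Hypothetical example: emission point E lies halfway between the two
# interaction points, and the cone is constructed so that it passes through E.
E = (0.1, 0.2, 0.0)
a, b = (-0.9, -0.3, -0.3), (1.1, 0.7, 0.3)
apex, axis = (0.0, -1.0, 0.5), (0.2, 1.0, -0.1)
v_e = sub(E, apex)
alpha = math.acos(dot(v_e, axis) / (norm(v_e) * norm(axis)))
candidates = line_cone_intersections(a, b, apex, axis, alpha)
print(candidates)
```

A line can cross a cone at up to two points, and in this example it does so twice, one of the intersections being the true emission point E. A real system would need extra information to choose between such candidates, so the sketch simply returns all of them.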

According to Dominique Thers, “By removing the parallax effect, the device can improve the signal-to-noise ratio of a medical image of a human by a factor of ten. It can improve this ratio by another factor of ten if it uses a camera that can capture the entire patient in its field of view, and by another factor of ten with the third photon. In total, this means that XEMIS can improve the signal-to-noise ratio by a factor of 1,000.” This gain leaves room for adjustments: the injected dose could be reduced, or the imaging protocol could be improved to increase the frame rate of the cameras.
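
As Thers describes them, the three improvements compound multiplicatively rather than adding up. Writing each one as a gain factor (labels chosen here for illustration), the overall figure follows directly:

```latex
G_{\text{total}} = G_{\text{parallax}} \times G_{\text{whole-body field of view}} \times G_{\text{third photon}} = 10 \times 10 \times 10 = 10^{3} = 1000
```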

Can this become an industrial scanner?

Researchers at IMT Atlantique have already demonstrated the effectiveness of XEMIS using three-photon radioactive tracers, but they have not yet tested it on living organisms. A second phase of the project, called XEMIS2, is now coming to an end. “Our goal now is to produce the first image of a living thing, using a small animal, for example. This will demonstrate the huge difference between XEMIS and traditional scanners”, Thers explains. This is a key step towards getting the technology adopted by the medical imaging community. The team is working with Nantes University Hospital, which should help it achieve this objective.

In the meantime, the IMT Atlantique team has already patented several innovations with Air Liquide for a system that renews and empties the xenon in the XEMIS camera. 30% of this technology uses cryogenic liquids. Importantly, the team has already planned technical processes that will make XEMIS easier for health services to use, should the scanner be adopted by the medical profession. This is a step forward in the international development of this unique technology.