Understanding the resilience of the immune system through mathematical modeling

Using mathematics to understand how the immune system works is the ultimate goal of the research carried out by IMT Atlantique researcher Dominique Pastor and his team. Although the work involves a high degree of abstraction, the scientists never lose sight of practical applications, and not only in biology.

In many industries, the notion of “resilience” is a key issue, even though there is no clear consensus on how to define the term. Derived from the Latin verb resilire, “to rebound,” the term does not mean exactly the same thing as resistance or robustness. A resilient system is still affected by external events, but it can continue to fulfill its function, even in a degraded mode, in a hostile environment. In computer science, for example, resilience means the ability to provide an acceptable level of service in the event of a failure.

This capacity is also found in the human body and, more generally, in all living beings. For example, when you have a cold, your abilities may be reduced, but in most cases you can go on living more or less normally.

Although this phenomenon is regularly observed in all biological systems, it remains complex: how resilience works, and the range of behaviors it gives rise to, are still difficult to understand.

A special case of functional redundancy: degeneracy

It was through discussions with Véronique Thomas-Vaslin, a biologist at Sorbonne University, that Dominique Pastor, a telecommunications researcher at IMT Atlantique, became particularly aware of this property of biological systems. Working with Roger Waldeck, who is also a researcher at IMT Atlantique, and PhD student Erwan Beurier, he carried out research to mathematically model this resilience, in order to demonstrate its basic principles and better understand how it works.

To do so, they drew on publications by other scientists, including the American biologist Gerald Edelman (winner of the Nobel Prize in Physiology or Medicine in 1972), who highlighted another property of living organisms: degeneracy. (In French, the term is usually translated as dégénérescence, meaning ‘degeneration,’ which is misleading.) “Degeneracy” refers to the ability of two structurally different elements to perform the same function. It is therefore a form of functional redundancy in which the redundant elements differ in structure. This characteristic can be found at multiple levels in living beings.

For example, proteins are made of amino acids, and which amino acid goes where is specified by “messages” contained in portions of DNA. More specifically, each message is called a “codon”: a sequence of three molecules known as nucleotides. Since there are 4 possible nucleotides, there are 4 × 4 × 4 = 64 possible codons, yet they encode only 20 standard amino acids (22 if two rare ones are counted). Some codons must therefore correspond to the same amino acid: a perfect example of degeneracy.
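
The degeneracy of the genetic code is easy to check by counting. The short Python sketch below builds the standard codon table from the compact 64-letter string used by NCBI for its “translation table 1” (codons listed from TTT to GGG, with `*` marking the three stop codons) and counts how many codons map to each amino acid. It covers only the 20 standard amino acids; the two rarer ones, selenocysteine and pyrrolysine, are encoded by reinterpreting stop codons in specific contexts, which this simple table does not model. The names `CODON_TABLE` and `degeneracy` are illustrative.

```python
from collections import Counter
from itertools import product

BASES = "TCAG"
# Standard genetic code in the compact NCBI "translation table 1" layout:
# the i-th letter is the amino acid encoded by the i-th codon in the order
# TTT, TTC, TTA, TTG, TCT, ..., GGG ('*' marks a stop codon).
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"

# product() varies the last position fastest, which matches that ordering.
CODON_TABLE = {
    "".join(codon): aa for codon, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)
}


def degeneracy(table):
    """Count how many codons encode each amino acid (stop codons excluded)."""
    return Counter(aa for aa in table.values() if aa != "*")


counts = degeneracy(CODON_TABLE)
print(len(CODON_TABLE))            # 64 codons
print(len(counts))                 # 20 amino acids
print(counts["L"], counts["M"])    # 6 1  (leucine: six codons, methionine: one)
```

Six structurally different codons all yield leucine, while methionine has a single codon: the same function is reached through different structures in some cases but not in others.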

“My hunch is that degeneracy is central to any resilient system,” explains Dominique Pastor. “But it’s just a hunch. The aim of our research is to formalize and test this idea based on mathematical results. This can be referred to as the mathematics of resilience.”

To this end, he relied on the work of French mathematician Andrée Ehresmann, Emeritus Professor at the University of Picardie Jules Verne, who established a mathematical model of degeneracy, known as the “Multiplicity Principle,” with Jean-Paul Vanbremeersch, an Amiens-based physician who specializes in gerontology.

Recreating resilience in the form of mathematical modeling

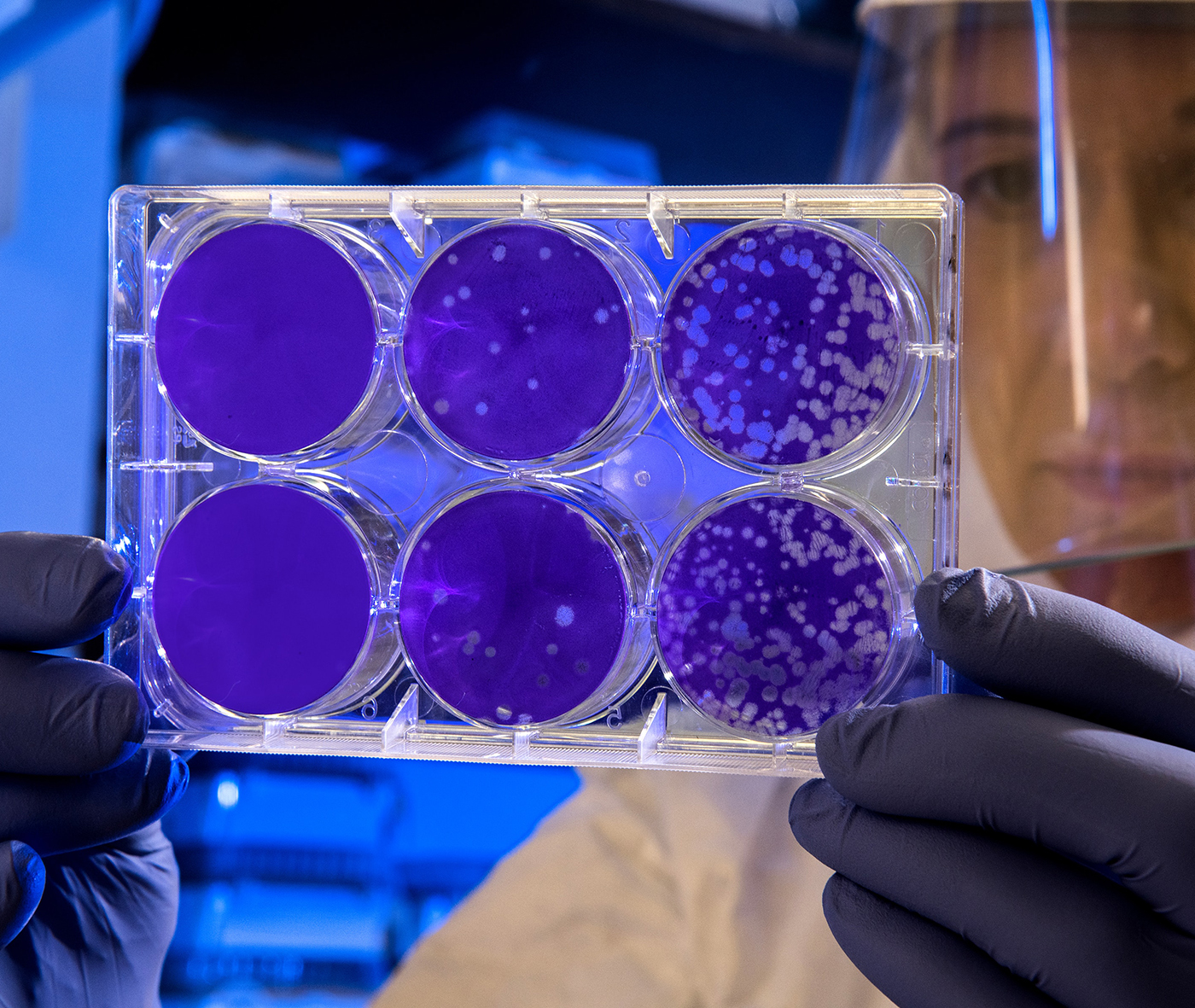

Dominique Pastor and his team therefore started from biologists’ concrete observations of the human body before turning to theoretical study. Their goal was to develop a mathematical model that could imitate both the degeneracy and the resilience of the immune system, in order to “establish a link between the notion of resilience, this Multiplicity Principle, and statistics.” Once established, this link could be studied to gain insight into how these systems work in real life.

The researchers therefore compared the performance of two categories of statistical tests on the same problem: detecting a phenomenon. The first category, “Neyman-Pearson testing,” is optimal for determining whether or not an event has occurred. The second, Random Distortion Testing (RDT), is also optimal, but for a different task: detecting whether an event has moved away from an initial model.
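
To make the two families of tests concrete, here is a minimal Python sketch under textbook assumptions that the article does not spell out: observations are vectors corrupted by independent Gaussian noise of known standard deviation. `neyman_pearson_test` is the classic likelihood-ratio detector for a known signal, which boils down to a correlation compared with a threshold. `rdt_test` follows the general idea of Random Distortion Testing: it decides whether the observation lies farther from a nominal model `theta0` than a tolerance `tau` allows, by thresholding the distance between them. The RDT threshold can be derived analytically from the noncentral chi-square distribution; this sketch simply estimates it by simulation. All function and parameter names are illustrative, not taken from the researchers’ work.

```python
import math
import random
from statistics import NormalDist


def neyman_pearson_test(y, s, sigma, alpha):
    """Likelihood-ratio test for a known signal s in white Gaussian noise.

    H0: y = noise, H1: y = s + noise (noise i.i.d. N(0, sigma^2)).
    The likelihood ratio is monotone in the correlation <y, s>, which is
    N(0, sigma^2 * ||s||^2) under H0; the threshold fixes the false-alarm
    probability at alpha.
    """
    stat = sum(yi * si for yi, si in zip(y, s))
    s_norm = math.sqrt(sum(si * si for si in s))
    threshold = sigma * s_norm * NormalDist().inv_cdf(1 - alpha)
    return stat > threshold


def rdt_threshold(d, tau, sigma, gamma, trials=20000, seed=0):
    """Monte Carlo estimate of an RDT-style threshold in dimension d.

    H0: ||theta - theta0|| <= tau, H1: ||theta - theta0|| > tau.
    The threshold is set so that P(||Y - theta0|| > threshold) = gamma when
    the distortion has norm exactly tau, the worst case under H0.
    """
    rng = random.Random(seed)
    norms = []
    for _ in range(trials):
        # Gaussian noise is rotation invariant, so only the norm of the
        # distortion matters: put all of it on the first axis.
        v = [rng.gauss(0.0, sigma) for _ in range(d)]
        v[0] += tau
        norms.append(math.sqrt(sum(x * x for x in v)))
    norms.sort()
    return norms[min(int((1 - gamma) * trials), trials - 1)]


def rdt_test(y, theta0, threshold):
    """Decide H1 (distortion larger than tau) if ||y - theta0|| > threshold."""
    return math.dist(y, theta0) > threshold


# Usage: a strong deviation along (1, 1, 0, 0) in 4 dimensions.
lam = rdt_threshold(d=4, tau=0.0, sigma=1.0, gamma=0.05)
print(neyman_pearson_test([5.0, 5.0, 0.0, 0.0], [1.0, 1.0, 0.0, 0.0], 1.0, 0.05))  # True
print(rdt_test([5.0, 5.0, 0.0, 0.0], [0.0] * 4, lam))  # True
```

The structural difference is visible in the signatures: the Neyman-Pearson test needs the exact signal `s` it is looking for, while the RDT-style test only needs a nominal model and a tolerance.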

The two types of procedures were not designed with the same objective. However, the researchers demonstrated that RDT could also be used, in a “degenerate” manner, to detect a phenomenon, with performance comparable to that of Neyman-Pearson testing. In the theoretical case of an infinite amount of data, the two tests detect the presence or absence of a phenomenon with the same level of precision. The two categories therefore perform the same function, although they are structurally different. “We therefore made two sub-systems in line with the Multiplicity Principle,” concludes the IMT Atlantique researcher.

What’s more, the nature of RDT gives it an advantage over Neyman-Pearson testing, since the latter only works optimally when real events follow a specific mathematical model. When they do not, as so often happens in nature, it is more likely to be wrong. RDT, by contrast, is designed to detect deviations from a model, so it can adapt to a variable environment and is therefore more robust. Combining the two types of tests can thus produce a system with the defining characteristic of resilience: the ability to function in a variety of situations.
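
The robustness argument can be illustrated with a small simulation, a hypothetical sketch rather than the researchers’ own experiment. A Neyman-Pearson detector is tuned for a signal pointing in one direction; the signal that actually occurs may have the same strength but point in another direction. The RDT side uses the simplest case, tolerance τ = 0 in two dimensions, where the threshold has the exact closed form σ·sqrt(-2 ln α) and the test reduces to checking the energy of the observation. Both detectors are calibrated to the same 5% false-alarm rate. When the signal matches the model, both catch it, the Neyman-Pearson test slightly more often (as its optimality guarantees); when it does not, the Neyman-Pearson detection rate falls to roughly its false-alarm rate, while the RDT-style detector keeps firing.

```python
import math
import random
from statistics import NormalDist

SIGMA = 1.0   # noise standard deviation (assumed known)
ALPHA = 0.05  # false-alarm probability targeted by both detectors
S = (4.0, 0.0)  # the signal the Neyman-Pearson detector was designed for

# Neyman-Pearson threshold for the correlation <y, S> under pure noise.
NP_THRESHOLD = SIGMA * math.hypot(*S) * NormalDist().inv_cdf(1 - ALPHA)

# RDT with tau = 0 in two dimensions: ||noise||^2 / SIGMA^2 follows a
# chi-square law with 2 degrees of freedom, whose tail is exp(-x / 2),
# so P(||noise|| > RDT_THRESHOLD) = ALPHA exactly.
RDT_THRESHOLD = SIGMA * math.sqrt(-2.0 * math.log(ALPHA))


def np_detect(y):
    """Fires when the observation correlates with the expected signal S."""
    return y[0] * S[0] + y[1] * S[1] > NP_THRESHOLD


def rdt_detect(y):
    """Fires when the observation strays too far from the nominal model (0, 0)."""
    return math.hypot(*y) > RDT_THRESHOLD


def detection_rate(detector, signal, trials=20000, seed=1):
    """Fraction of noisy observations of `signal` on which `detector` fires."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        y = (signal[0] + rng.gauss(0.0, SIGMA), signal[1] + rng.gauss(0.0, SIGMA))
        hits += detector(y)
    return hits / trials


for label, signal in [("expected signal", S), ("unexpected signal", (0.0, 4.0))]:
    print(f"{label}: NP {detection_rate(np_detect, signal):.3f}, "
          f"RDT {detection_rate(rdt_detect, signal):.3f}")
```

Running both detectors side by side is one simple way to get the best of each: the specialized test where the model holds, the model-agnostic one where it does not.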

From biology to cybersecurity

These findings are not meant to remain confined to the realm of theory. “We don’t work with theory for the sake of theory,” says Dominique Pastor. “We never forget the practical side: we continually seek to apply our findings.” The goal is therefore to return to the real world, and not only in relation to biology. In this respect, the approach resembles the path taken by research on neural networks, which initially focused on understanding how the human brain works but ultimately produced systems used in computer science.

“The difference is that neural networks are like black boxes: we don’t know how they make their decisions,” explains the researcher. “Our mathematical approach, on the other hand, provides an understanding of the principles underlying the workings of another black box: the immune system.” This work is also supported by a collaboration with David Spivak, a mathematician at MIT (United States), in the same field: the mathematical modeling of biological systems.

The first application Dominique Pastor is working on falls within the realm of cybersecurity. The idea is to imitate the resilient behavior of an immune system for protective purposes. For example, many industrial sites are equipped with sensors to monitor various factors (light, doors opening and closing, containers being filled, etc.). To protect these devices, they could be combined with a system for detecting external attacks. Such a system could consist of a network that receives the data recorded by the sensors and runs a series of tests to determine whether an incident has occurred. Since these tests could themselves be targeted by attacks, they would need to be resilient to be effective, hence the importance of using different types of tests, in keeping with the results described above.
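
One way to picture this scenario is a vote among structurally different detectors, so that a single compromised test can neither trigger nor suppress an alarm on its own. The sketch below is entirely hypothetical: the detector names (`mean_shift`, `peak`, `outlier_count`), the thresholds, the sensor readings and the majority rule are illustrative choices, not a design described by the researchers.

```python
from typing import Callable, Sequence

# A detector looks at a window of sensor readings and says "incident" or not.
Detector = Callable[[Sequence[float]], bool]


def mean_shift(baseline: float, tol: float) -> Detector:
    """Flags an incident when the average reading drifts from the baseline."""
    return lambda xs: abs(sum(xs) / len(xs) - baseline) > tol


def peak(baseline: float, tol: float) -> Detector:
    """Flags an incident when any single reading is far from the baseline."""
    return lambda xs: max(abs(x - baseline) for x in xs) > tol


def outlier_count(baseline: float, tol: float, k: int) -> Detector:
    """Flags an incident when at least k readings leave the tolerance band."""
    return lambda xs: sum(abs(x - baseline) > tol for x in xs) >= k


def majority_alarm(detectors: Sequence[Detector], readings: Sequence[float]) -> bool:
    """Raises an alarm only if a strict majority of the detectors agree."""
    votes = sum(bool(d(readings)) for d in detectors)
    return 2 * votes > len(detectors)


detectors = [mean_shift(20.0, 2.0), peak(20.0, 5.0), outlier_count(20.0, 3.0, 2)]
normal = [19.5, 20.2, 20.8, 19.9]
incident = [20.1, 27.0, 26.5, 25.8]

# Simulate an attacker who has silenced the first detector.
compromised = [lambda xs: False] + detectors[1:]

print(majority_alarm(detectors, normal))      # False
print(majority_alarm(detectors, incident))    # True
print(majority_alarm(compromised, incident))  # True
```

With three detectors, silencing any one of them still leaves two votes for the incident, so the alarm is raised; an attacker would need to compromise two structurally different tests at once.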

For now, it is still too early to put these theories into practice. It remains to be proven that the Multiplicity Principle is a sufficient guarantee of resilience, especially since resilience does not yet have a mathematical definition. Providing one is among Dominique Pastor’s ambitions. The researcher also admits to a “pipe dream”: “My ultimate goal would still be to go back to biology. If our research could help biologists better understand and model the immune system, in order to develop better care strategies, that would be wonderful.”