What is eco-design?

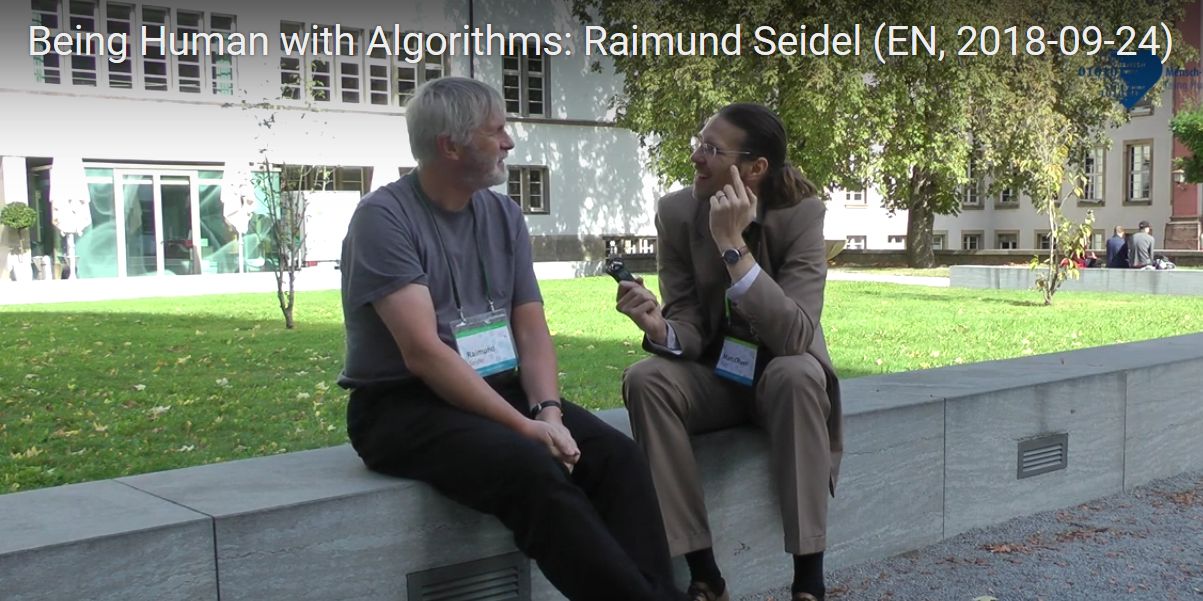

In industry, it is increasingly necessary to design products and services with respect for the environment. This concern is expressed through a practice that is gaining ground in a wide range of sectors: eco-design. Valérie Laforest, a researcher in environmental assessment and in environmental and organizational engineering at Mines Saint-Étienne, explains the term.

What does eco-design mean?

Valérie Laforest: The principle of eco-design is to incorporate environmental considerations from the earliest stages of creating a service or product, meaning from the design stage. It’s a method governed by national and international standards that describe the concepts and set out current best practices for eco-design. We can eco-design a building just as well as a T-shirt or a photocopying service.

Why this need to eco-design?

VL: There is no longer any doubt about the environmental pressure on the planet. Eco-design is one concrete way for us to think about how our actions impact the environment and to consider alternatives to traditional production. Instead of producing first and looking for solutions afterward, it’s much more effective and efficient to ask questions from the design stage of a product in order to reduce or avoid its environmental impact.

What stages does eco-design apply to?

VL: In concrete terms, it’s based entirely on the life cycle of a system, from very early on in its existence. Eco-design thinking takes into account the extraction of raw materials, the processing and use stages, and the end of life. Recovering a product when it is no longer usable, to recycle it for instance, is also part of eco-design. As it stands today, end-of-life products are either sent to landfills, incinerated or recycled. Eco-design means thinking about the materials that can be used, but also about how a product can be dismantled so that it can be incorporated into another cycle.

When did we start hearing about this principle?

VL: The first tools arrived in the early 2000s, but the concept may be older than that. Environmental issues and the associated research have grown since 1990. But eco-design really emerged in a second phase, when people started questioning the environmental impact of everyday things: our computer, sending an email, the difference between a polyester and a cotton T-shirt.

What eco-design tools are available for industry?

VL: The tools fall into a number of categories. There are relatively simple ones, like checklists or diagrams, while others are more complex. For example, there are life-cycle analysis tools to identify environmental impacts, and software to incorporate environmental indicators into design tools. The latter require a certain degree of expertise in environmental assessment and a thorough understanding of environmental indicators. Yet developers and designers are not trained to use these kinds of tools.

Are there barriers to the development of this practice?

VL: There’s a real need to develop special tools for eco-design. Sure, some already exist, but they’re not really adapted to eco-design and can be hard to understand. Developing new tools and methods for the environmental performance of human activities is part of our work as researchers. For example, we’re working on projects with the Écoconception center, a key player in the Saint-Étienne region as well as at the national level.

In addition to tools, we also have to visit companies to get things moving and see what’s holding them back. We have to consider how to train companies and encourage them to change so that they incorporate eco-design principles. It’s an entirely different way of thinking, and it requires an acceptance phase before companies can rethink how they do things.

Is the circular economy a form of eco-design?

VL: Or is eco-design a form of the circular economy? That’s an important question, and answers vary depending on who you ask. Stakeholders who contribute to the circular economy will say that eco-design is part of this economy. Conversely, eco-design can be seen as an initiator of the circular economy, since it provides a view of how materials circulate in order to reduce environmental impact. What’s certain is that the two are linked.

Tiphaine Claveau for I’MTech

This article was published as part of Fondation Mines-Télécom’s 2020 brochure series dedicated to sustainable digital technology and the impact of digital technology on the environment. Through a brochure, conference-debates, and events to promote science in conjunction with IMT, this series explores the uncertainties and challenges of the digital and environmental transitions.