The original version of this article (in French) was published in the quarterly newsletter of the Values and Policies of Personal Information Chair (no. 18, September 2020).

[divider style=”normal” top=”20″ bottom=”20″]

[dropcap]O[/dropcap]n March 11, 2020, the World Health Organization officially declared that our planet was in the midst of a pandemic caused by the spread of Covid-19. First reported in China, then in Iran and Italy, the virus spread rapidly wherever it was given the opportunity. In two weeks, the number of cases outside China increased 13-fold and the number of affected countries tripled [1].

Every nation, every State, every administration, every institution, every scientist and every politician, every initiative and every willing public and private actor was called on to think and work together to fight this new scourge.

From the manufacture of masks and respirators to the pooling of resources and energy to find a vaccine, all segments of society joined together as our daily lives were transformed, now governed by a large-scale deadly virus. The very structure of the way we operate in society was adapted in the context of an unprecedented lockdown period.

In this collective battle, digital tools were also mobilized.

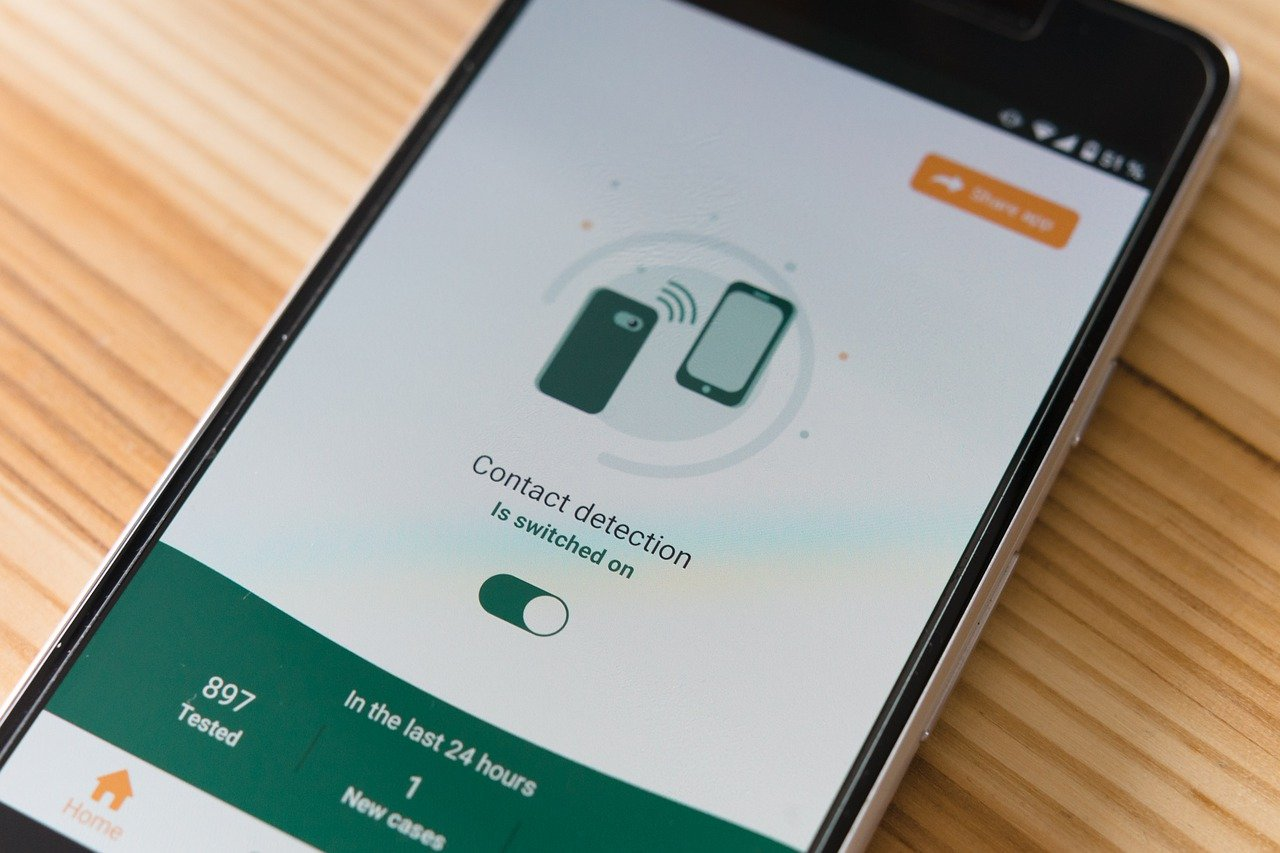

As early as March 2020, South Korea, Singapore and China announced the creation of contact tracing mobile applications to support their health policies [2].

Also in March, in Europe, Switzerland reported that it was working on the creation of the “SwissCovid” application, in partnership with EPFL in Lausanne and ETH Zurich. This contact tracing pilot project was eventually implemented on June 25. SwissCovid is designed to notify users who have been in extended contact with someone who tested positive, in order to control the spread of the virus. To quote the proponents of the application, it is “based on voluntary registration and subject to approval from Swiss Parliament.” Another noteworthy feature is that it is “based on a decentralized approach and relies on application programming interfaces (APIs) from Google and Apple.”

France, after initially dismissing this type of technological component through the Minister of the Interior, who stated that it was “foreign to French culture,” eventually changed its position and created a working group to develop a similar app called “StopCovid”.

In total, no fewer than 33 contact tracing apps were introduced around the world [3], with questionable success.

However, many voices in France, in Europe and around the world have spoken out against the implementation of this type of system, which could seriously infringe on basic rights and freedoms, especially regarding individual privacy and freedom of movement. Others have voiced concern about the possible control of this personal data by the GAFAM or by States that are not committed to democratic values.

The security systems for these applications have also been widely debated and disputed, especially the risks of facing a digital virus, in addition to a biological one, due to the rushed creation of these tools.

The President of the CNIL (National Commission for Information Technology and Civil Liberties), Marie-Laure Denis, echoed the key areas for vigilance aimed at limiting the potentially intrusive nature of these tools.

- First, through an opinion issued on April 24, 2020 on the principle of implementing such an application, the CNIL stated that, given the exceptional circumstances involved in managing the health crisis, it considered the implementation of StopCovid feasible. However, the Commission expressed two reservations: the application should serve the strategy of the end-of-lockdown plan and be designed in a way that protects users’ privacy [4].

- Then, in its opinion of May 25, 2020, urgently issued for a draft decree related to the StopCovid mobile app [5], the CNIL stated that the application “can be legally deployed as soon as it is found to be a tool that supports manual health investigations and enables faster alerts in the event of contact cases with those infected with the virus, including unknown contacts.” Nevertheless, it considered that “the real usefulness of the device will need to be more specifically studied after its launch. The duration of its implementation must be dependent on the results of this regular assessment.”

From another point of view, there were those who emphasized the importance of digital solutions in limiting the spread of the virus.

No application can heal or stop Covid. Only medicine and a possible vaccine can do this. However, digital technology can certainly contribute to health policy in many ways, and it seems perfectly reasonable that the implementation of contact tracing applications came to the forefront.

What we wish to highlight here is not so much the arguments for or against the design choices in the various applications (centralized or decentralized, sovereign or otherwise) or even against their very existence (with, in each case, questionable and justified points), but the conversational scope that has played a part in all the debates surrounding their implementation.

While our technological progress is impressive in terms of scientific and engineering accomplishments, our capacity to collectively understand interactions between digital progress and our world has always raised questions within the Values and Policies of Personal Information research Chair.

It is, in fact, the very purpose of its existence and the reason why we share these issues with you.

In the midst of urgent action taken on all levels to contain, manage and–we hope–reverse the course of the pandemic, the issue of contact tracing apps has caused us to realize that the debates surrounding digital technology have perhaps finally moved on to a tangible stage involving collective reflection that is more intelligent, democratic and respectful of others.

In Europe, and also in other countries in the world, certain issues have now become part of our shared basis for conversation. These include personal data protection, individual privacy, the technology that should be used, the type of data collected and its anonymization, application security, transparency, the availability of the applications' source code, their operating costs, whether or not to centralize data, their relationship with private or State monopolies, the need in duly justified cases for the digital tracking of populations, and independence from the GAFAM [6] and the United States [7] (or any other third State).

In this respect, given the altogether recent nature of this situation, and our relationship with technological progress, which is no longer deified or vilified, or even a fantasy from an imaginary world that is obscure for many, we have progressed. Digital technology truly belongs to us. We have collectively made it ours, moving beyond both blissful techno-solutionism and irrational technophobia.

If you are not yet familiar with this specific subject, please reread the Chair’s posts on Twitter dating back to the start of the pandemic, in which we took time to identify all the elements in this conversational scope pertaining to contact tracing.

The goal is not to reflect on these elements as a whole, or the tone of some of the colorful and theatrical remarks, but rather something we see as new: the quality and wealth of these remarks and their integration in a truly collective, rational and constructive debate.

It was about time!

On August 26, 2020, French Prime Minister Jean Castex made the following statement: “StopCovid did not achieve the desired results, perhaps due to a lack of communication. At the same time, we knew in advance that conducting the first full-scale trial run of this type of tool in the context of this epidemic would be particularly difficult.” [8] Given the human and financial investment, it is clear that the cost-effectiveness ratio does not help the case for StopCovid (and similar applications in other countries) [9].

Further revelations followed when the CNIL released its quarterly opinion on September 14, 2020. While, for the most part, the measures implemented (SI-DEP and Contact Covid data files, the StopCovid application) protected personal data, the Commission identified certain poor practices. It contacted the relevant agencies to ensure they would become compliant in these areas as soon as possible.

In any case, the conclusive outcome that can be factually demonstrated is that remarkable progress has been made in our collective level of discussion, our scope for conversation in the area of digital technology. We are asking (ourselves) the right questions. Together, we are setting the terms for our objectives: what we can allow, and what we must certainly not allow.

This applies to ethical, legal and technical aspects.

It’s therefore political.

Claire Levallois-Barth and Ivan Meseguer

Co-founders of the Values and Policies of Personal Information research Chair