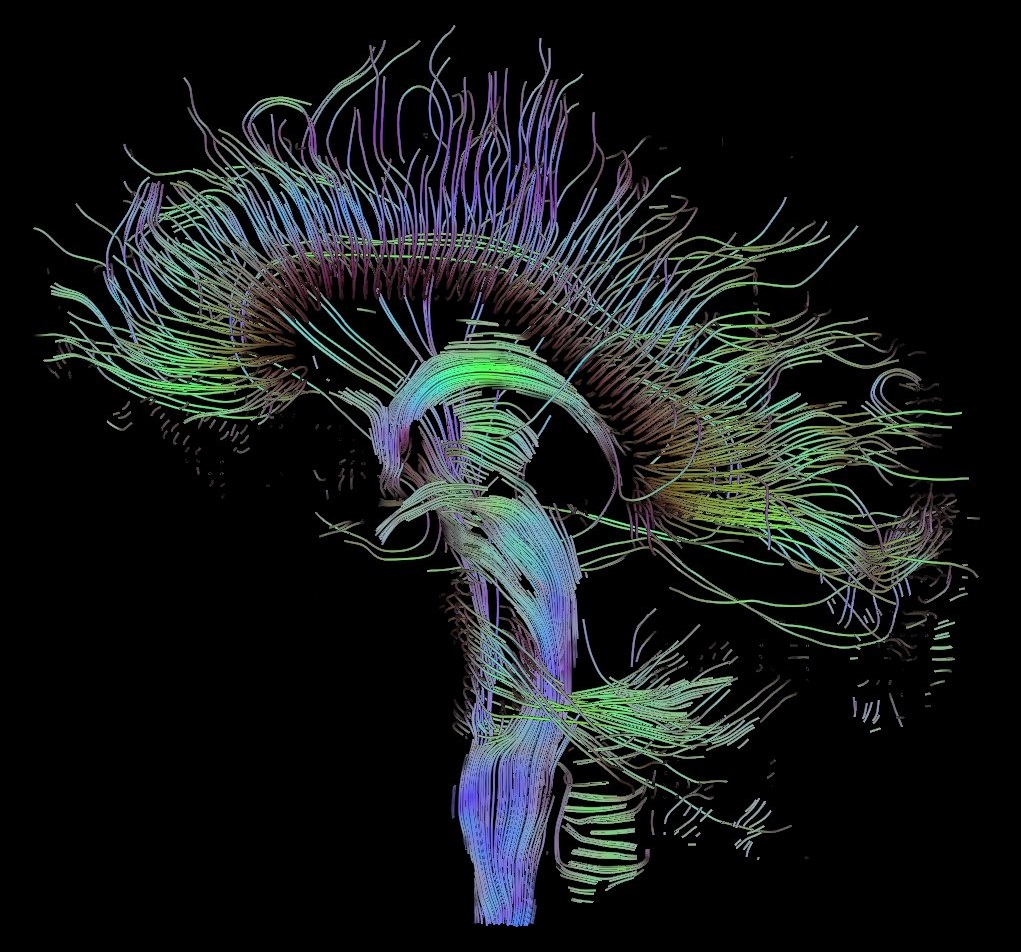

The brain: seeing between the fibers of white matter

Diffusion imaging and tractography explore how water diffuses through the brain in order to study the structure of its neurons. Doctors can use these methods to improve their understanding of brain diseases. Pietro Gori, a researcher in image processing at Télécom ParisTech, has just launched a project called Neural Meta Tracts, funded by the Emergence program at DigiCosme. It aims to improve the modeling, visualization and manipulation of the large amounts of data produced by tractography. This may considerably improve the analysis of white matter in the brain and, in doing so, allow doctors to pinpoint more easily the morphological differences between healthy subjects and patients.

What is the goal of the Neural Meta Tracts project?

Pietro Gori: The project stems from my past experience. I have worked in tractography and in diffusion imaging, which is a non-invasive form of brain imaging. Tractography allows you to explore the architecture of the brain’s white matter, which is made up of bundles of several million neuronal axons. It lets us represent these bundles as curves in a 3D model of the brain. It is a very rich method that provides a great deal of information, but this information is difficult to visualize and to use in numerical computations. Our goal with Neural Meta Tracts is to make the manipulation of these data easier and faster.

Who can benefit from this type of improvement to tractography?

PG: By making visualization easier, we are helping clinicians to interpret imaging results. This may help them to diagnose brain diseases more easily. Neurosurgeons can also gain from tractography in planning operations. If they are removing a tumor, they want to be sure that they do not cut fibers in the critical areas of the brain. The more precise the image is, the better prepared they can be. As for improvements to data manipulation and calculation, neurologists and radiologists doing research on the brain are highly interested. As they are dealing with large amounts of data, it can take time to compare sets of tractographies, for example when studying the impact of a particular structure on a particular disease.

Could this help us to understand certain diseases?

PG: Yes. In psychiatry and neurology, medical researchers want to compare healthy people with sick people. This enables them to study differences that may be either the consequence or the cause of the disease. In the case of Alzheimer’s, certain parts of the brain are atrophied. Improving the mathematical modeling and visualization of tractography data can therefore help medical researchers to detect these anatomical changes in the brain. During my thesis, I also worked on Tourette syndrome. That work allowed us to highlight anatomical differences between healthy subjects and patients.

How do you improve the visualization and manipulation of tractography data?

PG: I am working with Jean-Marc Thiery and other lecturers and researchers at Télécom ParisTech and the École Polytechnique on applying differential geometry techniques. We analyze the geometry of bundles of neuron axons, and we try to approximate them as closely as possible without losing information. We are working on algorithms which will be able to rapidly compare two sets of tractography data. When we have similar sets of data, we try to aggregate them, again trying not to lose information. It is important to realize that if you have a database of a cohort of one thousand patients, it can take days of calculation using very powerful computers to compare their tractographies in order to find averages or main variations.
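To give a sense of why these comparisons are so costly, here is a minimal sketch in Python with NumPy. It is not the method developed in Neural Meta Tracts, which the interview does not detail. It assumes that each fiber is stored as a 3D polyline, and it swaps in a simple, widely used measure, the minimum average direct-flip distance, to compare every fiber of one bundle with every fiber of another. The function names (`resample`, `bundle_distance_matrix`) and the choice of 20 points per fiber are illustrative assumptions.

```python
import numpy as np


def resample(streamline, n_points=20):
    """Resample a 3D polyline to n_points equally spaced along its arc length."""
    pts = np.asarray(streamline, dtype=float)
    seg = np.linalg.norm(np.diff(pts, axis=0), axis=1)   # length of each segment
    arc = np.concatenate([[0.0], np.cumsum(seg)])        # cumulative arc length
    targets = np.linspace(0.0, arc[-1], n_points)
    # Interpolate x, y and z separately along the arc length.
    return np.column_stack([np.interp(targets, arc, pts[:, k]) for k in range(3)])


def bundle_distance_matrix(bundle_a, bundle_b, n_points=20):
    """Pairwise distances between the fibers of two bundles.

    Illustrative assumption: the distance is the mean point-to-point distance,
    taken as the minimum over the two possible orientations of a fiber,
    because a fiber has no preferred direction.
    """
    ra = np.stack([resample(s, n_points) for s in bundle_a])  # (Na, P, 3)
    rb = np.stack([resample(s, n_points) for s in bundle_b])  # (Nb, P, 3)
    direct = np.linalg.norm(ra[:, None] - rb[None], axis=-1).mean(axis=-1)
    flipped = np.linalg.norm(ra[:, None] - rb[None, :, ::-1], axis=-1).mean(axis=-1)
    return np.minimum(direct, flipped)                         # (Na, Nb)


# Toy example: one straight fiber compared with a shifted copy and a reversed copy.
line = [[0, 0, 0], [10, 0, 0]]
shifted = [[0, 2, 0], [5, 2, 0], [10, 2, 0]]
reversed_line = line[::-1]
distances = bundle_distance_matrix([line], [shifted, reversed_line])
```

The size of the distance matrix grows with the product of the numbers of fibers in the two bundles. With very large numbers of fibers per subject and a cohort of a thousand patients, a brute-force comparison of this kind quickly becomes impractical, which is why compact approximations and aggregation of similar fibers matter.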

Who are you collaborating with on this project to obtain the tractography data and study the needs of practitioners?

PG: We use a high-quality, freely accessible database of healthy individuals from the Human Connectome Project. We also collaborate with clinicians at the Pitié-Salpêtrière, Sainte-Anne and Kremlin-Bicêtre hospitals in the Paris region. These are radiologists, neurologists and neurosurgeons. They share their experience of the issues they face. We are initially focusing on three applications: Tourette syndrome, multiple sclerosis, and surgery on patients with tumors.
