AI4EU: a project bringing together a European AI community

On January 10th, the AI4EU project (Artificial Intelligence for the European Union), an initiative of the European Commission, was launched in Barcelona. This 3-year project led by Thales, with a budget of €20 million, aims to bring Europe to the forefront of the world stage in the field of artificial intelligence. While the main goal of AI4EU is to gather and coordinate the European AI community as a single entity, the project also aims to promote EU values: ethics, transparency and algorithmic explainability. TeraLab, the AI platform at IMT, is an AI4EU partner. Interview with its director, Anne-Sophie Taillandier.

What is the main goal of the AI4EU H2020 project?

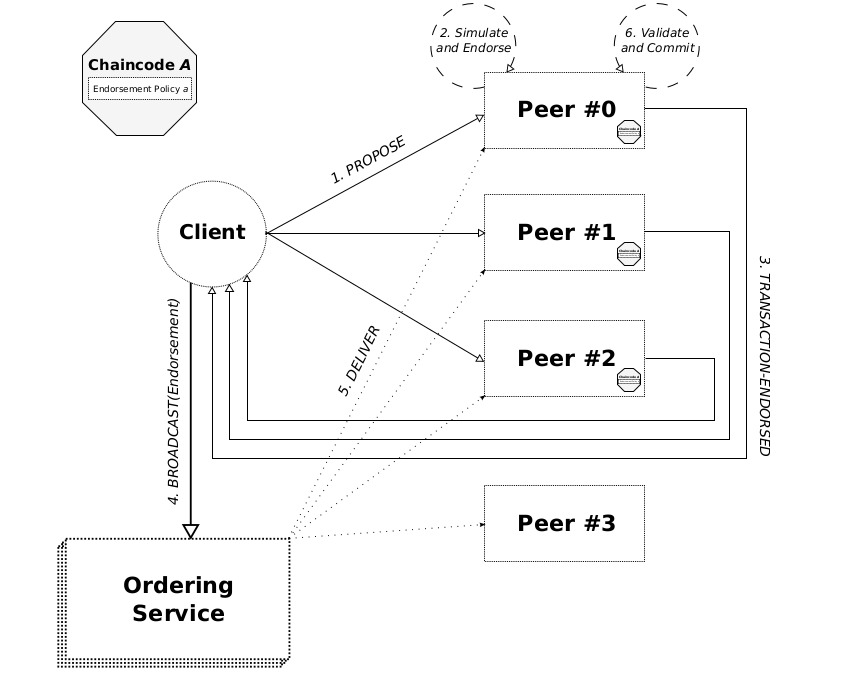

Anne-Sophie Taillandier: To create a platform bringing together the Artificial Intelligence (AI) community and embodying European values: sovereignty, trust, responsibility, transparency, explainability… AI4EU seeks to make AI resources, such as data repositories, algorithms and computing power, available for all users in every sector of society and the economy. This includes everyone from citizens interested in the subject and SMEs seeking to integrate AI components to start-ups, large corporations and researchers, all with the goal of boosting innovation, reinforcing European excellence and strengthening Europe’s leading position in the key areas of artificial intelligence research and applications.

What is the role of this platform?

AST: It primarily plays a federating role. AI4EU, with 79 members in 21 EU countries, will provide a single entry point for connecting with existing initiatives and accessing the skills and expertise pooled in a common base. It will also play a watchdog role and will provide the European Commission with the key elements it needs to orient its AI strategy.

TeraLab, the IMT Big Data platform, is also a partner. How will it contribute to this project?

AST: Along with Orange, TeraLab coordinates the “Platform Design & Implementation” work package. We provide users with experimentation and integration tools that are easy to use without prior theoretical knowledge, which accelerates the start-up phase for projects developed using the platform. For common questions that arise when launching a new project, such as the necessary computing power, data security, etc., TeraLab offers well-established infrastructure that can quickly provide solutions.

Which use cases will you work on?

AST: The pilot use cases focus on public services, the Internet of Things (IoT), cybersecurity, health, robotics, agriculture, the media and industry. These use cases will be supplemented by open calls launched over the course of the project. These open calls will target companies that want to integrate platform components into their activities. They could benefit from the sub-grants provided for in the AI4EU framework: the European Commission funds the overall project, which in turn funds companies proposing convincing projects from a dedicated budget totalling €3 million.

Ethical concerns represent a significant component of European reflection on AI. How will they be addressed?

AST: They certainly represent a central issue. The project governance will rely on a scientific committee, an industrial committee and an ethics committee that will ensure transparency, reproducibility and explainability by means of tools including charters, indicators and labels. Far from representing an obstacle to business development, the emphasis on ethics creates added value and a distinguishing feature for this platform and community. The guarantee that the data will be protected and will be used in an unbiased manner represents a competitive advantage for the European vision. Beyond data protection, other ethical aspects such as gender parity in AI will also be taken into account.

What will the structure and coordination look like for this AI community initiated by AI4EU?

AST: The project members will meet at 14 events in 14 different countries to gather as many stakeholders as possible throughout Europe. Coordinating the community is an essential aspect of this project. Weekly meetings are also planned. Every Thursday morning, as part of a “world café”, participants will share information and feedback, and suppliers and users will engage in discussions. A digital collaborative platform will also be established to facilitate interactions between stakeholders. In other words, we are sure to keep in touch!