Data sharing, a common European challenge

Promoting data sharing between economic players is one of the major objectives of Europe’s digital governance strategy. Achieving it means meeting two specific challenges. Firstly, a community must be created around data issues, bringing together stakeholders from multiple sectors. Secondly, the technological choices made by these stakeholders must be harmonised.

‘If we want more efficient algorithms, with qualified uncertainty and reduced bias, we need not only more data, but more diverse data’, explains Sylvain Le Corff. With these words, the statistics researcher at Télécom SudParis sums up the whole challenge of data sharing. The need is not confined to researchers: industrial players must also enrich their data with data from their ecosystem. For instance, an energy producer will benefit greatly from sharing industrial data with suppliers or consumer groups, and vice versa. A car manufacturer will become all the more efficient with more data sources from its sub-contractors.

The problem is that sharing data is far from a trivial operation. The reason lies in the many technical solutions that exist to produce, store and use data. For a long time, the prevailing idea among economic players was to exploit their data themselves, so each organisation made its own choices of architecture, format and data-related protocols. An algorithm developed to exploit data sets in one specific format cannot use data packaged in another. Sharing therefore calls for a major harmonisation phase.
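To make the harmonisation problem concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the two record layouts, the field names and the units are invented and do not come from any organisation mentioned in this article. It simply shows two partners describing the same measurement differently, and the small adapters needed before a single algorithm can use both.

```python
from datetime import datetime, timezone


def from_producer(record: dict) -> dict:
    """Adapter for a hypothetical producer format: kWh, ISO 8601 timestamps."""
    return {
        "site": record["site_id"],
        "time": datetime.fromisoformat(record["timestamp"]),
        "energy_kwh": float(record["energy_kwh"]),
    }


def from_supplier(record: dict) -> dict:
    """Adapter for a hypothetical supplier format: MWh, Unix epoch seconds."""
    return {
        "site": record["plant"],
        "time": datetime.fromtimestamp(record["ts"], tz=timezone.utc),
        "energy_kwh": float(record["mwh"]) * 1000.0,  # convert MWh to kWh
    }


# The same reading, as each (fictional) partner would store it.
producer_row = {
    "site_id": "A1",
    "timestamp": "2020-12-02T10:00:00+00:00",
    "energy_kwh": "1250.0",
}
supplier_row = {"plant": "A1", "ts": 1606903200, "mwh": 1.25}

# After harmonisation, both rows share one schema and can be compared.
harmonised = [from_producer(producer_row), from_supplier(supplier_row)]
```

Each new partner with its own conventions requires another adapter of this kind, which is why pre-processing grows quickly when no shared description of the data exists.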

‘This technical aspect is often under-estimated in data sharing considerations’, Sylvain Le Corff comments. ‘Yet we are aware that the pre-processing needed to harmonise data is a real difficulty.’ The researcher cites the example of automatic language analysis, a key issue for artificial intelligence, which relies on the automatic processing of texts from multiple sources: raw texts, texts generated from audio or video documents, texts derived from other texts, and so on. This is the notion of multi-modality. ‘The plurality of sources is well-managed in the field, but the manner in which we oversee this multi-modality can vary within the same sector.’ Two laboratories or two companies will therefore not harmonise their data in the same way. In order to work together, they have no choice but to go through this tedious pre-processing, which can hamper collaboration.

A European data standard

Olivier Boissier, a researcher in artificial intelligence and inter-operability at Mines Saint-Étienne, adds another factor to this issue: ‘The people who help to produce or process data are not necessarily data or AI specialists. In general, they are people with high expertise in the field of application, but who don’t always know how to open or pool data sets.’ Given such technical limitations, a promising approach consists in standardising practices. This task is being taken on by the International Data Spaces Association (IDSA), whose role is to promote data sharing on a global scale, and more particularly in Europe.

Contrary to what one might assume, the idea of a data standard does not mean imposing a single norm on data format, architecture or protocol. Each sector has already worked on ontologies to help facilitate dialogue between data sets. ‘Our intention is not to provide yet another ontology’, explains Antoine Garnier, project head at IDSA. ‘What we are offering is more of a meta-model which enables a description of data sets based on those sector ontologies, and with an agnostic approach in terms of the sectors it targets.’
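The idea of describing data sets rather than reformatting them can be sketched as follows. This is an illustration only and not the IDSA information model: the `DatasetDescription` class, its fields, the `example.org` ontology addresses and the concept names are all hypothetical. The point is that the description stays the same across sectors, while the vocabulary it points to comes from each sector's own ontology.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DatasetDescription:
    """Sector-agnostic description of a data set (all fields are hypothetical)."""
    title: str
    publisher: str
    media_type: str    # how the data is packaged, e.g. "text/csv"
    ontology_uri: str  # sector ontology the concepts are taken from
    concepts: List[str] = field(default_factory=list)


def find_by_concept(
    catalogue: List[DatasetDescription], concept: str
) -> List[DatasetDescription]:
    """Return the descriptions that declare the given ontology concept."""
    return [d for d in catalogue if concept in d.concepts]


# Two fictional data sets from different sectors, described the same way.
catalogue = [
    DatasetDescription(
        title="Hourly plant output",
        publisher="Energy producer (fictional)",
        media_type="text/csv",
        ontology_uri="https://example.org/ontology/energy",
        concepts=["energy:Production", "energy:Site"],
    ),
    DatasetDescription(
        title="Part delivery log",
        publisher="Car sub-contractor (fictional)",
        media_type="application/json",
        ontology_uri="https://example.org/ontology/automotive",
        concepts=["auto:Delivery", "auto:Part"],
    ),
]

matches = find_by_concept(catalogue, "energy:Production")
```

A consumer can search such a catalogue without knowing in advance how each file is structured; the format and the sector vocabulary are stated in the description instead of being imposed on the data.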

This standard could be seen as a list of conditions on which to base data use. To summarise the conditions in IDSA’s architectural model, ‘the three cornerstones are the inter-operability, certification and governance of data’, says Antoine Garnier. Thanks to this approach, the resulting standard serves as a guarantee of quality between players. It enables users to determine rapidly whether an organisation fulfils these conditions and is thus trustworthy. This system also raises the question of security, which is one of the primary concerns of organisations that agree to open their data.

Europe, the great lake region of data?

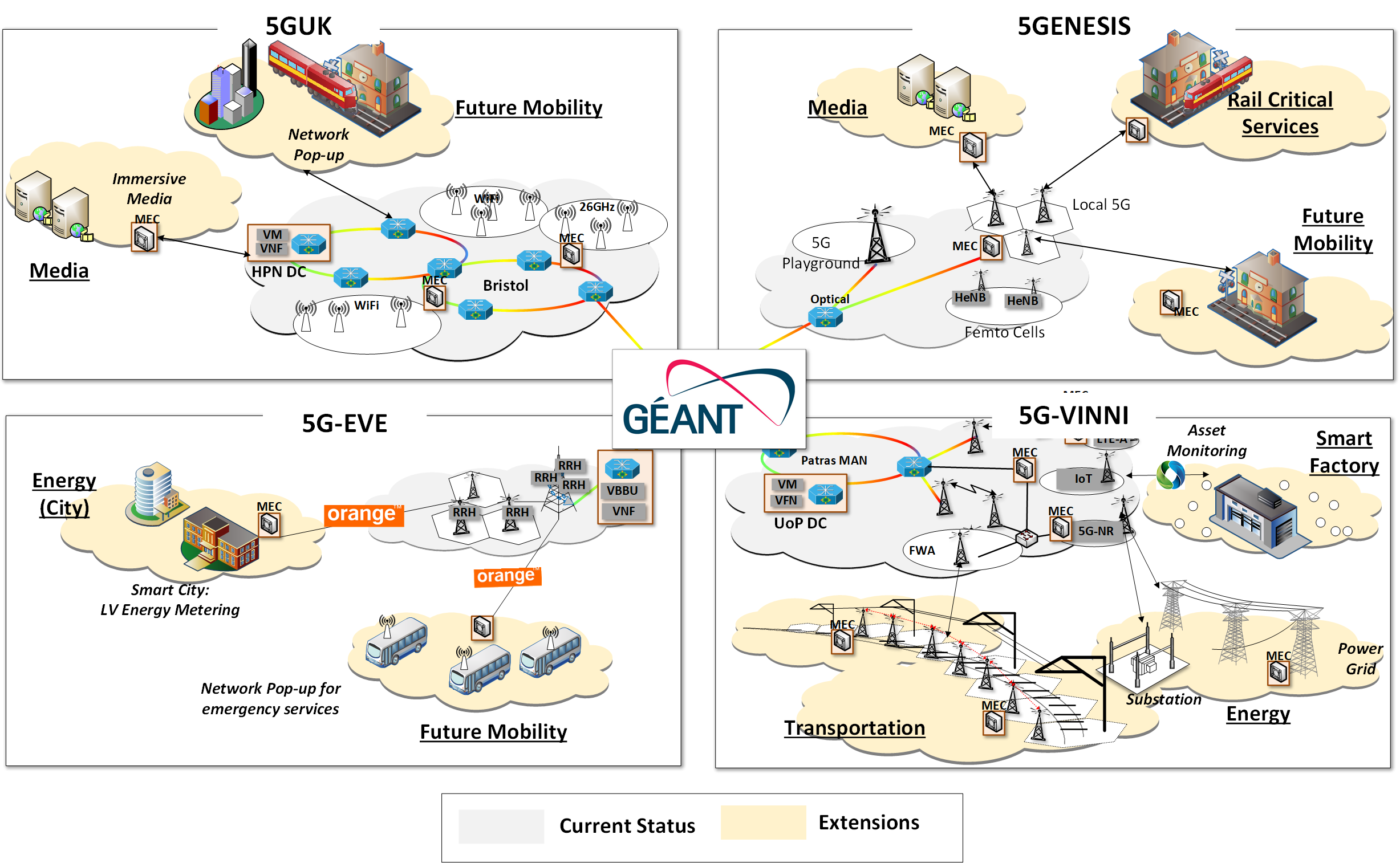

While developing a standard is a step forward in technical terms, it has yet to be put into actual use. For this, its design must incorporate the technical, legal, economic and political concerns of European data stakeholders, producers and users alike. Hence the importance of creating a community consisting of as many organisations as possible. In Europe, since 2020, this community has had a name: Gaia-X, an association of players, bringing together IMT and IDSA in particular, formed to structure efforts around the federation of data, software and infrastructure clouds. Via Gaia-X, public and private organisations aim to roll out standardisation actions, using the IDSA standard among others, but may also carry out research, training or awareness activities.

‘This is such a vast issue that if we want to find a solution, we must approach it through a community of experts in security, inter-operability, governance and data analysis’, Olivier Boissier points out, emphasising the importance of dialogue between specialists around this topic. Alongside their involvement in Gaia-X, IMT and IDSA are organising a winter school from 2 to 4 December to raise awareness of data-sharing issues among young researchers (see insert below). With the support of the German-French Academy for the Industry of the Future, it will provide the keys to understanding technical and human issues, through concrete cases. ‘Within the research community, we are used to taking part in conferences to keep up to date on the state of play of our field, but it is difficult to have a deeper understanding of the problems faced by other fields’, Sylvain Le Corff admits. ‘This type of Franco-German event is essential to structuring the European community and forming a global understanding of an issue, by taking a step back from our own area of expertise.’

The European Commission has made no secret of its ambition to create a space for the free circulation of data within Europe. In other words, a common environment in which personal and confidential data would be secured, but also in which organisations would have easy access to a significant amount of industrial data. To achieve this idyllic scenario of cooperation between data players, the collective participation of organisations is an absolute prerequisite. For academics, the community-based approach is a core practice and does not represent a major challenge. For businesses, however, there remain a number of stakeholders to win over. Most major industries have understood the benefits of data sharing, ‘but some companies still see data as a monetisable war chest that they must avoid sharing’, says Antoine Garnier. ‘We must take an informative approach and shatter preconceived ideas.’

What about non-European players? When we speak about data sharing, we systematically refer to the cloud, a market cornered by three American players, Amazon, Microsoft and Google, behind which we find other American stakeholders (IBM and Oracle) and a handful of Chinese interests such as Alibaba and Tencent. How do we convince these ‘hyper-scalers’ (the term refers to their ability to scale up to meet growing demand, regardless of the sector) to adopt a standard which is not their own, when they own the technology on which the majority of data use is based? ‘Paradoxically, we are perhaps not such bad news for them’, Antoine Garnier assures us. ‘Along with this standard, we are also offering a form of certification. For players suffering from a negative image, this allows them to demonstrate compliance with the rules.’

This standardisation strategy also has an impact on European digital sovereignty and the transmission of European values. Just as Europe succeeded in imposing a personal data protection standard in the 2010s with the formalisation of the GDPR, it is currently working to define a standard around industrial data sharing. Its approach to this task is identical: to make standardisation a guarantee of security and responsible management. ‘A standard is often perceived as a constraint, but it is above all a form of freedom’, concludes Olivier Boissier. ‘By adopting a standard, we free ourselves of the technical and legal constraints specific to each given use.’

From 2 to 4 December: a winter school on data sharing

Around the core theme of Data Analytics & AI, IMT and TU Dortmund are organising a winter school on data sharing for industrial systems, from 2 to 4 December 2020, in collaboration with IDSA, the German-French Academy for the Industry of the Future and with the support of the Franco-German University. Geared towards doctoral students and young researchers, its aim is to open perspectives and establish a state of play on the question of data exchange between European stakeholders. Through the participation of various European experts, this winter school will examine the technical, economic and ethical aspects of data sharing by bringing together the field expertise of researchers and industrial players.