Understanding methane hydrate formation to revolutionize pipelines

As hydrocarbons are drawn from ever greater depths beneath the sea floor, oil companies face the risk of blockages in their pipelines caused by the formation of solid compounds: methane hydrates. Ana Cameirao, an engineer who holds a PhD and specializes in industrial crystallization at Mines Saint-Étienne, is working to understand and model this phenomenon. She has contributed to the creation of an industrial chair in collaboration with international laboratories and operators such as Total, with the aim of developing modelling software for the flow within pipelines. Their mission is to make the use of underwater pipelines more economical and more ecological.

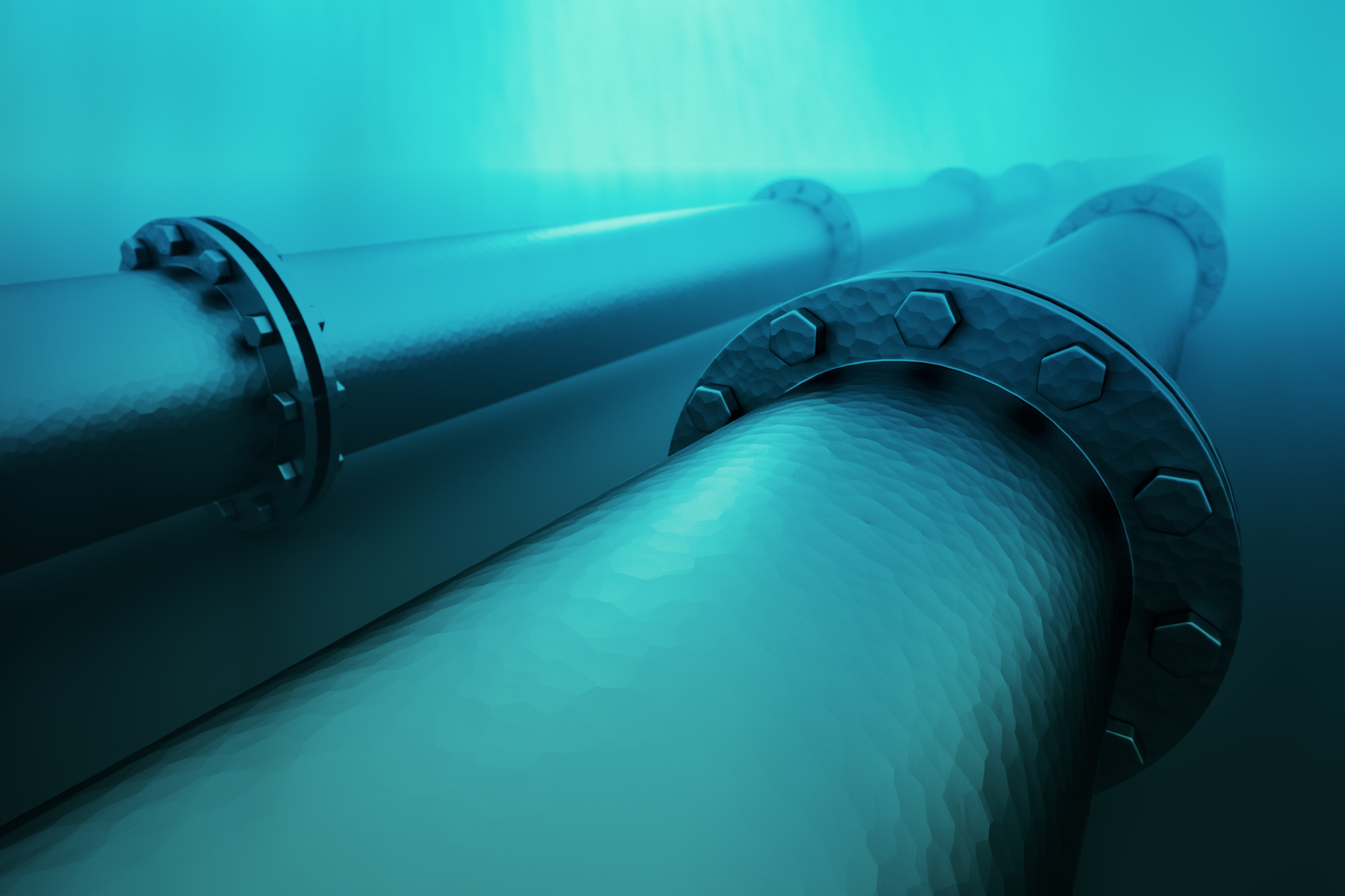

“Always further, always deeper.” This is the logic behind the siting of offshore platforms. Faced with intense global demand, and thanks to technological progress, hydrocarbon reserves previously considered inaccessible are now exploitable. However, the industry has met an obstacle: methane hydrates. These solid compounds consist of methane molecules trapped in a sort of cage formed by solidified water molecules. They form in environments at around 4°C and 80 bar of pressure, such as deep-sea pipelines, where they can accumulate and eventually obstruct the flow. This problem can prove hard to fix, given that depths reach close to 3,000 meters!

To get around this problem, oil companies generally inject methanol into the pipelines to lower the temperature at which hydrates form. However, injecting this alcohol carries an additional cost as well as an environmental impact. Moreover, systematic thermal insulation of pipelines is not sufficient to prevent hydrates from forming. “The latest solution consists in injecting additives which are supposed to slow the formation and accumulation of hydrates”, explains Ana Cameirao, a researcher at the SPIN (Sciences des Processus Industriels et Naturels) research center at Mines Saint-Étienne. She is a specialist in crystallization, the science of how solid aggregates form and grow, for instance within liquid phases.

Towards the reasonable exploitation of pipelines

For nearly 10 years, the researcher has been studying the formation of hydrates under all the conditions likely to occur in offshore pipelines. “We are looking to model the phenomenon, in other words, to estimate the quantity of hydrates formed, to see whether this solid phase can be transported by the flow, and to determine whether additives need to be injected and, if so, in what quantity”, she summarizes. The goal is to encourage a more measured use of the pipelines and to avoid the massive injection of methanol as a preventative measure. To build these models, Ana Cameirao relies on a valuable experimental tool: the Archimedes platform.

This 50-meter loop, located at the SPIN center, allows her to reproduce the flow of the mixture of oil, water and gas which circulates in the pipelines. An array of equipment, including cameras and laser probes which operate under very high pressure, allows her to study the formation of the solid compounds, including their size, nature and aggregation speed. She has been closely examining all the possible scenarios: “we vary the temperature and pressure, but also the nature of the mix, for example by incorporating more or less gas, or by varying the proportion of water in the mixture”, explains Ana Cameirao.

Thanks to all these trials, in 2016 the researcher and her team published one of the most comprehensive models of methane hydrate crystallization. “Similar models do already exist, but only for fixed proportions of water. Our model is more extensive: it can integrate any proportion of water. This allows a greater variety of oil wells to be studied, including the oldest ones where the mixture can consist of up to 90% water!” This model is the product of painstaking work: over 150 experiments have been completed over the last 5 years, each of them representing at least two days of measurements. Above all, it opens up new perspectives: “Petrochemical process simulation software is very limited in explaining the flow in pipelines alongside hydrate formation. The main task is to invent modules that are able to take this phenomenon into consideration”, says Ana Cameirao.

Applications in environmental technology

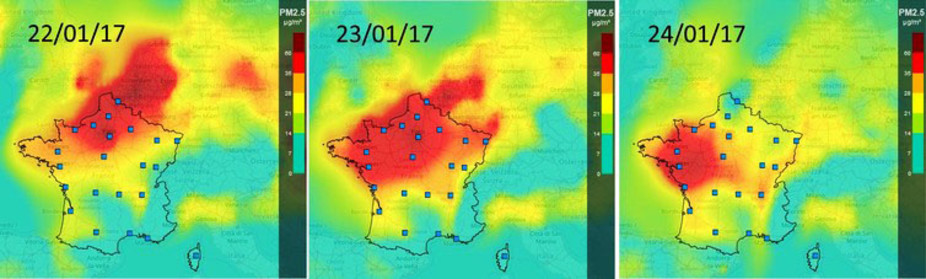

Developing such modules is the next step in a project that is nearing completion: “We are currently aiming to combine our knowledge on crystallization of hydrates with that of experts on fluid mechanics, in order to better characterize their flow”. This multidisciplinary approach is the main subject of the international chair Gas Hydrates and Multiphase Flow in Flow Assurance, which was opened in January 2017 by Mines Saint-Étienne in collaboration with two laboratories, one from the Federal University of Technology in Parana, Brazil (UTFPR), and one from the Colorado School of Mines in the US. The chair, which will run for three to five years, also involves industrial partners, foremost among them Total. “Total, which has been a partner of the research center for 15 years, not only offers financial support, but also shares with us its experience of real-world operations”, says Ana Cameirao.

Credits: Mines Saint-Étienne

A better understanding of hydrate crystallization will facilitate the offshore exploitation of hydrocarbons, but it could also benefit environmental technology over time. Indeed, researchers are working on innovative applications of hydrates, such as CO2 capture or new climate control techniques. “The idea would be to form hydrate slurries overnight, when energy is available and less expensive, and to circulate them through a climate control system during the daytime. As the hydrates melt, the heat in the surrounding area would be absorbed”, explains Ana Cameirao. Clearly, crystallization can lead almost anywhere!

[author title=”Ana Cameirao : « Creativity comes first »” image=”https://imtech-test.imt.fr/wp-content/uploads/2017/09/Portrait_Ana_Cameirao.jpg”]

Ana Cameirao chose very early on to study engineering in her home country of Portugal. “What interested me was the possibility of applying science, the potential to have a tangible impact on people’s lives”, she recalls. After finishing her studies in industrial crystallization at IMT Mines Albi, she threw herself into applied research. “It’s a constant challenge, we are always discovering new things”, she marvels, looking back over her ten years at the SPIN center at Mines Saint-Étienne.

Ana Cameirao also draws on creativity in her role as a professor, using innovative teaching methods which include projects, specific case studies, independent literature research, and much more. “Students today are no longer interested in two-hour lectures. You need to involve them”, she says. She feels so strongly about this topic that she decided to complete a MOOC dedicated to exploring methods for stimulating creativity, and plans to organize her own workshops on the subject within her school in 2018!

[/author]

The TSN Carnot institute, a guarantee of excellence in partnership-based research since 2006
