Energy and telecommunications: brought together by algorithms

It is now widely accepted that algorithms can transform entire sectors. In telecommunications, they may greatly change how energy is produced and consumed. From reducing the energy consumption of network facilities to making better use of renewable energy, operators have embarked on a number of large-scale projects, and in each case algorithms have been central to the change. What follows is an overview of the transformations currently under way and of findings from research by Loutfi Nuaymi, a researcher in telecommunications at IMT Atlantique, who gave a talk on the subject on April 28 at the IMT symposium dedicated to energy and the digital revolution.

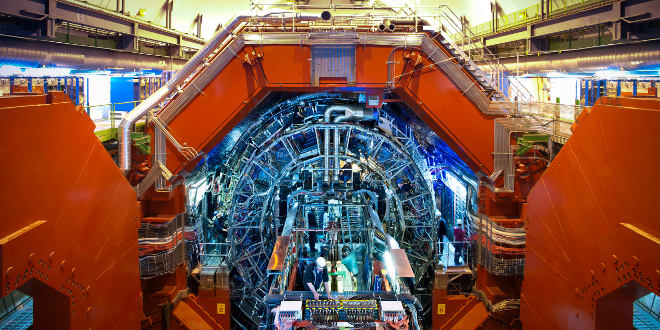

20,000: the average number of relay antennae owned by a mobile operator in France. Also called “base stations,” they account for 70% of telecommunications operators’ energy bills. Since each station transmits with a power of approximately 1 kW, reducing their electricity demand is a crucial issue for operators seeking to improve the energy efficiency of their networks. To achieve this, the sector is currently focusing more on hardware advances than on software. Thanks to the latest advances, a recent base station consumes significantly less energy while delivering nearly a hundred times more data throughput. But new algorithms that promise energy savings are also being developed, including some that simply involve… switching off base stations at certain scheduled times!
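
To put these figures in perspective, the short Python sketch below turns them into a yearly energy estimate. It treats the 1 kW figure as each station’s average electrical draw around the clock, which is a simplifying assumption made for illustration; real consumption varies with traffic load and equipment generation.

```python
# Back-of-the-envelope estimate using the article's round figures.
# Assumption: each station draws about 1 kW on average, 24 hours a day, all year.
STATIONS = 20_000           # average number of base stations per French operator
POWER_KW = 1.0              # assumed average electrical draw per station, in kW
HOURS_PER_YEAR = 24 * 365   # 8,760 h (leap years ignored)


def annual_energy_gwh(stations: int, power_kw: float, hours: float) -> float:
    """Yearly energy use in GWh (1 GWh = 1,000,000 kWh)."""
    return stations * power_kw * hours / 1_000_000


if __name__ == "__main__":
    print(f"{annual_energy_gwh(STATIONS, POWER_KW, HOURS_PER_YEAR):.1f} GWh per year")  # prints 175.2 GWh per year
```

Under these assumptions, a single operator’s base stations would use on the order of 175 GWh a year.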

This solution may seem radical, since switching off a base station in a cellular network potentially prevents users within a cell from accessing the service. Loutfi Nuaymi, a researcher in telecommunications at IMT Atlantique, is studying this topic in collaboration with Orange. He explains that “base stations would only be switched off during low-load times, and in urban areas where there is greater overlap between cells.” In large cities, switching off a base station from 3 to 5 a.m. would have almost no consequence, since users are likely to be located in areas covered by at least one other base station, if not more.

Here, algorithms play a twofold role. First, they would manage switching off antennas when user demand is lowest (at night) while maintaining sufficient network coverage. Second, they would gradually switch the base stations back on as users reconnect in the morning, until peak hours, when all cells must be active. This technique could prove particularly effective at saving energy, since base stations currently remain switched on at all times, even during off-peak hours.
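
The switch-off logic described above can be sketched as a greedy procedure. This is a minimal illustration under assumptions of our own, not the algorithm developed at IMT Atlantique or by Orange: each station is described by the set of areas it covers and a current or forecast load, a station is a candidate for standby only if its load falls below a hypothetical threshold, and it is switched off only if every area it serves stays covered by another active station. The names `plan_active_stations` and `low_load_threshold` are illustrative.

```python
from typing import Dict, Set


def plan_active_stations(
    coverage: Dict[str, Set[str]],
    load: Dict[str, float],
    low_load_threshold: float = 0.1,
) -> Set[str]:
    """Greedily choose which base stations can go on standby.

    coverage: station id -> set of area ids the station covers (hypothetical model)
    load: station id -> current or forecast load, as a fraction of capacity
    low_load_threshold: hypothetical cutoff below which a station may be switched off
    Returns the set of stations that should stay active.
    """
    active = set(coverage)
    # Consider only lightly loaded stations, least loaded first.
    candidates = sorted(
        (s for s in coverage if load[s] < low_load_threshold),
        key=lambda s: load[s],
    )
    for station in candidates:
        others = active - {station}
        # Union of the areas that the remaining active stations would still cover.
        still_covered = set().union(*(coverage[o] for o in others))
        # Switch off only if none of this station's areas would be left uncovered.
        if coverage[station] <= still_covered:
            active.remove(station)
    return active


if __name__ == "__main__":
    # Toy example: three overlapping cells, two of them almost idle.
    coverage = {"A": {"1", "2"}, "B": {"2", "3"}, "C": {"1", "3"}}
    load = {"A": 0.05, "B": 0.02, "C": 0.5}
    print(sorted(plan_active_stations(coverage, load)))  # prints ['A', 'C']
```

Re-running the function as forecast demand rises in the morning returns a growing set of active stations, in the spirit of the gradual switch-on described above; once every station’s load exceeds the threshold, all cells are active again.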

Loutfi Nuaymi points out that “the use of such algorithms for putting base stations on standby mode is taking time to reach operators.” Their reluctance is understandable, since service interruptions are, by definition, telecom companies’ greatest fear. Today, certain operators could put one base station out of ten on standby in dense urban areas in the middle of the night. But the IMT Atlantique researcher is confident in the quality of his work and asserts that it is possible “to go even further, while still ensuring high quality service.”
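
A rough order of magnitude for this standby scenario can be computed the same way. The sketch combines the one-in-ten figure with the 3 to 5 a.m. window mentioned earlier and assumes that a station on standby draws nothing; both are simplifications made for illustration, since real equipment keeps some consumption in standby and operators may use different windows.

```python
# Rough yearly savings from nightly standby, using the article's round figures.
STATIONS = 20_000          # base stations per operator
POWER_KW = 1.0             # assumed average draw of an active station, in kW
STANDBY_SHARE = 0.10       # one station out of ten
STANDBY_HOURS = 2          # assumption: the 3 to 5 a.m. window
STANDBY_DRAW_KW = 0.0      # assumption: a station on standby draws nothing
HOURS_PER_YEAR = 24 * 365


def yearly_standby_savings_kwh(
    stations: int,
    share: float,
    hours_per_night: float,
    power_kw: float,
    standby_kw: float,
    nights: int = 365,
) -> float:
    """Energy saved per year, in kWh, by putting a share of stations on standby each night."""
    per_night = stations * share * hours_per_night * (power_kw - standby_kw)
    return per_night * nights


if __name__ == "__main__":
    saved = yearly_standby_savings_kwh(
        STATIONS, STANDBY_SHARE, STANDBY_HOURS, POWER_KW, STANDBY_DRAW_KW
    )
    total = STATIONS * POWER_KW * HOURS_PER_YEAR
    # prints "1.46 GWh saved per year (0.83% of the total)"
    print(f"{saved / 1e6:.2f} GWh saved per year ({100 * saved / total:.2f}% of the total)")
```

Extending the window, or the share of stations put on standby, scales the saving proportionally.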

Gradually switching base stations on or off in the morning and at night according to user demand is an effective energy-saving solution for operators.

While energy management algorithms already allow for significant energy savings in 4G networks, their contributions will be even greater over the next five years, with 5G technology leading to the creation of even more cells to manage. The new generation will be based on a large number of femtocells covering areas as small as ten meters — in addition to traditional macrocells with a one-kilometer area of coverage.

Femtocells consume significantly less energy, but given the large number of these cells, it may be advantageous to switch them off when not in use, especially since they are not used as the primary source of data transmission, but rather to support macrocells. Switching them off would not in any way prevent users from accessing the service. Loutfi Nuaymi describes one way this could work. “It could be based on a system in which a user’s device will be detected by the operator when it enters a femtocell. The operator’s energy management algorithm could then calculate whether it is advantageous to switch on the femtocell, by factoring in, for example, the cost of start-up or the availability of the macrocell. If it is not overloaded, there is no reason to switch on the femtocell.”
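
The femtocell decision Loutfi Nuaymi describes can be expressed as a simple rule. In this hedged sketch, which is not an operator’s actual algorithm, the femtocell stays off whenever the macrocell can absorb the new user below a hypothetical overload threshold; otherwise it is switched on only if the estimated cost of congestion over the user’s expected stay outweighs the start-up and running costs. All parameter names and cost figures are illustrative assumptions.

```python
def should_switch_on_femtocell(
    macro_load: float,
    extra_load: float,
    stay_hours: float,
    startup_cost_eur: float,
    femto_cost_eur_per_hour: float,
    overload_penalty_eur_per_hour: float,
    overload_threshold: float = 0.8,
) -> bool:
    """Hypothetical cost/benefit rule for waking a femtocell when a user enters it.

    macro_load: current macrocell utilisation (0..1)
    extra_load: load the arriving user would add to the macrocell
    stay_hours: expected time the user spends in the femtocell
    startup_cost_eur: one-off cost of waking the femtocell up
    femto_cost_eur_per_hour: running cost of the femtocell while on
    overload_penalty_eur_per_hour: value placed on avoiding macrocell congestion
    overload_threshold: utilisation above which the macrocell counts as overloaded
    """
    # If the macrocell can absorb the user, there is no reason to switch the femtocell on.
    if macro_load + extra_load <= overload_threshold:
        return False
    # Otherwise, switch on only if avoiding congestion is worth more than running the femtocell.
    cost = startup_cost_eur + femto_cost_eur_per_hour * stay_hours
    benefit = overload_penalty_eur_per_hour * stay_hours
    return benefit > cost


if __name__ == "__main__":
    # Busy macrocell, user expected to stay two hours: waking the femtocell pays off.
    print(should_switch_on_femtocell(0.75, 0.1, 2.0, 0.05, 0.01, 0.5))  # prints True
```

The start-up cost is what makes very short visits not worth serving from the femtocell, even when the macrocell is busy.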

What is the right energy mix to power mobile networks?

The value of these algorithms lies in their capacity to calculate cost/benefit ratios using a model that takes into account as many parameters as possible. They can therefore bring autonomy, flexibility, and responsiveness to base station management. Researchers at IMT Atlantique are building on this decision-support principle and going a step further than simply determining whether base stations should be switched on or put on standby. In addition to limiting energy consumption, they are developing algorithms for optimizing the energy mix used to power the network.

Their starting point is twofold: renewable energy sources are less expensive, but operators that equip themselves with solar panels or wind turbines must also store the energy produced to compensate for periodic variations in sunshine and the sporadic nature of wind. So how can an operator decide between using stored energy, energy supplied by its own solar or wind facilities, or energy from the traditional grid, which may incorporate varying degrees of smart technology? Loutfi Nuaymi and his team are working on use cases related to this question and have joined forces with industrial partners to test and develop algorithms that could provide some answers.
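
One simple way to arbitrate between the three sources is a fixed priority rule: use on-site renewable production first, store any surplus, then draw on the battery, and buy the remainder from the grid. The sketch below implements that greedy rule for a single time step, assuming a lossless battery and ignoring grid prices. It is a baseline for illustration only, not the team’s method, which the article does not detail.

```python
from dataclasses import dataclass


@dataclass
class StepResult:
    from_renewable: float  # kWh taken directly from on-site solar/wind
    from_battery: float    # kWh discharged from storage
    from_grid: float       # kWh bought from the grid
    battery_kwh: float     # state of charge at the end of the step


def dispatch_step(
    demand_kwh: float,
    renewable_kwh: float,
    battery_kwh: float,
    capacity_kwh: float,
) -> StepResult:
    """Greedy priority rule for one time step: renewables, then battery, then grid.

    Assumes a lossless battery and ignores grid prices.
    """
    used_renewable = min(demand_kwh, renewable_kwh)
    remaining = demand_kwh - used_renewable
    # Any surplus production is stored, up to the battery's capacity; the rest is curtailed.
    surplus = renewable_kwh - used_renewable
    battery_kwh = min(capacity_kwh, battery_kwh + surplus)
    # Cover any remaining demand from storage first, then from the grid.
    from_battery = min(remaining, battery_kwh)
    battery_kwh -= from_battery
    from_grid = remaining - from_battery
    return StepResult(used_renewable, from_battery, from_grid, battery_kwh)


if __name__ == "__main__":
    # Cloudy hour: 1 kWh needed, 0.4 kWh of solar, 0.5 kWh in a 2 kWh battery.
    print(dispatch_step(1.0, 0.4, 0.5, 2.0))
```

A price-aware variant could, for instance, charge the battery from the grid when tariffs are low; the fixed priority order is kept here only because it is the easiest rule to follow.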

“One of the very concrete questions operators ask is what size battery is best to use for storage,” says the researcher. “Huge batteries cost as much as what operators save by replacing fossil fuels with renewable energy sources. But if the batteries are too small, they will have storage problems. We’re developing algorithmic tools to help operators make these choices, and determine the right size according to their needs, type of battery used, and production capacity.”
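
The battery-sizing question can be illustrated by simulation: for each candidate capacity, replay a production and consumption profile, count the energy that still has to come from the grid, and add the cost of the battery itself. The sketch below picks the capacity with the lowest total cost. The lossless battery that starts empty, the amortized daily battery cost, and the toy profile are all assumptions for illustration, not data from the research.

```python
from typing import Sequence


def grid_energy_kwh(
    demand: Sequence[float], solar: Sequence[float], capacity_kwh: float
) -> float:
    """Energy that must be bought from the grid over one profile.

    Assumes a lossless battery that starts empty; demand and solar are per-step kWh.
    """
    soc = 0.0   # state of charge
    grid = 0.0
    for d, s in zip(demand, solar):
        if s >= d:
            soc = min(capacity_kwh, soc + (s - d))  # store the surplus, if there is room
        else:
            draw = min(d - s, soc)                  # cover the deficit from storage
            soc -= draw
            grid += d - s - draw                    # whatever is left comes from the grid
    return grid


def best_battery_size(
    demand: Sequence[float],
    solar: Sequence[float],
    sizes_kwh: Sequence[float],
    battery_cost_per_kwh_day: float,
    grid_price_per_kwh: float,
) -> float:
    """Pick the candidate capacity with the lowest daily cost (battery + grid energy)."""
    def daily_cost(size: float) -> float:
        grid = grid_energy_kwh(demand, solar, size)
        return size * battery_cost_per_kwh_day + grid_price_per_kwh * grid

    return min(sizes_kwh, key=daily_cost)


if __name__ == "__main__":
    # Toy four-step profile: constant demand, all solar output in the second step.
    demand = [1.0, 1.0, 1.0, 1.0]
    solar = [0.0, 3.0, 0.0, 0.0]
    print(best_battery_size(demand, solar, [0, 1, 2, 3], 0.1, 0.2))  # prints 2
```

In this toy case, a 3 kWh battery costs more without storing anything extra, while smaller ones leave solar energy unused. Raising the battery cost to 0.5 per kWh per day makes the function return 0: the two failure modes the researcher describes.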

Another question: is it more profitable to equip each base station with its own solar panel or wind turbine, or to create an energy farm that supplies power to several antennas? The question is still being explored, but preliminary findings suggest that neither arrangement is clearly preferable for solar panels. Wind turbines, however, are imposing structures that neighbors sometimes object to, which makes it preferable to group them together.

Renewable energies at the cost of diminishing quality of service?

Once this type of constraint has been ruled out, operators must calculate the maximum proportion of renewable energy they can include in the energy mix with the least possible impact on the quality of mobile service. Sunshine and wind speed are variable by nature. For an operator, a sudden drop in production at a wind or solar farm could directly affect network availability: no energy means no working base stations.

Loutfi Nuaymi admits that these limitations reveal the complexity of developing such algorithms: “We cannot simply consider the cost of the operators’ energy bills. We must also take into account the minimum proportion of renewable energy they are willing to use so that their practices meet consumer expectations, the average data rate needed to satisfy users, etc.”

Results from research in this field show that in most cases, the proportion of renewable energies used in the energy mix can be raised to 40%, with only an 8% drop in quality of service as a result. In off-peak hours, this represents only a slight deterioration and does not have a significant effect on network users’ ability to access the service.

And even if quality of service drops sharply, Loutfi Nuaymi has some solutions. “We have worked on a model for a mobile subscription that delays calls if the network is not available. The idea is based on the principle of overbooking on flights. Voluntary subscribers (who, of course, do not have to choose this subscription) accept the risk of the network being temporarily unavailable and, in return, receive financial compensation if it affects their use.”

Although this new subscription format is only one possible solution for operators, and is still a long way from becoming reality, it shows how the field of telecommunications may be transformed in response to energy issues. Questions have arisen at times about the outlook for mobile operators. Given the energy consumed by their facilities and the rise of smart grids which make it possible to manage both self-production and the resale of electricity, these digital players could, over time, come to play a significant role in the energy sector.

“It is an open-ended question and there is a great deal of debate on the topic,” says Loutfi Nuaymi. “For some, energy is an entirely different line of business, while others see no reason why they should not sell the energy collected.” The debate could be settled by new scientific studies in which the researcher is participating. “We are already performing technical-economic calculations in order to study operators’ prospects.” The highly anticipated results could significantly transform the energy and telecommunications market.

This article is part of our dossier Digital technology and energy: inseparable transitions!

Coordinated by Engie, and with one of its main academic partners being IMT,
