H2sys: hydrogen in the energy mix

I’MTech is dedicating a series of articles to success stories from research partnerships supported by the Télécom & Société Numérique Carnot institute (TSN), to which IMT and Femto Engineering belong.


H2sys is helping make hydrogen an energy of the future. This spin-off company from the FCLAB and Femto-ST laboratories in Franche-Comté offers efficient solutions for integrating hydrogen fuel cells. Applications include generators and low-carbon urban mobility. And while the company was officially launched only 6 months ago, its history is closely tied to the pioneers of hydrogen technology from Franche-Comté.

1999, the turn of the century. Political will was focused on the new millennium and energy was already a major industrial issue. The end of the 90s marked the beginning of escalating oil prices after over a decade of price stability. In France, the share of investment in nuclear energy was waning. The quest for other forms of energy production had begun, a search for alternatives worthy of the 2000s. This economic and political context encouraged the town of Belfort and the local authorities of the surrounding region to invest in hydrogen. Thus, the FCLAB research federation was founded, bringing together the laboratories working on this theme. Almost two decades later, Franche-Comté has become a major hub for the discipline. FCLAB is the first national applied research community to work on hydrogen energy and the integration of fuel cell systems. It also includes a social sciences and humanities research approach that looks at how our societies adopt new hydrogen technologies. The federation brings together 6 laboratories, including Femto-ST, and is under the aegis of 10 organizations, including the CNRS.

It was from this hotbed of scientific activity that H2sys was born. Described by Daniel Hissel, one of its founders, as “a human adventure”, the young company’s history is intertwined with that of the Franche-Comté region. First, because it was created by scientists from FCLAB. Daniel Hissel is himself a professor at the University of Franche-Comté and leads a team of researchers at Femto-ST, both of which are partners of the federation. Secondly, because the idea at the heart of the H2sys project grew out of regional activity in the field of hydrogen energy. “As a team, we began our first discussions on the industrial potential of hydrogen fuel cell systems as early as 2004-2005,” Daniel Hissel recalls. The FCLAB teams were already working on integrating these fuel cells into energy production systems. However, the technology was not yet sufficiently mature. The fundamental work did not yet target large-scale applications.

Ten more years would be needed for the uses to develop and for the hydrogen fuel cell market to truly take shape. In 2013, Daniel Hissel and his colleagues watched intently as the market emerged. “All that time we had spent working to integrate the fuel cell technology provided us with the necessary objectivity and allowed us to develop a vision of the future technical and economic issues,” he explains. The group of scientists realized that it was the right time to start their business. They created their project the same year. They quickly received support from the Franche-Comté region, followed by the Technology Transfer Accelerator (SATT) in the Grand Est region and the Télécom & Société Numérique Carnot institute. In 2017, the project officially became the company H2sys.

Hydrogen vs. Diesel?

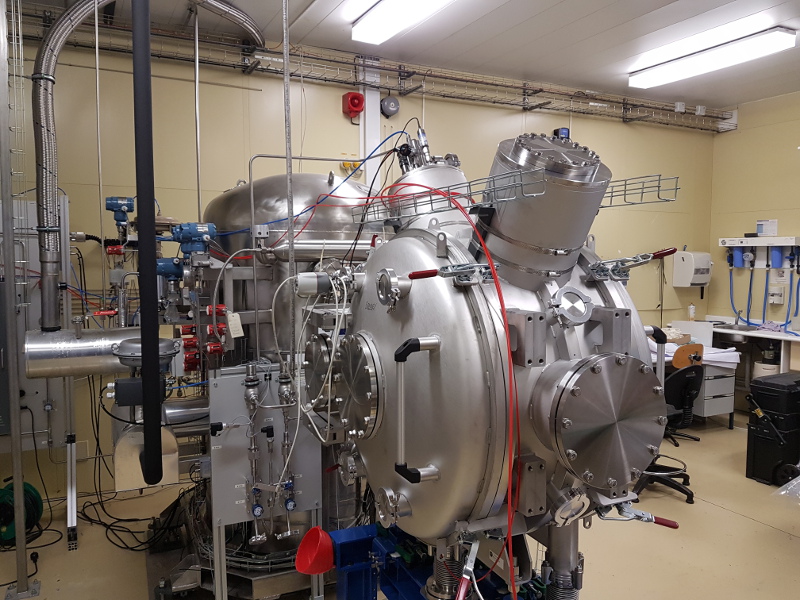

The spin-off now offers services for integrating hydrogen fuel cells based on its customers’ needs. It focuses primarily on generators ranging from 1 to 20 kW. “Our goal is to provide electricity to isolated sites to meet needs on a human scale,” says Daniel Hissel. The applications range from generating electric power for concerts or festivals to supporting rescue teams responding to road accidents or fires. The solutions developed by H2sys integrate expertise from FCLAB and Femto-ST, whose research involves work in system diagnosis and prognosis aimed at understanding and anticipating failures, lifespan analysis, predictive maintenance and artificial intelligence for controlling devices.

Given their uses, H2sys systems are in direct competition with traditional generators which run on combustion engines, specifically diesel. However, while the power ranges are similar, the comparison ends there, according to Daniel Hissel, since hydrogen fuel cell technology offers considerable intrinsic benefits. “The fuel cell is powered by oxygen and hydrogen, and only emits energy in the form of electricity and hot water,” he explains. The lack of pollutant emissions and exhaust gas means that these generators can be used indoors as well as outdoors. “This is a significant benefit when indoor facilities need to be quickly installed, which is what firefighters sometimes must do following a fire,” says the co-founder of the company.

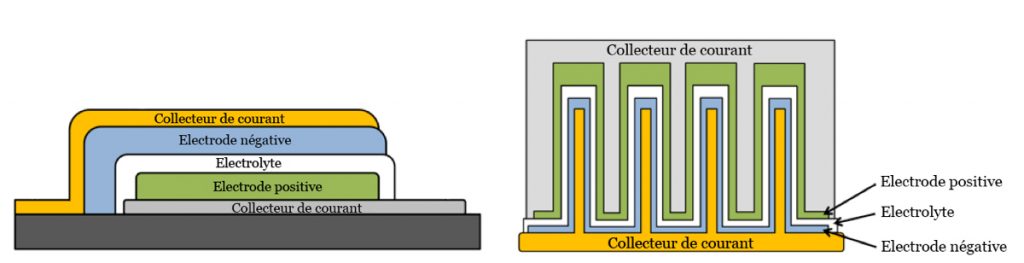

Another argument is how unpleasant it is to work near a diesel generator. Anyone who has witnessed one in use understands just how much noise and pollutant emissions the engine generates. Hydrogen generators, on the other hand, are silent and emit only water. Their maintenance is also easier and less frequent: “Within the system, the gases react through an electrolyte membrane, which makes the technology much more robust than an engine with moving parts,” Daniel Hissel explains. All of these benefits make hydrogen fuel cells an attractive solution.

In addition to generators, H2sys also works on range extenders. “This is a niche market for us because we do not yet have the capacity to integrate the technology into most vehicles,” the researcher explains. However, the positioning of the company does illustrate the existing demand for solutions that integrate hydrogen fuel cells. Daniel Hissel sees even more ambitious prospects. Not only is the electrical efficiency of these fuel cells much better than that of diesel engines (55% versus 35%), but the hot water they produce can also be recovered for various purposes. Many different options are being considered, including a water supply network for isolated sites, or household consumption in micro-cogeneration units for electricity and heating.
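To give a sense of what the 55% versus 35% gap means in practice, here is a rough back-of-the-envelope sketch. Only the two efficiency figures come from the article; the 5 kW load and 8-hour duration are hypothetical values chosen within the 1 to 20 kW range mentioned earlier, and the calculation assumes that all energy not converted to electricity is released as heat, which gives an upper bound on what could be recovered.

```python
def fuel_energy_needed(electric_kwh: float, efficiency: float) -> float:
    """Return the fuel energy (kWh) required to deliver `electric_kwh` of electricity."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the interval (0, 1]")
    return electric_kwh / efficiency


# Efficiency figures quoted in the article.
FUEL_CELL_EFFICIENCY = 0.55
DIESEL_EFFICIENCY = 0.35

# Hypothetical scenario (not from the article): a 5 kW load running for 8 hours.
electric_kwh = 5 * 8

fuel_cell_input = fuel_energy_needed(electric_kwh, FUEL_CELL_EFFICIENCY)
diesel_input = fuel_energy_needed(electric_kwh, DIESEL_EFFICIENCY)

# Assumption: everything not converted to electricity ends up as heat,
# so this is an upper bound on the heat available for recovery.
fuel_cell_heat_upper_bound = fuel_cell_input - electric_kwh

# Relative reduction in fuel energy for the same electrical output.
fuel_energy_saving = 1 - fuel_cell_input / diesel_input

print(f"Fuel cell input energy: {fuel_cell_input:.1f} kWh")
print(f"Diesel input energy:    {diesel_input:.1f} kWh")
print(f"Heat upper bound:       {fuel_cell_heat_upper_bound:.1f} kWh")
print(f"Fuel energy saving:     {fuel_energy_saving:.0%}")
```

Under these assumptions, the fuel cell needs roughly a third less input energy than the diesel engine for the same electricity, and the remainder of its input is the heat that cogeneration would aim to capture.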

But finding new uses through intelligent integrations is not the only challenge facing H2sys. As a spin-off company from research laboratories, it must continue to drive innovation in the field. “With FCLAB, we were the first to work on diagnosing hydrogen fuel cell systems in the 2000s,” says Daniel Hissel. “Today, we are preparing the next move.” Their sights are now set on developing better methods for assessing the systems’ performance to improve quality assurance. By helping to make the technology safer, H2sys is heavily involved in the development of fuel cells. And the technology’s maturation since the early 2000s is now producing results: hydrogen is attracting the attention of manufacturers for the large-scale storage of renewable energies. Will this technology therefore truly be that of the new millennium, as foreseen by the pioneers of the Franche-Comté region in the late 90s? Without going that far, one thing is certain: it has earned its place in the energy mix of the future.

[box type=”shadow” align=”” class=”” width=””]

A guarantee of excellence in partnership-based research since 2006

Having first received the Carnot label in 2006, the Télécom & Société numérique Carnot institute is the first national “Information and Communication Science and Technology” Carnot institute. Home to over 2,000 researchers, it is focused on the technical, economic and social implications of the digital transition. In 2016, the Carnot label was renewed for the second consecutive time, demonstrating the quality of the innovations produced through the collaborations between researchers and companies.

The institute encompasses Télécom ParisTech, IMT Atlantique, Télécom SudParis, Institut Mines-Télécom Business School, Eurecom, Télécom Physique Strasbourg, Télécom Saint-Étienne, École Polytechnique (Lix and CMAP laboratories), Strate École de Design and Femto Engineering. [/box]