What is a lithium-ion battery?

The lithium-ion battery has been one of the great successes of recent decades in microelectronics. It is found in most of the devices we use every day, from mobile phones to electric cars. The 2019 Nobel Prize in Chemistry was awarded to John Goodenough, Stanley Whittingham, and Akira Yoshino for the pioneering research that led to its development. In this new episode of our “What’s?” series, Thierry Djenizian explains why this component has been so successful. Djenizian is a researcher in microelectronics at Mines Saint-Étienne, where he works on developing new generations of lithium-ion batteries.

Why is the lithium-ion battery so widely used?

Thierry Djenizian: It offers a very good balance between energy storage and power output. To understand this, imagine two containers: a glass and a large bottle with a narrow neck. The glass holds little water but can be emptied very quickly. The bottle holds a lot of water but empties more slowly. The electrons in a battery behave like the water in these containers. The glass is like a high-power battery with a low storage capacity, and the bottle like a low-power battery with a high storage capacity. Simply put, the lithium-ion battery is like a bottle with a wide neck: it holds a lot and can still be emptied quickly.
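
To make the analogy concrete, here is a toy Python model of the three containers. It is only a sketch: the `Container` type and every number in it are hypothetical, chosen so that capacity stands for stored energy and maximum flow stands for power output. The time to empty a container is simply its capacity divided by its maximum flow.

```python
from typing import NamedTuple


class Container(NamedTuple):
    """Toy stand-in for a battery in the water analogy (hypothetical units)."""
    name: str
    capacity: float  # amount of "water" held, i.e. stored energy
    max_flow: float  # fastest possible outflow, i.e. power output

    def time_to_empty(self) -> float:
        """Shortest time to drain the container when pouring at max_flow."""
        return self.capacity / self.max_flow


# Arbitrary illustrative values, not measurements of real batteries.
glass = Container("glass", capacity=1.0, max_flow=2.0)
narrow_bottle = Container("bottle, narrow neck", capacity=10.0, max_flow=0.5)
wide_bottle = Container("bottle, wide neck", capacity=10.0, max_flow=2.0)

if __name__ == "__main__":
    for c in (glass, narrow_bottle, wide_bottle):
        print(f"{c.name}: holds {c.capacity}, empties in {c.time_to_empty()} time units")
```

The wide-necked bottle stores as much as the narrow-necked one but pours at the same rate as the glass, which is the combination the interview attributes to lithium-ion batteries.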

How does a lithium-ion battery work?

TD: The battery consists of two electrodes separated by a liquid called the electrolyte. One of the two electrodes is an alloy containing lithium. When you connect a device to a charged battery, the lithium spontaneously oxidizes and releases electrons; lithium is the chemical element that gives up electrons most easily. The electrical current is produced by these electrons flowing from one electrode to the other through the external circuit, while the lithium ions produced by the oxidation migrate through the electrolyte and are inserted into the second electrode.

The lithium ions are stored in the second electrode until it has no space left or until the first electrode has released all its lithium atoms. The battery is then discharged. To recharge it, you simply apply a current to force the reverse chemical reactions, so that the ions migrate in the opposite direction and return to their original position. This is how lithium-ion technology works: lithium ions are reversibly inserted into and extracted from the electrodes, depending on whether the battery is charging or discharging.
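
The cycle described above is essentially bookkeeping: lithium leaves one electrode and is inserted into the other until one side runs out, then an applied current sends it back. The minimal Python sketch below tracks only that bookkeeping. It is a deliberately crude, hypothetical model (`CellModel` and its integer "units" of lithium are illustrative assumptions): it ignores voltage, reaction kinetics, and degradation, and assumes one electron flows through the external circuit for each lithium ion that crosses the electrolyte.

```python
from dataclasses import dataclass


@dataclass
class CellModel:
    """Hypothetical toy model: counts units of lithium held by each electrode."""
    in_first: int      # lithium held by the first (lithium-containing) electrode
    in_second: int     # lithium ions currently inserted in the second electrode
    second_sites: int  # total sites available in the second electrode

    def discharge(self) -> int:
        """Spontaneous reaction: lithium oxidizes and its ions move into the
        second electrode until the first is empty or the second is full.
        Returns the number of electrons sent through the external circuit."""
        free_sites = self.second_sites - self.in_second
        moved = min(self.in_first, free_sites)
        self.in_first -= moved
        self.in_second += moved
        return moved  # one electron per lithium ion extracted

    def charge(self) -> int:
        """Applied current forces the reverse reaction: the inserted ions
        return to the first electrode. Returns the number of ions moved back."""
        moved = self.in_second
        self.in_second = 0
        self.in_first += moved
        return moved


if __name__ == "__main__":
    cell = CellModel(in_first=100, in_second=0, second_sites=80)
    print(cell.discharge(), cell)  # second electrode fills up before the first empties
    print(cell.charge(), cell)     # ions return, so the cycle can repeat
```

With 100 units of lithium but only 80 free sites, discharge stops when the second electrode is full, which is the first of the two stopping conditions mentioned in the interview; `charge()` then restores the starting state, which is what makes the process reversible.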

What were the major milestones in the development of the lithium-ion battery?

TD: Whittingham discovered a high-potential material, titanium disulfide, capable of reacting reversibly with lithium. Goodenough then proposed using metal oxides instead, and Yoshino developed the first commercially viable lithium-ion battery, using a carbon-based material and a metal oxide as electrodes, which considerably reduced the size of the batteries.

What are the current scientific issues surrounding lithium-ion technology?

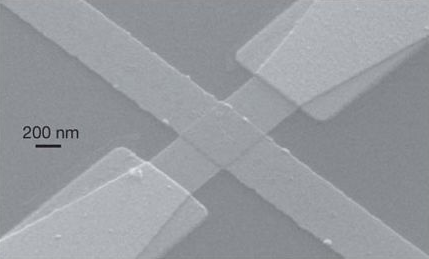

TD: One of the main trends is to replace the liquid electrolyte with a solid one. Flammable liquids are best avoided, and they can also leak, which is a particular concern in electronic devices: if the casing is pierced, the leak can irreversibly damage the surrounding components. This is especially true for sensors used in medical applications in contact with the skin. Recently, for example, we developed a connected contact lens with our colleagues from IMT Atlantique. The lithium-ion battery we used had a solid polymer-based electrolyte, because it would be unacceptable for the electrolyte to come into contact with the eye if something went wrong. Solid electrolytes are not new. What is new is the research to optimize them so that they meet the performance expected of lithium-ion batteries today.

Are we already working on replacing the lithium-ion battery?

TD: Another promising trend is to replace lithium with sodium. The two elements belong to the same family, the alkali metals, and have very similar properties. The difference is that lithium is mined at a very high environmental and social cost, and lithium resources are limited. Lithium-ion batteries can reduce our use of fossil fuels, but if extracting the lithium they need causes other environmental disasters, they lose much of their appeal. Sodium, on the other hand, is naturally present in sea salt. It is therefore a virtually unlimited resource that can be extracted with a considerably lower impact.

Can we already do better than the lithium-ion battery for certain applications?

TD: It’s hard to say. We have to change the way we think about our relationship to energy. We used to solve every problem with thermal energy, and we cannot apply the same thinking to electric batteries. For example, we currently use lithium-ion button cell batteries for the internal clocks of our computers. With such low energy consumption, a button cell can last several hundred years, while the computer will probably be replaced within ten years. A 1 mm² battery might be enough. The size of energy storage devices needs to be matched to our actual needs.
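
The point about sizing comes down to simple arithmetic: at a constant current draw, a cell lasts roughly its capacity divided by that current. The Python sketch below shows the calculation. The function names and any numbers fed to them are hypothetical, and the model ignores self-discharge and ageing, which in practice limit how long a real cell lasts, so its results should be read as upper bounds.

```python
HOURS_PER_YEAR = 24 * 365  # leap years ignored; fine for a rough estimate


def service_life_years(capacity_mah: float, draw_ma: float) -> float:
    """Years an ideal cell (no self-discharge) can supply a constant current."""
    return capacity_mah / draw_ma / HOURS_PER_YEAR


def capacity_needed_mah(draw_ma: float, years: float) -> float:
    """Capacity needed to supply a constant current for the given number of years."""
    return draw_ma * years * HOURS_PER_YEAR


def oversize_factor(capacity_mah: float, draw_ma: float, device_years: float) -> float:
    """How many times longer the cell could last than the device it powers."""
    return service_life_years(capacity_mah, draw_ma) / device_years


if __name__ == "__main__":
    # Hypothetical clock drawing 0.01 mA in a computer kept for 10 years.
    print(capacity_needed_mah(draw_ma=0.01, years=10))
```

For that hypothetical 0.01 mA load over ten years, the cell needs only about 876 mAh; any capacity beyond that is stored energy the device will never use, and `oversize_factor` expresses how large that mismatch is.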

Read on I’MTech: Towards a new generation of lithium batteries?

We also have to understand which characteristics we need. For some uses, a lithium-ion battery will be the most appropriate. For others, a battery with a greater storage capacity but a much lower output may be more suitable. For still others, it will be the opposite. When you use a drill, for example, drilling a hole doesn’t take four hours, and you don’t need a battery that stays charged for several days. You want a lot of power, but you don’t need much autonomy. “Doing better” than the lithium-ion battery perhaps simply means doing things differently.
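
One way to express "understanding the characteristics we need" is to state power and autonomy as two separate requirements and check candidate batteries against both. The sketch below does that in Python; `BatteryProfile`, `suitable`, the three profiles, and all their figures are invented for illustration and are not data for real products.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class BatteryProfile:
    name: str
    energy_wh: float    # stored energy: how long the device can run (autonomy)
    max_power_w: float  # highest output the battery can sustain


def suitable(options: List[BatteryProfile], power_w: float, energy_wh: float) -> List[str]:
    """Names of the profiles meeting both requirements, smallest energy store first,
    so the first entry is the one least oversized for the job."""
    fits = [o for o in options if o.max_power_w >= power_w and o.energy_wh >= energy_wh]
    return [o.name for o in sorted(fits, key=lambda o: o.energy_wh)]


# Invented profiles, for illustration only.
PROFILES = [
    BatteryProfile("high power, low storage", energy_wh=20, max_power_w=500),
    BatteryProfile("balanced", energy_wh=100, max_power_w=300),
    BatteryProfile("high storage, low power", energy_wh=200, max_power_w=50),
]

if __name__ == "__main__":
    # A drill: lots of power for a short time, little autonomy needed.
    print(suitable(PROFILES, power_w=400, energy_wh=10))
    # A low-power device that must run for a long time.
    print(suitable(PROFILES, power_w=1, energy_wh=150))
```

Treating power and energy as independent constraints is what lets the drill and the long-running device end up with different batteries, even though neither choice is "better" in the abstract.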

What does it mean to you to have a Nobel Prize awarded to a technology that is at the heart of your research?

TD: These are names we often cite in our scientific publications, because they are the pioneers of the technologies we work on. But beyond that, it is great to see a Nobel Prize awarded to research that means something to the general public. Everyone uses lithium-ion batteries every day, and people recognize the importance of this technology. It is nice to know that this Nobel Prize in Chemistry is understood by so many people.