From expertise in telecommunications networks to the performance of electricity grids

From networks to everyday objects, the internet has radically changed our environment. From the main arteries to the smallest vessels, it is now embedded in so many everyday objects that it puts a strain on the energy bill. Yet communicating objects can now exchange information to optimize their electricity consumption. After several years of research on the IPv6 protocol, Laurent Toutain and Alexander Pelov, researchers at Télécom Bretagne, are adapting this protocol to objects with a limited energy supply and to the smart grids now being built. Their work is part of a series of Institut Mines-Télécom projects on energy transition, focusing on the evolution, performance and compatibility of the energy networks of the future.

From the web to the Internet of Things: 20 years of protocol development

Over the past few years, the advent of smart transport and the Internet of Things has exposed the limits of the classical model of the internet. Mobility, the creation of spontaneous networks, energy constraints and security must all be taken into account. The number of devices eligible for an internet address has exceeded the addressing capacity of IP, the network’s fundamental protocol, in its historical version (IPv4). With IPv6, a version offering some 667 million billion possible IP addresses per mm² of the Earth’s surface, each component or sensor of an object can now have its own address and be queried. But IP was not designed for sensors located in the middle of nowhere, with limited processor, battery and memory resources and a low-speed connection. For such “LoWPANs” (Low-power Wireless Personal Area Networks), an adaptation of IPv6 has been created, 6LoWPAN, with an associated query protocol, CoAP (Constrained Application Protocol), which helps ensure continuity of communication between the traditional internet and the Internet of Things.
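The figure of 667 million billion addresses per square millimeter can be checked with a quick calculation. The sketch below assumes an Earth surface area of roughly 510 million km², a commonly cited approximation that is not given in the article, and divides the 2^128 addresses of the 128-bit IPv6 address space by that area.

```python
# Rough check of the IPv6 address density quoted above.
# Assumption (not from the article): Earth's surface is about 510 million km².
IPV6_ADDRESS_BITS = 128
EARTH_SURFACE_KM2 = 510_000_000
MM2_PER_KM2 = 10**12  # 1 km = 10**6 mm, so 1 km² = 10**12 mm²

total_addresses = 2**IPV6_ADDRESS_BITS
earth_surface_mm2 = EARTH_SURFACE_KM2 * MM2_PER_KM2
addresses_per_mm2 = total_addresses / earth_surface_mm2

print(f"{addresses_per_mm2:.3e} addresses per mm²")
```

Under this assumption the result is on the order of 6.7 × 10^17, consistent with the figure quoted in the text.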

“CoAP is a new way of structuring networks,” explains Laurent Toutain. “The interaction between existing networks and communicating objects can be established in two ways: either by improved integration of IP protocols, making the network more uniform, or by marginalization of IP within the network and a diversification of the protocols for access to things.” Confidentiality and security will be fundamental to the success of either architecture. The researcher and his team also use mathematical models and game theory, applying them to the fields of smart transport and energy management.
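To give a sense of how compact a CoAP exchange can be on a constrained link, here is a minimal sketch that encodes a confirmable GET request by hand, following the message layout of RFC 7252 (a 4-byte header followed by options). The function name, the `/sensors/temp` path and the message ID are hypothetical, and the sketch only handles token-less requests with path segments shorter than 13 bytes. It is an illustration, not the researchers’ implementation.

```python
import struct

COAP_VERSION = 1
TYPE_CONFIRMABLE = 0
CODE_GET = 0x01        # method code 0.01 (GET)
OPTION_URI_PATH = 11   # Uri-Path option number


def encode_coap_get(path: str, message_id: int) -> bytes:
    """Encode a token-less confirmable CoAP GET for `path` (hypothetical helper)."""
    token_length = 0
    # First byte: version (2 bits), type (2 bits), token length (4 bits).
    first_byte = (COAP_VERSION << 6) | (TYPE_CONFIRMABLE << 4) | token_length
    message = struct.pack("!BBH", first_byte, CODE_GET, message_id)

    previous_option = 0
    for segment in filter(None, path.split("/")):
        value = segment.encode("utf-8")
        # Options are delta-encoded relative to the previous option number.
        delta = OPTION_URI_PATH - previous_option
        if delta >= 13 or len(value) >= 13:
            raise ValueError("extended option encoding is not handled in this sketch")
        message += bytes([(delta << 4) | len(value)]) + value
        previous_option = OPTION_URI_PATH
    return message


request = encode_coap_get("/sensors/temp", message_id=0x1234)
print(len(request), request.hex())
```

In this example the whole request fits in 17 bytes, which is the kind of economy that matters for nodes with little memory, energy and bandwidth.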

Transmitting data in local energy loops

Over the past few years, several French regions that produce considerably less electricity than they consume have sought to mobilize their territory around concerted, energy-efficient behavior. Alexander Pelov observes that “this is the case of the poorly supplied Provence-Alpes-Côte d’Azur region, which is becoming the leader in smart grids”, that is, electricity networks whose links are optimized to improve overall energy efficiency. Brittany and its partners have also been working for several years on controlling electricity demand, developing energy production from renewable sources and securing the electricity supply. In 2012 the region issued an initial call for projects on the “local energy loop”.

One of the objectives of electricity suppliers today is to be able to exchange data over the electricity network, “a network that was never designed to transport them”, emphasizes Laurent Toutain. This network will use a low-speed, 250 kb/s configuration similar to a LoWPAN, with the same constraints as the Internet of Things. Laurent Toutain’s team has built a simulator to model the behavior of such networks precisely. The simulator makes it possible to redefine routing algorithms and to study new application behavior. “We try to adapt to the existing infrastructure: we must use it so we can adapt to all forms of traffic”, and also improve the network’s performance to broaden its uses. This is a major challenge because the electricity network must, for example, communicate with vehicles and negotiate priority when an ambulance is involved, as well as supplying energy and transferring it from one place to another. “Without prior knowledge of telecoms networks, none of that is possible”, explains the researcher.
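A back-of-the-envelope calculation illustrates why header size matters at such rates. The sketch below takes the 250 kb/s figure quoted above and compares the time needed to send a full 40-byte IPv6 header with that of a 2-byte compressed header. The 2-byte value is an optimistic assumption chosen for illustration, and the calculation ignores framing, contention and retransmissions.

```python
LINK_RATE_BPS = 250_000      # 250 kb/s, the rate quoted in the article
IPV6_HEADER_BYTES = 40       # fixed size of an uncompressed IPv6 header
COMPRESSED_HEADER_BYTES = 2  # assumption: optimistic compressed header size


def serialization_time_ms(size_bytes: int, rate_bps: int = LINK_RATE_BPS) -> float:
    """Time needed to put `size_bytes` on the wire, in milliseconds."""
    return size_bytes * 8 / rate_bps * 1000


full_ms = serialization_time_ms(IPV6_HEADER_BYTES)
compressed_ms = serialization_time_ms(COMPRESSED_HEADER_BYTES)
print(f"uncompressed: {full_ms:.3f} ms, compressed: {compressed_ms:.3f} ms")
```

Per packet the difference is small, but it is paid on every message, by every node sharing the same low-speed medium.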

[box type=”shadow” align=”” class=”” width=””]

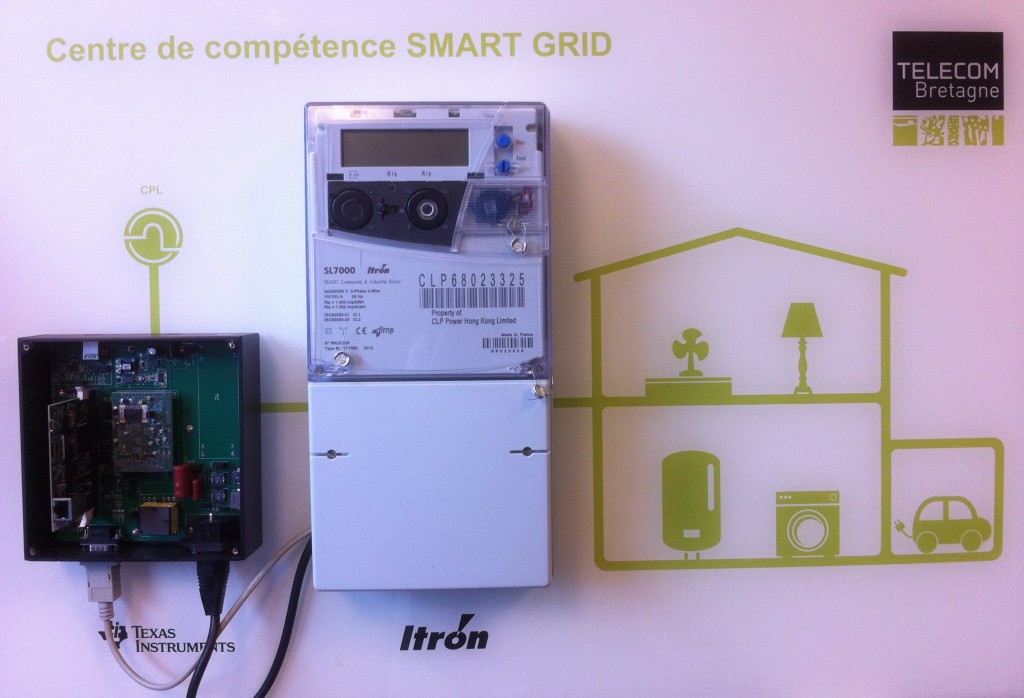

A smart grid skills center on the Rennes campus of Télécom Bretagne

The fruit of a partnership with the Itron group, a specialist in the development of metering solutions, and Texas Instruments, an expert in the field of semiconductors, this research center for power line communication technology, inaugurated in November 2013, creates innovative solutions for electricity suppliers (technical upgrading of networks, smart metering, etc.) and serves the French smart grids industry with the expertise of its researchers and engineers.[/box]

Giving consumers a more active role in consumption

While better energy management can be achieved by the supplier, consumers must also play their part. Rennes is a pioneer in thinking about the Digital City, a smart, sustainable and creative city built on the openness of public data, and has in this context issued a call for projects on energy and transport policies. Currently developing the ÉcoCité ViaSilva district, Rennes is encouraging inhabitants to restrict their energy usage and has committed to an Open Energy Data program.

Based on the observation that “we cannot double the existing infrastructure in order to transmit data”, the team of researchers based in Rennes is working on systems that give people a more active role in their consumption. It has been observed that simply showing users their consumption levels encourages them to adopt better habits and leads to savings of between 5 and 10%. “The idea is to make it fun, to imagine the ‘Foursquare’ of energy”, explain Laurent Toutain and Alexander Pelov, referring to the location-based mobile application whose most active users win badges. Another aspect is the visual representation of user behavior, which the team is working on with the École Européenne Supérieure d’Art de Bretagne, in digital laboratories (FabLabs) in Brittany. “Ultimately”, the researchers continue with a smile, “it’s like doing quantified-self at home”. This well-known concept of “self-quantification” ties in with the notion of the “consum’actor” studied by sociologists, which is proving significant at this time of energy transition.

[box type=”shadow” align=”” class=”” width=””]

Research fostering spin-offs

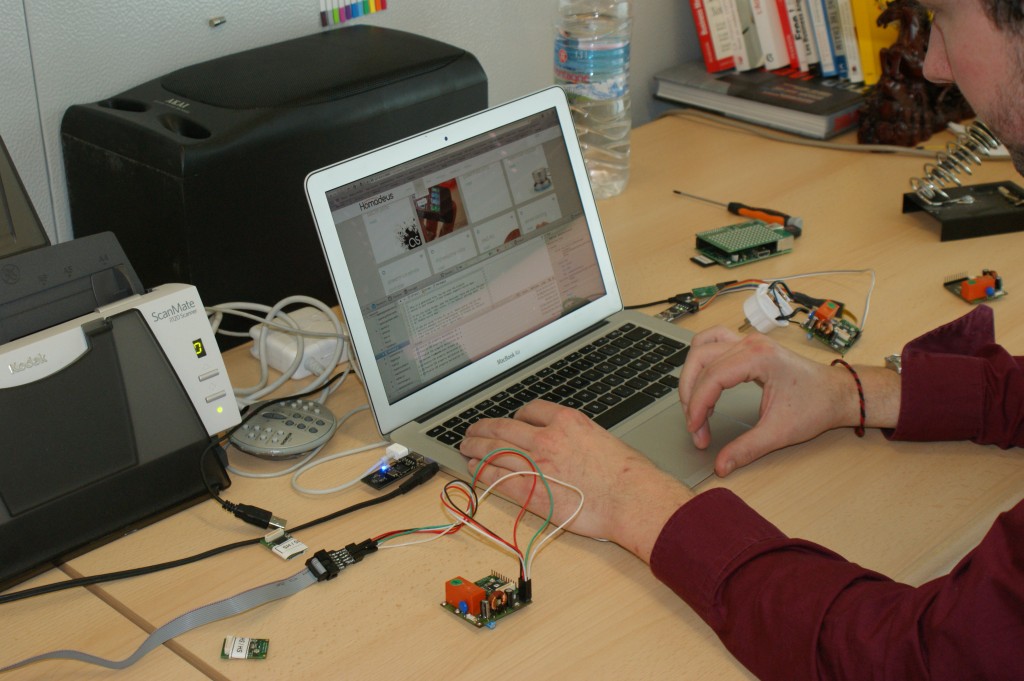

“It’s extremely rewarding to work on a societal issue like energy”, enthuses Alexander Pelov. Numerous collaborations with start-ups such as Cityzen Data, companies such as Deltadore, Kerlink and Médria, and the FabLabs bear witness to this passion. The start-up Homadeus, currently in the Télécom Bretagne incubator, offers both “open energy data” hardware and the web and mobile interfaces to drive it.[/box]

| Laurent Toutain and Alexander Pelov are both researchers in the Networks, Security and Multimedia Services department of Télécom Bretagne. A recognized expert in IP networks, and in particular in quality of service, metrology, routing protocols and IPv6, Laurent is currently looking at new architectures and services for domestic networks with a focus on industry and technology rather than research. After studies in Bulgaria and a thesis at the University of Strasbourg in 2009, Alexander joined Télécom Bretagne in 2010 to work on energy efficiency in wireless networks and the use of smart grids in the context of smart metering and electric vehicles. |

Written by: Nereÿs
