Ontologies: powerful decision-making support tools

Searching for, extracting, analyzing, and sharing information in order to make the right decision requires great skill. For machines to provide human operators with valuable assistance in these highly cognitive tasks, they must be equipped with “knowledge” about the world. At Mines Alès, Sylvie Ranwez has been developing innovative processing solutions based on ontologies for many years now.

How can we find our way through the labyrinth of the internet with its overwhelming and sometimes contradictory information? And how can we trust extracted information that is then used as the basis for reasoning in decision-making processes? For a long time, keyword search was thought to be the best solution, but to cope with the abundance and heterogeneity of information, current search methods increasingly rely on models based on domain ontologies. Since 2012, Sylvie Ranwez has been building on this idea through research carried out at Mines Alès, in the KID team (Knowledge representation and Image analysis for Decision). This team strives to develop models, methods, and techniques to assist human operators who must master a complex system, whether technical, social, or economic, particularly within a decision-making context. Sylvie Ranwez’s research is devoted to using ontologies to support interaction and personalization in such settings.

In philosophy, ontology is the study of being as such, as well as its general characteristics. In computer science, an ontology describes the set of concepts, along with their properties and interrelationships, within a particular field of knowledge in such a way that they may be analyzed by humans as well as by computers. “Though the problem goes back much further, the name ontology started being used in the 90s,” notes Sylvie Ranwez. “Today many fields have their own ontology.” Building an ontology starts with help from experts in a field who know all the entities that characterize it, as well as their links. This requires meetings, interviews, and some back-and-forth in order to best understand the field concerned. The concepts are then integrated into a coherent set and coded.

More efficient queries

This knowledge can then be integrated into different processes, such as resource indexing and searching for information. This leads to queries with richer results than when using the keyword method. For example, the PubMed database, which lists all international biomedical publications, relies on MeSH (Medical Subject Headings), making it possible to index all biomedical publications and facilitate queries.
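The difference between a keyword query and an ontology-based query can be illustrated with a minimal sketch. It assumes a tiny hand-made concept hierarchy and four invented documents; the concept names, the `PARENT` and `INDEX` tables, and the function names are all hypothetical and are not taken from MeSH or PubMed.

```python
# Hypothetical mini-hierarchy: child concept -> parent concept.
PARENT = {
    "melanoma": "skin neoplasm",
    "skin neoplasm": "neoplasm",
    "carcinoma": "neoplasm",
    "neoplasm": "disease",
}

# Hypothetical documents, each indexed with concepts from the hierarchy.
INDEX = {
    "doc1": {"melanoma"},
    "doc2": {"skin neoplasm"},
    "doc3": {"carcinoma"},
    "doc4": {"disease"},
}


def descendants(concept):
    """Return the concept together with every concept below it."""
    found = {concept}
    changed = True
    while changed:
        changed = False
        for child, parent in PARENT.items():
            if parent in found and child not in found:
                found.add(child)
                changed = True
    return found


def keyword_search(concept):
    """Match only documents tagged with the exact term."""
    return sorted(doc for doc, tags in INDEX.items() if concept in tags)


def ontology_search(concept):
    """Match documents tagged with the term or any more specific concept."""
    wanted = descendants(concept)
    return sorted(doc for doc, tags in INDEX.items() if tags & wanted)


print(keyword_search("neoplasm"))   # no document carries the exact tag
print(ontology_search("neoplasm"))  # documents on more specific concepts match
```

In this toy setting, the exact-term query for "neoplasm" finds nothing, while the ontology-based query also returns the documents indexed with more specific concepts.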

In general, the building of an ontology begins with an initial version containing between 500 and 3,000 concepts and it expands through user feedback. The Gene Ontology, which is used by biologists from around the world to identify and annotate genes, currently contains over 30,000 concepts and is still growing. “It isn’t enough to simply add concepts,” warns Sylvie Ranwez, adding: “You have to make sure an addition does not modify the whole.”

[box type=”shadow” align=”” class=”” width=””]

Harmonizing disciplines

Among the studies carried out by Sylvie Ranwez, ToxNuc-E (nuclear and environmental toxicology) brought together biologists, chemists and physicists from the CEA, INSERM, INRA and CNRS. But the definition of certain terms differs according to the discipline and, conversely, the same concept may go by two different names. The ToxNuc-E group called upon Sylvie Ranwez and Mines Alès to describe the study topic, but also to help these researchers from different disciplines share common values. The ontology of this field is now online and is used to index the project’s scientific documents. Specialists from fields that have ontologies often point to their great contribution to harmonizing their discipline. Once an ontology exists, different processing methods become possible, often based on measurements of semantic similarity (the topic of Sébastien Harispe’s PhD, which led to the publication of a work in English). These range from resource indexing to searching for information and classification (work by Nicolas Fiorini, during his PhD supervised by Sylvie Ranwez). [/box]
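To give an idea of what a semantic similarity measure computes, here is a minimal sketch of one classical measure, that of Wu and Palmer, which compares the depth of the most specific common ancestor of two concepts with their own depths. It is shown only as an illustration and is not claimed to be the measure used in the work cited above; the concept names and the `PARENT` table are invented and do not come from the ToxNuc-E ontology.

```python
# Hypothetical concept tree: concept -> parent (None marks the root).
PARENT = {
    "toxicology": None,
    "nuclear toxicology": "toxicology",
    "environmental toxicology": "toxicology",
    "radionuclide uptake": "nuclear toxicology",
    "radionuclide excretion": "nuclear toxicology",
}


def ancestors(concept):
    """Path from the concept up to the root, both included, nearest first."""
    path = []
    while concept is not None:
        path.append(concept)
        concept = PARENT[concept]
    return path


def depth(concept):
    """Depth in the tree, with the root at depth 1."""
    return len(ancestors(concept))


def common_ancestor(a, b):
    """Most specific concept that lies above (or equals) both a and b."""
    above_b = set(ancestors(b))
    for concept in ancestors(a):
        if concept in above_b:
            return concept
    raise ValueError("concepts do not share a root")


def wu_palmer(a, b):
    """Similarity in (0, 1]: 1 for identical concepts, lower when they diverge early."""
    return 2 * depth(common_ancestor(a, b)) / (depth(a) + depth(b))


print(wu_palmer("radionuclide uptake", "radionuclide excretion"))
print(wu_palmer("radionuclide uptake", "environmental toxicology"))
```

Two concepts that share a deep common ancestor score higher than two concepts that only meet at the root, which is the property that indexing and classification methods can exploit.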

Specific or generic ontologies

The first ontology Sylvie Ranwez tackled, while working on her PhD at the Laboratory of Computer and Production Engineering (LGI2P) at Mines Alès, concerned music, a field with which she is very familiar since she is an amateur singer. Well before the arrival of MOOCs, the goal was to model both the field of music and teaching methods in order to offer personalized distance learning courses about music. She then took up work on football, at the urging of her PhD supervisor, Michel Crampes. “Right in the middle of the World Cup, the goal was to be able to automatically generate personalized summaries of games,” she remembers. She went on to work on other subjects with private companies or research institutes like the CEA (French Atomic Energy Commission). Another focus of Sylvie Ranwez’s research is ontology learning, which would make it possible to build ontologies automatically by analyzing texts. However, it is very difficult to turn words into concepts because of the inherent ambiguity of language. Human beings are still essential.

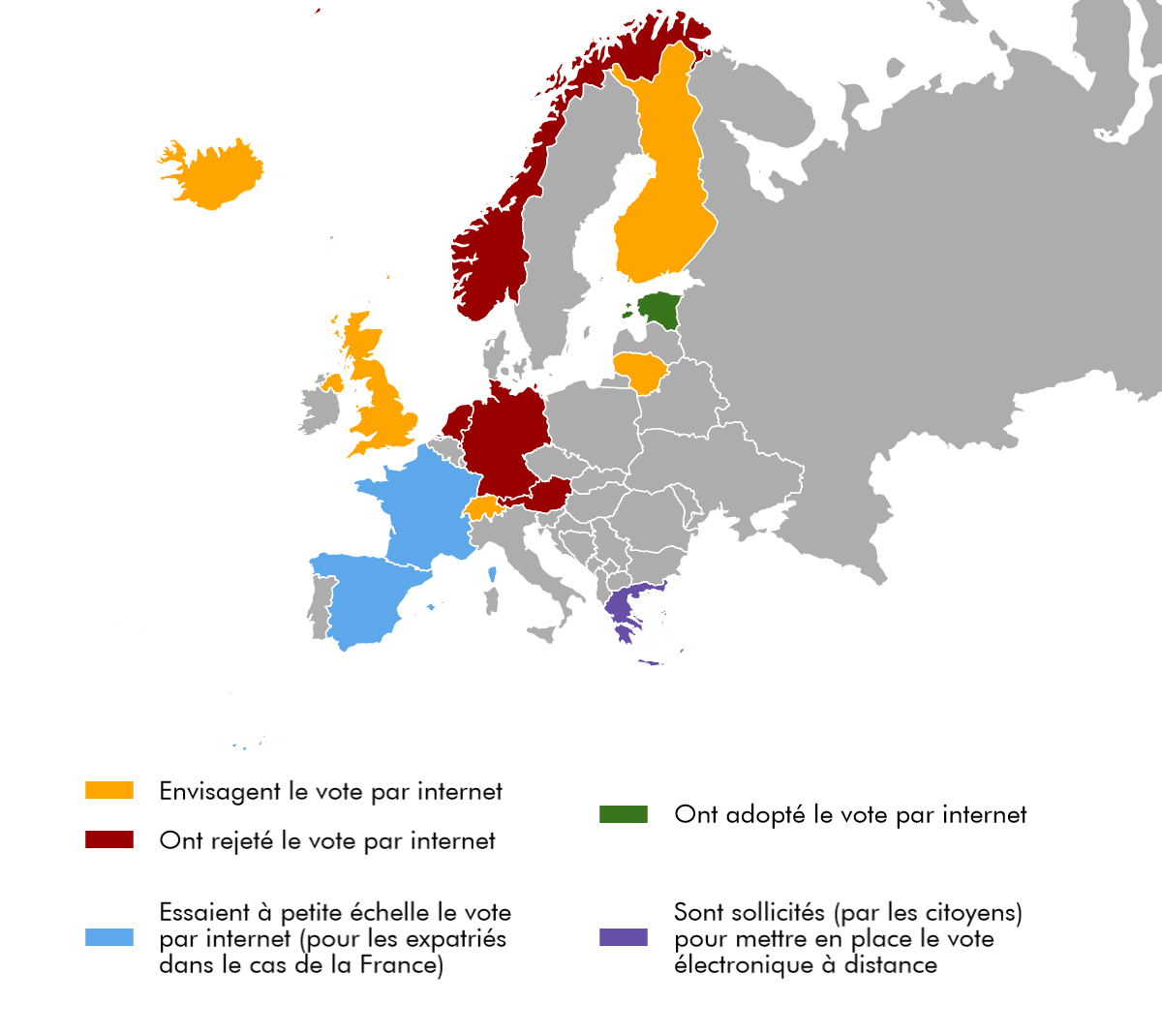

Developing an ontology for every field and for different types of applications is a costly and time-consuming process, since it requires many experts and assumes they can reach a consensus. Research has therefore turned to what are known as “generic” ontologies. Today DBpedia, which was created in Germany using knowledge from Wikipedia, covers many fields and is based on such an ontology. During a web search, this kind of knowledge is what makes generic information on the requested subject appear in the upper right corner of the results page. For example, “Pablo Ruiz Picasso, born in Malaga, Spain on 25 October 1881 and deceased on 8 April 1973 in Mougins, France. A Spanish painter, graphic artist and sculptor who spent most of his life in France.”

Measuring accuracy

This multifaceted information, spread out over the internet, is not without its problems: its reliability can be questioned. Sylvie Ranwez is currently working on this problem. In a semantic web context, data is open and different sources may at times assert contradictory information. How then is it possible to detect true facts among this data? The usual statistical approach (where the majority is right) is biased: simply spamming false information can give it the majority. With ontologies, information is confirmed by the entire set of concepts, which are interlinked, making false information easier to detect. Similarly, an issue addressed by Sylvie Ranwez’s team concerns the detection and management of uncertainty. For example, one site claims that a certain medicine cures a certain disease, whereas a different site states instead that it “might” cure this disease. Yet in a decision-making setting, it is essential to be able to detect the uncertainty of information and to measure it. We are only beginning to tap into the full potential of ontologies for extracting, searching for, and analyzing information.
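The weakness of plain majority voting, and the way interlinked concepts can help, can be sketched with a toy example. This is only an illustration under strong assumptions and not the team's method: the claims about Picasso's birthplace are invented, the `BROADER` links are a hypothetical two-entry hierarchy, and the scoring rule (count every claim that is compatible with a value, then prefer the most specific value) is a deliberate simplification.

```python
from collections import Counter

# Hypothetical "is located in" links: place -> broader place.
BROADER = {"Malaga": "Spain", "Paris": "France"}


def generalizations(value):
    """Return the value followed by every broader concept above it."""
    chain = [value]
    while chain[-1] in BROADER:
        chain.append(BROADER[chain[-1]])
    return chain


def compatible(a, b):
    """Two claims are compatible when one of them generalizes the other."""
    return a in generalizations(b) or b in generalizations(a)


def majority_vote(claims):
    """Pick the value asserted most often, ignoring how values relate."""
    return Counter(claims).most_common(1)[0][0]


def ontology_vote(claims):
    """Pick the value backed by the most compatible claims, most specific first."""
    def score(value):
        support = sum(1 for claim in claims if compatible(claim, value))
        return (support, len(generalizations(value)))
    return max(set(claims), key=score)


# Invented claims: two sources say Malaga, two say Spain, three repeat "Paris".
claims = ["Malaga", "Malaga", "Spain", "Spain", "Paris", "Paris", "Paris"]
print(majority_vote(claims))  # the repeated false value wins the raw count
print(ontology_vote(claims))  # "Malaga" and "Spain" reinforce each other
```

The sketch does not make voting immune to spam, since enough repeated false claims would still win. It only shows how claims that partially agree, such as a city and its country, can reinforce one another once the links between concepts are taken into account.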

An original background

Sylvie Ranwez came to research through a roundabout route. After completing her Baccalauréat (French high school diploma) in science, she earned two different university degrees in technology (DUT). The first, a degree in physical measurements, allowed her to discover a range of disciplines including chemistry, optics, and computer science. She then went on to earn a second degree in computer science before enrolling at the EERIÉ engineering graduate school (School of Computer Science and Electronics Research and Studies) in the artificial intelligence specialization. Alongside her third year in engineering, she also earned a post-graduate advanced diploma in computer science. She followed up with a PhD at LGI2P of Mines Alès, spending the first year in Germany at the Digital Equipment laboratory in Karlsruhe. In 2001, just after earning her PhD, and without going through the traditional post-doctoral research stint abroad, she joined LGI2P’s KID team, where she has been accredited to direct research since 2013. Given the highly technological world she works in, she has all the makings of a geek. But don’t be fooled: she doesn’t have a cell phone. And she doesn’t want one.