TeraLab, a big data platform with a European vision

TeraLab, an IMT platform aimed at accelerating big data projects by uniting researchers and companies, has held the “Silver i-Space” quality label since December 1st, 2016. This label, awarded by the Big Data Value Association, is a guarantee of the quality of the services the platform provides, both at the technical and legal levels. The label testifies to TeraLab’s relevance in the European big data innovation ecosystem and the platform’s ability to offer cutting-edge solutions. Anne-Sophie Taillandier, the platform’s director, tells us about the reasons for this success and TeraLab’s future projects.

What does the “Silver i-Space” label, awarded by the Big Data Value Association (BDVA) on December 1st, mean for you?

Anne-Sophie Taillandier: This is an important award, because it is a Europe-wide reference. The BDVA is an authoritative body, because it ensures the smooth organization of the public-private partnership on big data established by the European Commission. This label therefore has an impact on our ability to work at the continental level. DG Connect, the branch of the Commission in charge of rolling out a digital single market in Europe, pays particular attention to this: for example, it prefers that H2020 research projects use pre-existing platforms. Therefore, this label provides better visibility within the international innovation ecosystem.

In addition to TeraLab, three other platforms have been awarded this label. Does this put you in competition with the other platforms at the European level?

AST: The i-Spaces are complementary, not competitive. With TeraLab, we insist on creating a neutral zone: it is a breath of fresh air in the life cycle of a project, so that people, both researchers and companies, can test things peacefully. The complementarity that exists between the platforms enables us to combine our energies. For example, one of the other recipients of the Silver i-Space label in December was SDIL, which is based in Germany and is more focused on industrial solutions. The people who contact SDIL have already made an industrial choice. The stakeholders who contact TeraLab have not yet made a choice, and want to explore the potential value of their data.

How do you explain this recognition by the BDVA?

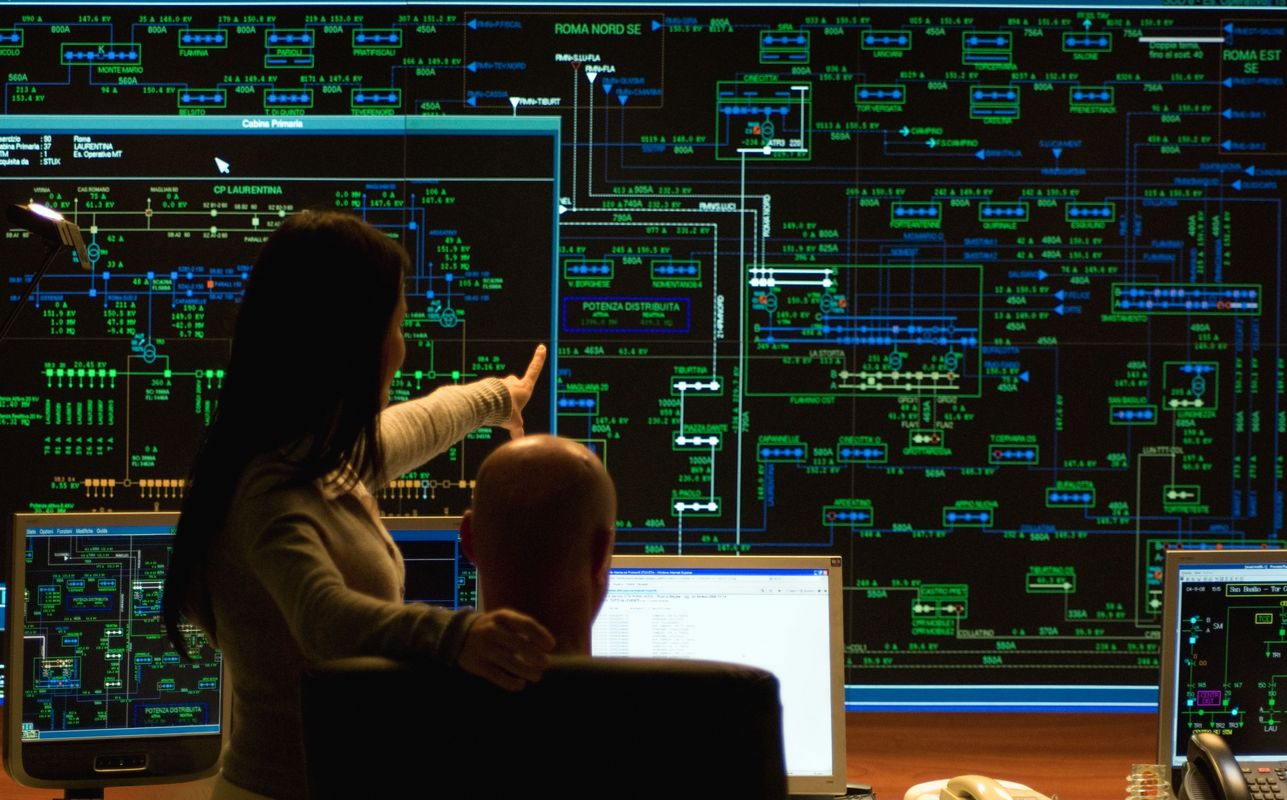

AST: TeraLab has always sought to be cutting-edge. We offer a platform that is equipped with the latest tools. Since the fourth quarter of 2016, for example, the platform has been equipped with GPUs: processors initially designed for computing graphics, but that are now also used for deep learning applications. The computing optimization they provide makes the training of algorithms fifteen times faster. We can therefore make much more powerful machines available to researchers working in the area of deep learning. Until now, the infrastructure did not allow for this. Generally speaking, if we feel that a project needs specific equipment, we look at whether we can introduce it, and whether it makes sense to do that. This constant updating is also one of the tasks set as part of the Investments for the Future program (PIA) by the Directorate-General for Enterprise.

Is it primarily the platform’s technical excellence that has been recognized?

AST: That’s not all. TeraLab is an infrastructure, but also an ecosystem and a set of services. We assist our partners so that they can make their data available. We also have quality requirements regarding legal aspects. For example, we are working towards obtaining authorization from the Shared Healthcare Information Systems Agency (ASIP) to allow us to store personal health data. From a technical perspective, we have all we need to store this data and work with it. But we need to meet the legal and administrative requirements in order to do this, such as meeting the ISO 27001 standard. We must therefore provide guarantees about the way we receive data from companies, establish contracts, etc.

Have the upstream aspects, prior to data processing, also been the focus of innovations?

AST: Yes, because we must constantly ensure consent regarding the data. Our close relationship with IMT researchers is an asset in accomplishing this. Therefore, we have projects on data watermarking, cryptographic segmentation, and the blockchain. Data analysis and extracting value from this data can only be carried out once the entire upstream process is completed. The choice of tools for ensuring privacy is therefore essential, and we must constantly ensure that we remain cutting-edge in terms of security as well.

How are these quality criteria then reflected in the business aspect?

AST: Today, TeraLab is involved in projects that are related to many different themes. We talked about the blockchain, but I could also mention the industry of the future, energy, tourism, health care, insurance, open source issues, interoperability… And, more importantly, since 2016, TeraLab has achieved financial equilibrium: the revenue from projects offsets the operating costs. This is far from trivial, since it means that the platform is sustainable. TeraLab will therefore continue to exist after 2018, the completion date for the PIA that initiated the platform.

What are TeraLab’s main objectives for the coming year?

AST: First of all, to strengthen this equilibrium, and continue to ensure TeraLab’s sustainability. To accomplish this, we are currently working on big data projects at the European level to respond to calls for proposals from the European Commission via the H2020 program. Then, once we have obtained the authorization from the ASIP for storing personal health data, we plan to launch health research projects, especially in the area of “P4” medicine: personalized, preventive, predictive, and participatory.

The TSN Carnot institute, a guarantee of excellence in partnership-based research since 2006