Towards a new generation of lithium batteries?

The development of connected devices requires the miniaturization and integration of electronic components. Thierry Djenizian, a researcher at Mines Saint-Étienne, is working on new micrometric architectures for lithium batteries, which appear to be a very promising solution for powering smart devices. Following his talk at the IMT symposium devoted to energy in the context of the digital transition, he gives us an overview of his work and the challenges involved in his research.

Why is it necessary to develop a new generation of batteries?

Thierry Djenizian: First of all, it’s a matter of miniaturization. Since the 1970s, Moore’s law, which predicts that the performance of microelectronic devices will increase as they are miniaturized, has held true. In the meantime, however, energy storage has not really kept pace. We are now facing a problem: we can manufacture very sophisticated sub-micrometric components, but the energy sources needed to power them are not integrated into the circuits because they take up too much space. We are therefore trying to design micro-batteries that can be integrated into circuits like any other technological building block. They are eagerly awaited for the development of connected devices, including many wearable applications (smart textiles, for example), medical devices, etc.

What difficulties have you encountered in miniaturizing these batteries?

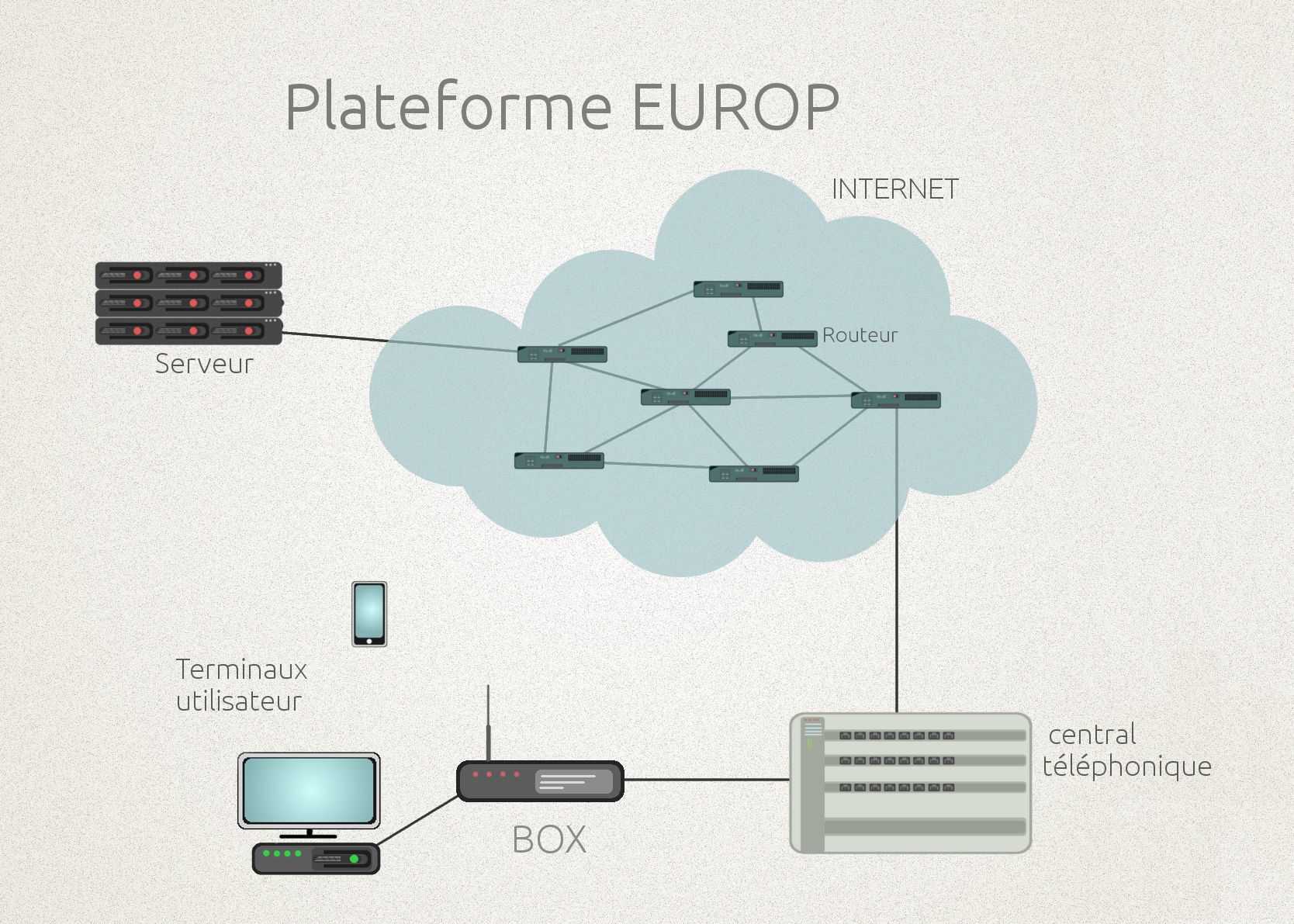

TD: A battery is composed of three elements: two electrodes and an electrolyte separating them. In micro-batteries, storage performance is essentially determined by the contact surface between the electrodes and the electrolyte: the larger the surface, the better the performance. But as batteries shrink, and with them the electrodes and the electrolyte, there comes a point where the contact surface becomes too small and battery performance drops.
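The scaling problem can be made concrete with a minimal sketch. It assumes, as a simplification, a square planar cell whose electrode/electrolyte interface is exactly its footprint, and performance proportional to that interface area; the function names and the 1 mm reference cell are hypothetical choices for illustration. Under these assumptions, dividing the side length by 10 divides the contact area, and therefore the estimated performance, by 100.

```python
def planar_interface_area_um2(side_um: float) -> float:
    """Electrode/electrolyte contact area of a square 2D thin-film cell, in µm²."""
    # In a flat stack, the interface is simply the footprint of the cell.
    return side_um ** 2


def relative_performance(side_um: float, reference_side_um: float) -> float:
    """Performance relative to a reference cell.

    Simplifying assumption: performance scales linearly with contact area.
    """
    return planar_interface_area_um2(side_um) / planar_interface_area_um2(reference_side_um)


if __name__ == "__main__":
    reference = 1000.0  # hypothetical 1 mm x 1 mm cell, expressed in µm
    for side in (1000.0, 100.0, 10.0):
        print(
            f"side {side:7.1f} µm -> area {planar_interface_area_um2(side):10.1f} µm², "
            f"relative performance {relative_performance(side, reference):.4f}"
        )
```

In a flat geometry, area and footprint are locked together, which is exactly the constraint the 3D architectures discussed next try to break.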

How do you go beyond this critical size without compromising performance?

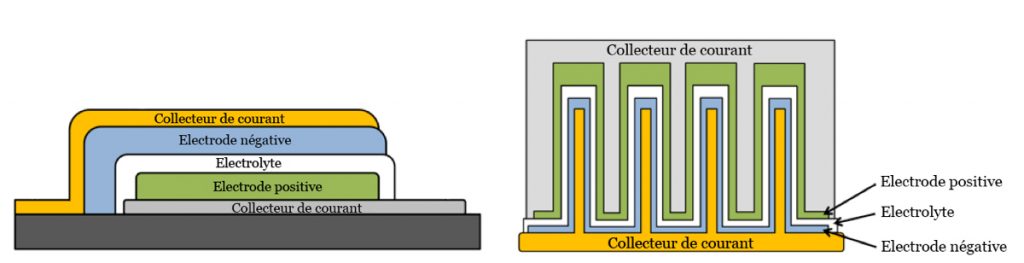

TD: One solution is to move from a 2D geometry, in which the two electrodes are thin layers separated by a third, thin electrolyte layer, to a 3D structure. By using an architecture made of columns or tubes smaller than a micrometer and coated with the three components of the battery, we can significantly increase the contact surface (see illustration). We are currently able to produce this type of structure at the micrometric scale, and we are working on reaching the nanometric scale by using titanium nanotubes.

On the left, a battery with a 2D structure. On the right, a battery with a 3D structure: the contact surface between the electrodes and the electrolyte has been significantly increased.
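The surface gain from a 3D architecture can be estimated with simple geometry. The sketch below is a hypothetical model, not the team’s actual design: it assumes a square array of solid cylindrical pillars of radius r, height h and center-to-center pitch p, and ignores the thickness of the coatings. Each unit cell has a footprint of p², which is all a flat cell offers; a pillar keeps that projected area and adds its side wall, 2πrh, so the gain is 1 + 2πrh/p². Hollow tubes whose inner walls are also coated would gain more.

```python
import math


def pillar_area_gain(radius_um: float, height_um: float, pitch_um: float) -> float:
    """Ratio of 3D to 2D contact area for a square array of cylindrical pillars.

    Illustrative model (an assumption, not a measured design): each unit cell
    has a footprint of pitch**2. A flat cell offers exactly that area. A pillar
    keeps the same projected area (pillar top + floor between pillars) and
    adds its side wall, 2*pi*r*h.
    """
    if radius_um <= 0 or pitch_um <= 0 or height_um < 0:
        raise ValueError("radius and pitch must be positive, height non-negative")
    if 2 * radius_um >= pitch_um:
        raise ValueError("pillars would touch or overlap: need 2*radius < pitch")
    footprint = pitch_um ** 2
    sidewall = 2 * math.pi * radius_um * height_um
    return (footprint + sidewall) / footprint


if __name__ == "__main__":
    # Hypothetical dimensions: 0.5 µm diameter pillars, 10 µm tall, 1 µm pitch.
    print(f"{pillar_area_gain(0.25, 10.0, 1.0):.1f}")  # prints 16.7
```

The gain grows linearly with pillar height and inversely with the square of the pitch, which is why tall, densely packed, sub-micrometric structures are attractive.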

How do these new battery prototypes based on titanium nanotubes work?

TD: Let’s take the example of a discharged battery. One of the electrodes is composed of lithium, nickel, manganese and oxygen. When you charge this battery, by plugging it in for example, the additional electrons trigger an electrochemical reaction that releases lithium from this electrode in the form of ions. The lithium ions migrate through the electrolyte and insert themselves into the nanotubes that make up the other electrode. When all the nanotube sites that can hold lithium have been filled, the battery is charged. During discharge, a spontaneous electrochemical reaction releases the lithium ions from the nanotubes back toward the nickel-manganese-oxygen electrode, thereby generating the desired current.
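The charge/discharge cycle described above boils down to bookkeeping of lithium between the two electrodes. The toy model below is a hypothetical illustration, not a simulation of the actual prototype: it counts ions as whole units, gives the nanotube electrode a fixed number of host sites, and ignores kinetics, voltage and side reactions. `ToyCell` and its fields are names introduced only for this sketch.

```python
from dataclasses import dataclass


@dataclass
class ToyCell:
    """Bookkeeping model of lithium shuttling between the two electrodes.

    Hypothetical simplification: every ion that leaves one electrode ends up
    in the other, and the nanotube electrode has a fixed number of host sites.
    """
    nanotube_sites: int       # lithium sites available in the nanotube electrode
    li_in_oxide: int          # ions held by the lithium-nickel-manganese-oxygen electrode
    li_in_nanotubes: int = 0  # a discharged cell starts with empty nanotubes

    def __post_init__(self) -> None:
        if self.nanotube_sites <= 0:
            raise ValueError("nanotube_sites must be positive")

    def charge(self, ions: int) -> int:
        """Move up to `ions` lithium ions into the nanotubes; return how many moved."""
        free_sites = self.nanotube_sites - self.li_in_nanotubes
        moved = min(ions, free_sites, self.li_in_oxide)
        self.li_in_oxide -= moved
        self.li_in_nanotubes += moved
        return moved

    def discharge(self, ions: int) -> int:
        """Release up to `ions` ions back to the oxide electrode.

        Each ion transferred corresponds to one electron flowing through
        the external circuit, which is the useful current.
        """
        moved = min(ions, self.li_in_nanotubes)
        self.li_in_nanotubes -= moved
        self.li_in_oxide += moved
        return moved

    @property
    def state_of_charge(self) -> float:
        """Fraction of nanotube sites occupied (1.0 means fully charged)."""
        return self.li_in_nanotubes / self.nanotube_sites
```

For example, `ToyCell(nanotube_sites=100, li_in_oxide=150)` accepts 80 ions on a first `charge(80)`, then only 20 more on a second call because its sites are full (state of charge 1.0); `discharge(30)` then brings the state of charge back to 0.7.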

What is the lifetime of these batteries?

TD: When a battery is working, major structural changes take place: the materials swell and shrink due to the reversible insertion of lithium ions. And I’m not talking about small variations in size: an electrode can become eight times larger in the case of 2D batteries that use silicon! Nanotubes help limit this phenomenon and therefore prolong the lifetime of these batteries. One consequence of this swelling is that the contact interfaces between the electrodes and the electrolyte are damaged. This is why we are also carrying out research on electrolytes based on self-repairing polymers: with an electrolyte that repairs itself, the damage is limited.
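Why geometry matters for swelling can be illustrated with a simple volume-conservation argument. This is an idealized sketch, not a model taken from the research described here: it assumes the electrode material’s volume grows by a hypothetical factor k on lithiation and compares two limiting cases. A flat film bonded to a rigid substrate cannot expand sideways, so its thickness must grow by the full factor k; a body free to swell equally in all directions grows only by k^(1/3) along each dimension. Nanostructures surrounded by free space sit closer to the second case, which is one commonly cited reason they tolerate repeated cycling better.

```python
def clamped_film_thickness_factor(volume_factor: float) -> float:
    """Thickness growth of a thin film bonded to a rigid, flat substrate.

    Assumption: the substrate prevents any in-plane expansion, so the whole
    volume change has to be taken up by the film thickness.
    """
    if volume_factor <= 0:
        raise ValueError("volume_factor must be positive")
    return volume_factor


def free_isotropic_length_factor(volume_factor: float) -> float:
    """Growth of each linear dimension of a body free to swell equally in all directions."""
    if volume_factor <= 0:
        raise ValueError("volume_factor must be positive")
    return volume_factor ** (1.0 / 3.0)


if __name__ == "__main__":
    k = 4.0  # illustrative volume expansion factor, not a measured value
    print(f"clamped film: thickness x{clamped_film_thickness_factor(k):.2f}")
    print(f"free body:    each dimension x{free_isotropic_length_factor(k):.2f}")
```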

Do you have other ideas for improving these 3D-architecture batteries?

TD: One of the key issues for microelectronic components is flexibility. Batteries are no exception to this rule, and we would like to make them stretchable in order to meet certain requirements. However, the new lithium batteries we are discussing here are not yet stretchable: they fracture when subjected to mechanical stress. We are working on making the structure stretchable by modifying the geometry of the electrodes. The idea is to obtain spring-like behavior: combined with a self-repairing electrolyte, this would allow batteries to return to their initial shape after deformation without suffering irreversible damage. We have a patent pending for this type of innovation. This could be a real solution for making autonomous electronic circuits both flexible and stretchable, for a number of applications such as smart electronic textiles.

This article is part of our dossier Digital technology and energy: inseparable transitions!

The TSN Carnot institute, a guarantee of excellence in partnership-based research since 2006
