Millimeter waves for mobile broadband

5G will inevitably involve opening new frequency bands so that operators can increase their data rates. Millimeter waves are among the leading candidates, as they offer many advantages: large bandwidth, adequate range, and small antennas. Whether or not these bands are opened will depend on whether they live up to expectations. The European H2020 project TWEETHER is trying to demonstrate precisely this point.

In telecommunications, the larger the bandwidth, the greater the maximum data rate it can carry. This rule stems from the work of Claude Shannon, the father of information theory. Far from anecdotal, this physical law partly explains the relentless competition between operators (see the insert at the end of the article). They fight for the largest bands in order to provide greater communication speed and, therefore, a higher-quality service to their users. However, the frequencies they use are heavily regulated, as they share the spectrum with other services: naval and satellite communication, bands reserved for law enforcement, medical units, etc. With so many different users, part of the frequency spectrum is now saturated.
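The rule attributed to Shannon is usually written as the Shannon-Hartley theorem, C = B log2(1 + S/N), where B is the bandwidth and S/N the signal-to-noise ratio. The sketch below illustrates it; the function name, the two bandwidths, and the 20 dB signal-to-noise ratio are illustrative assumptions, not figures from the article.

```python
import math

def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Upper bound on the error-free data rate (bit/s) of a noisy channel."""
    snr_linear = 10 ** (snr_db / 10)  # convert dB to a linear power ratio
    return bandwidth_hz * math.log2(1 + snr_linear)

# Illustrative values only: the same assumed SNR, two different bandwidths.
narrow = shannon_capacity_bps(10e6, 20.0)  # a 10 MHz channel
wide = shannon_capacity_bps(1e9, 20.0)     # a 1 GHz channel
print(f"10 MHz channel: {narrow / 1e6:.1f} Mbit/s")
print(f"1 GHz channel:  {wide / 1e9:.2f} Gbit/s")
```

At a fixed signal-to-noise ratio, the bound scales linearly with bandwidth, which is why wider bands are so sought after.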

We must therefore shift to higher frequencies, into previously unused ranges, to allow operators to use more bands and increase their data rates. This is of paramount importance in the development of 5G. Among the potential bands to be used by mobile operators, experts are looking into millimeter waves. “Wavelength is directly linked to frequency,” explains Xavier Begaud, telecommunications researcher at Télécom ParisTech. “The higher the frequency, the shorter the wavelength. Millimeter waves are located at high frequencies, between 30 and 300 GHz.”
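The link between frequency and wavelength described here is the relation wavelength = c / f, where c is the speed of light. As a minimal sketch (the function name and the sample frequencies are chosen for illustration):

```python
SPEED_OF_LIGHT_M_S = 299_792_458  # speed of light in vacuum, in m/s

def wavelength_mm(frequency_hz: float) -> float:
    """Free-space wavelength in millimeters for a given frequency in hertz."""
    return SPEED_OF_LIGHT_M_S / frequency_hz * 1000

for f_ghz in (30, 60, 300):
    print(f"{f_ghz} GHz -> {wavelength_mm(f_ghz * 1e9):.2f} mm")
```

The 30 to 300 GHz range corresponds to wavelengths from roughly 10 mm down to roughly 1 mm, hence the name “millimeter waves”.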

Besides offering large bandwidth, they have several other advantages. With a range of between several hundred meters and a few kilometers, they match the size of the microcells planned for improving the country’s network coverage. With the rising number of smartphones and mobile devices, the current cells, which span several tens of kilometers, are saturated. By reducing the size of the cells, the capacity of each antenna would be shared among fewer users. This would provide better service for everyone. In addition, cellular communication is less effective when the user is far from the antenna. Smaller cells mean users are closer to the antennas.
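The reasoning about cell size can be made concrete with a deliberately simplified model. It assumes circular cells, a uniform user density, the same total capacity per antenna, and equal sharing among users; all numbers are hypothetical and serve only to show the scaling.

```python
import math

def per_user_rate_mbps(cell_capacity_mbps: float,
                       users_per_km2: float,
                       cell_radius_km: float) -> float:
    """Average rate per user if the cell capacity is shared equally."""
    cell_area_km2 = math.pi * cell_radius_km ** 2
    users_in_cell = users_per_km2 * cell_area_km2
    return cell_capacity_mbps / users_in_cell

# Hypothetical figures: 1 Gbit/s per antenna, 100 active users per km².
large_cell = per_user_rate_mbps(1000, 100, cell_radius_km=10)
small_cell = per_user_rate_mbps(1000, 100, cell_radius_km=1)
print(f"Gain from a 10x smaller radius: {small_cell / large_cell:.0f}x")
```

Under these assumptions, the number of users per cell grows with the square of the radius, so dividing the radius by 10 multiplies each user's share by 100.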

Another advantage is that the size of an antenna scales with the length of the waves it transmits and receives. For millimeter waves, base stations and other transmission hubs would be a few centimeters in size at most. Beyond aesthetics, the discreetness of millimeter-wave devices would be appreciated as people become wary of electromagnetic waves and the large antennas used by operators. In addition, smaller base stations would require fewer installation operations, making deployment quicker and less costly.

An often-cited downside of these waves is that they are attenuated by the atmosphere. Oxygen in the air absorbs strongly around 60 GHz, and other molecules absorb the waves above and below this frequency. This is an unavoidable limitation, but for Xavier Begaud, this characteristic may also be seen as an advantage. This natural attenuation means the waves can be confined to small areas. “By limiting their propagation, we can minimize interference with other 60 GHz systems,” the researcher highlights.
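To give a feel for how absorption confines a 60 GHz signal, the sketch below adds an atmospheric absorption term to the standard free-space path loss formula, 20 log10(4πdf/c). The absorption value of 15 dB/km is an assumed order of magnitude for oxygen near 60 GHz at sea level, not a figure from the article, and the function names are illustrative.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458  # speed of light in vacuum, in m/s

def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Loss due to the geometric spreading of the wave (free-space formula)."""
    return 20 * math.log10(
        4 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S
    )

def total_loss_db(distance_m: float, frequency_hz: float,
                  absorption_db_per_km: float) -> float:
    """Free-space loss plus atmospheric absorption, which is linear in distance."""
    absorption_db = absorption_db_per_km * distance_m / 1000
    return free_space_path_loss_db(distance_m, frequency_hz) + absorption_db

ASSUMED_O2_ABSORPTION_DB_PER_KM = 15.0  # assumption, order of magnitude only

for distance_m in (100, 1000, 5000):
    spreading = free_space_path_loss_db(distance_m, 60e9)
    total = total_loss_db(distance_m, 60e9, ASSUMED_O2_ABSORPTION_DB_PER_KM)
    print(f"{distance_m} m: spreading {spreading:.1f} dB, total {total:.1f} dB")
```

Spreading loss grows only logarithmically with distance, whereas absorption in decibels grows linearly, so absorption increasingly dominates over longer links. This is what keeps such signals within a small area.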

TWEETHER: creating infrastructure for millimeter waves

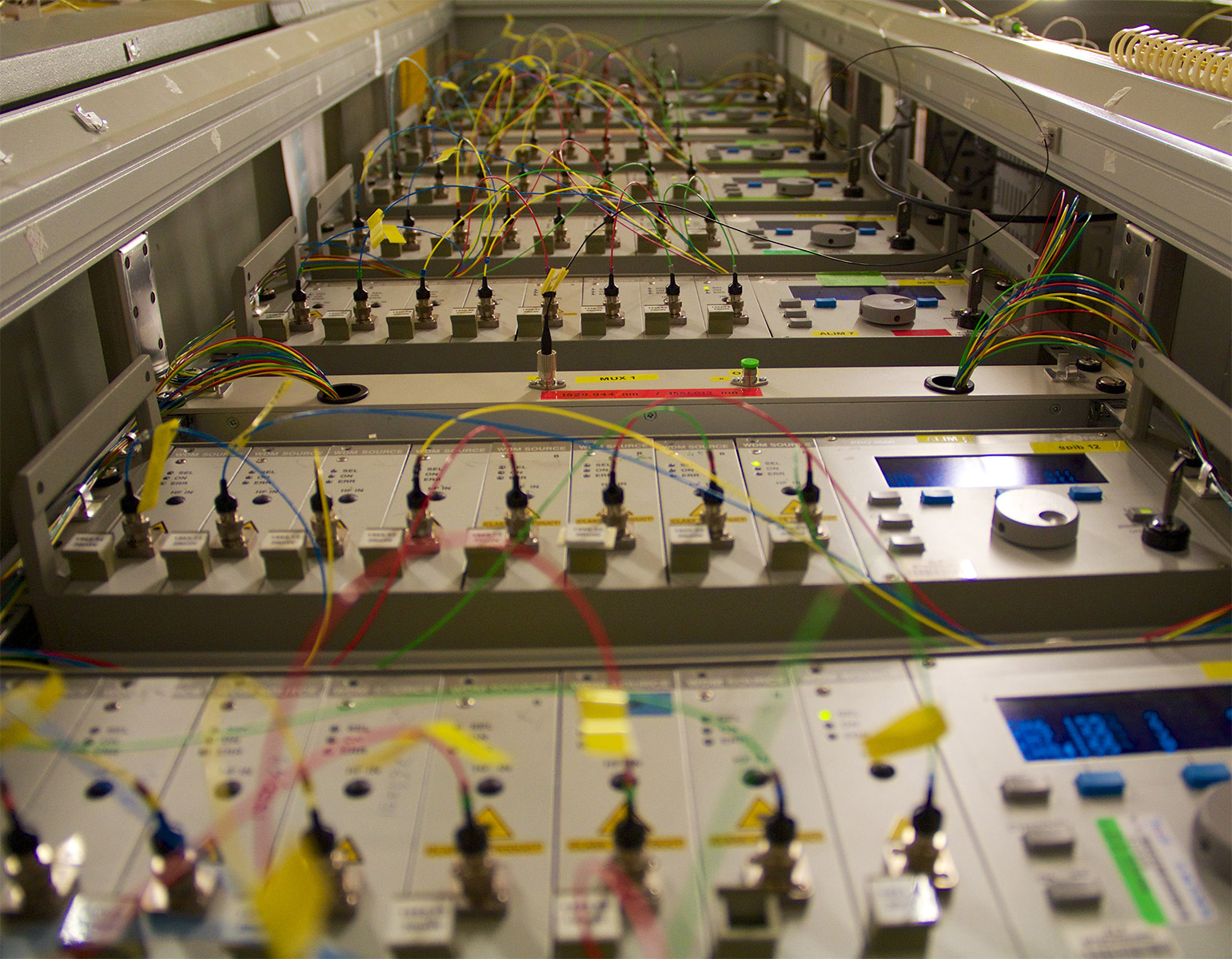

Since 2015, Xavier Begaud has been involved in the European project TWEETHER, funded by the H2020 program and led by Prof. Claudio Paoloni from Lancaster University. The partners include both public actors (Goethe University of Frankfurt, Universitat Politècnica de València, Télécom ParisTech) and private actors (Thales Electron Devices, OMMIC, HFSE GmbH, Bowen, Fibernova Systems SL) working on creating a demonstration of infrastructure for 2018. The objective of the TWEETHER project is to set a milestone in millimeter wave technology by building the first W-band (92-95 GHz) wireless system for distributing high-speed Internet everywhere. TWEETHER aims to create the millimeter wave point-to-multipoint segment that finally links fiber and sub-6 GHz distribution, the last distribution stage, which is currently handled by LTE and WiFi, and soon by 5G. This would result in a full three-segment hybrid network (fiber, TWEETHER system, sub-6 GHz distribution), which is the most cost-effective architecture for reaching individual mobile or fixed end users. The TWEETHER system will provide economical broadband connectivity with a capacity of up to 10 Gbits/km² and distribute hundreds of Mbps to tens of terminals. This will make it possible to overcome the capacity and coverage challenges of current backhaul and access solutions.

This system has been made possible thanks to recent technological progress. As many parts of the system must be millimetric in size, they have to be designed with great precision. One of the essential elements in the TWEETHER system is the traveling-wave tube used in the hub, which amplifies the power of the waves. The tube itself is not a new invention, and has been used for other frequency ranges for several decades. However, for millimeter waves, it needed to be miniaturized, which was previously impossible, to deliver close to 40 W of power for TWEETHER. Creating the antennas (several horns and a lens) for systems of this scale is also challenging at these high frequencies. This part was supervised by Xavier Begaud. The antennas were measured at Télécom ParisTech in an anechoic chamber, allowing researchers to characterize the radiation patterns up to 110 GHz. The project overcame scientific and technological barriers, opening up possibilities for large-scale millimeter wave systems.

The TWEETHER project is a clear example of the potential of millimeter waves for providing broadband Internet to a large number of users. Beyond basic mobile communication, they could also provide an attractive alternative to Fiber to the Home (FTTH), which requires extensive civil engineering work and entails high maintenance costs. Transporting data to buildings using wireless broadband channels rather than fiber could therefore interest operators.

This article is part of our dossier 5G: the new generation of mobile is already a reality

[box type="info" align="" class="" width=""]

A fight over bands between operators, under the watch of Arcep

In France, the allocation of frequency bands is managed by the telecommunications regulatory authority, Arcep. When the agency decides to open a new range of frequencies to operators, it holds an auction. In 2011, for example, the bands at 800 MHz and 2.6 GHz were sold to four French operators for a total sum of €3.5 billion, to the benefit of the State, as Arcep is a governmental authority. In practice, “opening the band at 800 MHz” means selling operators duplex lots of 10 MHz (10 MHz for uploading and the same for downloading) around this frequency. SFR, for example, paid over €1 billion for the band between 842 and 852 MHz for uploading, and between 801 and 811 MHz for downloading. The same applied to the band at 2.6 GHz, sold in lots of 15 or 20 MHz.

[/box]