Machines surfing the web

The interconnection of objects via the internet has developed steadily, and this trend is set to continue in the years to come. One way for machines to communicate with each other is the Semantic Web. Here is an explanation of the concept.

“The Semantic Web gives machines similar web access to that of humans,” says Maxime Lefrançois, an artificial intelligence researcher at Mines Saint-Etienne. Companies currently use this area of the web to gather and share information, in particular for users. It makes it possible to adapt product offers to consumer profiles, for example. At present, the Semantic Web occupies an important position in research on the Internet of Things, i.e. the interconnection of machines and objects via the internet.

By making machines work together, the Internet of Things can be a means of developing new applications. These would serve both individuals and professional sectors, such as intelligent buildings or digital agriculture. The last two examples are also the subject of the CoSWoT [1] project, funded by the French National Research Agency (ANR). This initiative, in which Maxime Lefrançois is participating, aims to provide new knowledge about the use of the Semantic Web by devices.

To do so, the project’s researchers installed sensors and actuators in the INSA Lyon buildings on the LyonTech-la Doua campus, the Espace Fauriel building of Mines Saint-Etienne, and the INRAE experimental farm in Montoldre. These sensors record information such as the opening of a window or the temperature and CO2 levels in a room. Thanks to a digital representation of a building or block, scientists can construct applications that use the information provided by sensors, enrich it and make decisions that are transmitted to actuators.

Such applications can measure the CO2 concentration in a room, and according to a pre-set threshold, open the windows automatically for fresh air. This could be useful in the current pandemic context, to reduce the viral load in the air and thereby reduce the risk of infection. Beyond the pandemic, the same sensors and actuators can be used in other cases for other purposes, such as to prevent the build-up of pollutants in indoor air.
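The decision rule described above can be sketched in a few lines of Python. This is only an illustration: the threshold value and the function name are assumptions, since the article does not give the values used in the project.

```python
# Hypothetical pre-set threshold in parts per million; the article gives no value.
CO2_THRESHOLD_PPM = 1000


def decide_window_action(co2_ppm: float, window_is_open: bool) -> str:
    """Return the command an application might send to the window actuator."""
    if co2_ppm > CO2_THRESHOLD_PPM and not window_is_open:
        return "open"  # air is stale and the window is still closed
    return "none"      # below the threshold, or the window is already open
```

The sensor supplies the reading, the application applies the rule, and only the resulting command is passed on to the actuator.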

A dialog with maps

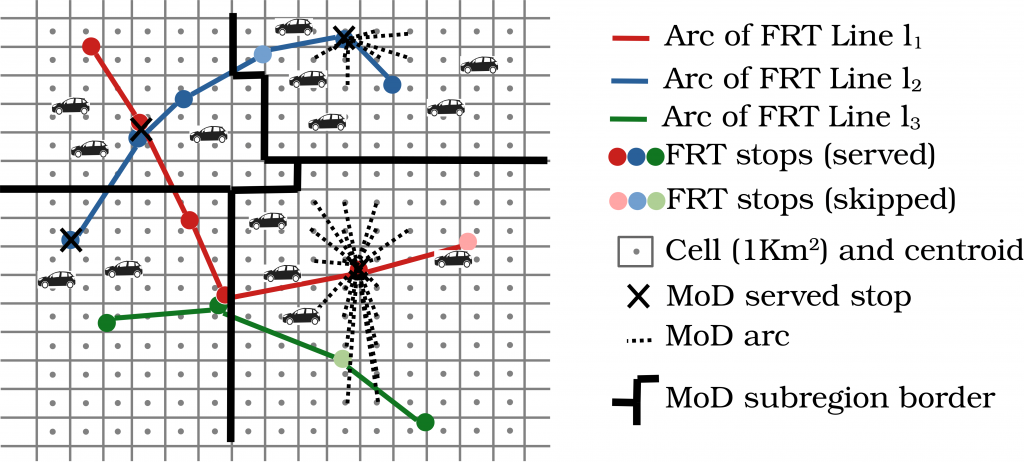

The main characteristic of the Semantic Web is that it records information in knowledge graphs: kinds of maps made up of nodes representing objects, machines or concepts, and arcs that connect them to one another, representing their relationships. Each node and arc is identified by an Internationalized Resource Identifier (IRI): a code that makes it possible for machines to recognize each other and identify and control objects such as a window, or concepts such as temperature.
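As a rough illustration, a knowledge graph can be written as a set of (subject, relationship, object) triples in which every element is an IRI. The namespace and all the names below are invented for the example; they are not the identifiers used in CoSWoT.

```python
# Hypothetical namespace; every IRI below is made up for illustration.
EX = "https://example.org/building/"

# Each triple is one arc of the graph: (source node, arc label, target node).
graph = {
    (EX + "window1", EX + "isPartOf", EX + "room12"),
    (EX + "co2sensor1", EX + "isLocatedIn", EX + "room12"),
    (EX + "co2sensor1", EX + "observes", EX + "CO2Concentration"),
}


def objects(graph, subject, predicate):
    """Return every node reached from `subject` by following `predicate`."""
    return {o for (s, p, o) in graph if s == subject and p == predicate}


observed = objects(graph, EX + "co2sensor1", EX + "observes")
```

Here `observed` contains the single IRI that stands for the CO2 concentration, which is how a machine would find out what the sensor measures.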

Depending on the number of knowledge graphs built up and the amount of information they contain, a device will be able to identify objects and items of interest with varying degrees of precision. A graph that recognizes a temperature identifier will indicate, depending on how detailed it is, the unit used to measure it. “By combining multiple knowledge graphs, you obtain a graph that is more complete, but also more complex,” says Lefrançois. “The more complex the graph, the longer it will take for the machine to decrypt,” adds the researcher.
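The temperature example can be sketched as follows, assuming graphs are stored as sets of triples so that combining them is a simple union. The vocabulary, sensor name and helper function are all hypothetical.

```python
VOCAB = "https://example.org/vocab/"     # hypothetical vocabulary namespace
SITE = "https://example.org/building/"   # hypothetical building namespace

# Graph held by the device: it knows what the sensor observes, nothing more.
device_graph = {
    (SITE + "sensor7", VOCAB + "observes", VOCAB + "RoomTemperature"),
}

# Graph published elsewhere: it states the unit used for that quantity.
units_graph = {
    (VOCAB + "RoomTemperature", VOCAB + "hasUnit", VOCAB + "DegreeCelsius"),
}


def unit_of(graph, sensor):
    """Follow observes, then hasUnit, from a sensor; None if a link is missing."""
    for s, p, quantity in graph:
        if s == sensor and p == VOCAB + "observes":
            for s2, p2, unit in graph:
                if s2 == quantity and p2 == VOCAB + "hasUnit":
                    return unit
    return None


# Combining two graphs is a set union in this representation.
merged_graph = device_graph | units_graph
```

With `device_graph` alone the unit cannot be found. With `merged_graph` it can, at the cost of a larger graph to search.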

Means to optimize communication

The objective of the CoSWoT project is to simplify dialog between autonomous devices. It is a question of ‘integrating’ the complex processing linked with the Semantic Web into objects with limited computing capabilities, and of limiting the amount of data exchanged in wireless communication to preserve their batteries. This represents a challenge for Semantic Web research. “It needs to be possible to integrate and send a small knowledge graph in a tiny amount of data,” explains Lefrançois. This optimization makes it possible to improve the speed of data exchanges and related decision-making, as well as to contribute to greater energy efficiency.
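To give a sense of why the size of a message matters, here is one possible way of shrinking a small graph. It is not necessarily the technique used in CoSWoT. The sketch assumes that both devices already share a table of IRIs, so that each IRI can be sent as a one-byte index instead of a long string. The table contents are hypothetical.

```python
# Hypothetical table of IRIs that both devices are assumed to hold already.
SHARED_TABLE = [
    "https://example.org/building/co2sensor1",
    "https://example.org/vocab/observes",
    "https://example.org/vocab/CO2Concentration",
]
INDEX = {iri: i for i, iri in enumerate(SHARED_TABLE)}


def encode(triples):
    """Pack each triple as three one-byte indexes into the shared table."""
    return bytes(INDEX[term] for triple in triples for term in triple)


def decode(payload):
    """Rebuild the list of triples from the packed indexes."""
    terms = [SHARED_TABLE[b] for b in payload]
    return [tuple(terms[i:i + 3]) for i in range(0, len(terms), 3)]


triples = [(SHARED_TABLE[0], SHARED_TABLE[1], SHARED_TABLE[2])]
payload = encode(triples)                        # compact form sent over the air
verbose = " ".join(triples[0]).encode("utf-8")   # same triple as plain text
```

In this toy case `payload` is three bytes long, against more than a hundred for `verbose`. The limitation is that the scheme only works for IRIs already in the shared table, and for tables of at most 256 entries.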

With this in mind, the researcher is interested in what he calls ‘semantic interoperability’, with the aim of “ensuring that all kinds of machines understand the content of messages that they exchange,” he states. Typically, a connected window produced by one company must be able to be understood by a CO2 sensor developed by another company, which itself must be understood by the connected window. There are two approaches to achieve this objective. “The first is that machines use the same dictionary to understand their messages,” specifies Lefrançois. “The second involves ensuring that machines solve a sort of treasure hunt to find how to understand the messages that they receive,” he continues. In this way, devices are not limited by language.

IRIs in service of language

Furthermore, solving these treasure hunts is made possible by IRIs and the use of the web. “When a machine receives an IRI, it does not need to automatically know how to use it,” says Lefrançois. “If it receives an IRI that it does not know how to use, it can find information on the Semantic Web to learn how,” he adds. This is analogous to how humans may search online for expressions that they do not understand, or learn how to say a word in a foreign language that they do not know.
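The lookup behavior the researcher describes can be sketched as below. To keep the example offline, a dictionary stands in for the web; a real device would instead send a request to the IRI and parse the description returned. The IRI, the description fields and the function name are all assumptions.

```python
# Stand-in for the web: maps an IRI to the description a server might return.
# This is an assumption made to keep the sketch runnable without a network.
FAKE_WEB = {
    "https://example.org/vocab/DegreeCelsius": {
        "label": "degree Celsius",
        "quantity": "temperature",
    },
}


def describe(iri, known, web=FAKE_WEB):
    """Return what the device knows about an IRI, looking it up if needed."""
    if iri not in known:
        description = web.get(iri)
        if description is None:
            return None           # nothing is published at this IRI
        known[iri] = description  # remember it for the next message
    return known[iri]


known = {}  # the device starts out knowing nothing
result = describe("https://example.org/vocab/DegreeCelsius", known)
```

After the first call the description is stored in `known`, so the device does not need to look the same IRI up again.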

However, for now, there are compatibility problems between devices, precisely because they are designed by different manufacturers. “In the medium term, the CoSWoT project could influence the standardization of device communication protocols, in order to ensure compatibility between machines produced by different manufacturers,” the researcher believes. This will be a necessary step in the widespread roll-out of connected objects in our everyday lives and in companies.

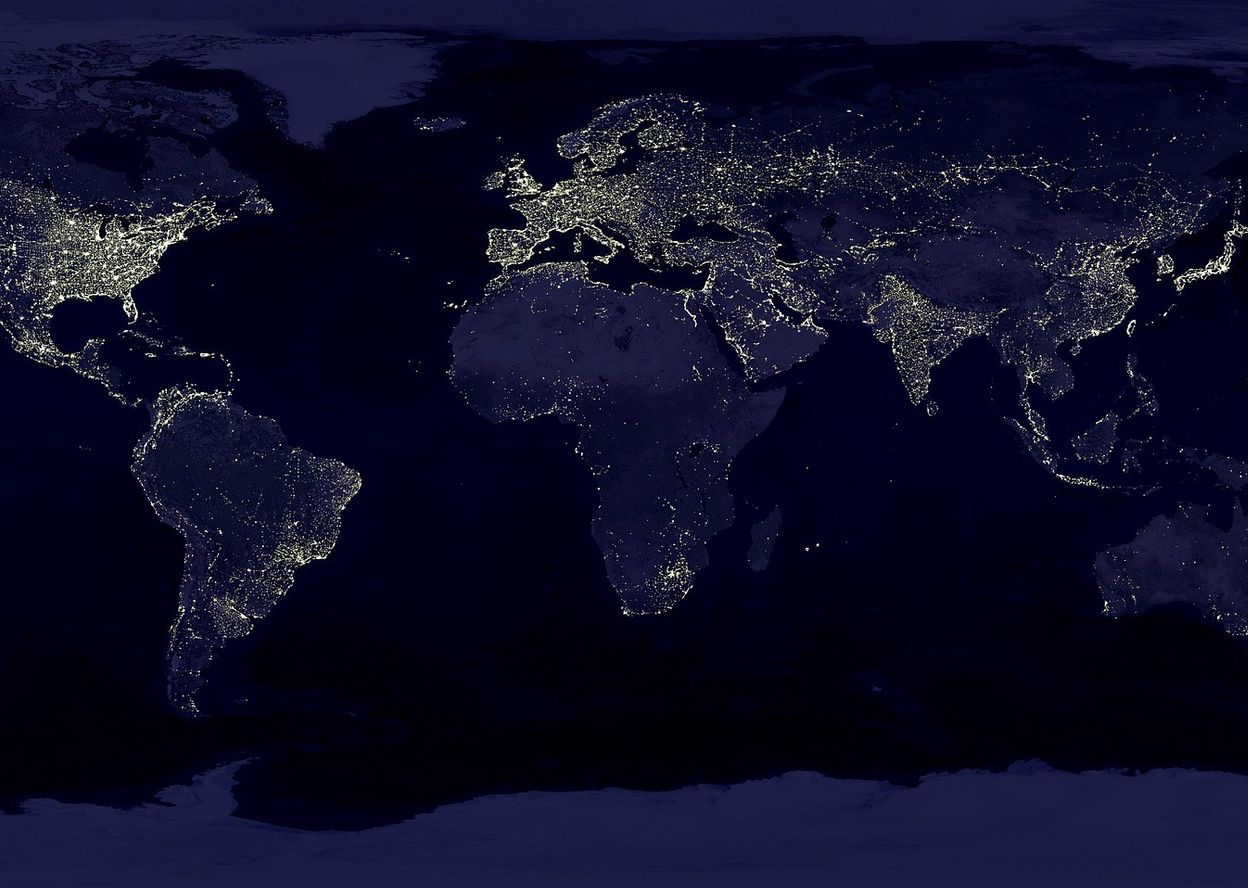

While research firms are competing to best estimate the position that the Internet of Things will hold in the future, all agree that the world market for this sector will represent hundreds of billions of dollars in five years’ time. As for the number of objects connected to the internet, there could be as many as 20 to 30 billion by 2030, i.e. far more than the number of humans. And with the objects likely to use the internet more than us, optimizing their traffic is clearly a key challenge.

[1] The CoSWoT project is a collaboration between the LIMOS laboratory (UMR CNRS 6158, which includes Mines Saint-Étienne), LIRIS (UMR CNRS 5205), the Hubert Curien laboratory (UMR CNRS 5516), INRAE, and the company Mondeca.

Rémy Fauvel

Read on I’MTech