Hervé Debar, Télécom SudParis – Institut Mines-Télécom, Université Paris-Saclay

The world of cybersecurity has changed drastically over the past 20 years. In the 1980s, information systems security was a rather niche field with a focus on technical excellence. The notion of financial gain was more or less absent from attackers’ motivations. It was in the early 2000s that the first security products started to be marketed: firewalls, identity or event management systems, detection sensors, etc. At the time these products were clearly identified, as was their cost, which was sometimes high. Almost twenty years later, things have changed: attacks are now a source of financial gain for attackers.

What is the cost of an attack?

Today, financial motivations are usually behind attacks. An attacker’s goal is to obtain money from victims, either directly or indirectly, whether through requests for ransom (ransomware) or denial of service. Spam was one of the first ways to earn money, by selling illegal or counterfeit products. Since then, attacks on digital currencies such as bitcoin have become quite popular. Attacks on telephone systems are also extremely lucrative in an age where smartphones and computer technology are ubiquitous.

It is extremely difficult to assess the cost of cyber-attacks due to the wide range of approaches used. Two different sources can, however, help estimate the losses incurred: service providers and the scientific community.

On the service provider side, a report by American service provider Verizon entitled “Data Breach Investigations Report 2017” measures the number of records compromised by an attacker during an attack but does not convert this information into monetary value. Meanwhile, IBM and Ponemon indicate an average cost of $141 US per record compromised, while specifying that this cost is subject to significant variations depending on country, industrial sector, etc. And a report published by Accenture during the same period assesses the average annual cost of cybersecurity incidents as approximately $11 million US (for 254 companies).
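To give a sense of scale, the per-record figure can be turned into a rough order-of-magnitude estimate. The short Python sketch below simply multiplies a number of compromised records by the $141 average quoted above. The function name and the 10,000-record example are hypothetical, and the linear scaling is a simplifying assumption: as noted, the real cost varies significantly by country and sector.

```python
# Average cost per compromised record quoted in the article (IBM / Ponemon), in US dollars.
AVG_COST_PER_RECORD_USD = 141


def estimate_breach_cost(records_compromised, cost_per_record=AVG_COST_PER_RECORD_USD):
    """Rough estimate only: assumes cost scales linearly with the number of records."""
    if records_compromised < 0:
        raise ValueError("records_compromised must be non-negative")
    return records_compromised * cost_per_record


# Hypothetical breach of 10,000 records (illustrative figure, not taken from any report).
estimate = estimate_breach_cost(10_000)
print(f"Estimated cost: ${estimate:,} US")
```

Passing a different `cost_per_record` makes it possible to reflect a sector or country where the average is higher or lower.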

How much money do the attackers earn?

In 2008, American researchers tried to assess the earnings of a spam network operator. The goal was to determine the extent to which an unsolicited email could lead to a purchase. By analyzing half a billion spam messages sent by two networks of infected machines (botnets), the authors estimated that the hackers who managed the networks earned $3 million US. However, the net profit is very low. Additional studies have shown the impact of cyber-attacks on the share prices of corporate victims. This cybersecurity economics topic has also been developed as part of the Workshop on the Economics of Information Security.

The figures may appear to be high, but as is traditionally the case for Internet services, attackers benefit from a network effect in which the cost of adding a victim is low, but the cost of creating and installing the attack is very high. In the case studied in 2008, the emails were sent using the Zeus botnet. Since this network steals computing resources from the compromised machines, the initial cost of the attack was also very low.

In short, the cost of cyber-attacks has been a topic of study for many years now. Both academic and commercial studies exist. Nevertheless, it remains difficult to determine the exact cost of cyber-attacks. It is also worth noting that it has historically been greatly overestimated.

The high costs of defending against attacks

Unfortunately, defending against attacks is also very expensive. While an attacker only has to find and exploit one vulnerability, those in charge of defending against attacks have to manage all possible vulnerabilities. Furthermore, there is an ever-growing number of vulnerabilities discovered every year in information systems. Additional vulnerabilities are regularly introduced by the implementation of new services and products, sometimes unbeknownst to the administrators responsible for a company network. One such case is the “bring your own device” (BYOD) model. By authorizing employees to work on their own equipment (smartphones, personal computers) this model destroys the perimeter defense that existed a few years ago. Far from saving companies money, it introduces an additional dose of vulnerability.

The cost of security tools remains high as well. Firewalls or detection sensors can cost as much as €100,000, and a monitoring platform to manage all this security equipment can cost up to ten times as much. Furthermore, monitoring must be carried out by professionals, and there is a shortage of these skills in the labor market. Overall, the deployment of protection and detection solutions amounts to millions of euros every year.

Moreover, it is difficult to determine the effectiveness of detection centers intended to prevent attacks because we do not know the precise number of failed attacks. A number of initiatives, such as Information Security Indicators, are however attempting to answer this question. One thing is certain: every day information systems can be compromised or made unavailable, given the number of attacks that are continually carried out on networks. The spread of the WannaCry malware proved how brutal certain attacks can be and how hard it can be to predict their development.

Unfortunately, the only effective defense is often updating vulnerable systems once flaws have been discovered. This has few consequences for a workstation, but is more difficult on servers, and can be extremely difficult in high-constraint environments (critical servers, industrial protocols, etc.). These maintenance operations always have a hidden cost, linked to the unavailability of the hardware that must be updated. And there are also limitations to this strategy. Certain updates are impossible to implement, as is the case with Skype, where the fix requires a major software update, leaving its status uncertain. Other updates can be extremely expensive, such as those used to correct the Spectre and Meltdown vulnerabilities that affect the microprocessors of most computers. Intel has now stopped patching the vulnerability in older processors.

A delicate decision

The problem of security comes down to a rather traditional risk analysis, in which an organization must decide which risks to protect itself against, how subject it is to risks, and which ones it should insure itself against.

In terms of protection, it is clear that certain filtering tools such as firewalls are imperative in order to preserve what is left of the perimeter. Other choices are more controversial, such as Netflix’s decision to abandon anti-virus software and rely instead on massive data analysis to detect cyber-attacks.

It is very difficult to assess how subject a company is to risks since they are often the result of technological advances in vulnerabilities and attacks rather than a conscious decision made by the company. Attacks through denial of service, like the one carried out in 2016 using the Mirai malware, for example, are increasingly powerful and therefore difficult to counter.

The insurance strategy for cyber-risk is even more complicated, since premiums are extremely difficult to calculate. Cyber-risk is often systemic, since a single flaw can affect a large number of clients. The risk of natural catastrophe is limited to a region, which allows insurance companies to spread the risk over their various clients and calculate a future risk based on risk history. Computer vulnerabilities, by contrast, are often widespread, as can be seen in recent examples such as the Meltdown, Spectre and Krack flaws. Almost all processors and WiFi terminals are vulnerable.

Another aspect that makes it difficult to estimate risks is that vulnerabilities are often latent, which means that only a small community is aware of them. The flaw used by the WannaCry malware had already been identified by the NSA, the US National Security Agency (under the name EternalBlue). The attackers who used the flaw learned about its existence from documents leaked from the American government agency itself.

How can security be improved? The basics are still fragile

Faced with a growing number of vulnerabilities and problems to solve, it seems essential to reconsider the way internet services are built, developed and operated. In other industrial sectors the answer has been to develop standards and certify products in relation to these standards. This means guaranteeing smooth operations, often in a statistical manner. The aeronautics industry, for example, certifies its aircraft and pilots and has very strong results in terms of safety. In a more closely-related sector, telephone operators in the 1970s guaranteed excellent network reliability with a risk of service disruption lower than 0.0001 %.

This approach also exists in the internet sector, with certifications based on the Common Criteria. These certifications often result from military or defense needs. They are therefore expensive and take a long time to obtain, which is often incompatible with the speed-to-market required for internet services. Furthermore, standards that could be used for these certifications are often insufficient or poorly suited for civil settings. Solutions have been proposed to address this problem, such as the CSPN certification defined by the ANSSI (French National Information Systems Security Agency). However, the scope of the CSPN remains limited.

It is also worth noting that computer languages have consistently been positioned in favor of quick, easy production of code. In the 1970s, languages that chose ease over rigor came into favor. These languages may be the source of significant vulnerabilities. The recent PHP case is one such example. Used by millions of websites, it was one of the major causes of SQL injection vulnerabilities.
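To make the SQL injection problem concrete, here is a minimal sketch. It is written in Python with the standard `sqlite3` module rather than PHP, and the table, data and function names are all hypothetical, chosen only for illustration. It contrasts a query built by string concatenation, which is the pattern behind this class of vulnerability, with a parameterized query.

```python
import sqlite3


def build_demo_db():
    """Create a small in-memory database (hypothetical schema, for illustration only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def find_user_unsafe(conn, name):
    # Vulnerable pattern: user input is pasted directly into the SQL text,
    # so quotes in the input can change the structure of the query.
    query = "SELECT name, secret FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Parameterized query: the driver passes the input as data, never as SQL.
    return conn.execute(
        "SELECT name, secret FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = build_demo_db()
malicious_input = "nobody' OR '1'='1"

leaked = find_user_unsafe(conn, malicious_input)   # condition becomes always true
blocked = find_user_safe(conn, malicious_input)    # input is treated as a literal name

print("unsafe query returned", len(leaked), "rows")
print("safe query returned", len(blocked), "rows")
```

The concatenated version is quicker to write, which illustrates the trade-off between ease and rigor described above. The parameterized version costs almost nothing extra but requires the developer to know it exists.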

The cost of cybersecurity, a question no longer asked

In strictly financial terms, cybersecurity is a cost center that directly impacts a company or administration’s operations. It is important to note that choosing not to protect an organization against attacks amounts to attracting attacks since it makes the organization an easy target. As is often the case, it is therefore worthwhile to provide a reminder about the rules of computer hygiene.

The cost of computer flaws is likely to increase significantly in the years ahead. And more generally, the cost of repairing these flaws will rise even more dramatically. We know that the point at which an error is identified in computer code greatly affects how expensive it is to repair: the earlier it is detected, the less damage is done. It is therefore imperative to improve development processes in order to prevent programming errors from quickly becoming remote vulnerabilities.

IT tools are also being improved. Stronger languages are being developed. These include new languages like Rust and Go, and older languages that have come back into fashion, such as Scheme. They represent stronger alternatives to the languages currently taught, without going back to languages as complicated as Ada, for example. It is essential that teaching practices progress in order to factor in these new languages.

Wasted time, stolen or lost data… We have been slow to recognize the loss of productivity caused by cyber-attacks. It must be acknowledged that cybersecurity now contributes to a business’s performance. Investing in effective IT tools has become an absolute necessity.

Hervé Debar, Head of Department Networks and Telecommunications services, Télécom SudParis – Institut Mines-Télécom, Université Paris-Saclay

The original version of this article (in French) was published on The Conversation.

Also read on I’MTech:

[one_half]

[/one_half][one_half_last]

[/one_half_last]

EURECOM is coordinating the three-year European project, PAPAYA, launched on May 1st. Its mission: enable cloud services to process encrypted or anonymized data without having to access the unencrypted data. Melek Önen, a researcher specialized in applied cryptography, is leading this project. In this interview she provides more details on the objectives of this H2020 project.

EURECOM is coordinating the three-year European project, PAPAYA, launched on May 1st. Its mission: enable cloud services to process encrypted or anonymized data without having to access the unencrypted data. Melek Önen, a researcher specialized in applied cryptography, is leading this project. In this interview she provides more details on the objectives of this H2020 project.

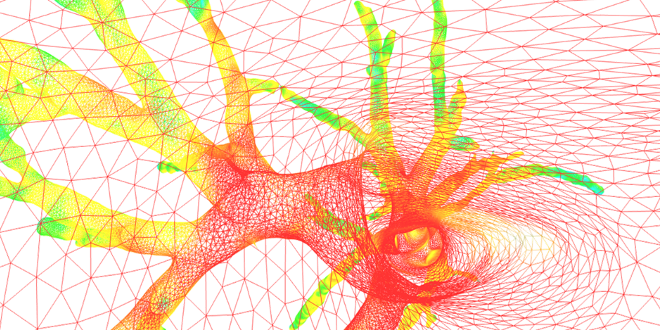

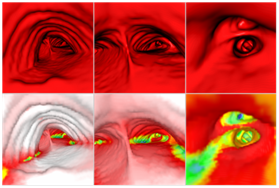

AirWays software uses a graphic grid representation of bronchial tube surfaces after analyzing clinical images and then generates 3D images to view them both “inside and outside” (above, a view of the local bronchial diameter using color coding). This technique allows doctors to plan more effectively for endoscopies and operations that were previously performed by sight.

AirWays software uses a graphic grid representation of bronchial tube surfaces after analyzing clinical images and then generates 3D images to view them both “inside and outside” (above, a view of the local bronchial diameter using color coding). This technique allows doctors to plan more effectively for endoscopies and operations that were previously performed by sight. “For now, we have limited ourselves to the diagnosis-analysis aspect, but I would also like to develop a predictive aspect,” says the researcher. This perspective is what motivated

“For now, we have limited ourselves to the diagnosis-analysis aspect, but I would also like to develop a predictive aspect,” says the researcher. This perspective is what motivated