Acklio: linking connected objects to the internet

With the phenomenal growth connected objects are experiencing, networks to support them have become a crucial underlying issue. Networks called “LPWAN” provide long-range communication and energy efficiency, making them perfectly suited to the Internet of Things, and are set to become standards. But first, they must be successfully integrated within traditional internet networks. This is precisely the mission of the very promising start-up, Acklio. Developed at IMT Atlantique, the start-up was a finalist for the Bercy-IMT Innovation Awards and will attend CES 2019 from 8 to 11 January.

How many will there be in 2020? 2 billion? 30 billion? Maybe even 80 billion? Although estimates of the number of connected objects that will exist in five years vary by a factor of four depending on which consulting firm or think tank you ask, one thing seems certain: the number of objects will have nine or more zeros. All of them will need to be connected to the internet in order to exchange data with the cloud, our email accounts or smartphone applications.

But connected objects are not like computers: they do not have fiber optic connections, and few of them use WiFi to communicate. The Internet of Things relies on specific radio networks called LPWAN—the best-known examples of which are LoRa and Sigfox. One of the major challenges in deploying the IoT is therefore to successfully ensure rapid, efficient data transfer between LPWAN networks and the internet. This is precisely the aim of Acklio, a start-up founded by two IMT Atlantique researchers: Laurent Toutain and Alexander Pelov.

Alexander Pelov explains why industrial players are interested in LPWAN networks: “Using just 3 AAA batteries, we can now power a connected gas meter that will transmit one message per day for a period of 20 years. These networks are extremely energy-efficient and make it possible to reduce the cost of communications.” From GPS tracking of objects, animals and people to logistics, alarm systems and more, all industries that wish to make use of connected objects will rely on these networks.

For Alexander Pelov, however, this poses a problem. “Depending on whether we choose the LoRa or Sigfox technology to set up the LPWAN network for the connected objects, a different approach will be used. The developers won’t work in exactly the same way, for example,” he explains. So it would be impossible to scale up in terms of infrastructure or environment to deploy multiple connected objects. It would also be difficult to ensure fluid data transfer between the LPWAN networks and the internet if each network is different. In other words, this represents a major hurdle in the development of IoT.

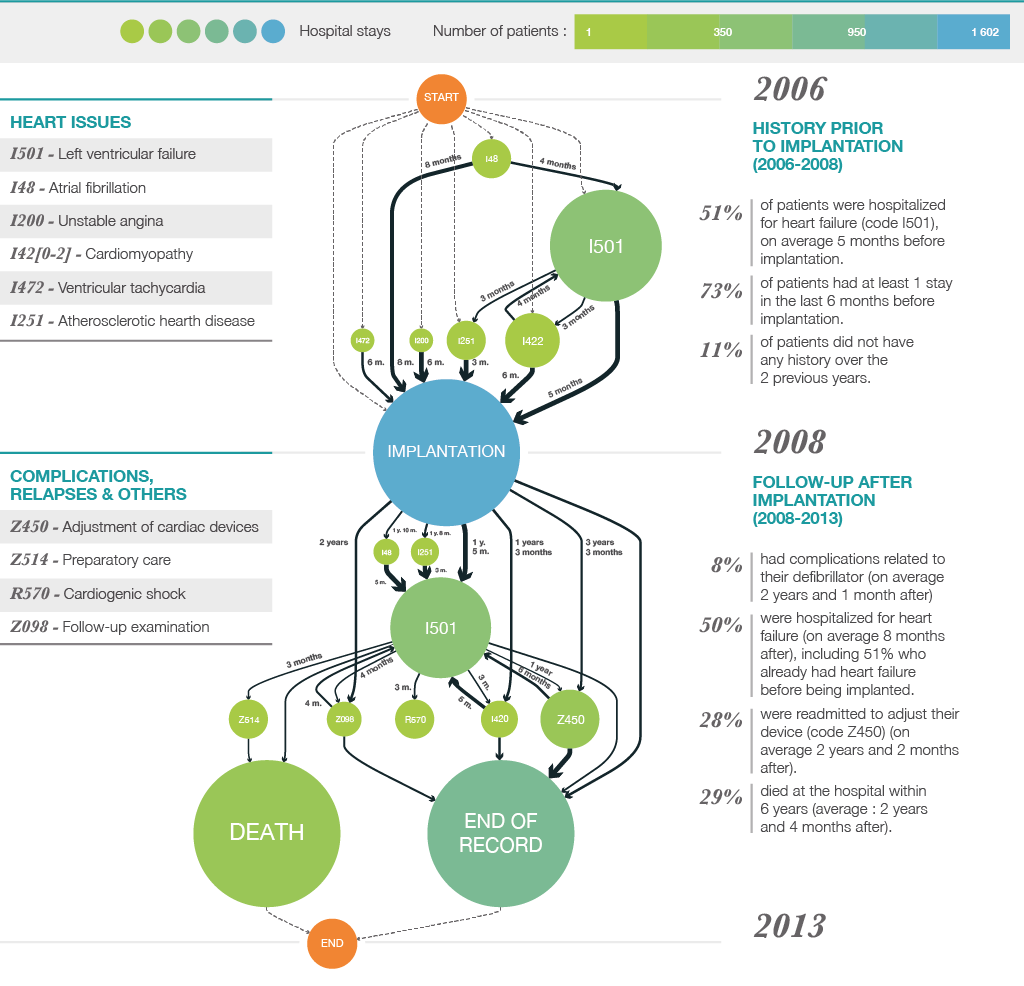

To overcome this obstacle, Acklio’s team integrates basic LPWAN protocols into standard internet protocols such as IPv6. Alexander Pelov sums up his start-up’s approach as follows: “We define a generic architecture and add it at the server level, which controls the connected objects. Then, we send messages from these objects to the internet and vice versa via this architecture.” Acklio’s technological building block thus acts as an intermediary in the transmission of data from one environment to another.

It is based on the principle of data compression and fragmentation. The role of the technology is first of all to compress the header of a data packet using a mechanism called SCHC (static context header compression). This is a crucial step for providing internet connectivity within the LPWAN network. Since compression is impossible at times, or may produce data packets that are still too large for the LPWAN network, Acklio also makes it possible to fragment the IPv6 data packets. This two-in-one technology will allow developers to work without worrying about which LPWAN technology is used for the IoT application they are developing.
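
To make the compress-then-fragment idea more concrete, here is a minimal Python sketch. It is a toy illustration, not Acklio's implementation and not the SCHC wire format: the shared `CONTEXT` table, the rule IDs, the two-byte fragment header and the 12-byte frame limit are all assumptions chosen for readability. The idea it shows is that when both ends already know the header, only a short rule identifier needs to travel over the radio link, and whatever is still too large is cut into numbered pieces.

```python
# Toy illustration only: NOT the IETF SCHC wire format.
# Hypothetical context shared in advance by the object and the network side.
CONTEXT = {
    1: b"HDR-v6|src=sensor-A|dst=cloud-1|port=5683|",
}
NO_COMPRESSION = 0  # rule ID meaning "the header is sent in full"


def compress(header: bytes, payload: bytes) -> bytes:
    """Replace a header known to both ends with a one-byte rule ID."""
    for rule_id, known_header in CONTEXT.items():
        if header == known_header:
            return bytes([rule_id]) + payload
    if len(header) > 255:
        raise ValueError("header too long for this toy format")
    # No rule matches: send rule 0, the header length, then the full header.
    return bytes([NO_COMPRESSION, len(header)]) + header + payload


def decompress(packet: bytes) -> tuple[bytes, bytes]:
    """Rebuild (header, payload) from a packet produced by compress()."""
    rule_id = packet[0]
    if rule_id == NO_COMPRESSION:
        header_len = packet[1]
        return packet[2:2 + header_len], packet[2 + header_len:]
    return CONTEXT[rule_id], packet[1:]


def fragment(packet: bytes, max_size: int) -> list[bytes]:
    """Split a packet into frames of at most max_size bytes.

    Each frame starts with two bytes: its index and a "last fragment" flag.
    """
    chunk = max_size - 2
    if chunk <= 0:
        raise ValueError("max_size must leave room for the 2-byte header")
    pieces = [packet[i:i + chunk] for i in range(0, len(packet), chunk)] or [b""]
    if len(pieces) > 256:
        raise ValueError("too many fragments for a one-byte index")
    last = len(pieces) - 1
    return [bytes([i, int(i == last)]) + p for i, p in enumerate(pieces)]


def reassemble(frames: list[bytes]) -> bytes:
    """Put frames back in order and join their contents."""
    ordered = sorted(frames, key=lambda f: f[0])
    if not ordered or ordered[-1][1] != 1:
        raise ValueError("last fragment is missing")
    if [f[0] for f in ordered] != list(range(len(ordered))):
        raise ValueError("a fragment is missing or duplicated")
    return b"".join(f[2:] for f in ordered)


if __name__ == "__main__":
    header, payload = CONTEXT[1], b"temp=21.5C"
    packet = compress(header, payload)
    frames = fragment(packet, max_size=12)  # assumed radio frame limit
    print(len(header) + len(payload), "bytes before,", len(packet), "after compression")
    print(len(frames), "frames sent")
    print(decompress(reassemble(frames)) == (header, payload))
```

In this sketch the application code only ever sees the full header and payload. The rule table and the frame size are the only parts that would change from one radio technology to another, which is the kind of independence from the underlying LPWAN described above.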

Acklio, an important player in IoT standardization

The young start-up’s work is so promising that it has been commissioned to coordinate efforts to standardize connectivity between LPWAN networks and the internet. Acklio is leading a working group within the IETF—an organization that is actively involved in developing internet standards—which brings together the IEEE, the 3GPP cooperation for telecommunications standards in Europe, and alliances for the standardization of LoRa and Sigfox technologies (including LoRa Alliance members Bouygues Telecom and Orange for example).

In all, more than 200 industry players are represented in the IETF, not counting academic institutions. “It’s an organization where researchers and engineers can talk about operational needs, technical constraints and scientific challenges without engaging in business lobbying,” says Marianne Laurent, Head of Marketing for the start-up. In 2018, the IETF recognized Acklio’s technology as a standard. A sign of success and of the start-up’s high-quality work, this recognition has also opened the technology up to others and, as a result, exposed the young company to competition.

However, Acklio will be able to count on its head start in developing its compression-fragmentation technique. For now, it is still the only one of its kind, and will enter the market with two products which it will present at CES 2019 in Las Vegas in January. This could be an occasion for the start-up to extend a winning streak that began with an interest-free loan from Fondation Mines-Télécom in 2016 and continued with a Best Telecommunication Innovation Award at the Mobile World Congress in March 2018. Most importantly, the American event will also provide an opportunity to find new customers. Acklio is on track to become a shining example of researchers succeeding in the entrepreneurial world and of the direct commercialization of fundamental research in telecommunications.


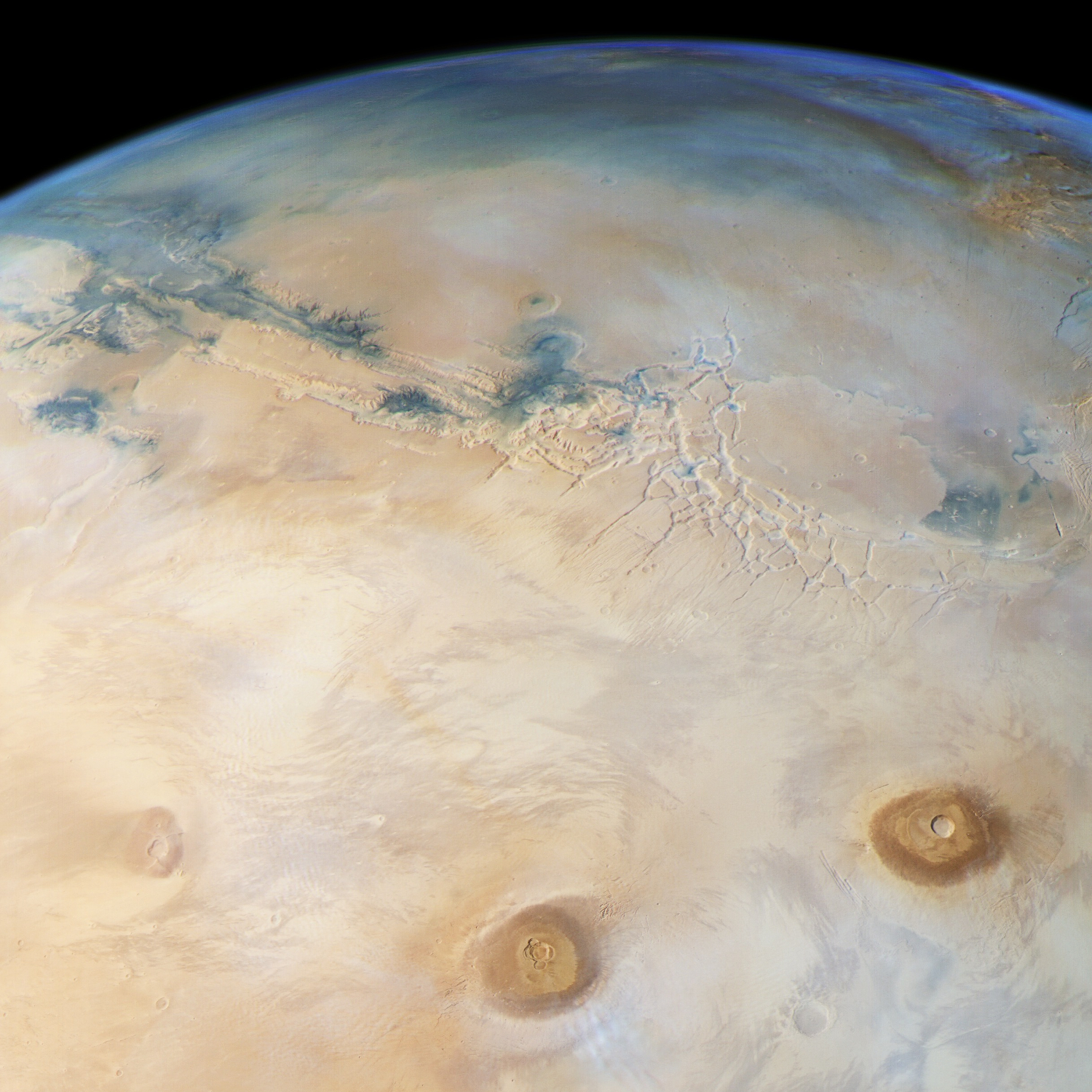

LPWAN: networks suited for connected objects

Alexander Pelov illustrates the performance of LPWAN networks through a use case carried out with the city of Rennes to control its electrical grid. “With only two LPWAN base stations, it is possible to cover 95% of the Rennes urban area.” This high level of performance does come with some drawbacks: the networks are slow and only a few messages can be sent per day by the objects connected to these networks. The two base stations support a daily traffic of one hundred 12-byte messages. But the sensors do not usually need to send much information to the server or to do so quickly. That is why these long-range networks have already become the foundation for communications between connected objects.
