Synchronizing future transportation: from trucks to drones

With the growth of delivery services, the proliferation of means of transportation, saturated cities and mutualized goods, optimizing logistics networks is becoming so complex that humans can no longer find solutions without intelligent software. Olivier Péton, a specialist in operational research for optimizing transportation at IMT Atlantique, is seeking to answer this question: how can deliveries be made to thousands of customers under good conditions? He presented his research in October at the IMT Symposium on production systems of the future.

This article is part of our series on “The future of production systems, between customization and sustainable development.”

Have you ever thought about the journey of the book, pair of jeans or alarm clock you buy with just one click? Moved, transferred, stored and redistributed, these objects make their way from one strategic place to the next, across the entire country to your city. Several trucks, vans and bikes are used in the delivery. You receive your order thanks to the careful organization of a logistics network that is becoming increasingly complex.

At IMT Atlantique, Fabien Lehuédé and Olivier Péton are carrying out operational research on how to optimize transportation solutions and logistics networks. “A logistics network must take into account the location of the factories and warehouses, decide which production site will serve a given customer, etc. Our job is to establish a network and develop it over time using recent optimization methods,” explains Olivier Péton.

This job is in high demand. Changes in legislation that limit the access of certain vehicles to city centers during given timeframes have required companies to rethink their distribution methods. At the same time, alongside these new urban requirements, the development of new technologies and new distribution methods offers opportunities for re-optimizing transportation.

What are the challenges facing the industry of the future?

“Most of the work from the past 10 years pertains to logistic systems and the synchronization of vehicles,” remarks Olivier Péton. “In other words, several vehicles must manage to arrive at practically the same time at the same place.” This is the case, for example, in projects involving the pooling of transportation means, in which goods are grouped together at a logistics platform before being sent to the final customer. “This is also the case for multimodal transportation, in which high-capacity vehicles transfer their contents to several smaller-capacity vehicles for the last mile,” the researcher explains. These concepts of mutualization and multimodal transport are at the heart of the industry of the future.

In the path from the supplier to the customer, the network sometimes transitions from the national level to that of a city. On the one hand, national transport relies on a network of logistic hubs that handle large volumes of goods. On the other hand, urban networks, particularly for e-commerce, focus on last-mile delivery. “The two networks involve different constraints. For a national network, the delivery forecast can be limited to one week. The trucks often only visit three or four places per day. In the city, we can visit many more customers in one day, and replenish supplies at a warehouse. We must take into account delays, congestion, and the possibility of adjusting the itinerary along the way,” Olivier Péton explains.

Good tools make good networks

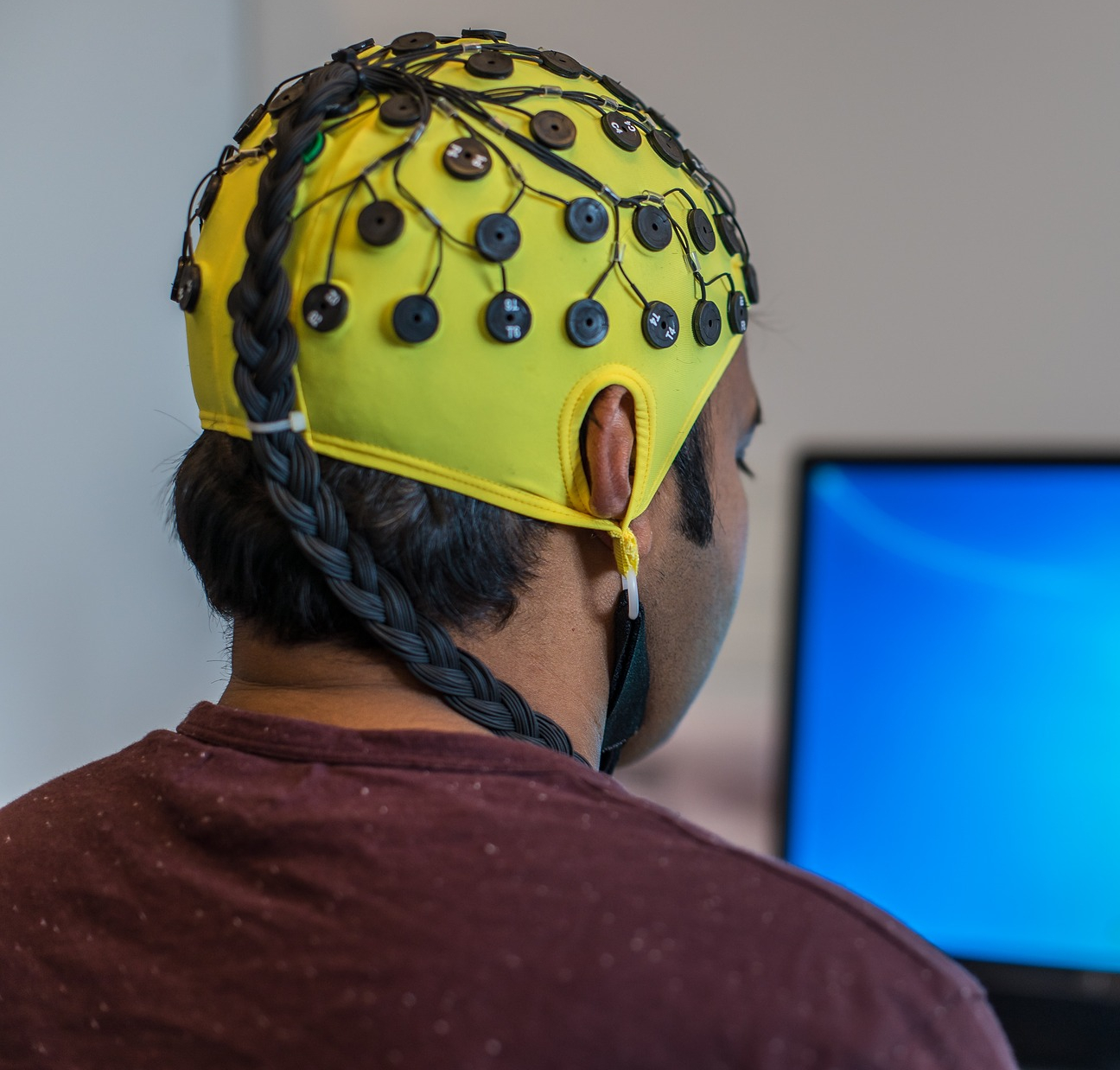

A network’s complexity depends on the number of combinations that can be made with the elements it contains. The higher the number of sites, customer orders and stops, the more difficult it becomes to optimize the network. There could be billions of solutions, and it is impossible to list them all to find the best one. This is where the researchers’ algorithms come into play. They rely on heuristic methods, which aim to come as close as possible to an optimal solution within a reasonable calculation time of a few seconds or a few minutes. To accomplish this, it is vital to have reliable data: transport costs, delivery time schedules, etc.
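To give a rough sense of this explosion, here is a minimal Python sketch. It is an illustration only, not the researchers’ software: it assumes a single vehicle, straight-line distances and a simple “nearest neighbour” rule, and the function names are hypothetical.

```python
import math

def count_visit_orders(n_stops: int) -> int:
    """Number of distinct orders in which one vehicle can visit n_stops."""
    return math.factorial(n_stops)

def nearest_neighbour_route(depot, stops):
    """Greedy heuristic (an assumption for this sketch):
    always drive to the closest stop that has not been visited yet."""
    route, current, remaining = [], depot, list(stops)
    while remaining:
        closest = min(remaining, key=lambda s: math.dist(current, s))
        remaining.remove(closest)
        route.append(closest)
        current = closest
    return route

def route_length(depot, route):
    """Total distance of a round trip: depot -> stops in order -> depot."""
    points = [depot, *route, depot]
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

if __name__ == "__main__":
    for n in (5, 10, 13):
        print(n, "stops:", count_visit_orders(n), "possible orders")
    depot = (0, 0)
    stops = [(5, 0), (1, 0), (3, 0)]
    route = nearest_neighbour_route(depot, stops)
    print(route, route_length(depot, route))
```

With only 13 stops and one vehicle, there are already more than six billion possible visiting orders. The greedy rule does not guarantee the best route, but it produces a usable one almost instantly, which is the trade-off heuristics make.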

There are also specific constraints related to each company. “In some cases, transport companies require truck itineraries in straight lines, with as few detours as possible to make deliveries to intermediate customers,” explains Olivier Péton. Other constraints include the maximum number of customers on one route, fair working times for drivers, etc. These types of constraints are modeled as equations. “To resolve these optimization problems, we start with an initial transport plan and we try to improve it iteratively. Each time we change the transport plan, we make sure it still meets all the constraints.” The ultimate result is based on the quality of service: ensuring that the customer is served within the time slot and in only one delivery.
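The iterative approach described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the team’s actual method: the only constraint checked is a maximum number of customers per route, the only change tried is moving one customer to another position or route, and all names are hypothetical.

```python
import math

def route_cost(depot, route):
    """Distance of one round trip: depot -> customers in order -> depot."""
    points = [depot, *route, depot]
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def plan_cost(depot, plan):
    """Total distance of a transport plan (a list of routes)."""
    return sum(route_cost(depot, route) for route in plan)

def is_feasible(plan, max_customers):
    """Single illustrative constraint: no route exceeds max_customers."""
    return all(len(route) <= max_customers for route in plan)

def first_improving_move(depot, plan, max_customers):
    """Try moving each customer elsewhere; return the first feasible,
    strictly shorter plan, or None if no such move exists."""
    current = plan_cost(depot, plan)
    for i in range(len(plan)):
        for pos in range(len(plan[i])):
            for j in range(len(plan)):
                for ins in range(len(plan[j]) + 1):
                    candidate = [list(route) for route in plan]
                    customer = candidate[i].pop(pos)
                    candidate[j].insert(ins, customer)
                    if (is_feasible(candidate, max_customers)
                            and plan_cost(depot, candidate) < current - 1e-9):
                        return candidate
    return None

def improve(depot, initial_plan, max_customers):
    """Start from an initial plan and improve it until no move helps."""
    plan = [list(route) for route in initial_plan]
    while (better := first_improving_move(depot, plan, max_customers)) is not None:
        plan = better
    return plan

if __name__ == "__main__":
    depot = (0, 0)
    initial = [[(1, 0), (0, 5)], [(0, 6), (2, 0)]]
    final = improve(depot, initial, max_customers=3)
    print(plan_cost(depot, initial), "->", plan_cost(depot, final))
    print(final)
```

Every candidate plan is checked against the constraint before it is accepted, so the plan stays valid at each step. Real tools handle many more constraints (time slots, driver working times) and use richer sets of moves.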

Growing demand

Today, this research is primarily used prior to delivery in national networks. It helps design transport plans, determine how many trucks must be chartered and create the drivers’ schedules. Olivier Péton adds, “it also helps develop simulations that show the savings a company can hope to make by changing its logistics practices. To accomplish this, we work with 4S Network, a company that supports its customers throughout their entire transport mutualization projects.” This work can also be of interest to decision-makers managing a fleet whose transport needs vary greatly from day to day. If the requests are very different from one day to the next, the software solution can develop a transport plan in a few minutes.

What is the major challenge facing researchers? The tool’s robustness. In other words, its ability to react to unforeseeable incidents: congestion, technical problems… It must allow for small variations without having to re-optimize the entire solution. This is especially the case as new issues arise. Which exchange zone should be used in a city to transfer goods: a parking lot or a vacant area? For what tonnage is it best to invest in electric trucks? There are many different points to consider before real-time optimization can be achieved.

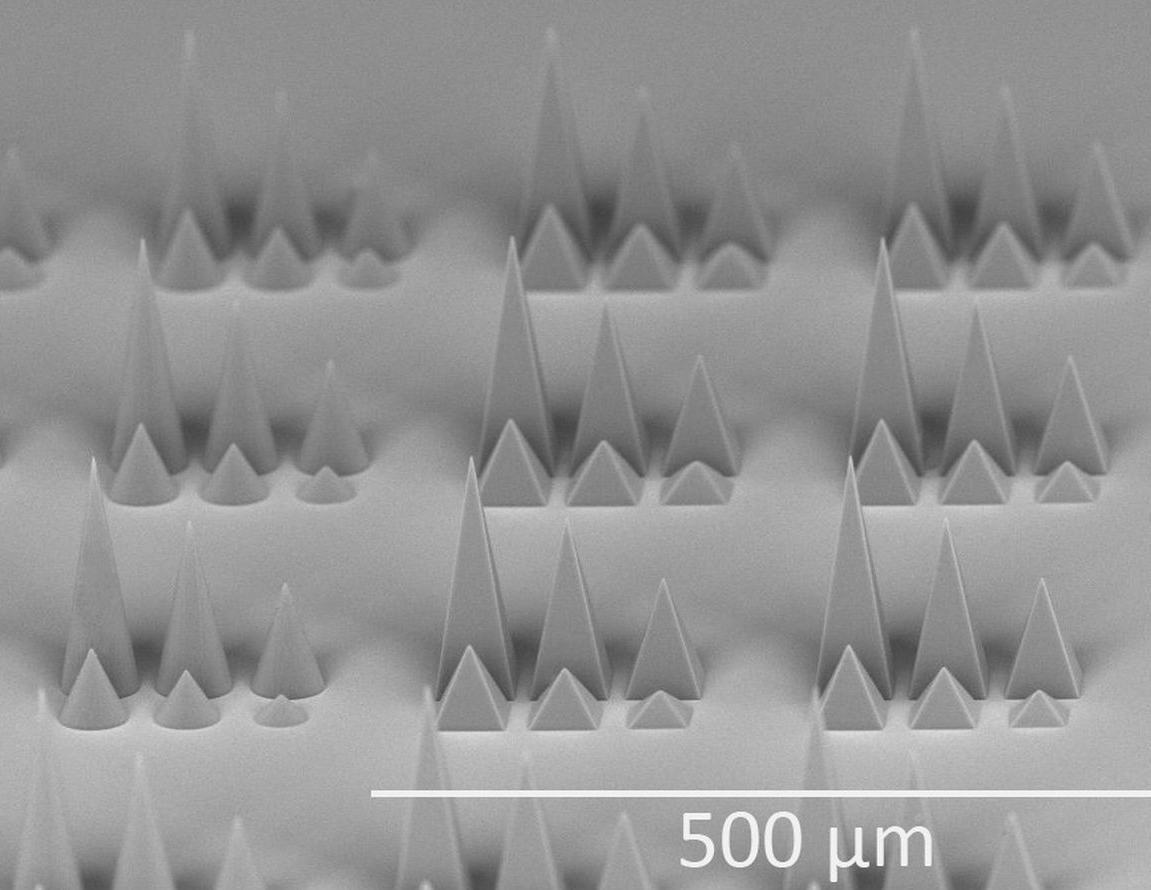

Another challenge involves developing technologically viable solutions with a sustainable business model that are acceptable from a societal and environmental perspective. As part of the Franco-German ANR project OPUSS, Fabien Lehuédé and Olivier Péton are working to optimize complex distribution systems. These systems combine urban trucks and transport with fleets of smaller, autonomous vehicles for last-mile deliveries. That is, until drones come on the scene…

Article written by Anaïs Gall, for I’MTech.