Serious games: when games invade the classroom

Over the last few years, serious games, a new teaching method linked to the invasion of digital technology into our daily lives, have begun shaking up traditional learning methods. Unlike ordinary video games, their primary purpose is not entertainment. This emerging sector does not seek to replace existing educational tools, but rather to supplement them, or at least to earn its place in the educational arsenal. Imed Boughzala, a researcher in management at Institut Mines-Télécom Business School, offers a closer look at this phenomenon.

Video games are all the rage. According to SELL, a French organization that promotes the interests of video game publishers, this market was estimated at nearly €5 billion in 2018 and is growing steadily, with more and more people playing and buying video games. In fact, the video game industry is now doing better than the book market. This creates a clear opportunity for teachers to take advantage of this gaming culture and break away from traditional learning methods.

Discover the history of Ancient Egypt with Assassin’s Creed, use the popularity of a game like Fortnite to raise awareness about climate change, or develop strategy skills with Civilization or Warcraft. While some teachers in France are beginning to adopt these games, research in this area remains limited, which makes it difficult to assess the effectiveness of these new spaces for informal learning. Imed Boughzala first embarked on this adventure nearly 10 years ago:

“In 2008, while traveling in the United States as a guest professor with the Management Department at the University of Arkansas, I had the opportunity to create a distance learning course on information systems on a platform called Second Life, which was ahead of its time and still exists. A few months later, the university campus had to close due to an avian flu outbreak. We therefore began to focus on implementing a completely virtualized training program. At the time, I designed a serious game for students stuck at home.”

Back in France after this experience, he continued his research on collaborative games. He led his research team, SMART² (Smart Business Information Systems), on a mission to pursue the digital transformation of organizations. “We began imagining how educational tools could be used to motivate students more, and we looked for a way to capture their attention. Our starting point was the observation that a wide gap currently exists between the digital culture of young people and the culture of the university,” Imed Boughzala explains. In addition, when students play a role, many motivational factors come into play: moving from one level to the next, receiving awards, and making a mistake and starting over immediately, in a constant cycle of testing and learning.

Playing for the sake of learning

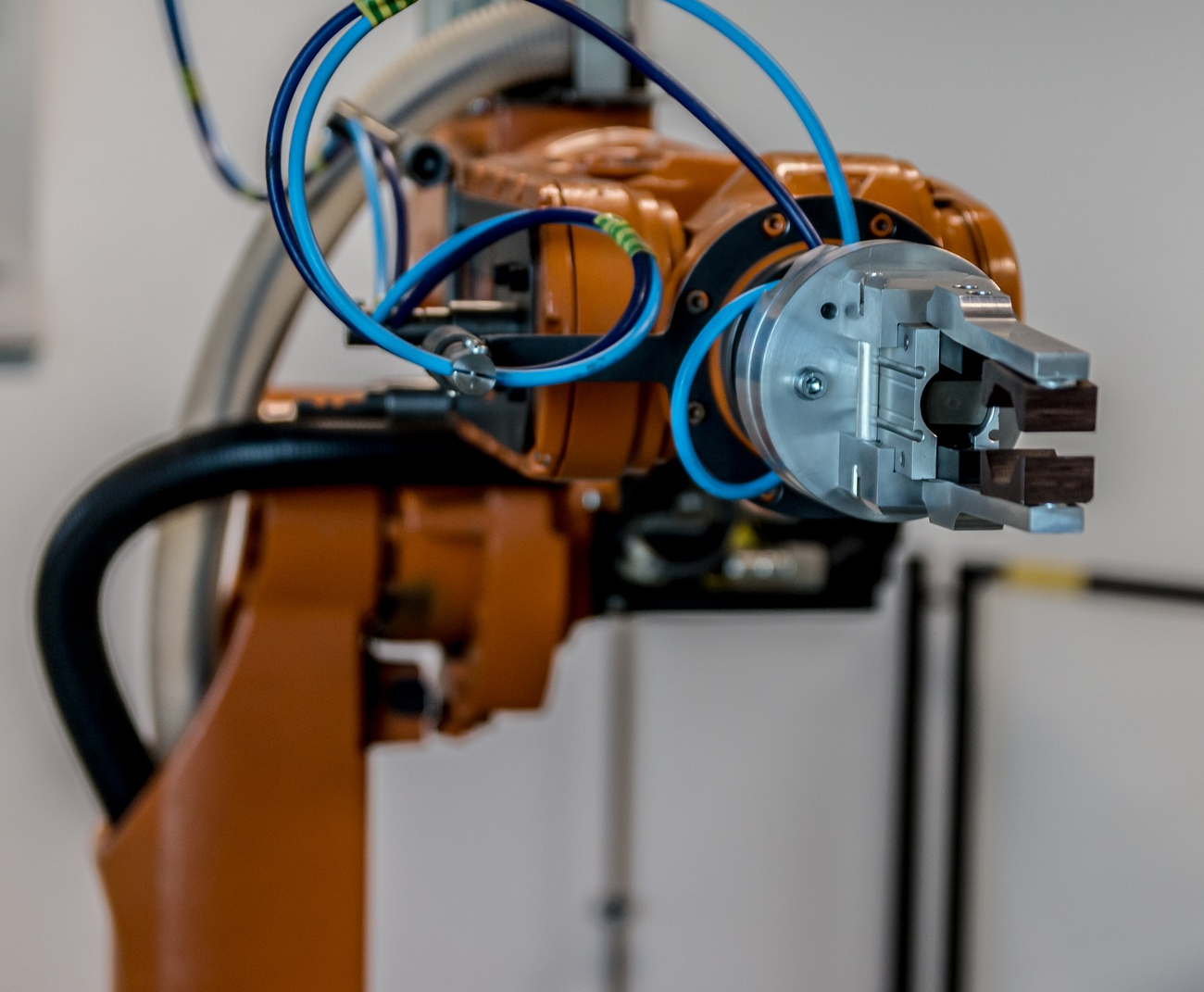

But what do we really mean by a serious game? The term belongs to a family of new digital practices built around several key concepts. A serious game is a video game created for educational or practical purposes. Serious gaming is a broader concept that refers to the way certain games can be used as serious tools. Finally, gamification refers to adding a fun aspect to a serious subject.

For Imed Boughzala, it all started with a very practical situation. “At Institut Mines-Télécom Business School, 200 management students were enrolled in our program. Capturing their attention was very complicated when it came to very technical topics. The atmosphere in class and the exams were not always great. So why not have them play a game? By sheer coincidence, one day we came across a game by IBM. It was INNOV8, which aims to help future entrepreneurs develop certain computer and business skills,” the researcher explains.

Virtual worlds and serious games therefore helped the students tackle decision-making processes that are very real. “It was an immediate success, which led us to create a new, more customized scenario, and teach them how to create data patterns. Instead of doing exercises, this allowed them to play as much as necessary to understand how the tool behind the game is implemented. We therefore tried to take into account the technical aspects: how the game is played, how it is used and the specific context, that of Millennials,” the researcher explains.

Innovating through serious games

Does digital technology truly transform our relationship with knowledge? Is a good serious game worth more than a long lecture? For Imed Boughzala, there’s no doubt about it. A fun game can simulate a real professional environment. Students become active participants in their learning as they are confronted with a problem, a dilemma they must resolve. “This is important for a generation that quickly jumps from one thing to the next. We can try to fight against this reality, but it is quite clear: we can no longer teach the same way. We must add variety to our teaching outlines and add games to give everyone a breath of fresh air. That’s the true benefit.”

While it has now become necessary to use entertainment to reach training objectives, Imed Boughzala sought to link the development of these teaching methods to his research. He did this by focusing on the effectiveness and assessment of serious games in training programs. This is a complex subject because “we must distinguish between the performance perceived due to the format, content and presentation as a fun game, and the measurable assessment, the real performance. In other words, the knowledge related to professional activities that has actually been gained.”

The researcher is already convinced by the results of gamifying certain educational processes, especially for learning complex procedures, in many different areas: management techniques, finance, city administration, sustainable development, healthcare and medicine. An immersive and interactive serious game can also draw on the players’ collective intelligence. One example is Foldit, an experimental video game on protein folding created in 2008. Whereas scientists had spent 10 years searching for the three-dimensional structure of a protein from a virus that causes AIDS in monkeys, the “players” found a solution in three weeks, a result that could help in the design of new antiretroviral drugs.

These practical cases can now be added to scientific databases made available to the scientific community. The institutional community is also beginning to recognize these realities, with the French Foundation for Management Education (FNEGE) creating a certification board to assess these new digital tools. This board will assess the educational added value of these tools, in other words, their ability to meet the defined learning goals. Solving puzzles, creating, experiencing, participating: serious games offer new ways of learning and highlight the importance of variety. Since video games can motivate users to become intensely involved for unprecedented periods of time, their educational counterparts are well suited to training purposes.

Article written for I’MTech by Anne-Sophie Boutaud

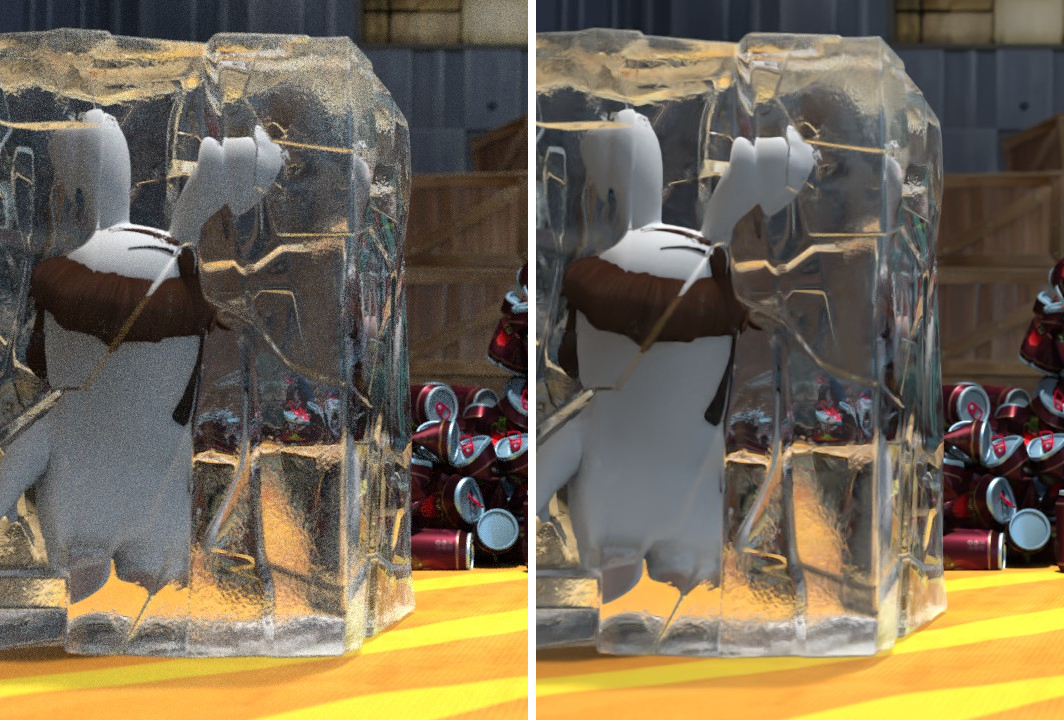

Illustration of BCD denoising a scene, before and after implementing the algorithm