When healthcare professionals form communities through digital technology

Digital technology is shaking up the healthcare world. Among its other uses, it can help break isolation and facilitate online interactions in both the private and professional spheres. Can these virtual interactions help form a collective structure and community for individuals whose occupations involve isolation and distance from their peers? Nicolas Jullien, a researcher in economics at IMT Atlantique, looks at two professional groups, non-hospital doctors and home care workers, to outline the trends of these new digital connections between practices.

On the Twitter social network, doctors interact using a bot with the hashtag #DocTocToc. These interactions include all kinds of professional questions, requests for details about a diagnosis, advice or medical trivia. In existence since 2012, this channel for mutual assistance appears to be effective. With rapid interactions and reactive responses, the messages pour in minutes after a question is asked. None of this comes as a surprise for researcher Nicolas Jullien: “At a time when we hear that digital technology is causing structures to fall apart, to what extent does it also contribute to the emergence and organization of new communities?”

Doctors–and healthcare professionals in general–are increasingly connected and have a greater voice. On Twitter, some star doctors have thousands of followers: 28,900 for Baptiste Beaulieu, a family doctor, novelist and formerly a radio commentator on France Inter; 25,200 for medical intern and cartoonist @ViedeCarabin; and nearly 9,900 for Jean-Jacques Fraslin, family doctor and author of an op-ed piece denouncing alternative medicine. Until now, few studies had been conducted on these new online communities of practice. Under what conditions do they emerge? What are the constraints involved in their development? What challenges do they face?

New forms of collective action

For several years, Nicolas Jullien and his colleagues have been studying the structure and development of online communities. These forms of collective action raise the question of how individuals decide to act, a problem known in the popular imagination as the prisoner’s dilemma. In the classic dilemma, two suspects are arrested by the police and isolated for interrogation. There are three possible outcomes. If one denounces the other while the other stays silent, the denouncer is released and the accomplice receives the maximum sentence. If both plead guilty or denounce each other, they receive a more lenient sentence. If both deny any wrongdoing, they receive the minimum sentence. Although it would be to the individuals’ advantage to act collectively, and although they are aware of this, they do not always do so. In the absence of dialogue, individuals seek to maximize their individual interests. Is it in fact possible for digital platforms to facilitate the coordination of these individual interests? Can they be used to create collective projects for sharing, knowledge and mutual assistance, particularly in the professional sphere, as with projects like Wikipedia and open source software? These professional social networks represent a new field of exploration for researchers.
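
The logic of the prisoner’s dilemma can be made concrete with a small payoff table. The sketch below is only an illustration: the sentence lengths (0, 1, 5 and 10 years) and the names `SENTENCES`, `best_response` and `total_years` are assumptions chosen for the example, not figures or terms from the researchers’ work. It shows that each suspect, reasoning alone, does best by denouncing the other, even though both would serve less time if they both denied.

```python
# Illustrative sentences in years (assumed values, not taken from the article).
RELEASED, MINIMUM, LENIENT, MAXIMUM = 0, 1, 5, 10

CHOICES = ("deny", "denounce")

# SENTENCES[(choice_a, choice_b)] -> (years for suspect A, years for suspect B)
SENTENCES = {
    ("deny", "deny"): (MINIMUM, MINIMUM),          # both stay silent
    ("deny", "denounce"): (MAXIMUM, RELEASED),     # A is denounced by B
    ("denounce", "deny"): (RELEASED, MAXIMUM),     # A denounces B
    ("denounce", "denounce"): (LENIENT, LENIENT),  # they denounce each other
}


def best_response(other_choice):
    """Return the choice that minimizes suspect A's own sentence,
    given what suspect B does."""
    return min(CHOICES, key=lambda mine: SENTENCES[(mine, other_choice)][0])


def total_years(choice_a, choice_b):
    """Return the combined sentence served by both suspects."""
    return sum(SENTENCES[(choice_a, choice_b)])


if __name__ == "__main__":
    for other in CHOICES:
        print(f"If the other suspect chooses {other!r}, "
              f"the best individual reply is {best_response(other)!r}")
    print("Combined years if both deny:", total_years("deny", "deny"))
    print("Combined years if both denounce:", total_years("denounce", "denounce"))
```

With these assumed values, denouncing is the best individual reply in both cases, yet mutual denial gives the lowest combined sentence. This is the gap between individual and collective interest that dialogue, or a platform that coordinates individuals, might help close.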

Read on I’MTech: Digital commons: individual interests to serve a community

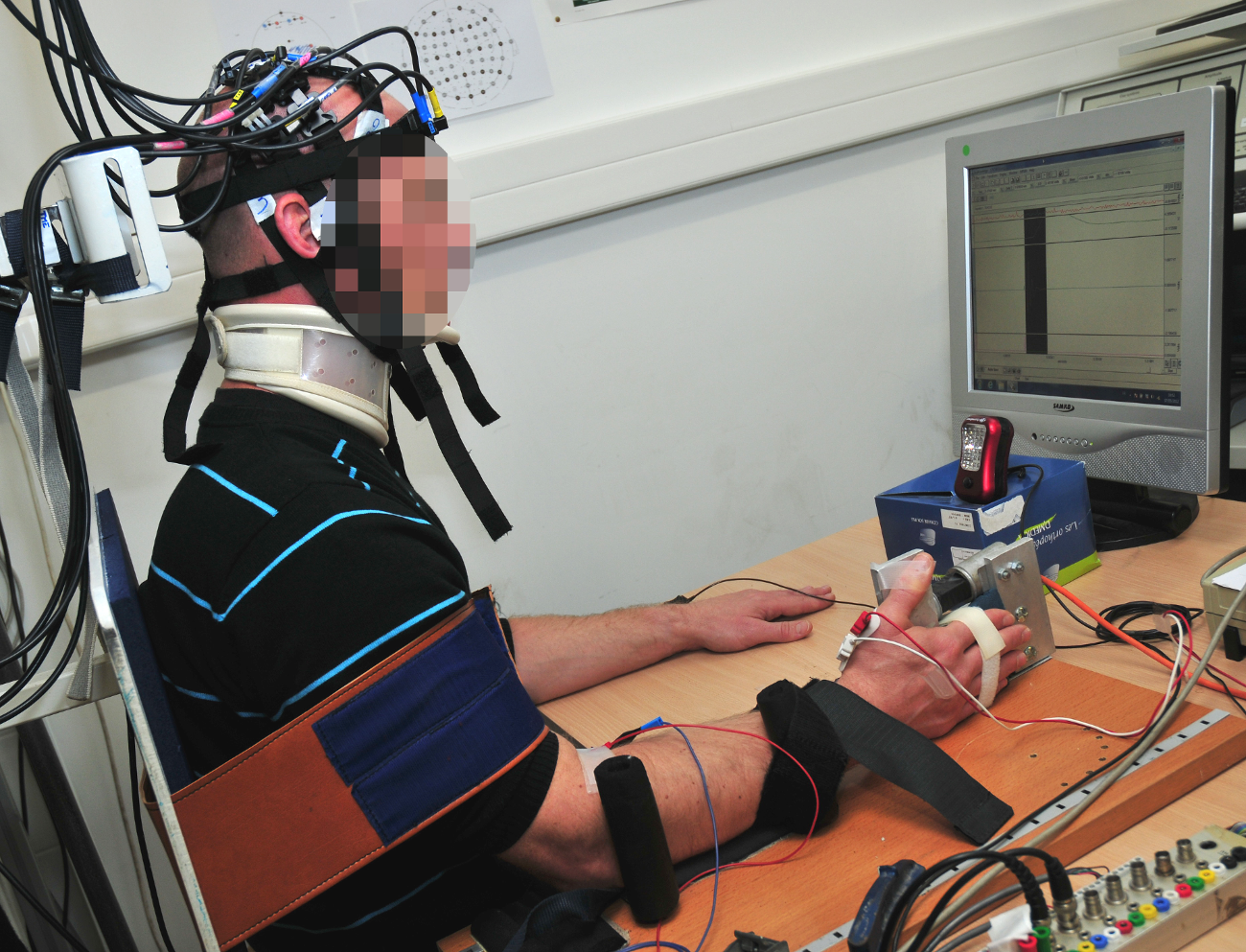

“We decided to focus on two professional groups, doctors and home care workers, which are both involved in health and service relationships, but are diametrically opposed in terms of qualifications. The COAGUL project, funded by the Brittany Region, analyzes the relationships established in each of these professional groups through online interactions. We are conducting these studies with Christèle Dondeyne’s team at Université de Bretagne Occidentale”, Nicolas Jullien explains. These interactions can be linked to technical problems and uncertainties (diagnoses, ways of performing a procedure), ethical and legal issues (especially related to terms and conditions of employment contracts and relations with the health insurance system), employment or working conditions (amount of time spent providing care at a home, discussions on home care worker tasks that go beyond basic health procedures). Isolation therefore favors the emergence of communities of practice. “Digital technology offers access to tools, platforms and means of coordinating work and the activities of professionals. These communities develop autonomously and spontaneously,” the researchers add.

So how can a profession be carried out online? The researchers are currently conducting work that is exploratory and necessarily qualitative. For the first phase of their study, they identified 20 stakeholders active on the internet and collected their interactions in order to determine the ties of cooperation and solidarity that are being created on social networks, forums and dedicated websites. Where do people go? Why do they stay there? “We have observed that usage practices vary according to profession. While home care workers interact more on Facebook, with several groups of thousands of people and a dozen messages per day, family doctors prefer to interact on Twitter and form more personal networks,” Nicolas Jullien explains. “This qualitative method allows us to understand what lies behind the views people share, because in these shifting groups, tensions arise related to each individual’s position and experiences,” he adds. For the second phase of the study, the researchers will conduct a series of interviews with local professional groups. The long-term objective is to compare the motivations for action and the means of interaction in the two professions.

On a wider scale, behind these digital issues, researchers are seeking to analyze the capacity of these groups to collectively produce knowledge. “In the past, we worked on the Wikipedia model, the free online encyclopedia that brings together nearly 400,000 contributors per month. This collective action is the most extensive that has ever been accomplished. It has seen massive, unexpected and lasting success in producing knowledge online,” Nicolas Jullien explains.

Although contributors participate on a volunteer basis, the rules for contributions are becoming increasingly strict, for example through moderation or restrictions based on topics that have already been created. “The contribution is what is regulated in these communities, not the knowledge,” the researcher adds. “What takes time is verifying contributions and, for communities of practice, responding to them. An increase in participants and messages brings with it a greater need to limit noise, i.e. irrelevant comments.” With intellectual challenges, access to peers, and the ability to have contributions viewed by others, the digital routine of daily professional acts has the potential to shake up communities of practice and support the development of new forms of professional solidarity.

Article written (in French) by Anne-Sophie Boutaud, for I’MTech.

Created in 2007 to help accelerate and share scientific knowledge on key societal issues, the Axa Research Fund has supported nearly 600 projects around the world conducted by researchers from 54 countries. To learn more, visit the site of the
