Measuring and quantifying have informed Véronique Bellon-Maurel’s entire scientific career. A pioneer in near infrared spectroscopy, the researcher has worked on topics ranging from fruit analysis to digital agriculture. Over the course of her fundamental research, Véronique Bellon-Maurel has contributed to the optimization of many industrial processes. She is now the Director of #DigitAg, a multi-partner Convergence Lab, and is the winner of the 2019 IMT-Académie des Sciences Grand Prix. In this wide-ranging interview, she retraces the major steps of her career and discusses her seminal work.

You began your research career by working with fruit. What did this research involve?

Véronique Bellon-Maurel: My thesis dealt with the issue of measuring the taste of fruit in sorting facilities. I had to meet industrial requirements, particularly in terms of speed: three pieces of fruit per second! The best approach was to use near infrared spectroscopy to measure the sugar level, which is indicative of taste. But when I was beginning my thesis in the late 1980s, it took spectrometers one to two minutes to scan a piece of fruit. I suggested working with very near infrared, meaning a different type of radiation than the infrared that had been used up to then, which made it possible to use new types of detectors that were very fast and inexpensive.

So that’s when you started working on near infrared spectroscopy (NIRS), which went on to become your specialization. Could you tell us what’s behind this technique with such a complex name?

VBM: Near infrared spectroscopy (NIRS) is a method for analyzing materials. It provides a simple way to obtain information about the chemical and physical characteristics of an object by illuminating it with infrared light, which will pass through the object and become charged with information. For example, when you place your finger on your phone’s flashlight, you’ll see a red light shining through it. This light is red because the hemoglobin has absorbed all the other colors of the original light. So this gives you information about the material the light has passed through. NIRS is the same thing, except that we use particular radiation with wavelengths that are located just beyond the visible spectrum.

Out of all the methods for analyzing materials, what makes NIRS unique?

VBM: Near infrared waves pass through materials easily, much more easily than the “traditional” infrared waves known as “mid-infrared.” They are produced by simple sources such as sunlight or halogen lamps. The technique is therefore readily available and is not harmful: it is used on babies’ skulls to assess the oxygen saturation of their brains! But when I was starting my career, there were major drawbacks to NIRS. The signal we obtain is extremely cluttered because it contains information about both the physical and chemical components of the object.

And what is hiding behind this “cluttered signal”?

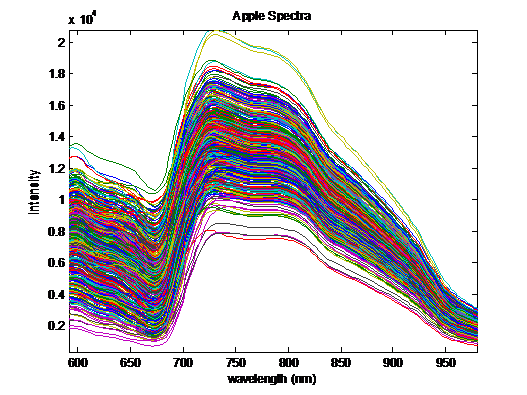

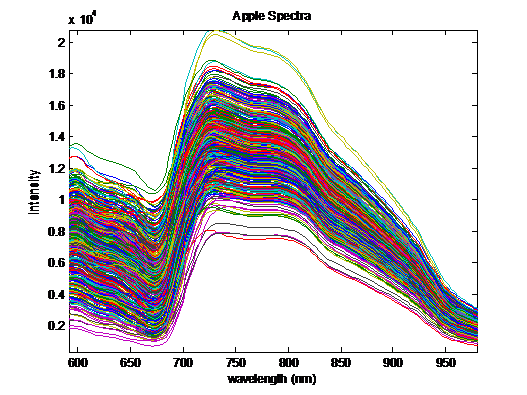

VBM: In concrete terms, you obtain hill-shaped curves and the shape of these curves depends on both the object’s chemical composition and its physical characteristics. You’ll get a huge hill that is characteristic of water. And the signature peak of sugar, which allows you to calculate a fruit’s sugar level, is hidden behind it. That’s the chemical component of the spectrum obtained. But the size of the hills also depends on the physical characteristics of your material, such as the size of the particles or cells that make it up, physical interfaces — cell walls, corpuscles — the presence of air etc. Extracting solely the information we’re interested in is a real challenge!

Near infrared spectrums of apples.
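To make the “cluttered signal” concrete, here is a minimal sketch in Python of a synthetic spectrum: a large water hill, a small sugar band hidden on its flank, and a physical effect modeled as a gain and an offset applied to the whole curve. The band positions, widths and heights are illustrative assumptions, not measured values, and the correction shown (standard normal variate, a common chemometric pretreatment) is a swapped-in illustration rather than the method described in the interview.

```python
import math
from statistics import mean, pstdev


def gaussian(x, center, width, height):
    """Hill-shaped band centered on `center` (all values illustrative)."""
    return height * math.exp(-((x - center) ** 2) / (2 * width ** 2))


def chemical_spectrum(wavelengths_nm, sugar_level):
    """Chemical part only: a big water hill plus a small sugar band."""
    return [
        gaussian(w, 970.0, 40.0, 1.0)                    # assumed water band
        + gaussian(w, 910.0, 15.0, 0.05 * sugar_level)   # assumed sugar band
        for w in wavelengths_nm
    ]


def add_physical_effect(spectrum, gain, offset):
    """Crude stand-in for scattering: rescale and shift the whole curve."""
    return [gain * a + offset for a in spectrum]


def snv(spectrum):
    """Standard normal variate: center and scale each spectrum on its own."""
    m = mean(spectrum)
    s = pstdev(spectrum)
    return [(a - m) / s for a in spectrum]


wavelengths = [800 + 5 * i for i in range(61)]  # 800 to 1100 nm

# Same chemistry, different physical state (hypothetical fruits A and B).
base = chemical_spectrum(wavelengths, sugar_level=1.0)
fruit_a = add_physical_effect(base, gain=1.0, offset=0.0)
fruit_b = add_physical_effect(base, gain=1.6, offset=0.3)

# Same physical state as A, but twice the sugar (hypothetical fruit C).
fruit_c = chemical_spectrum(wavelengths, sugar_level=2.0)

raw_gap = max(abs(a - b) for a, b in zip(fruit_a, fruit_b))
snv_gap = max(abs(a - b) for a, b in zip(snv(fruit_a), snv(fruit_b)))
sugar_gap = max(abs(a - c) for a, c in zip(snv(fruit_a), snv(fruit_c)))

print(f"raw gap between A and B:      {raw_gap:.3f}")
print(f"gap after SNV between A and B: {snv_gap:.2e}")
print(f"gap after SNV between A and C: {sugar_gap:.3f}")
```

In this toy model the raw curves of fruits A and B differ widely although their chemistry is identical, and the difference vanishes after the correction, while a change in sugar level still shows. Real scattering is wavelength-dependent and far less tidy than a single gain and offset, which is why separating the two components was a research problem in its own right.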

One of your earliest significant findings for NIRS was precisely that – separating the physical component from the chemical component on a spectrum. How did you do that?

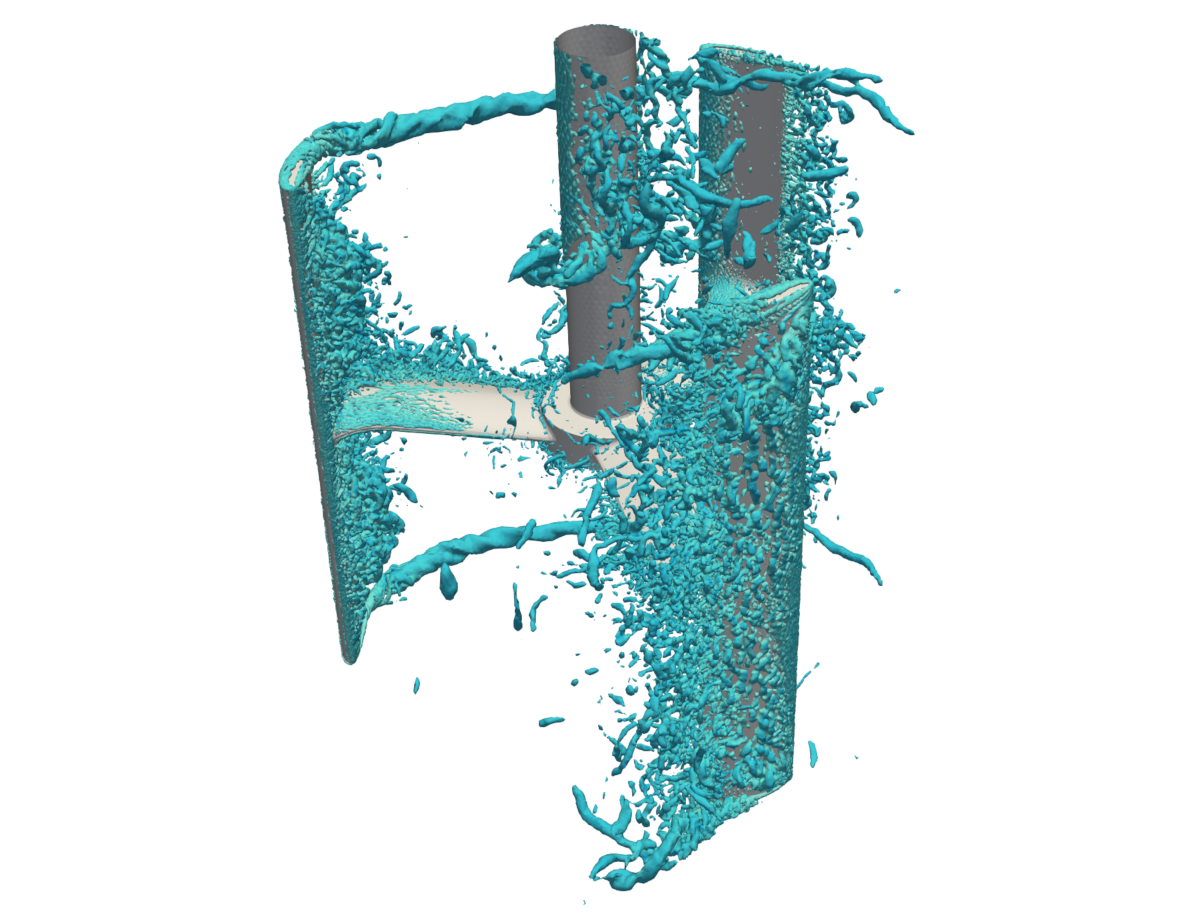

VBM: The main issue at the beginning was to get away from the physical component, which can be quite a nuisance. For example, light passes through water, but not the foam in the water, which we see as white, even though they are the same molecules! Depending on whether or not the light passes through foam, the observation, and therefore the spectrum, will change completely. Fabien Chauchard was the first PhD student with whom I worked on this problem. To better understand this optical phenomenon, which is called scattering, he went to the Lund Laser Center in Sweden. They have highly specialized cameras: time-of-flight cameras, which operate at a very high speed and are able to capture photos “in flight.” We send photons onto a fruit in an extremely short period of time and we recover the photons as they come out, since not all of them come out at the same time. In our experiments, with a transmitter and a receiver placed on a fruit 6 millimeters apart, certain photons had travelled over 20 centimeters by the time they came out! They had been reflected, refracted, diffracted etc. inside the fruit. They hadn’t travelled in a straight line at all. This gave rise to an innovation, spatially resolved spectroscopy (SRS), developed by Indatech, the company that Fabien Chauchard started after completing his PhD.
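The orders of magnitude behind that experiment can be worked out in a few lines. The sketch below takes the two distances quoted above (6 millimeters between transmitter and receiver, 20 centimeters travelled) and converts them into travel times. The refractive index of 1.35 is an assumption chosen as roughly that of water-rich tissue; it is not a figure from the interview.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
REFRACTIVE_INDEX = 1.35  # assumption: roughly that of water-rich fruit tissue


def time_of_flight_s(path_length_m, refractive_index=REFRACTIVE_INDEX):
    """Time a photon needs to cover `path_length_m` inside the medium."""
    return refractive_index * path_length_m / SPEED_OF_LIGHT_M_PER_S


source_detector_gap_m = 0.006  # 6 mm between transmitter and receiver
longest_path_m = 0.20          # "over 20 centimeters" for some photons

# How many times longer the scattered path is than the straight line.
path_ratio = longest_path_m / source_detector_gap_m

direct_time_s = time_of_flight_s(source_detector_gap_m)
scattered_time_s = time_of_flight_s(longest_path_m)
extra_delay_s = scattered_time_s - direct_time_s

print(f"path ratio:       {path_ratio:.1f}x")
print(f"direct flight:    {direct_time_s * 1e12:.0f} ps")
print(f"scattered flight: {scattered_time_s * 1e12:.0f} ps")
print(f"extra delay:      {extra_delay_s * 1e12:.0f} ps")
```

Under these assumptions the delays are well under a nanosecond, which is why resolving the photons by arrival time calls for very fast, specialized detectors.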

We looked for other optical arrangements for separating the “chemical” component from the “physical” component. Another PhD student, Alexia Gobrecht, with whom I worked on soil, came up with the idea of using polarized near infrared light. If the photons penetrate the soil, they lose their polarization. Those that have only travelled on the surface conserve it. By differentiating between the two, we recover spectrums that only depend on the chemical component. This research on separating chemical and physical components was continued in the laboratory, even after I stopped working on it. Today, my colleagues are very good at identifying aspects that have to do with the physical component of the spectrum and those that have to do with the chemical component. And it turns out that this physical component is useful! And to think that twenty years ago, our main focus was to get rid of it.

After this research, you transitioned from studying fruit to studying waste. Why did you change your area of application?

VBM: I’d been working with the company Pellenc SA on sorting fruit since around 1995, and then on detectors for grape ripeness. Over time, Pellenc transitioned to waste characterization for the purpose of sorting, based on the infrared knowledge developed through sorting fruit. They therefore called on us, with a new speed requirement, and this one was much tougher: a belt conveyor moves at a speed of several meters per second. In reality, the areas of application for my research were already varied. In 1994, while I was still working on fruit with Pellenc, I was also carrying out projects on biodegradable plastics. NIRS made it possible to provide quality measurements for a wide range of industrial processes. I was Ms. “Infrared sensors”!


Your work on plastics was among the first in the scientific community concerning biodegradability. What were your contributions in this area?

VBM: 1990 was the very beginning of biodegradable plastics. Our question was whether we could measure a plastic’s biodegradability in order to say for sure, “this plastic is truly biodegradable.” And to do so as quickly as possible, so why not use NIRS? But first, we had to define the notion of biodegradability, with a laboratory test. For 40 days, the plastics were put in reactors in contact with microorganisms, and we measured their degradation. We were also trying to determine whether this test was representative of biodegradability in real conditions, in the soil. We buried hundreds of samples in different plots of land in various regions and we dug them up every six months to compare real biodegradation and biodegradation in the laboratory. We wanted to find out if the NIRS measurement was able to achieve the same result, which was estimating the degradation kinetics of a biodegradable plastic, and it worked. Ultimately, this benchmark research on the biodegradability of plastics contributed to the industrial production and deployment of the biodegradable plastics that are now found in supermarkets.

For that research, was your focus still on NIRS?

VBM: The crux of my research at that time was the rapid, non-destructive characterization (physical or chemical) of products. NIRS was a good tool for this. We used it again after that on dehydrated household waste in order to assess its anaerobic digestion potential. With the laboratory of environmental biotechnology in Narbonne, and IMT Mines Alès, we developed a “flash” method to quickly determine the quantity of bio-methane that waste can release, using NIRS. This research was subsequently transferred to the Ondalys company, created by Sylvie Roussel, one of my former PhD students. My colleague Jean-Michel Roger is still working with them to do the same thing with raw waste, which is more difficult.

So you gradually moved from the agri-food industry to environmental issues?

VBM: I did, but it wasn’t just a matter of switching topics, it also involved a higher degree of complexity. In fruit, composition is restricted by genetics – each component can vary within a known range. With waste, that isn’t the case! This made environmental metrology more interesting than metrology for the food industry. And my work became even more complex when I started working on the topic of soil. I wondered whether it would be possible to easily measure the carbon content in soil. This took me to Australia, to a specialized laboratory at the University of Sydney. To my mind, all this different research is based on the same philosophy: if you want to improve something, you have to measure it!

So you no longer worked with NIRS after that time?

VBM: A little less, since I changed from sensors to assessment. But even that was a sort of continuation: when sensors were no longer enough, how could we make measurements? We had to develop assessment methods. It’s all very well to measure the biodegradability of a plastic, but is that enough to successfully determine if that biodegradable plastic has a low environmental impact? No, it isn’t – the entire system must be analyzed. I started working on life-cycle analysis (LCA) in Australia after realizing that LCA methods were not suited to agriculture: they did not account for water, or notions of using space. Based on this observation, we improved the LCA framework to develop the concept of a regional LCA, which didn’t exist at the time, allowing us to make an environmental assessment of a region and compare scenarios for how this region would evolve. What I found really interesting with this work was determining how to use data from information systems and sensors to build the most reliable and reproducible model possible. I wanted the assessments to be as accurate as possible. This is what led me to my current field of research – digital agriculture.


In 2013 you founded #DigitAg, an institute dedicated to this topic. What research is carried out there?

VBM: The “Agriculture – Innovation 2025” report submitted to the French government in 2015 expressed a need to structure French research on digital agriculture. We took advantage of the opportunity to create Convergence Labs by founding #DigitAg, the Digital Agriculture Convergence Lab. It’s one of ten institutes funded by the Investments in the Future program. All of these institutes were created in order to carry out interdisciplinary research on a major emerging issue. At #DigitAg, we draw on engineering sciences, digital technology, biology, agronomy, economics, social sciences, humanities, management etc. Our aim is to establish knowledge bases to ensure that digital agriculture develops in a harmonious way. The challenge is to develop technologies but also to anticipate how they will be used and how such uses will transform agriculture, so that we can help ensure ethical uses and prevent misuse. To this end, I’ve also set up a living lab, Occitanum (for Occitanie Digital Agroecology), set to start in mid-2020. The lab will bring together stakeholders to assess the use value of different technologies and understand innovation processes. It’s a different way of carrying out research and innovation, by incorporating the human dimension.