CoronaCheck: separating fact from fiction in the Covid-19 epidemic

Rumors about the origins of the Covid-19 epidemic and news of miracle cures are rampant, and some leaders have taken the liberty of putting forward questionable figures. To combat such misinformation, Paolo Papotti and his team at EURECOM have developed an algorithmic tool for the general public that checks the accuracy of figures about the epidemic. Beyond its potential for informing the public, this work illustrates the challenges and current limitations of automated fact-checking tools.

The world is in the midst of an unprecedented health crisis, which has unfortunately been accompanied by an onslaught of incorrect or misleading information. Described by the World Health Organization (WHO) as an ‘infodemic’, this ‘fake news’ – which is not a new problem – has been spreading over social media and through public figures, and it can distort the public’s overall perception of the epidemic. One notable example is the president of the United States using incorrect figures to downplay the impact of the virus and justify keeping the country’s economy running.

“As IT researchers in data processing and information quality, we can contribute by providing an algorithmic tool to help with fact-checking,” says Paolo Papotti, a researcher at EURECOM. Working with PhD student Mohammed Saeed, and with support from Master’s student Youssef Doubli, he developed a tool that can check this information, following research previously carried out with Professor Immanuel Trummer from Cornell University.

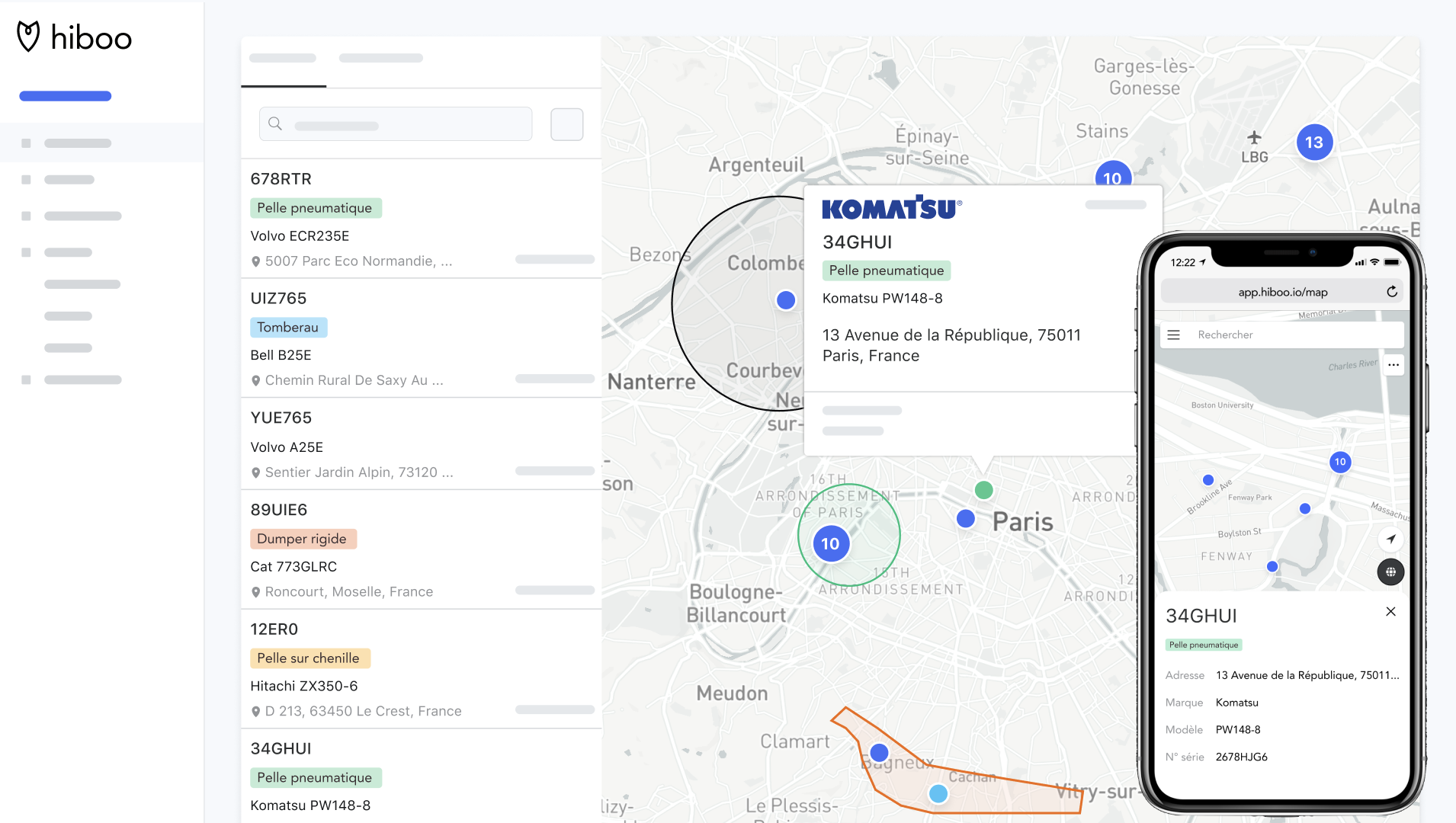

This tool, called CoronaCheck and now available in French, was originally intended for the energy industry, where data change constantly and must be painstakingly verified. It was adapted in early March to meet current needs.

This fact-checking work is a job in its own right for many journalists: they must use reliable sources to check whether the information heard in various places is correct. And if it turns out to be a rumor, journalists must find sources and explanations to set the record straight. “Our tool does not seek to replace journalists’ investigative work,” explains Paolo Papotti, “but a certain amount of this information can be checked by an algorithm. Our goal is therefore to help social media moderators and journalists manage the wealth of information that is constantly springing up online.”

With millions of messages exchanged every day on networks such as Twitter or Facebook, humans cannot accomplish this task alone. Before any information can be checked, potentially misleading claims must first be identified. An algorithm, however, can analyze large amounts of data simultaneously and target misinformation. This is the aim of the Google-funded research program to combat misinformation online, which includes the CoronaCheck project. The goal is to provide the general public with a tool to verify figures relating to the epidemic.

A statistical tool

CoronaCheck is a statistical tool that checks claims against quantitative data. The site works a bit like a search engine: the user enters a query in the form of a claim, for example “there are more coronavirus cases in Italy than in France,” and CoronaCheck says whether it is true or false. It is a tool that speaks the language of statistics: it can handle logical statements using terms such as “less than” or “constant,” but it will not understand queries such as “Donald Trump has coronavirus.”
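To make the idea concrete, here is a minimal Python sketch of how a comparative claim such as “there are more coronavirus cases in Italy than in France” could be checked against a table of figures. It is not CoronaCheck’s implementation: it assumes the claim has already been parsed into a structured form (the hypothetical `ComparisonClaim` type), the phrase-to-operator vocabulary is invented for illustration, and the case counts are placeholder values, not real data.

```python
import operator
from dataclasses import dataclass
from typing import Mapping

# Illustrative placeholder figures, NOT real case counts.
CASES = {"Italy": 1000, "France": 600, "Spain": 800}

# Hypothetical, deliberately tiny vocabulary mapping comparison phrases to operators.
COMPARATORS = {
    "more than": operator.gt,
    "less than": operator.lt,
    "as many as": operator.eq,
}


@dataclass
class ComparisonClaim:
    """Hypothetical parsed form of a claim such as 'more cases in A than in B'."""
    left: str
    comparator: str
    right: str


def check(claim: ComparisonClaim, table: Mapping[str, int]) -> bool:
    """Return True if the claim holds for the figures in `table`."""
    compare = COMPARATORS[claim.comparator]
    return compare(table[claim.left], table[claim.right])


print(check(ComparisonClaim("Italy", "more than", "France"), CASES))  # True
```

The hard part in practice is the parsing step this sketch skips: turning free text into the structured claim is where a trained model is needed.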

“We think that it’s important for users to be able to understand CoronaCheck’s response,” adds Paolo Papotti. To go back to the previous example, the software will not only say whether the statement is true or false but will also explain its answer: it specifies the number of cases in each country and the date to which these figures refer. “If the date is not specified, it will take the most recent results by default, meaning for the month of March,” says the researcher.
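A response that carries its own evidence, with the most recent date used when none is given, might look like the following sketch. The function name, the dictionary layout of the answer and the figures are all assumptions made for illustration (the counts are placeholders, not real data).

```python
from datetime import date

# Placeholder cumulative case counts, NOT real data: country -> {date: cases}.
SERIES = {
    "Italy": {date(2020, 3, 1): 100, date(2020, 3, 31): 900},
    "France": {date(2020, 3, 1): 120, date(2020, 3, 31): 500},
}


def explain_more_cases(a, b, series, on=None):
    """Check 'more cases in a than in b' and return the verdict with its evidence.

    If no date is given, use the most recent date available for both countries.
    """
    if on is None:
        on = max(set(series[a]) & set(series[b]))
    ca, cb = series[a][on], series[b][on]
    verdict = ca > cb
    return {
        "verdict": verdict,
        "date": on.isoformat(),
        a: ca,
        b: cb,
        "explanation": f"On {on.isoformat()}, {a} had {ca} cases and {b} had {cb}; "
                       f"the claim is {'true' if verdict else 'false'}.",
    }


print(explain_more_cases("Italy", "France", SERIES)["explanation"])
# On 2020-03-31, Italy had 900 cases and France had 500; the claim is true.
```

Returning the figures and the date alongside the verdict lets a reader see why the answer came out as it did, which is the point Papotti makes about understandable responses.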

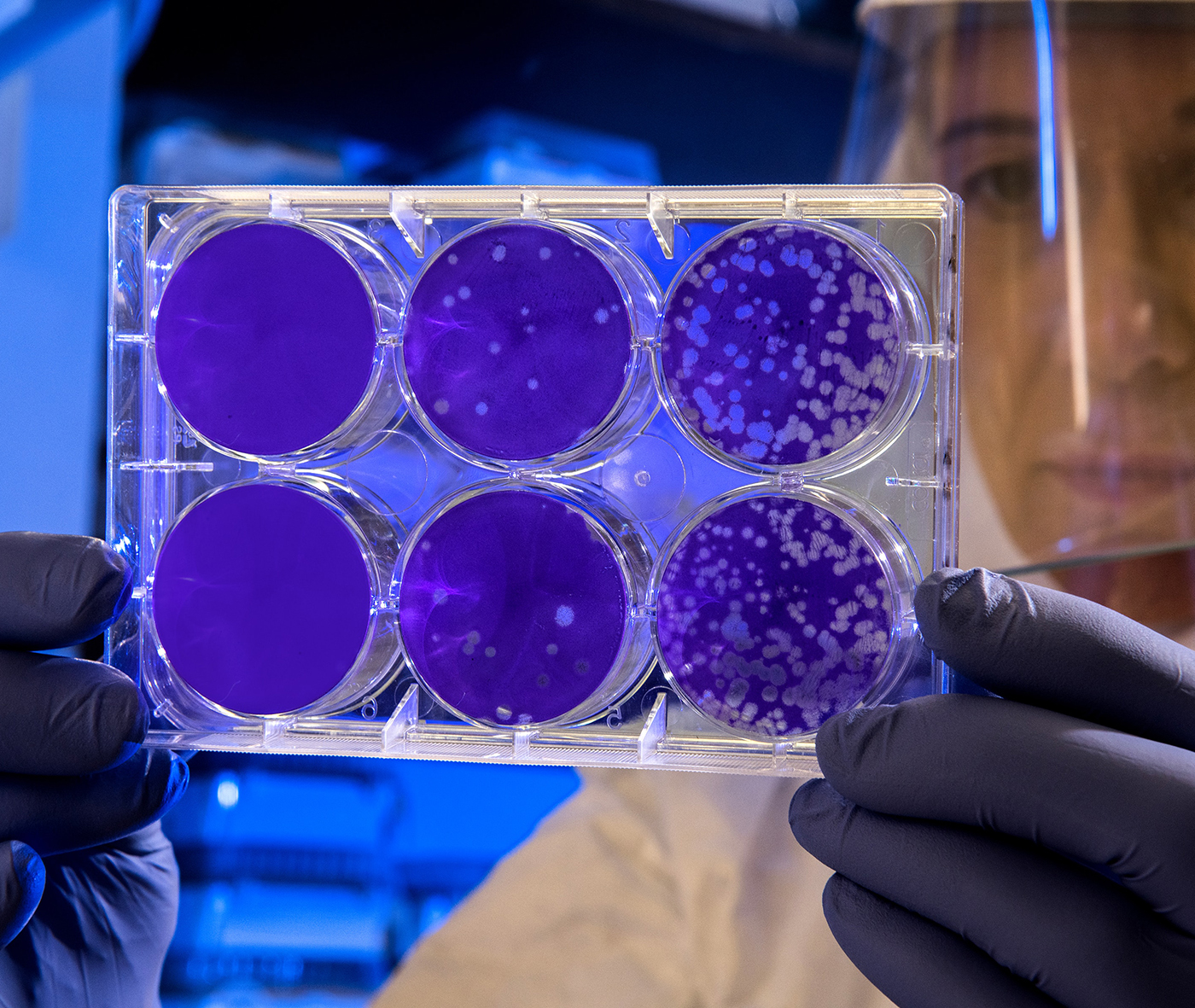

This is why the data must be updated regularly. “Every day, we enter the new data compiled by Johns Hopkins University,” he says. The university itself collects data from several official sources, such as the WHO and the European Centre for Disease Prevention and Control.
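As a rough illustration of the daily update step, the sketch below loads a file assuming the wide layout of the Johns Hopkins CSSE global time-series CSVs (one row per province or country, one column per date in `m/d/yy` form) and sums province rows into one series per country. The inline sample and its values are placeholders, not real data, and this is not a description of how CoronaCheck actually ingests its data.

```python
import csv
import io
from collections import defaultdict
from datetime import datetime

# Tiny inline sample in the assumed wide layout. Values are placeholders, NOT real data.
SAMPLE = """Province/State,Country/Region,Lat,Long,3/30/20,3/31/20
,Italy,0.0,0.0,10,12
Guadeloupe,France,0.0,0.0,1,2
,France,0.0,0.0,8,9
"""


def load_country_series(text):
    """Sum province-level rows into one cumulative series per country."""
    reader = csv.DictReader(io.StringIO(text))
    date_cols = reader.fieldnames[4:]  # every column after Lat/Long is a date
    totals = defaultdict(dict)
    for row in reader:
        country = row["Country/Region"]
        for col in date_cols:
            day = datetime.strptime(col, "%m/%d/%y").date()
            totals[country][day] = totals[country].get(day, 0) + int(row[col])
    return dict(totals)


series = load_country_series(SAMPLE)
print(series["France"])
```

Re-running the loader on each day’s refreshed file picks up the newly added date column without any change to the code.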

“We know that this tool isn’t perfect,” says Paolo Papotti. The system relies on machine learning, so the model must be trained. “We know that it is not exhaustive and that a user may enter a word that is unknown to the model.” User feedback is therefore essential in order to improve the system. Comments are analyzed to incorporate questions or wording of statements that have not been taken into account. Users must also follow CoronaCheck’s instructions and speak a language the system understands.
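The “unknown word” problem and the feedback loop can be pictured with a deliberately simplified sketch: a plain set lookup stands in for the trained model’s vocabulary, and queries containing unseen words are logged for the team to review. The vocabulary, the `triage` function and the log are hypothetical; a machine-learning model would handle unseen words in more subtle ways than this.

```python
import re

# Hypothetical vocabulary the model was trained on (a real one would be far larger).
KNOWN_WORDS = {"there", "are", "more", "less", "than", "cases", "deaths",
               "in", "italy", "france", "spain", "coronavirus"}

feedback_log = []  # hypothetical store of queries set aside for human review


def unknown_words(query):
    """Return the words in `query` that are missing from the vocabulary, in order."""
    tokens = re.findall(r"[a-z]+", query.lower())
    return [t for t in tokens if t not in KNOWN_WORDS]


def triage(query):
    """Answer 'OK' for understood queries; otherwise log the query and report the gap."""
    missing = unknown_words(query)
    if missing:
        feedback_log.append((query, missing))
        return f"Sorry, I don't understand: {', '.join(missing)}"
    return "OK"


print(triage("There are more infections in Italy than in France"))
# Sorry, I don't understand: infections
```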

Ambiguity of language

Language itself can be a significant barrier for an automated verification tool, because it is ambiguous. The term “death rate” is a perfect example. For the general public, it refers to the mortality rate, that is, the number of deaths relative to a population over a given period of time. However, “death rate” can also mean the case fatality rate, that is, the number of deaths relative to the total number of cases of the disease. The results will therefore differ greatly depending on which meaning is intended.
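Written as formulas, with $D$ the number of deaths over a given period, $P$ the population and $C$ the number of cases of the disease (notation introduced here for illustration), the two readings are:

$$
\text{mortality rate} = \frac{D}{P}, \qquad \text{case fatality rate} = \frac{D}{C}
$$

Since the number of cases can never exceed the population ($C \le P$), the case fatality rate is never smaller than the mortality rate, and it is usually far larger. With illustrative figures of $D = 100$, $C = 2{,}000$ and $P = 1{,}000{,}000$, the mortality rate is $0.01\%$ while the case fatality rate is $5\%$.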

Such differences in interpretation are always possible in human language, but they cannot be tolerated in verification work. “So the system has to be able to provide two responses, one for each interpretation of death rate,” explains Paolo Papotti. The same approach works whenever an imprecisely worded claim can be read in more than one way.
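Answering once per interpretation can be sketched as follows for a claim like “the death rate is below 1%”. The interpretation table, the function name and the figures are assumptions for illustration (placeholder values, not real data); the two formulas are the two readings of “death rate” described in the text.

```python
# Placeholder figures for one hypothetical country, NOT real data.
FIGURES = {"deaths": 100, "cases": 2_000, "population": 1_000_000}

# One entry per reading of "death rate".
INTERPRETATIONS = {
    "mortality rate (deaths / population)": lambda f: f["deaths"] / f["population"],
    "case fatality rate (deaths / cases)": lambda f: f["deaths"] / f["cases"],
}


def check_death_rate_below(threshold, figures):
    """Check 'the death rate is below `threshold`' under every interpretation."""
    answers = []
    for name, rate in INTERPRETATIONS.items():
        value = rate(figures)
        answers.append((name, value, value < threshold))
    return answers


for name, value, holds in check_death_rate_below(0.01, FIGURES):
    print(f"{name}: {value:.2%} -> claim {'true' if holds else 'false'}")
# mortality rate (deaths / population): 0.01% -> claim true
# case fatality rate (deaths / cases): 5.00% -> claim false
```

Reporting both answers side by side makes the ambiguity visible to the user instead of silently picking one meaning.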

Time is another source of ambiguity. If a user enters the query “there are more cases in Italy than in the United States,” the claim may be true for February but false for April. “Optimally, we would have to evolve towards a system that gives different responses, which are more complex than true or false,” says Paolo Papotti. “This is the direction we’re focusing on in order to solve this interpretation problem and go further than a statistical tool,” he adds.
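One way to go beyond a single true/false verdict is to evaluate the claim on every available date and report where it holds and where it does not. The sketch below is an illustration of that idea, not the team’s design; the function name is hypothetical and the figures are placeholders, not real data.

```python
from datetime import date

# Placeholder cumulative case counts, NOT real data.
SERIES = {
    "Italy": {date(2020, 2, 29): 50, date(2020, 3, 31): 300, date(2020, 4, 30): 400},
    "United States": {date(2020, 2, 29): 10, date(2020, 3, 31): 350, date(2020, 4, 30): 900},
}


def more_cases_over_time(a, b, series):
    """Evaluate 'more cases in a than in b' on every shared date and summarise."""
    days = sorted(set(series[a]) & set(series[b]))
    verdicts = [(d, series[a][d] > series[b][d]) for d in days]
    return {
        "true on": [d.isoformat() for d, ok in verdicts if ok],
        "false on": [d.isoformat() for d, ok in verdicts if not ok],
    }


print(more_cases_over_time("Italy", "United States", SERIES))
# {'true on': ['2020-02-29'], 'false on': ['2020-03-31', '2020-04-30']}
```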

The team is also working on another system that could respond to queries that cannot be settled with statistics, such as “Donald Trump has coronavirus.” This requires a different algorithm, and the goal would be to combine the two systems. “We will then have to figure out how to assign a query to one system or another, and combine it all in a single interface that is accessible and easy to use.”
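Assigning a query to one system or the other could, in its crudest form, be a keyword heuristic like the one below. This is only a stand-in: the cue list and the `route` function are hypothetical, and a real router would more likely be a trained classifier.

```python
import re

# Hypothetical cues suggesting a claim can be settled from the statistics tables.
STATISTICAL_CUES = re.compile(
    r"\b(more|less|fewer|than|constant|rate|cases|deaths|increase|decrease|\d+)\b",
    re.IGNORECASE,
)


def route(query: str) -> str:
    """Send a query to the statistical checker or to a (hypothetical) second system."""
    if STATISTICAL_CUES.search(query):
        return "statistical"
    return "other"


print(route("There are more cases in Italy than in France"))  # statistical
print(route("Donald Trump has coronavirus"))  # other
```

A keyword rule like this is easy to fool (a non-statistical claim that happens to contain “more” would be misrouted), which is one reason the routing question Papotti raises is not trivial.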

Tiphaine Claveau for I’MTech