RAMSES: Time keeper for embedded systems

I’MTech is dedicating a series of articles to success stories from research partnerships supported by the Télécom & Société Numérique Carnot Institute (TSN), to which Télécom ParisTech belongs.


Embedded computing systems are sometimes responsible for performing “critical” functions. In the transport industry, for example, they can prevent collisions between two vehicles. To help design these important systems, Étienne Borde, a researcher at Télécom ParisTech specializing in embedded systems, developed RAMSES. This platform gives developers the tools they need to streamline the design process for these systems. Its potential for applications in the industrial, transport and robotics sectors has been recognized by the Télécom & Société Numérique Carnot Institute, which has made it part of its technological platform.

What is the purpose of the RAMSES platform?

Étienne Borde: RAMSES is a platform that helps design critical real-time embedded systems. This technical term refers to embedded systems that have a significant time component: if a computer operation takes longer than planned, a critical system failure could occur. In terms of the software, time is managed by a real-time operating system. RAMSES automates the configuration of this system while ensuring the system’s time requirements are met.

What sectors could this type of system configuration support be used for?

EB: The transport sector is a prime candidate. We also have a case study for the railway sector that shows what the platform could contribute in this field. RAMSES is used to estimate the worst-case data transmission time for a train’s control system. The most critical messages transmitted ensure that the train does not collide with another train. For safety reasons, the calculations are carried out using three computing units at the back of the train and three computing units at the front of the train. What RAMSES offers is better control of latency and better management of the flow of transmission operations.

How does RAMSES help improve the configuration of critical real-time embedded systems?

EB: RAMSES is a compiler for the AADL language. This language is used to describe computer architectures. The basic principle of AADL is to define categories of software or hardware components that correspond to physical objects used in the everyday life of computer scientists or electronic engineers. An example of one of these categories is that of processors: AADL can describe the computer’s calculation unit by its parameters and frequency. RAMSES helps assemble these different categories to represent the system with different levels of abstraction. This explains how the platform got its name: Refinement of AADL Models for Synthesis of Embedded Systems.

How does a compiler like RAMSES benefit professionals?

EB: Professionals currently develop their systems manually using the programming language of their choice, or generate this code using a model. They can assess the data transmission time on the final product, but with poor traceability in relation to the initial model. If a command takes longer than expected, it is difficult for the developers to isolate the step causing the problem. RAMSES generates intermediate representations as it progresses, analyzing the time associated with each task to ensure no significant deviations occur. As soon as an accumulation of mechanisms presents a major divergence from the set time constraints, RAMSES alerts the professional. The platform can indicate which steps are causing the problem and help correct the AADL code.
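To make the idea of step-by-step timing traceability concrete, here is a minimal Python sketch. It is not RAMSES code and does not reproduce its analysis: the `Step` class, the `check_chain` function, the step names and all the numbers are hypothetical. It only illustrates the principle of comparing each step’s estimated worst-case time with the budget set in the model, so that an overrun can be traced back to a specific step.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    budget_ms: float  # time allotted to the step in the model (hypothetical value)
    wcet_ms: float    # worst-case time estimated for the step (hypothetical value)


def check_chain(steps, deadline_ms):
    """Return (total worst-case latency, deadline met?, names of over-budget steps)."""
    total = sum(s.wcet_ms for s in steps)
    offenders = [s.name for s in steps if s.wcet_ms > s.budget_ms]
    return total, total <= deadline_ms, offenders


# A three-step command chain with an end-to-end deadline of 10 ms (illustrative only).
chain = [
    Step("read_sensor", budget_ms=2.0, wcet_ms=1.5),
    Step("compute_command", budget_ms=5.0, wcet_ms=7.0),
    Step("send_message", budget_ms=3.0, wcet_ms=2.5),
]

total, deadline_met, offenders = check_chain(chain, deadline_ms=10.0)
print(f"worst-case latency: {total} ms, deadline met: {deadline_met}")
print(f"steps over budget: {offenders}")
```

In this example the chain misses its deadline, and the report points to the single step that exceeded its budget rather than leaving the developer with only an end-to-end measurement.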

Does this mean RAMSES is primarily a decision-support tool?

EB: Decision support is one part of what we do. Designing critical real-time embedded systems is a very complex task. Developers do not have all the information about the system’s behavior in advance. RAMSES does not eliminate all of the uncertainties, but it does reduce them. The tool makes it possible to reflect on the uncertainties to decide on possible solutions. The alternative to this type of tool is to make decisions without enough analysis. But RAMSES is not used for decision support only. The platform can also be used to improve systems’ resilience, for example.

How can optimizing the configuration impact the system’s resilience?

EB: Recent work by the community of researchers in systems security looks at mixed criticality. The goal is to use multi-core architectures to deploy critical and less critical functions on the same computing unit. If the time constraints for the critical functions are exceeded, the non-critical functions are degraded. The computing resources made available by this process are then used for critical functions, thereby ensuring their resilience.
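The mode switch described here can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the scheduling analysis used in RAMSES: the `Task` fields, the `select_mode` and `utilization` functions, the task names and the numbers are all hypothetical, and processor load is approximated by a plain utilization sum on a single computing unit.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    critical: bool
    period_ms: float
    budget_ms: float          # execution time assumed in normal mode
    overrun_budget_ms: float  # pessimistic execution time, used after an overrun


def select_mode(tasks, observed_ms):
    """Switch to degraded mode if any critical task ran past its normal budget."""
    for t in tasks:
        if t.critical and observed_ms.get(t.name, 0.0) > t.budget_ms:
            return "degraded"
    return "normal"


def utilization(tasks, mode):
    """Fraction of processor time needed by the tasks that run in the given mode."""
    total = 0.0
    for t in tasks:
        if mode == "degraded" and not t.critical:
            continue  # non-critical tasks are dropped to free resources
        cost = t.overrun_budget_ms if mode == "degraded" else t.budget_ms
        total += cost / t.period_ms
    return total


tasks = [
    Task("collision_avoidance", True, period_ms=8.0, budget_ms=2.0, overrun_budget_ms=6.0),
    Task("passenger_display", False, period_ms=16.0, budget_ms=8.0, overrun_budget_ms=8.0),
]

# The critical task was observed running for 3 ms, above its 2 ms normal budget.
mode = select_mode(tasks, {"collision_avoidance": 3.0})
print(mode, utilization(tasks, mode))
```

With these numbers, keeping the non-critical task while granting the critical task its pessimistic budget would require more than 100% of the processor, which is why the non-critical function is degraded.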

Is this a subject you are working on?

EB: Our team has conducted work to ensure that critical tasks will always have enough resources, come what may. At the same time, we are working on the minimum availability of resources for less critical functions. This ensures that the non-critical functions are not degraded too often. For example, for a train, this ensures that the train does not stop unexpectedly due to a reduction in computational resources. In this type of context, RAMSES assesses the availability of the functions based on their degree of criticality, while ensuring enough resources are available for the most critical functions.

Which industrial sectors could benefit from the solutions RAMSES offers?

EB: The main area of application is the transport sector, which frequently uses critical real-time embedded systems. We have partnerships with Thales, Dassault and SAFRAN in avionics, Alstom for the railway sector and Renault for the automotive sector. The field of robotics could be another significant area of application. The systems in this sector have a critical aspect, especially in the context of large machines that could present a hazard to those nearby, should a failure occur. This sector could offer good use cases.


A guarantee of excellence in partnership-based research since 2006

The Télécom & Société Numérique Carnot Institute (TSN) has been partnering with companies since 2006 to research developments in digital innovations. With over 1,700 researchers and 50 technology platforms, it offers cutting-edge research aimed at meeting the complex technological challenges posed by the digital, energy and industrial transitions currently underway in the French manufacturing industry. It focuses on the following topics: industry of the future, connected objects and networks, sustainable cities, transport, health and safety.

The institute encompasses Télécom ParisTech, IMT Atlantique, Télécom SudParis, Institut Mines-Télécom Business School, Eurecom, Télécom Physique Strasbourg, Télécom Saint-Étienne, École Polytechnique (Lix and CMAP laboratories), Strate École de Design and Femto Engineering.


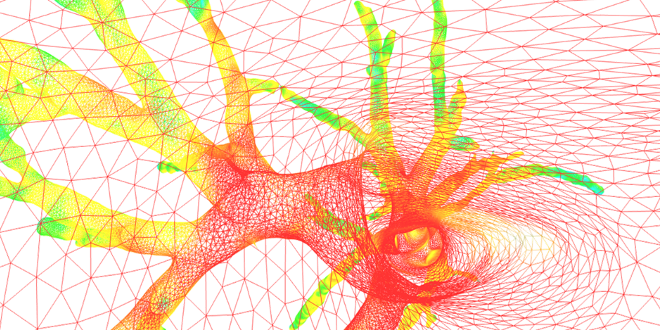

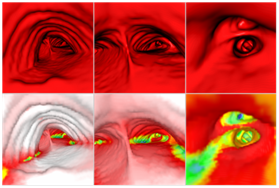
