The automatic semantics of images

Recognizing faces, objects, patterns, music, architecture, or even camera movements: thanks to progress in artificial intelligence, every plan or sequence in a video can now be characterized. In the IA TV joint laboratory created last October between France Télévisions and Télécom SudParis, researchers are currently developing an algorithm capable of analyzing the range of fiction programs offered by the national broadcaster.

As the number of online video-on-demand platforms has increased, recommendation algorithms have been developed to go with them, and are now capable of identifying (amongst other things) viewers’ preferences in terms of genre, actors or themes, boosting the chances of picking the right program. Artificial intelligence now goes one step further by identifying the plot’s location, the type of shots and actions, or the sequence of scenes.

The teams of France Télévisions and Télécom SudParis have been working towards this goal since October 2019, when the IA TV joint laboratory was created. Their work focuses on automating the analysis of the video contents of fiction programs. “Today, our recommendation settings are very basic. If a viewer liked a type of content, program, film or documentary, we do not know much about the reasons why they liked it, nor about the characteristics of the actual content. There are so many different dimensions which might have appealed to them – the period, cast or plot,” points out Matthieu Parmentier, Head of the Data & AI Department at France Télévisions.

AI applied to fiction contents

The aim of the partnership is to explore these dimensions. Using deep learning, a neural network technique, researchers are applying algorithms to a massive quantity of videos. The different successive layers of neurons can extract and analyze increasingly complex features of visual scenes: the first layer extracts the image’s pixels, while the last attaches labels to them.

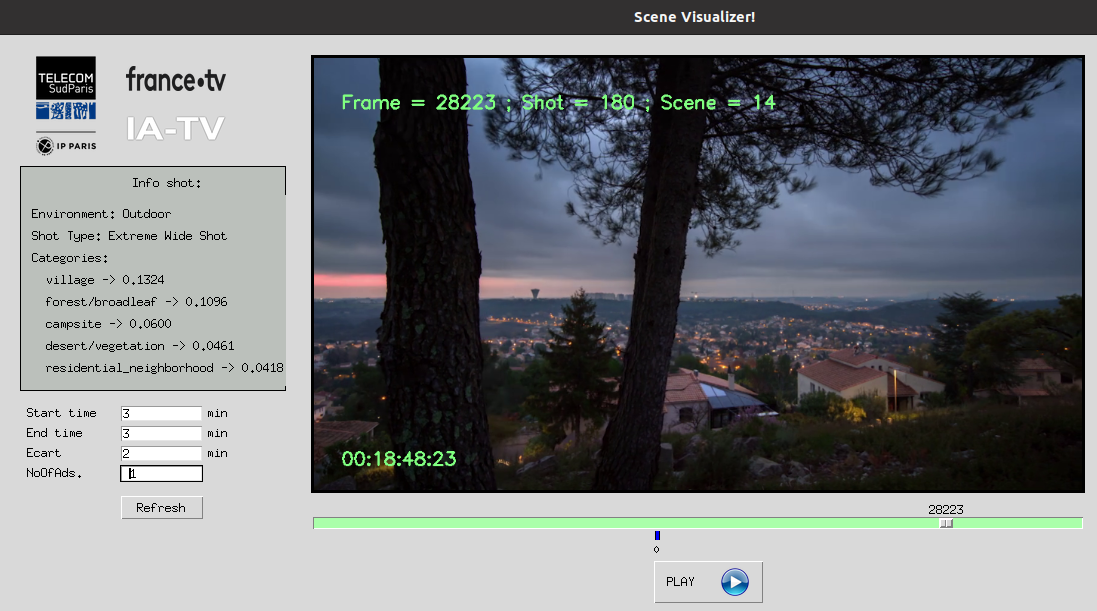

“Thanks to this technology, we are now able to sort contents into categories, which means that we can classify each sequence, each scene in order to identify, for example, whether it was shot outside or inside, recognize the characters/actors involved, identify objects or locations of interest and the relationships between them, or even extract emotional or aesthetic features. Our goal is to make the machine capable of progressing automatically towards interpreting scenes in a way that is semantically close to that of humans”, says Titus Zaharia, a researcher at Télécom SudParis and specialist in AI applied to multimedia content.

Researchers have already obtained convincing results. Is this scene set in a car? In a park? Inside a bus? The tool can suggest the most relevant categories by order of probability. The algorithm can also determine the types of shots in the sequences analyzed: wide, general or close-up shots. “This did not exist until now on the market,” says Matthieu Parmentier enthusiastically. “And as well as detecting changes from one scene to another, the algorithm can also identify changes of shot within the same scene.”

According to France Télévisions, there are many possible applications. Firstly, the automatic extraction of the key frames, meaning the most representative image to illustrate the content of a fiction, for each sequence and according to aesthetic criteria. Then there is the identification of the “ideal” moments in a program to insert ad breaks. “Currently, we are working on fixed video shots, but one of our next aims is to be able to characterize moving shots such as zooms, traveling or panoramic shots. This could be very interesting for us, as it could help to edit or reuse contents”, adds Matthieu Parmentier.

Multimodal AI solutions

In order to adapt to the new digital habits of viewers, the teams of France Télévisions and Télécom SudParis have been working together for over five years. They have contributed to the creation of artificial intelligence solutions and tools applied to digital images, but also to other forms of content, texts and sounds. In 2014, the two entities launched a collaborative project, Média4Dplayer, a prototype of a media player designed for all four types of screens (TV, PC, tablet and smartphone). This would be accessible to all, and especially to elderly people or people with disabilities. A few months later, they were looking into the automatic generation of subtitles. The are several advantages to this: equal access to content and the possibility to view a video without sound.

“In the case of television news, for example, subtitles are generated live by professionals typing, but as we have all seen, this can sometimes lead to errors or to delays between what is heard and what appears on screen,” explains Titus Zaharia. The solution developed by the two teams allows automatic synchronization for the Replay content offered by France TV. The teams were able to file a joint patent after two and a half years of development.

“In time, we are hoping to be able to offer perfectly synchronized subtitles just a few seconds after the broadcast of any type of live television program,” continues Matthieu Parmentier.

“France Télévisions still has issues to be addressed by scientific research and especially artificial intelligence. What we are interested in is developing tools which can be used and put on the market rapidly, but also tools that will be sufficiently general in their methodology to find other fields of application in the future,” concludes Titus Zaharia.