Open RAN: opening up mobile networks

To standardize the equipment used in base stations, EURECOM is working on Open RAN. The project aims to open the equipment manufacturing market to new companies and to encourage the design of innovative hardware for telecommunications networks.

Base stations, often called relay antennas, are systems that allow telephones and computers to connect to the network. They are owned by telecommunications operators, and their equipment is supplied by a small number of specialized companies. Components made by one manufacturer are incompatible with those designed by another, which prevents operators from building antennas with the elements of their choice. The roll-out of networks such as 5G depends on this proprietary technology.

To allow new companies to introduce innovation to networks without being caught up in the games between the various parties, EURECOM is working on the Open RAN project (Open Radio Access Network). It aims to standardize the operation of base station components so that they are compatible whatever their manufacturer, using a new network architecture that gets around each manufacturer’s specific component technology. To do so, EURECOM is using the OpenAirInterface platform, which allows industrial and academic actors to develop and test new software solutions and architectures for 4G and 5G networks. This work is carried out in an open-source framework, which gives all actors common ground for collaborating on interoperability, regardless of where the components come from.


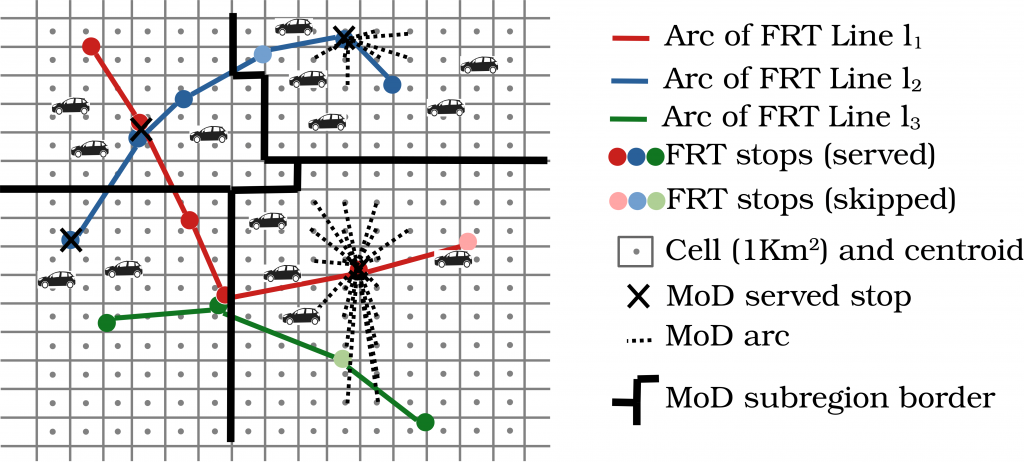

“The Open RAN can be broken down into three key blocks: the radio antenna, distributed unit and centralized unit”, describes Florian Kaltenberger, computer science researcher at EURECOM. The role of the antenna is to send signals to telephones and receive signals from them, while the other two elements give the radio signal access to the network, so that users can watch videos or send messages, for example. Unlike radio units, which require specific equipment, “distributed units and centralized units can function with conventional IT material, like servers and PCs,” explains the researcher. There is no longer a need to rely on specially developed, proprietary equipment for these two blocks. Servers and PCs already know how to interact with one another, independently of their components.
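As a rough illustration of this three-block split, the short Python sketch below lists the blocks and picks out those that can run on ordinary servers and PCs. The class and field names are hypothetical and chosen only for this example; they do not come from OpenAirInterface or from any Open RAN specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    """One of the three Open RAN blocks described above (illustrative only)."""
    name: str
    role: str
    needs_radio_hardware: bool  # assumption: a simple yes/no flag for this sketch


# The three blocks as the article describes them; the wording of the roles is a paraphrase.
OPEN_RAN_BLOCKS = (
    Block("radio unit", "sends and receives radio signals to and from phones", True),
    Block("distributed unit", "helps give the radio signal access to the network", False),
    Block("centralized unit", "helps give the radio signal access to the network", False),
)


def commodity_blocks(blocks):
    """Return the names of the blocks that can run on conventional IT hardware."""
    return [b.name for b in blocks if not b.needs_radio_hardware]


print(commodity_blocks(OPEN_RAN_BLOCKS))
```

Only the radio unit is flagged as needing dedicated equipment, which mirrors the researcher's point that the two other blocks can run on servers and PCs.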

RIC: the key to adaptability

This standardization would allow users of one network to use antennas from another, in the event that their operator’s antennas are too far away. To make the Open RAN function, researchers have developed the RAN Intelligent Controller (RIC), software that is, in a way, the heart of this architecture. The RIC relies on artificial intelligence, which provides information about the network’s status and guides the activity of base stations so that they adapt to various scenarios.

“For example, if we want to set up a network in a university, we would not approach it in the same way as if we wanted to set one up in a factory, as the issues to be resolved are not the same,” explains Kaltenberger. “In a factory, the interface makes it possible to connect machines to the network in order to make them work together and receive information,” he adds. The RIC is also capable of locating users and adjusting the antenna configurations, which optimizes the network’s operations by allowing for more equitable access between users. For industry, the Open RAN represents an interesting alternative to the telecommunications networks of major operators, due to its low cost and its ability to manage energy consumption more carefully by evaluating the transmission power that users actually need. The system can therefore provide the power users need, and no more.
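The idea of providing “the power users need, and no more” can be pictured with a toy rule: transmit at the level required by the most demanding user, capped at what the hardware allows. The Python sketch below is only that, a simplified illustration with a hypothetical function name and made-up power values; it is not the algorithm the RIC actually uses.

```python
from typing import Optional, Sequence


def choose_transmit_power(required_dbm: Sequence[float], max_dbm: float) -> Optional[float]:
    """Pick a transmit power (in dBm) that covers every user without overshooting.

    required_dbm: power each connected user needs (made-up inputs for this sketch).
    max_dbm: the highest power the antenna can deliver (also an assumption).
    Returns None when no user is connected, meaning nothing needs to be transmitted.
    """
    if not required_dbm:
        return None
    # Serve the most demanding user, but never exceed the hardware limit.
    return min(max(required_dbm), max_dbm)


print(choose_transmit_power([10.0, 17.5, 12.0], max_dbm=23.0))  # 17.5
```

With three users needing 10, 17.5 and 12 dBm, the rule settles on 17.5 dBm instead of the 23 dBm maximum, which is where the energy saving would come from.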

Simple tools in service of free software

According to Kaltenberger, “the Open RAN architecture would allow for complete control of networks, which could contribute to greater sovereignty.” For the researcher, the fact that this system is controlled by an open-source program ensures a certain level of transparency. The companies involved in developing the software are not the only ones to have access to it. Users can also improve and check the code. Furthermore, if the companies in charge of the Open RAN were to shut down, the system would remain functional, as it was created to exist independently of industrial actors.

“At present, multiple research projects around the world have shown that the Open RAN functions, but it is not yet ready to be deployed,” explains Kaltenberger. One of the reasons is the reluctance of equipment manufacturers to standardize their hardware, as this would open the market to new competitors and thereby put an end to their commercial domination. Kaltenberger believes that it will be necessary “to wait for perhaps five more years before standardized systems come on the market”.

Rémy Fauvel