France’s elderly care system on the brink of crisis

In his book Les Fossoyeurs (The Gravediggers), independent journalist Victor Castanet calls into question the management of the private elderly care facilities run by Orpea, the world leader in the sector, and reopens the debate over the economic model, for-profit or not, of these structures. Ilona Delouette and Laura Nirello, health economics researchers at IMT Nord Europe, have investigated the consequences of public (de)regulation in the elderly care sector. Here, we decode a system that is currently on the brink of crisis.

The Orpea scandal has been at the center of public debate for several weeks. Rationing of medication and food, a system of kickbacks, cutting corners on care tasks and harmful working conditions: these are the practices Victor Castanet accuses the Orpea group of in his book, Les Fossoyeurs. Though these abuses in private facilities are now casting suspicion on the entire sector, professionals, families, NGOs, journalists and researchers have been denouncing such dysfunction for several years.

Ilona Delouette and Laura Nirello, health economics researchers at IMT Nord Europe, have been studying public regulation in the elderly care sector since 2015. In the course of their research, the two have met policy makers, directors and employees of these structures. They reached the same conclusion for every kind of establishment: “in this sector, funding constraints are now omnipresent, and working conditions and the services provided have been continuously deteriorating,” emphasizes Laura Nirello. For the researchers, the new revelations about the Orpea group above all expose an underlying trend: growing competition between establishments, combined with cost-cutting imperatives, has pulled them further and further from their original missions.

From providing care for dependents…

In 1997, to deal with the growing number of dependent elderly people, the category of nursing homes known as ‘Ehpad’ was created. “Since the 1960s, there has been debate about funding care for elderly people with declining autonomy from the public budget. In 1997, the decision was made to remove loss of autonomy from the social security system; it became the responsibility of the departments,” explained Delouette. From then on, public establishments and those in the social and solidarity-based economy (SSE) entered into competition with private, for-profit establishments. Twenty-five years later, of the 7,400 nursing homes in France, which house just under 600,000 residents, nearly 50% are public, around 30% are private not-for-profit (SSE) and around 25% are private for-profit.

The (complex) funding of these structures, regulated by regional health agencies (ARS) and departmental councils, is split into three sections: the ‘care’ section (nursing staff, medical equipment, etc.), covered by the French public health insurance body Assurance Maladie; the ‘dependence’ section (assistance with daily living, carers, etc.), funded by the departments via the personal autonomy benefit (APA); and the final section, accommodation fees, which covers lodging, activities and catering and is paid by residents and their families.

“Public funding is identical for all structures, whether public or private, for-profit or not-for-profit. It’s often the cost of accommodation, which is less regulated, that receives media coverage, as it can run to several thousand euros,” emphasizes Nirello. “And it is mainly on this point that we see major disparities, which the private for-profit sector justifies by higher real estate costs in urban areas. But it’s above all because half of these places are owned by companies listed on the stock market, with the profitability demands that this entails,” she continues. Meanwhile, even as establishments face rising dependency and care needs among their residents, funding remains frozen.

…to the financialization of the elderly

A structure’s resources are determined by its residents’ average level of dependency, converted into working time. This is assessed using the AGGIR grid (“Autonomie Gérontologie Groupes Iso-Ressources”, or Autonomy Gerontology Iso-Resource Groups): GIR 1 and 2 correspond to total or severe dependence, and GIR 6 to people who are completely independent. Nearly half of nursing home residents belong to GIR 1 and 2, and more than a third to GIR 3 and 4. “While for-profit homes position themselves on very high dependency, public and SSE establishments seek a more balanced mix. They are often older and have difficulty investing in new facilities adapted to highly dependent residents,” indicates Nirello. Paradoxically, although for-profit homes take in the most dependent residents, they have the lowest staffing ratio: the ratio of staff to residents is 67% in public homes, 53% in private not-for-profit homes and 49% in private for-profit homes.

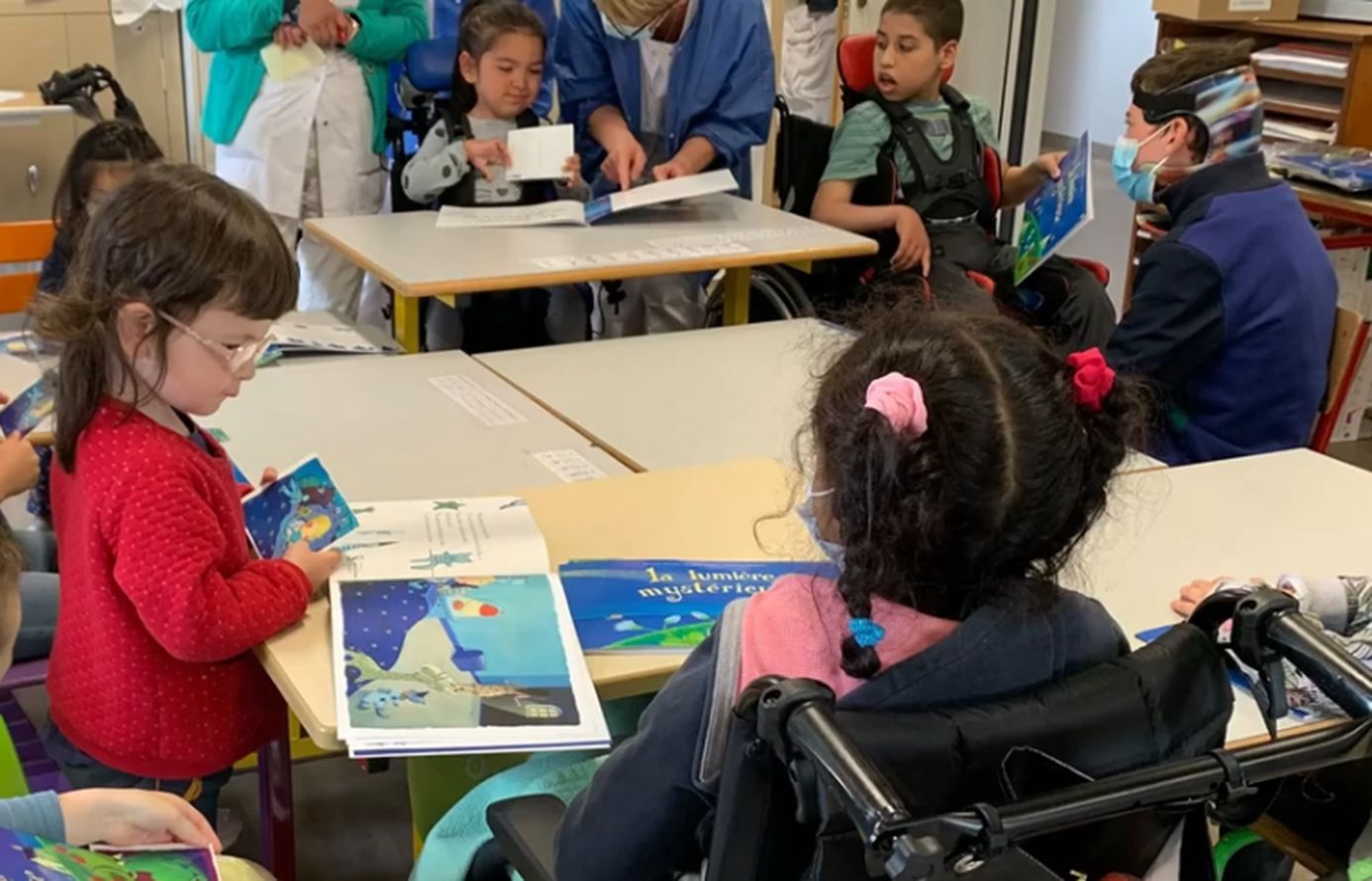

With public purse strings tightening, this goes hand in hand with extreme corner-cutting on care, with every task accounted for and charged. “Elderly care nurses need time to take care of residents: autonomy is fundamentally connected to social interaction,” insists Delouette. The 2009 Hospital, Patients, Health, Territories (HPST) law intensified competition between the various structures: since then, authorizations to create or extend nursing homes have been granted through calls for projects issued by the ARSs. For the researcher, “this system once again puts groups of establishments in competition for new locations, as funding is awarded to the best bid in terms of price and service quality, whatever its status. We know who wins: 20% go to public establishments and 40% each to private for-profit and private not-for-profit ones. What we don’t know is who responds to these calls for projects. With tightened budgets, has the public sector stopped responding, or is this a choice made by regulators on the ground?”

What is the future for nursing homes?

“Funding, cutting corners, a managerial view of caring for dependents: the entire system needs to be redesigned. We don’t have solutions, we are making observations,” emphasizes Nirello. But despite promises, reform has been delayed for too long. The Elderly and Autonomy law, the most recent effort in this area, was announced by the current government and then shelved in late 2019, despite two official reports highlighting the serious crisis in elderly care (the fact-finding mission on nursing homes in March 2018 and the Libault report in March 2019).

Insee estimates that by 2030 there will be 108,000 more dependent elderly people, and 4 million in total by 2050. How can we prepare for this demographic shift, which is already underway? Just covering the rising cost of caring for elderly people with loss of autonomy would take €9 billion every year until 2030. “We can always increase funding; the question is how we fund establishments. If we keep trying to cut corners on care and tasks, this goes against the social mission of these structures. Should vulnerable people be sources of profit? Is society prepared to invest more in taking care of dependent people?” asks Delouette. “This is society’s choice.” The two researchers are currently working on how the pandemic was managed in nursing homes. For them, there is still a positive side to all this: the state of elderly care has never been such a hot topic.

Anne-Sophie Boutaud

Also read on I’MTech: