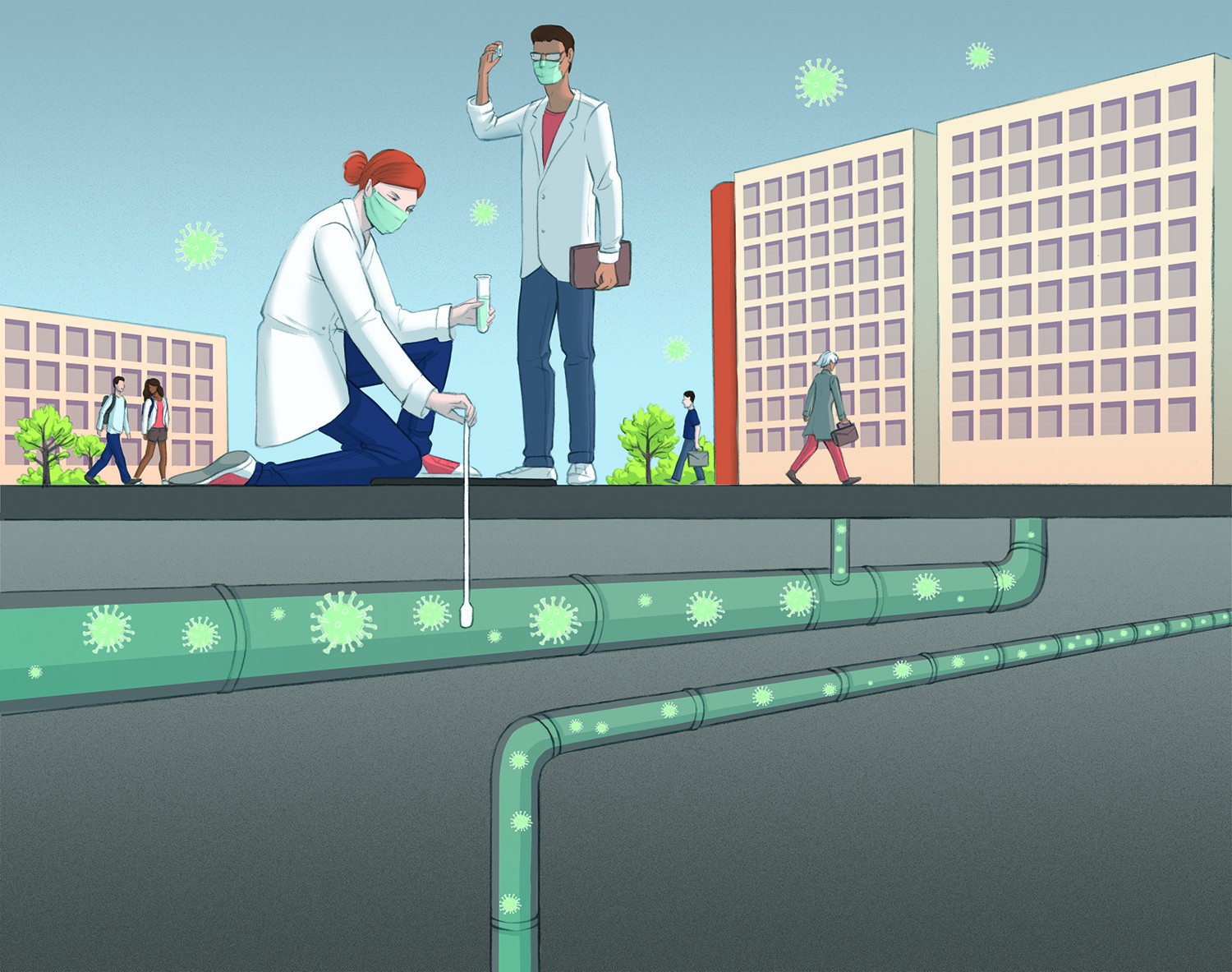

Covid-19: what could wastewater detection mean for the pandemic?

Detecting infections by SARS-CoV-2, the virus responsible for Covid-19, allows us to monitor the evolution of the pandemic. Most methods are based on individual patient screening, which is costly and time-consuming. Other approaches, based on detecting SARS-CoV-2 in urban wastewater, have been developed to monitor infection trends. Miguel Lopez-Ferber, a researcher at IMT Mines Alès, conducted a study to detect the virus in wastewater on the school’s campus. This precise, small-scale approach makes it possible to collect information on the probable causes of infection.

How do you detect the presence of SARS-CoV-2 in wastewater?

Miguel Lopez-Ferber: We use the technique developed by Medema in 2020. After recovering the liquid part of the wastewater samples, we use a centrifugation technique that allows us to isolate a phase containing the virus-sized particles. From this phase, we extract the viral genomes present in order to perform PCR tests. PCR (polymerase chain reaction) is a technique used to amplify a genetic signal. If the PCR amplifies viral genome fragments specific to SARS-CoV-2, then the virus is present in the wastewater sample.

Does this technique tell us the concentration of the virus?

MLF: Yes. Thanks to our partnership with the PHYSE team of the HydroSciences Montpellier laboratory and the IAGE startup, we use the digital PCR technique, which is a higher-resolution version of quantitative PCR. This tells us how many copies of the viral genome are present in the samples. With weekly sampling, we can follow the trend in virus concentrations in the wastewater.
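As an illustration of how digital PCR can turn a reaction into a copy count, here is a minimal sketch of the standard Poisson correction generally used with this technique: the sample is split into many small partitions, each partition is read as positive or negative, and the mean number of copies per partition is estimated as -ln(1 - p), where p is the fraction of positive partitions. The function names and the partition volume below are hypothetical and chosen for illustration only; they are not taken from the study described in this interview.

```python
import math


def copies_per_partition(positive: int, total: int) -> float:
    """Estimate the mean number of target copies per partition.

    Uses the Poisson correction: if a fraction p of partitions is
    positive, the mean copy number per partition is -ln(1 - p).
    """
    if total <= 0 or not 0 <= positive < total:
        # If every partition is positive, the estimate is unbounded.
        raise ValueError("need total > 0 and 0 <= positive < total")
    return -math.log(1.0 - positive / total)


def copies_per_microlitre(positive: int, total: int,
                          partition_volume_ul: float) -> float:
    """Convert the per-partition estimate into a concentration.

    partition_volume_ul is an assumed, instrument-dependent value
    (hypothetical here), expressed in microlitres.
    """
    if partition_volume_ul <= 0:
        raise ValueError("partition volume must be positive")
    return copies_per_partition(positive, total) / partition_volume_ul


if __name__ == "__main__":
    # Hypothetical run: 1,200 positive partitions out of 20,000,
    # with an assumed partition volume of 0.00085 microlitres.
    lam = copies_per_partition(1200, 20000)
    conc = copies_per_microlitre(1200, 20000, 0.00085)
    print(f"copies per partition: {lam:.4f}")
    print(f"copies per microlitre of reaction: {conc:.1f}")
```

Comparing the concentration obtained this way from one weekly sample to the next is what gives a trend; converting it back to copies per litre of wastewater would additionally require the concentration and extraction factors of the laboratory protocol, which are not given here.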

What value is there in quantifying the virus in wastewater?

MLF: This method allows for early detection of viral infections: SARS-CoV-2 is present in feces the day after infection. It is therefore possible to detect infection well before the first potential symptoms appear in individuals. This makes it possible to determine quickly whether the virus is actively circulating or not and whether there is an increase, stagnation or decrease in infections. However, at the scale at which these studies are conducted, it is impossible to know who is infected, or how many people are infected, because the viral load is variable among individuals.

How can your study on the IMT Mines Alès campus contribute to this type of approach?

MLF: To date, studies of this type have been conducted at a city level. We have reduced the cohorts to the scale of the school campus, as well as to different buildings on campus. This has allowed us to trace the sampling information from the entire school down to specific points within it. Since mid-August, we have been able to observe the effects of the different events that influence the circulation of the virus, whether they increase it or reduce it.

What kind of events are we talking about?

MLF: For example, in October, we quickly saw the effect of a party in a campus building: only 72 hours later, we observed a spike in virus circulation in the wastewater of that building, indicating new infections. Conversely, when restrictive measures were put in place, such as a quarantine or the second lockdown, we could see a decrease in virus circulation in the following days. This is faster than waiting to see the impact of a lockdown on infection rates 2 to 3 weeks after its implementation. This not only shows the effectiveness of the measures, but also allows us to know where the infections come from and to link them to probable causes.

What could this type of approach contribute to the management of the crisis?

MLF: This approach is less time-consuming and much less expensive than testing every person to track the epidemic. On the scale of schools or similar organizations, it would allow rapid action to be taken, for example quarantining certain areas before infection rates become too high. More generally, it would help limit the spread and anticipate future situations, such as hospitalization peaks, up to three weeks before they occur.

By Antonin Counillon